Fabian Fuchs

@FabianFuchsML

Research Scientist at DeepMind. Interested in invariant and equivariant neural nets and applications to the natural sciences. Views are my own.

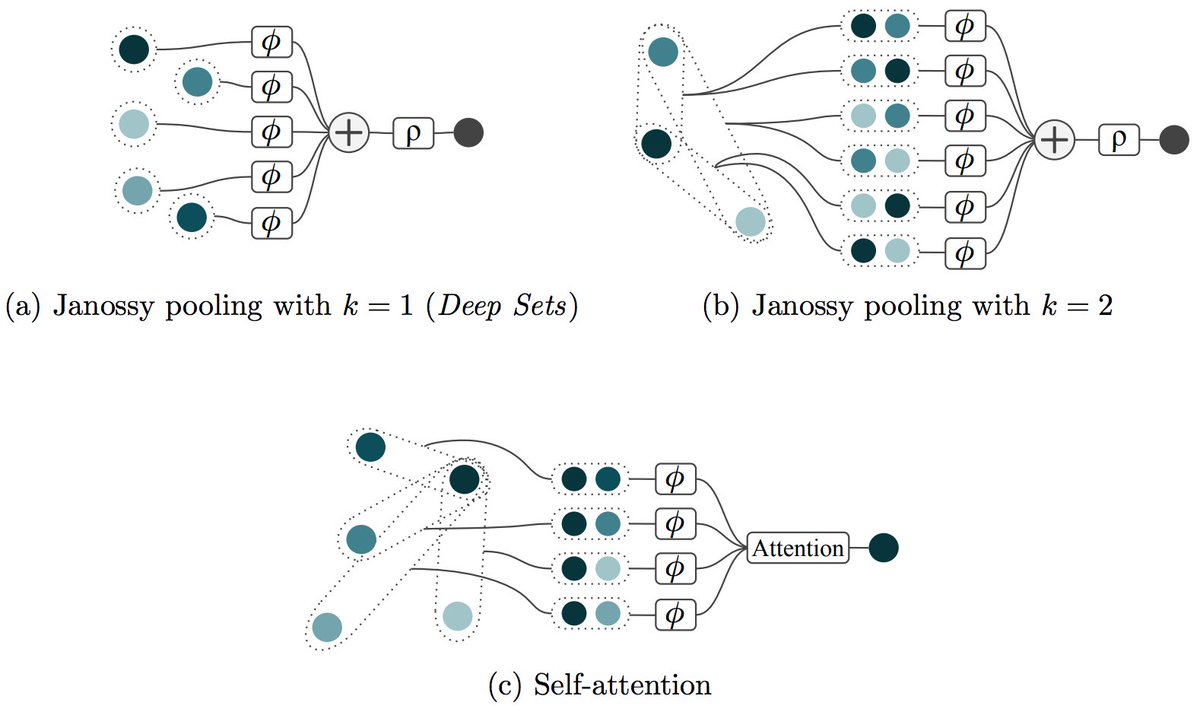

A year ago I asked: Is there more than Self-Attention and Deep Sets? - and got very insightful answers. 🙏 Now, Ed, Martin and I wrote up our own take on the various neural network architectures for sets. Have a look and tell us what you think! :) ➡️fabianfuchsml.github.io/learningonsets/ ☕️

Both Max-Pooling (e.g. DeepSets) and Self-Attention are permutation invariant/equivariant neural network architectures for set-based problems. I am aware of a couple of variations for both of these. Are there additional, fundamentally different architectures for sets? 🤔

Our Apple ML Research team in Barcelona is looking for a PhD intern! 🎓 Curiosity-driven research 🧠 with the goal to publish 📝 Topics: Confidence/uncertainty quantification and reliability of LLMs 🤖 Apply here: jobs.apple.com/en-gb/details/…

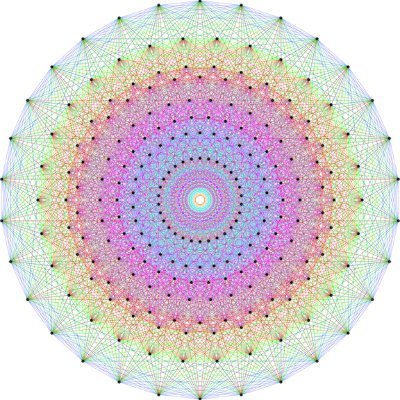

Graphs, Sets, Universality: We put more work into this and are presenting it via the ICLR blogpost track (thanks to organisers and reviewers!). Have a read and let us know what you think: iclr-blogposts.github.io/2023/blog/2023… It reads better in light mode 💡; dark mode 🌙 messes with the LaTeX a bit.

📢 New blog post! Realising an intricate connection between PNA (@GabriCorso @lukecavabarrett @dom_beaini @pl219_Cambridge) & the seminal work on set representations (Wagstaff @FabianFuchsML @martinengelcke @IngmarPosner @maosbot), Fabian and I join forces to attempt to explain!

Text-to-image diffusion models seem to have a good idea of geometry. Can we extract that geometry? Or maybe we can nudge these models to create large 3D consistent environments? Here's a blog summarizing some ideas in this space :) akosiorek.github.io/geometry_in_im…

I have recently had a range of very insightful conversations with @PetarV_93 about graph neural networks, networks on sets, universality and how ideas have spread in the two communities. This is our write-up, feedback welcome as always! :) ➡️fabianfuchsml.github.io/universalgraphs ☕️

Anyone know of a department looking to hire faculty in the protein/genome+evolution+ML space? Also RNA biology (asking for a friend) 🙂🥼🧪

New blog post! Find out:

- what reconstructing masked images and our brains have in common,
- why reconstructing masked images is a good idea for learning representations,
- what makes a good mask and how to learn one

akosiorek.github.io/ml/2022/07/04/…

Graph neural networks often have to globally aggregate over all nodes. How we do this can have a significant impact on performance 🎯. After we recently finished a project on this, I wrote a blog post on this topic. Let me know what you think! :) ➡️fabianfuchsml.github.io/equilibriumagg… ☕️

Molecule Generation in 3D with Equivariant Diffusion (arxiv.org/abs/2203.17003). Very happy to share this project (the last of my PhD woohoo 🥳) and a super nice collab with @vgsatorras @ClementVignac (equal contrib shared among three of us) and of course @wellingmax

Amazing video! Fantastic book, too, I am glad it receives all this attention. Many of those ideas & concepts are very fundamental and so helpful to understand, regardless of which specific sub-field of machine learning one is in.

Epic special edition MLST on geometric deep learning! Been in the works since May! with @mmbronstein @PetarV_93 @TacoCohen @joanbruna @ykilcher @ecsquendor youtu.be/bIZB1hIJ4u8

Metacognition in AI? We'd love for you to join us at #NeurIPS2021 and tell us about your work. Closing soon... #ai #artificialintelligence #ml #machinelearning #robotics #cogsci sites.google.com/view/metacogne…

What startups are out there these days that need or make good use of the probabilistic ML toolkit? (thinking mostly: generative modeling, prob. inference, BO/active learning) Slowly starting to explore the job market and need your help! 🙏 RT

Just to clarify. RoseTTAFold uses SE3 transformers (the core part of the structure module) and Nvidia just released a much faster version of this core part. The current code does not yet integrate these updates.

RoseTTAFold about to become 21X faster? "NVIDIA just released an open-source optimized implementation that uses 9x less memory and is up to 21x faster than the baseline official implementation." developer.nvidia.com/blog/accelerat…

Thank you for this great write-up about AF2! journals.iucr.org/d/issues/2021/… "The SE(3)-Transformer [...] is currently too computationally expensive for protein-scale tasks" ➡️ To be honest, I initially thought so as well; but @minkbaek et al made it work! 😍 science.sciencemag.org/content/sci/ea…

Particularly the proof in chapter 4 (entirely @EdWagstaff's work) blows my mind 🤯. He worked on this proof for an entire year and, imho, it was totally worth it. I love his talent for making abstract theory accessible to the reader. Blog post will follow 🙂 arxiv.org/abs/2107.01959 ☕️
