GrumpyGradient

@GrumpyGradient

Just your average grumpy gradient, on a quest for local optima. PhD student in machine learning, statistics, and genetics.

Super interesting work and great thread!

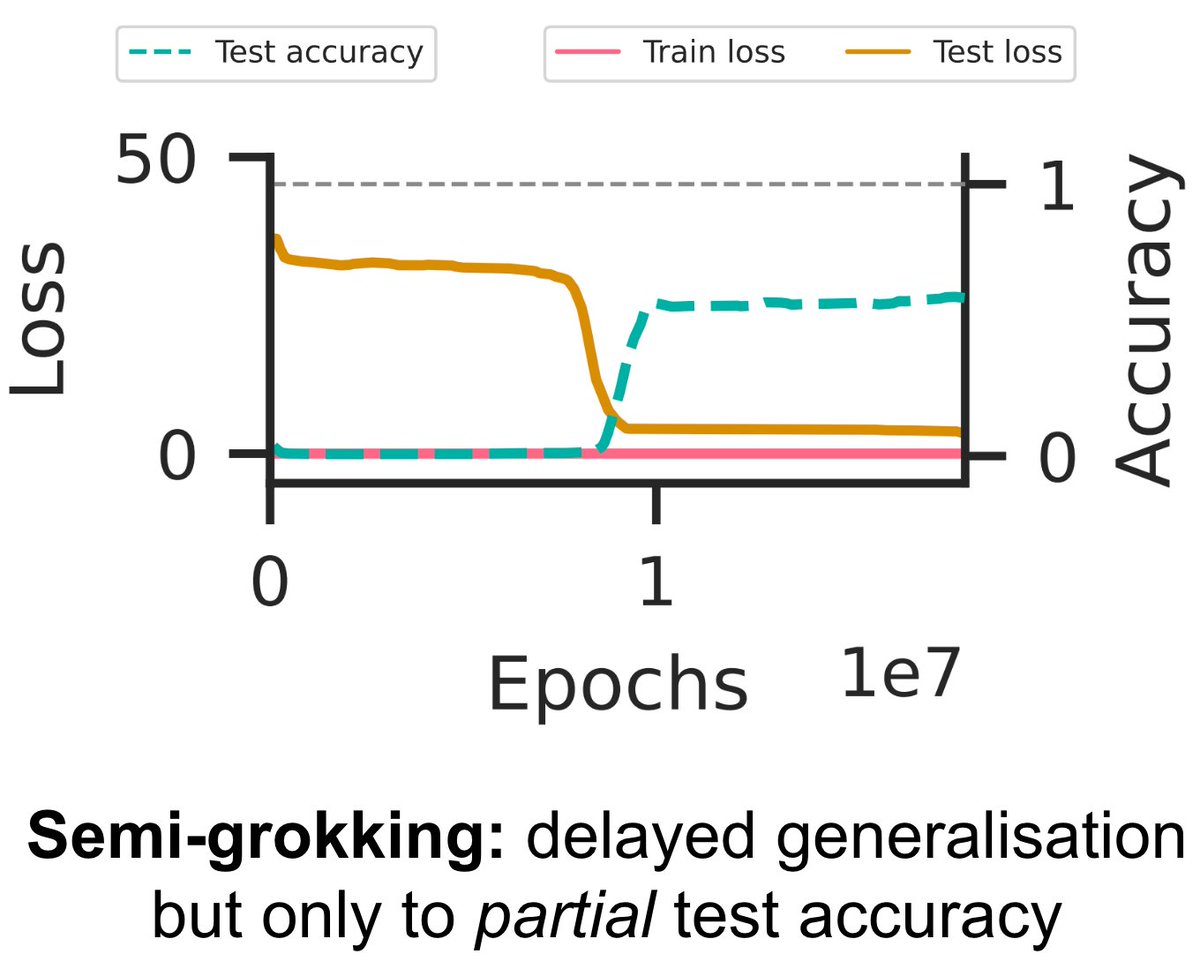

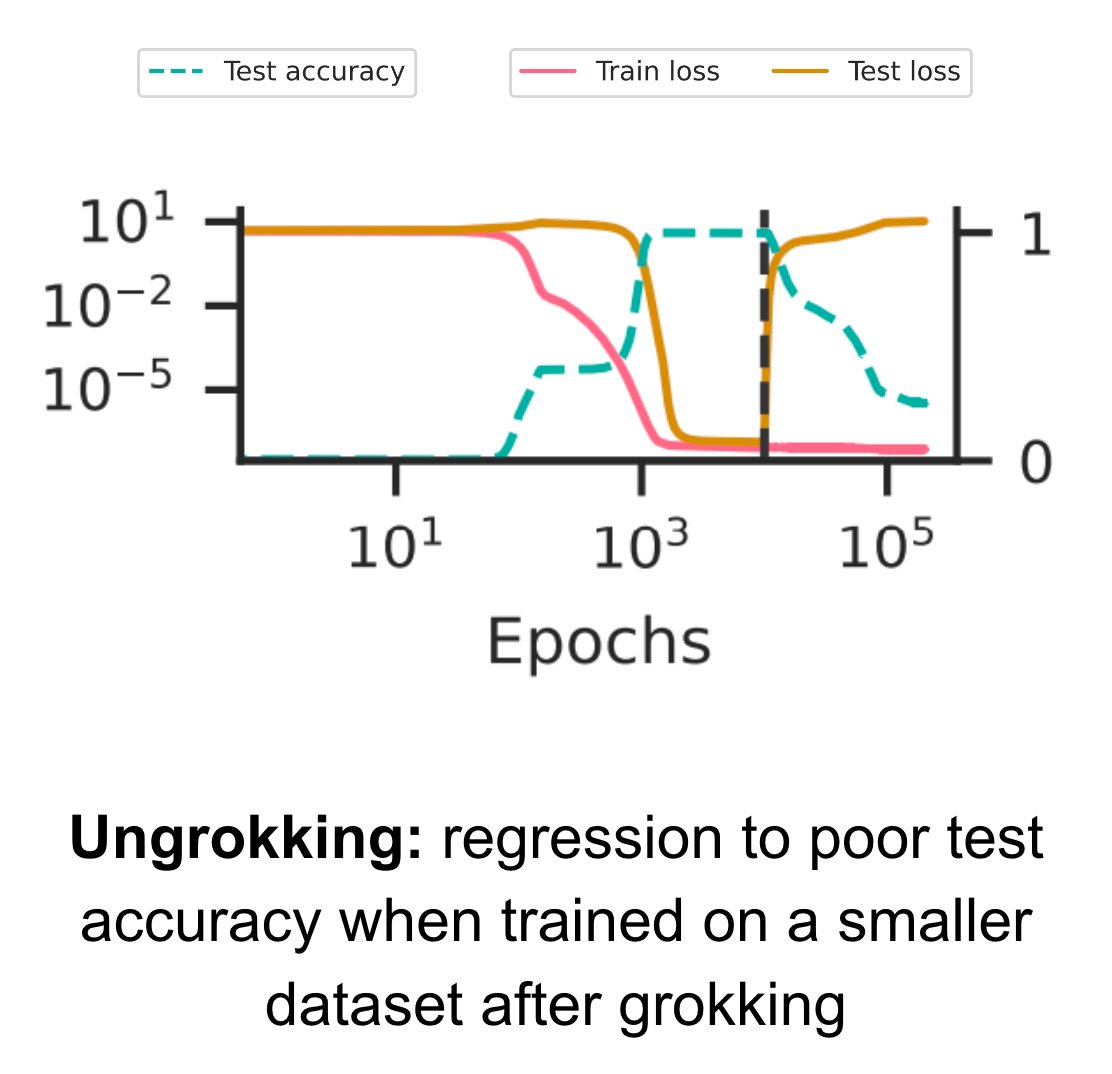

Our latest paper (arxiv.org/abs/2309.02390) provides a general theory explaining when and why grokking (aka delayed generalisation) occurs – a theory so precise that we can predict hyperparameters that lead to partial grokking, and design interventions that reverse grokking! 🧵👇

Q: What's better than Mixture of Experts? A: Mixture of Mixture of Experts! Introducing GodMode: the AI Chat Browser smol.ai/godmode Fast, Free, Access to ChatGPT, Bing, Bard, Claude, YouChat, Poe, Perplexity, Phind, and Local/GGML Models like Vicuna and Alpaca No…

As an LLM outsider I was surprised to learn at #OpenAI's #ICML2023 party that everyone in the field expects that AGI is basically solved and it just needs another 1-2 orders of magnitude increase in data and compute.

These kinds of issues make me doubt that not releasing model details will help at all with AI safety. Lots of people have to waste their time guessing what's going on instead of stress testing models more efficiently. Safe systems become safer the more people can look into them.

GPT-4 is getting worse over time, not better. Many people have reported noticing a significant degradation in the quality of the model responses, but until now, it was all anecdotal. But now we know. At least one study shows how the June version of GPT-4 is objectively worse than…
