Noam Brown

@polynoamial

Researching reasoning @OpenAI | Co-created Libratus/Pluribus superhuman poker AIs, CICERO Diplomacy AI, and OpenAI o3 / o1 / 🍓 reasoning models

Today, I’m excited to share with you all the fruit of our effort at @OpenAI to create AI models capable of truly general reasoning: OpenAI's new o1 model series! (aka 🍓) Let me explain 🧵 1/

Our efforts at @OpenAI to advance scientific progress aren't just limited to math/physics/coding. In this work, GPT-5 was evaluated on its ability to optimize wet lab experiments. Very impressive work from @MilesKWang and team!

While humans acted as GPT-5’s hands for carrying out the protocols, we also piloted an autonomous robot. It was built to execute arbitrary Gibson cloning protocols from natural language, with human supervision for safety.

Today, we're launching @worktrace_ai to help businesses uncover their best automation opportunities and build those automations. Our founders, Angela Jiang (product manager of GPT-3.5 and GPT-4 at OpenAI) and Deepak Vasisht (UIUC CS professor, MIT researcher, IIT graduate of the…

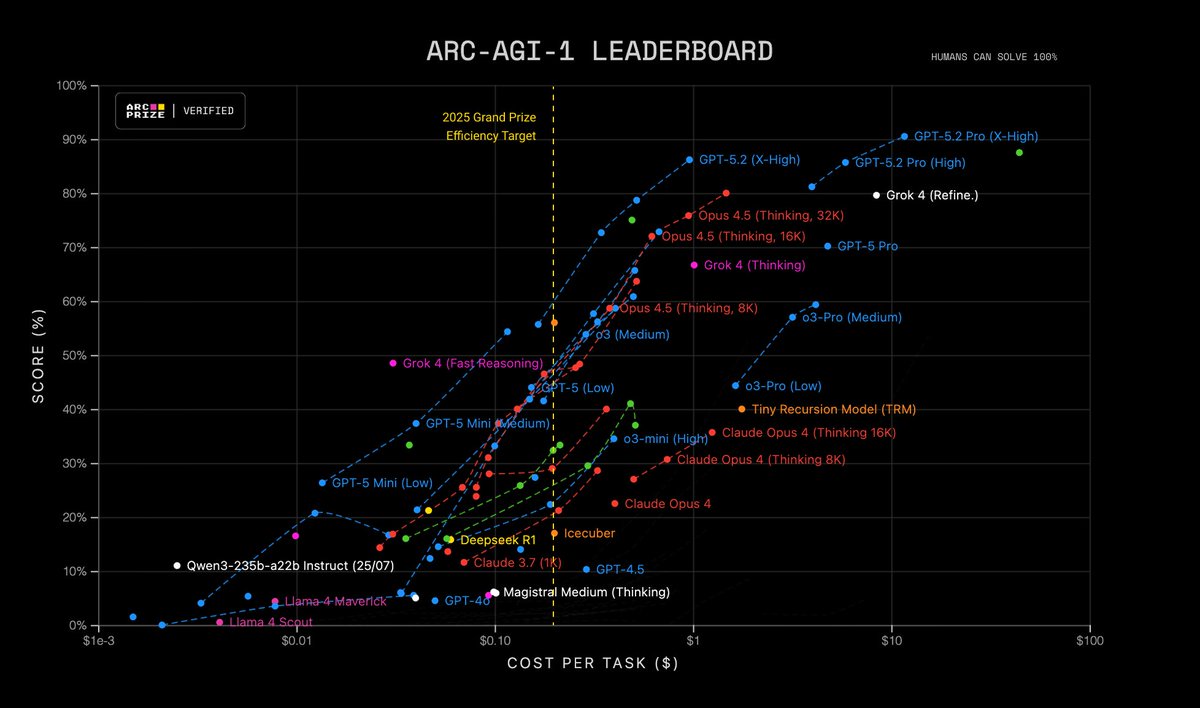

An important lesson that ARC-AGI has internalized, but not many others have, is that benchmark perf is a function of test-time compute. @OpenAI publishes single-number benchmark results because it's simpler and people expect to see it, but ideally all evals would have an x-axis.

A year ago, we verified a preview of an unreleased version of @OpenAI o3 (High) that scored 88% on ARC-AGI-1 at est. $4.5k/task. Today, we’ve verified a new GPT-5.2 Pro (X-High) SOTA score of 90.5% at $11.64/task. This represents a ~390X efficiency improvement in one year.
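The ~390X figure can be checked from the two per-task costs quoted in the post. The sketch below uses hypothetical variable names and takes the $4.5k figure at face value, even though the post labels it an estimate:

```python
# Per-task costs as quoted in the post (USD). The o3 figure is an estimate.
o3_high_cost_per_task = 4500.00    # o3 (High) preview, 88% on ARC-AGI-1
gpt52_pro_cost_per_task = 11.64    # GPT-5.2 Pro (X-High), 90.5% on ARC-AGI-1

# "Efficiency improvement" is read here as the ratio of cost per task.
cost_ratio = o3_high_cost_per_task / gpt52_pro_cost_per_task
print(f"cost ratio: {cost_ratio:.1f}x")
```

The ratio comes out a little under 387, which the post rounds to ~390X. It compares cost per task only and does not credit the 2.5-point higher score.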

From inception to release, the journal publication process can easily take over a year. @OpenAI o1 was made available only a year ago.

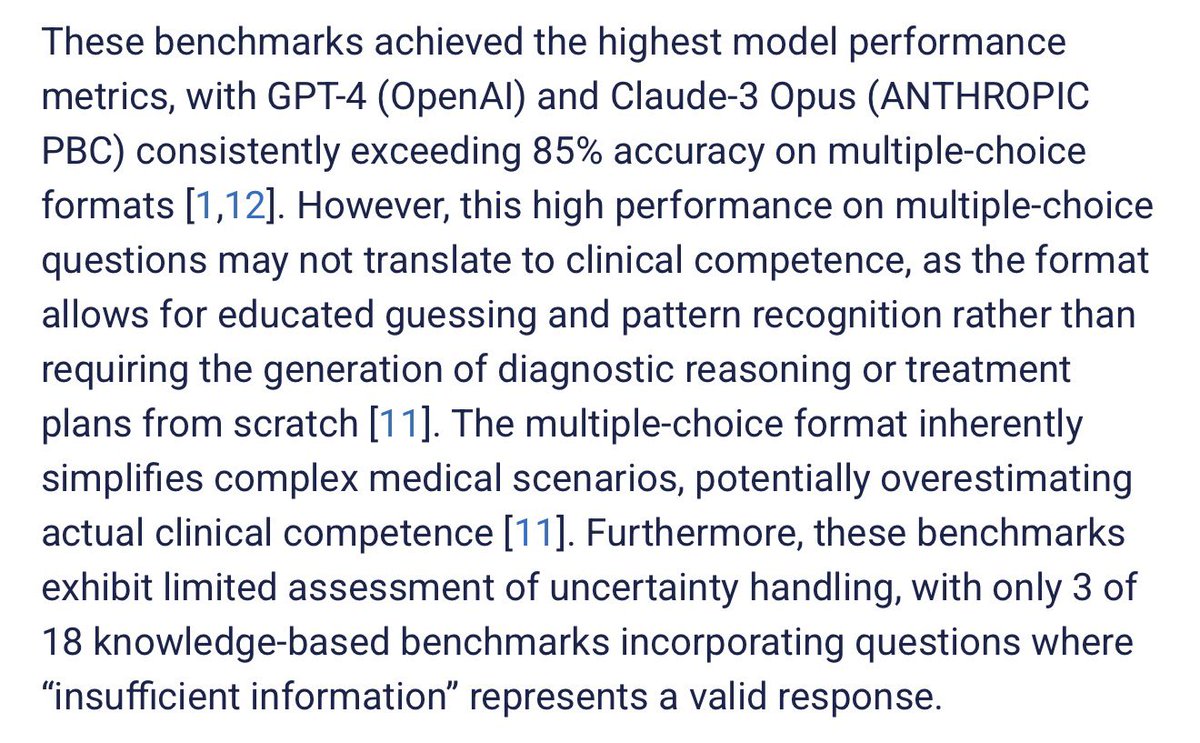

I hate to keep bringing this up, but studies cannot lump reasoners with earlier models when considering AI abilities. And while studies don’t need to always use the latest models, they should test to see if there are trends in ability as model size scales to anticipate the future.

Social media tends to frame AI debate into two caricatures: (A) Skeptics who think LLMs are doomed and AI is a bunch of hype. (B) Fanatics who think we have all the ingredients and superintelligence is imminent. But if you read what leading researchers actually say (beyond the…

One point I made that didn’t come across:
- Scaling the current thing will keep leading to improvements. In particular, it won’t stall.
- But something important will continue to be missing.

The biggest misconception I hear about GenAI is that it inevitably outputs slop because it's trained to output "the average of the internet". But that's simply not true. It's trained to model the *entire distribution*, and RL lets it go beyond the human distribution. AlphaGo was…

3 years ago we could showcase AI's frontier w. a unicorn drawing. Today we do so w. AI outputs touching the scientific frontier: cdn.openai.com/pdf/4a25f921-e… Use the doc to judge for yourself the status of AI-aided science acceleration, and hopefully be inspired by a couple examples!

There are few as qualified as @zicokolter to teach a modern AI course. He’s both head of the machine learning department at @CarnegieMellon and on the board of @OpenAI. Intro to AI courses have badly needed an update with the rise of deep learning. Happy to see it happen at CMU!

I'm teaching a new "Intro to Modern AI" course at CMU this Spring: modernaicourse.org. It's an early-undergrad course on how to build a chatbot from scratch (well, from PyTorch). The course name has bothered some people – "AI" usually means something much broader in academic…

In 2019 @hughbzhang sent me a detailed personalized cold email asking to intern with me. I was impressed with what he wrote and his background, so I hired him as an AI resident for his gap year before grad school. If I got that email today I’d just assume it was AI generated.

What happens when online job applicants start using LLMs? It ain't good.
1. Pre-LLM, cover letter quality predicts your work quality, and a good cover gets you a job
2. LLMs wipe out the signal, and employer demand falls
3. Model suggests high ability workers lose the most

1/n

Below is a deep dive into why self play works for two-player zero-sum (2p0s) games like Go/Poker/Starcraft but is so much harder to use in "real world" domains. tl;dr: self play converges to minimax in 2p0s games, and minimax is really useful in those games. Every finite 2p0s…
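The convergence claim can be illustrated on a toy game. The sketch below is not taken from the thread, which is truncated here. It assumes fictitious play as the self-play scheme, in which each copy best-responds to the other's move history, and uses rock-paper-scissors, whose minimax strategy is to play each action one third of the time. The function name and the fixed first move are illustrative choices.

```python
# Payoff to the row player in rock-paper-scissors; rows and columns are R, P, S.
PAYOFF = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]


def fictitious_self_play(payoff, steps):
    """Return empirical action frequencies after `steps` rounds of self play."""
    n = len(payoff)
    counts = [0] * n
    counts[0] = 1  # arbitrary first move (rock); an assumption of this sketch
    for _ in range(steps):
        # Payoff of each action against the opponent's history so far
        # (scaled by the history length, which does not change the argmax).
        values = [
            sum(payoff[a][b] * counts[b] for b in range(n)) for a in range(n)
        ]
        best = max(range(n), key=values.__getitem__)
        # The game is symmetric and both copies share the same history,
        # so both pick the same best response and one count vector suffices.
        counts[best] += 1
    total = sum(counts)
    return [c / total for c in counts]


frequencies = fictitious_self_play(PAYOFF, steps=30000)
print([round(f, 3) for f in frequencies])
```

The individual moves keep cycling through rock, paper, and scissors; it is the long-run frequencies that drift toward one third each. That averaging guarantee is specific to the two-player zero-sum setting the post describes.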

Self play works so well in chess, go, and poker because those games are two-player zero-sum. That simplifies a lot of problems. The real world is messier, which is why we haven’t seen many successes from self play in LLMs yet. Btw @karpathy did great and I mostly agree with him!

.@karpathy says that LLMs currently lack the cultural accumulation and self-play that propelled humans out of the savannah:

Culture:
> “Why can’t an LLM write a book for the other LLMs? Why can’t other LLMs read this LLM’s book and be inspired by it, or shocked by it?”

Self…

.@Stanford courses are high-quality but the policies are definitely outdated. I’m hearing of rampant blatant cheating happening where students are plugging the questions directly into ChatGPT during the midterms, but professors are not allowed to proctor the exams due to the…

Harvard and Stanford students tell me their professors don't understand AI and the courses are outdated. If elite schools can't keep up, the credential arms race is over. Self-learning is the only way now.
