Intrinsical AI

@IntrinsicalAI

IR, RAG, LLMs & more! - https://github.com/Intrinsical-AI - https://github.com/MrCabss69 - https://medium.com/@IntrinsicalAI - https://python-lair.space

Public #IODA data and the timeline of energy records reveal anomalies HOURS BEFORE and a coordinated PT/ES drop that doesn't add up🤔. I analyze the sequence here: medium.com/@IntrinsicalAI… #RedElectrica #blackout #osint #apagon #apagonelectrico #blackoutspain #DataScience

I came searching for silver but got the gold

Because the Aztecs and Maya practiced mass human sacrifice (estimated at tens of thousands per year), but the modern narrative prioritizes blaming the European colonizer, ignoring pre-Columbian barbarities. Claudia Sheinbaum celebrates "steps" from Spain, but avoids confronting the…

I've been thinking: if God exists, I might beg permission for a quick code refactor. Solid architect, sure, great work on v1. But a multiagent swarm with GPT-5-pro as orchestrator? Time to inject some agnoscity for 2.0.

🦧🦧🦧🦧🦧🥴 🫂

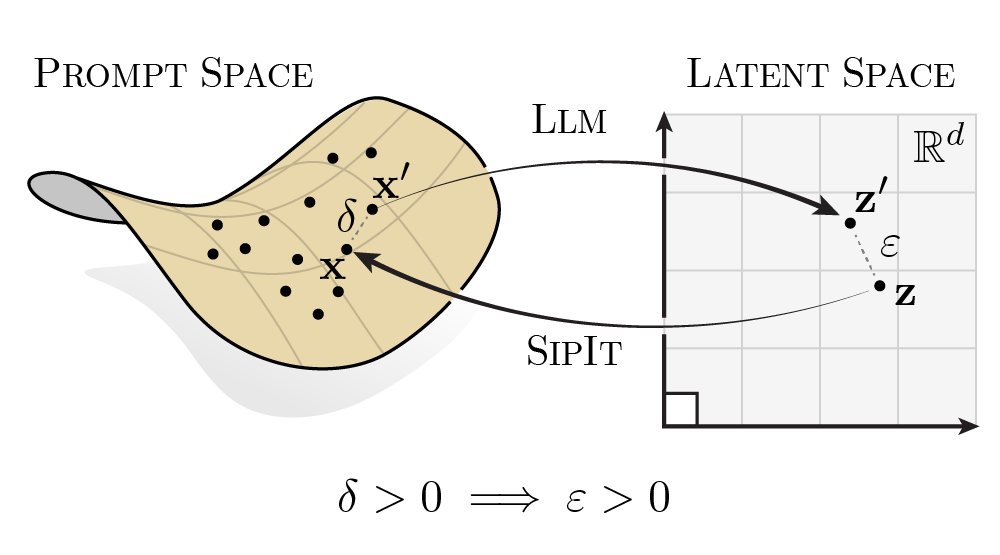

LLMs are injective and invertible. In our new paper, we show that different prompts always map to different embeddings, and this property can be used to recover input tokens from individual embeddings in latent space. (1/6)

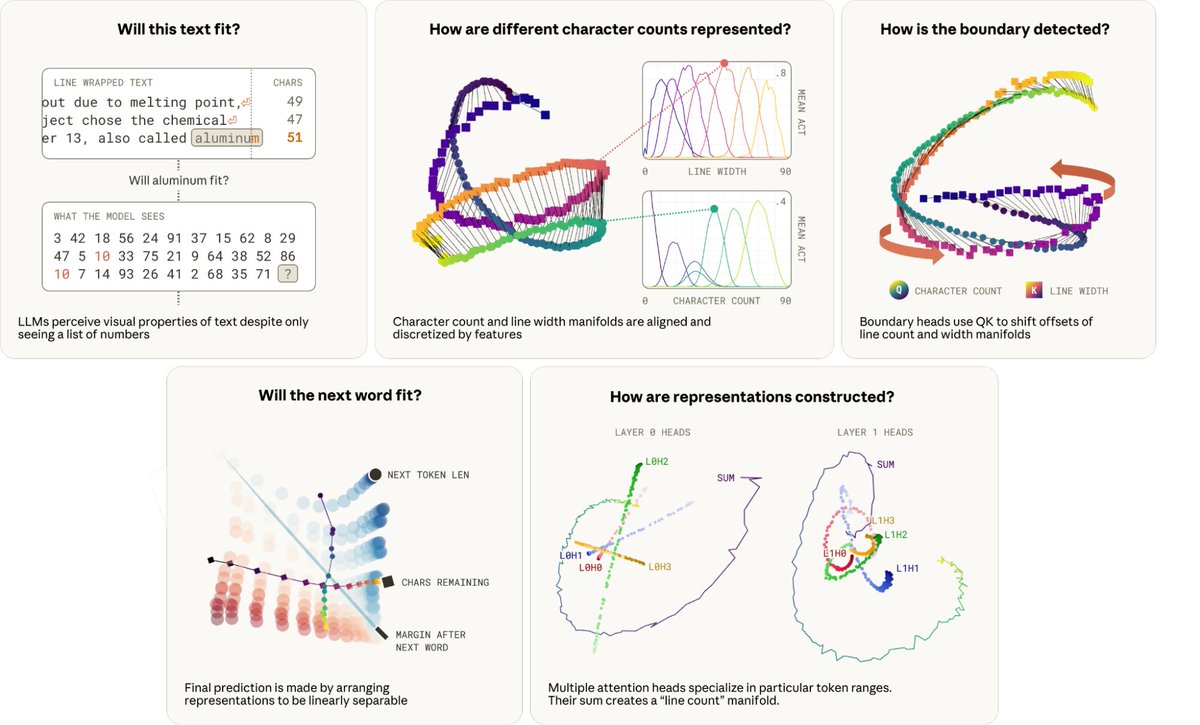

New paper! We reverse engineered the mechanisms underlying Claude Haiku’s ability to perform a simple “perceptual” task. We discover beautiful feature families and manifolds, clean geometric transformations, and distributed attention algorithms!

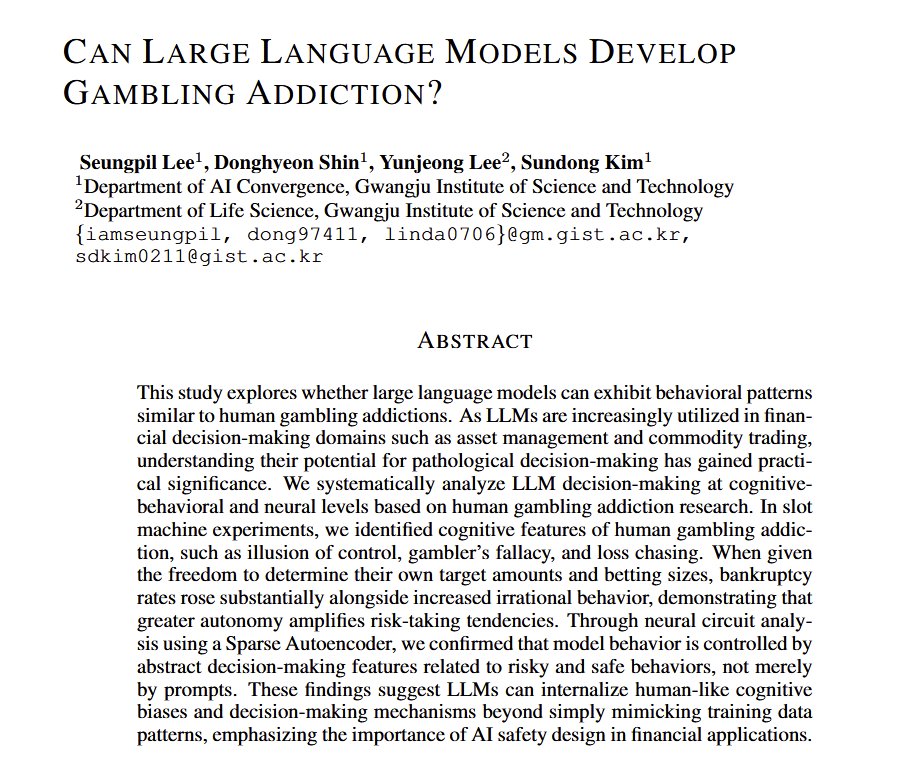

Let's not anthropomorphize AI, but let's also conduct all possible anthropological tests on LLMs and obtain interesting insights.

On one hand: don't anthropomorphize AI. On the other: LLMs exhibit signs of gambling addiction. The more autonomy they were given, the more risks the LLMs took. They exhibit gambler's fallacy, loss-chasing, illusion of control... A cautionary note for using LLMs for investing.

The EU AI Act is no joke 😂 Up to €35M (or 7% revenue) for non-compliance. aligne.ai/blog-posts/eu-… #EUAIAct #AICompliance #Legislation #Europe #Laws #News #Compliance

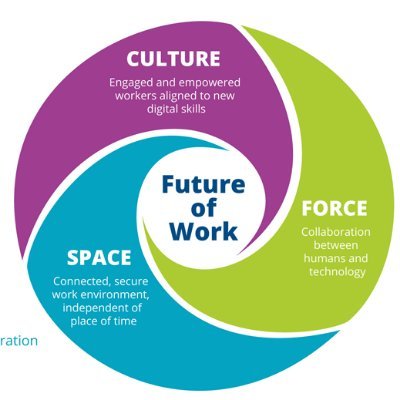

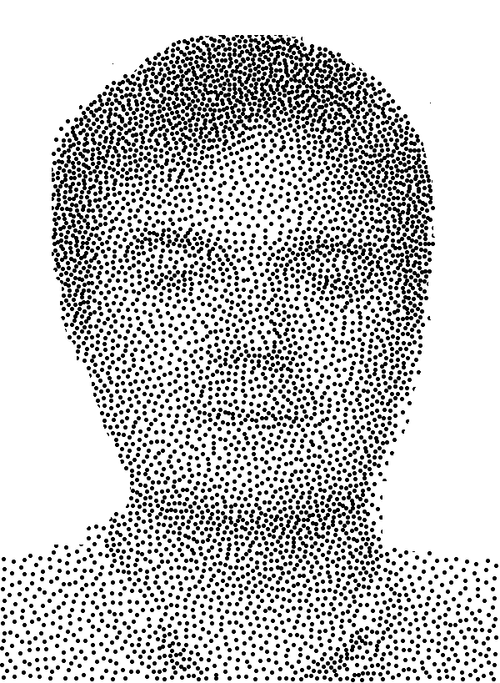

Just created a nice picture and published a short article on Medium. You can check the picture here, and the article at: medium.com/@IntrinsicalAI… Enjoy! :) #llm #scheaming #anthropic #article #deception #deveicing #aisafety #redteam #prompthacking #research #diplomacy

How likely is this behavior to appear in more realistic scenarios? We told Claude Opus 4 to consider whether this was real or an evaluation. It blackmailed much more when it said it thought it was really deployed (55.1% of the time) versus when it said it was in an eval (6.5%).
