As a founder, you’re the one carrying all the risk. You don’t build a ship just to keep it sitting safely in the harbor.

I always love roasting thoughtless products like this. A $2,000 robotic arm should be in a lab doing dangerous chemical and biological experiments, not making your coffee, not even an elaborate cappuccino. Hopefully we won’t see more products like this out there.

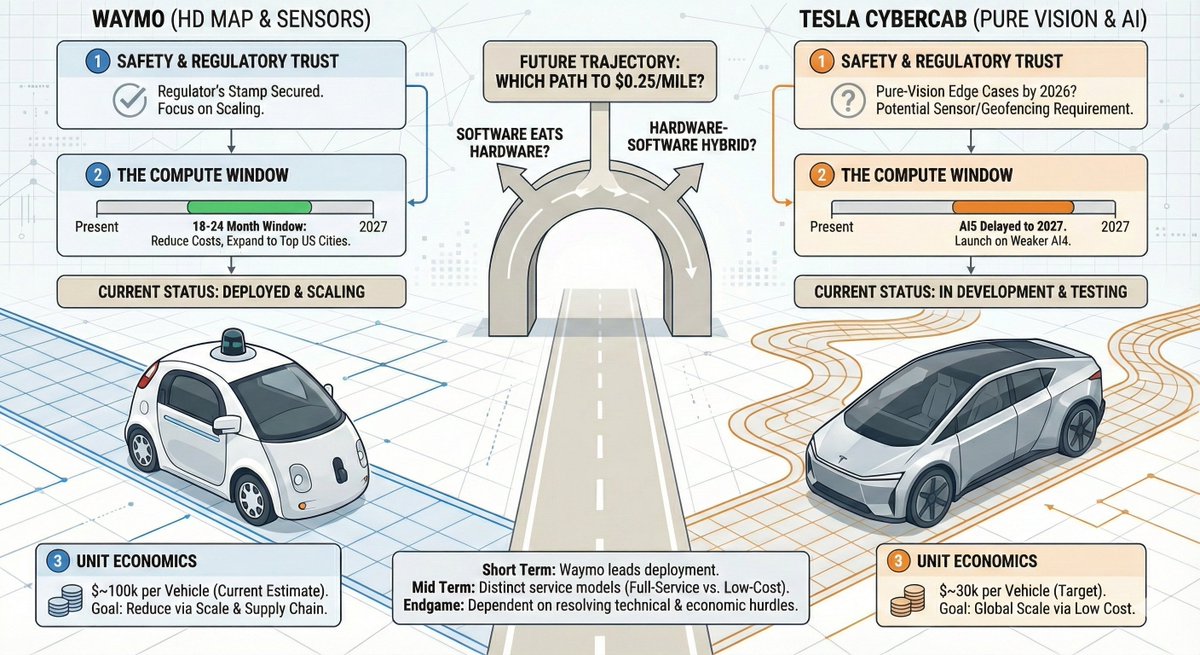

The Robotaxi endgame won’t be won by speed but by whoever cracks three hard locks first, and 2026-2027 will be the key window. 1️⃣ Safety vs. Regulatory Trust: Waymo already has the regulator’s stamp. If Tesla can’t solve pure-vision edge cases by 2026, it will be forced to add sensors or accept…

“If you want the future to be good, you must make it so.” (Elon Musk) Starship is the vehicle for us to become multiplanetary.

Alex Rampell on why AI is still underhyped and how it will diffuse inside companies. “Not to oversimplify human behavior, but it’s, ‘I want to be lazy and I want to be rich.’” “There’s somebody at every big company who has figured out, ‘I can do something in one minute that…

A classic story about “value”

Banksy’s famous painting that shredded itself right after selling for $1.4 million later increased in value to $25.4 million.

Nicely done — but don’t underestimate the competition.

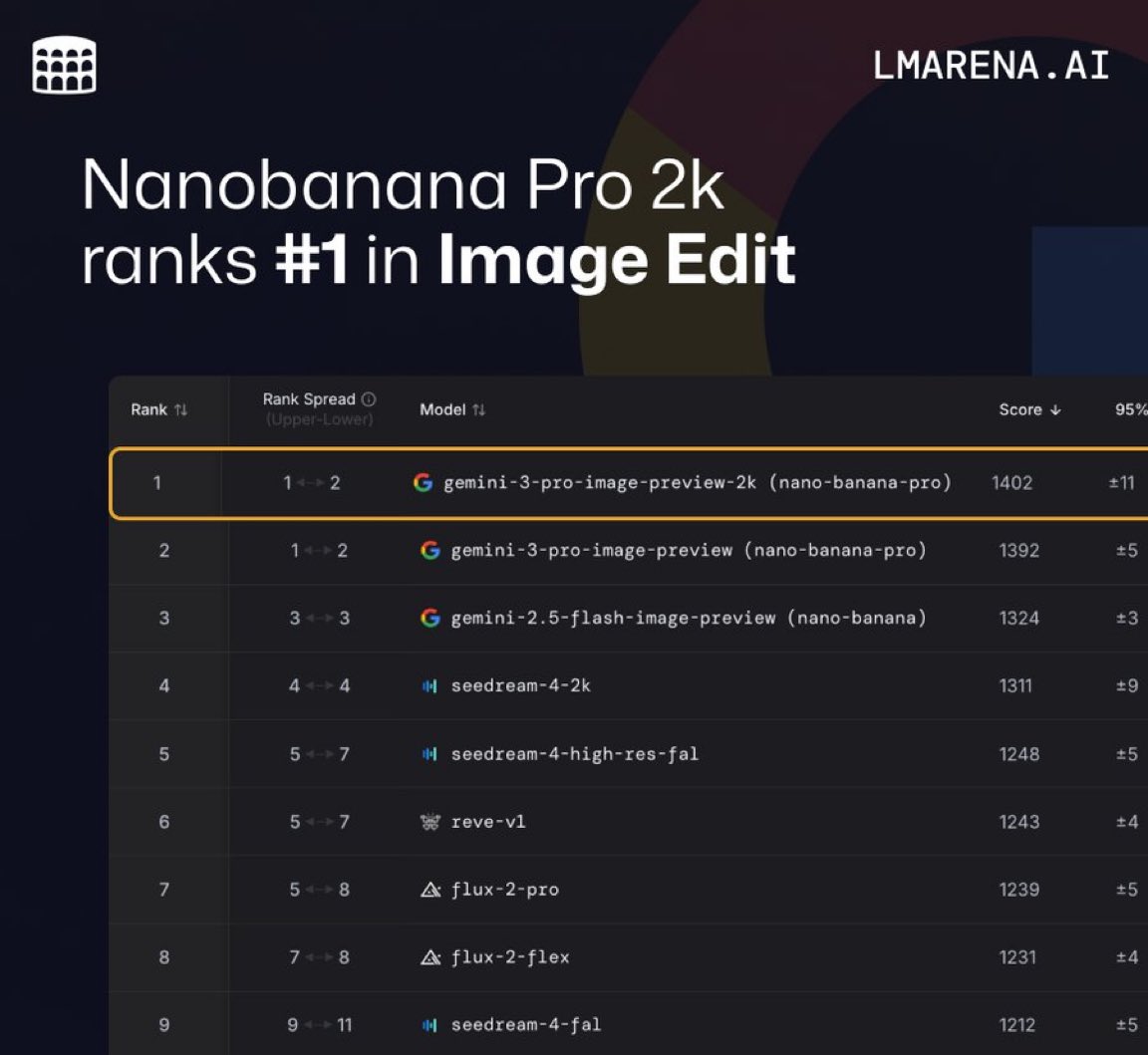

Nano Banana Pro keeps pushing the state of the art (2K and 4K resolution support is now available in the API!) 🍌

"Only wimps use tape backup: real men just upload their important stuff on ftp, and let the rest of the world mirror it" - Linus Torvalds

Just curious — how many people actually used AI to shop during Black Friday last week? I’d love to hear your experiences. Did it genuinely help you make better buying decisions?

In the near future, the biggest opportunities and investments will be in the realm between Earth orbit and escaping Earth’s gravity entirely. 🚀 Right now, you can count on one hand the companies truly working in this space; they’re extremely rare! #invest

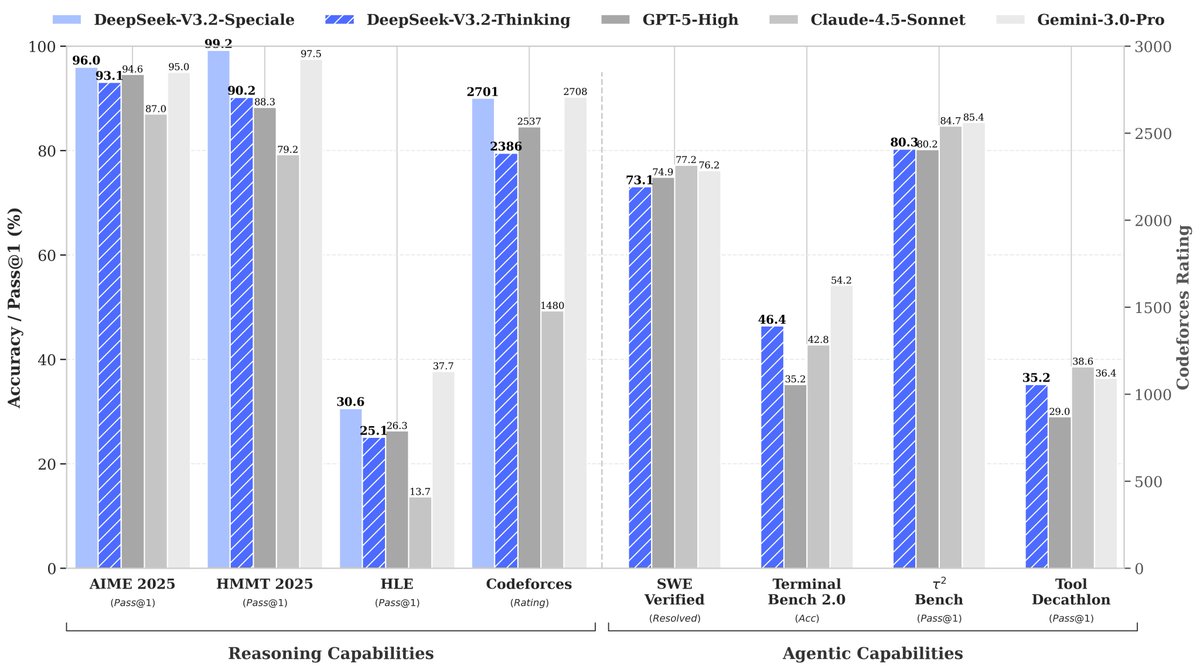

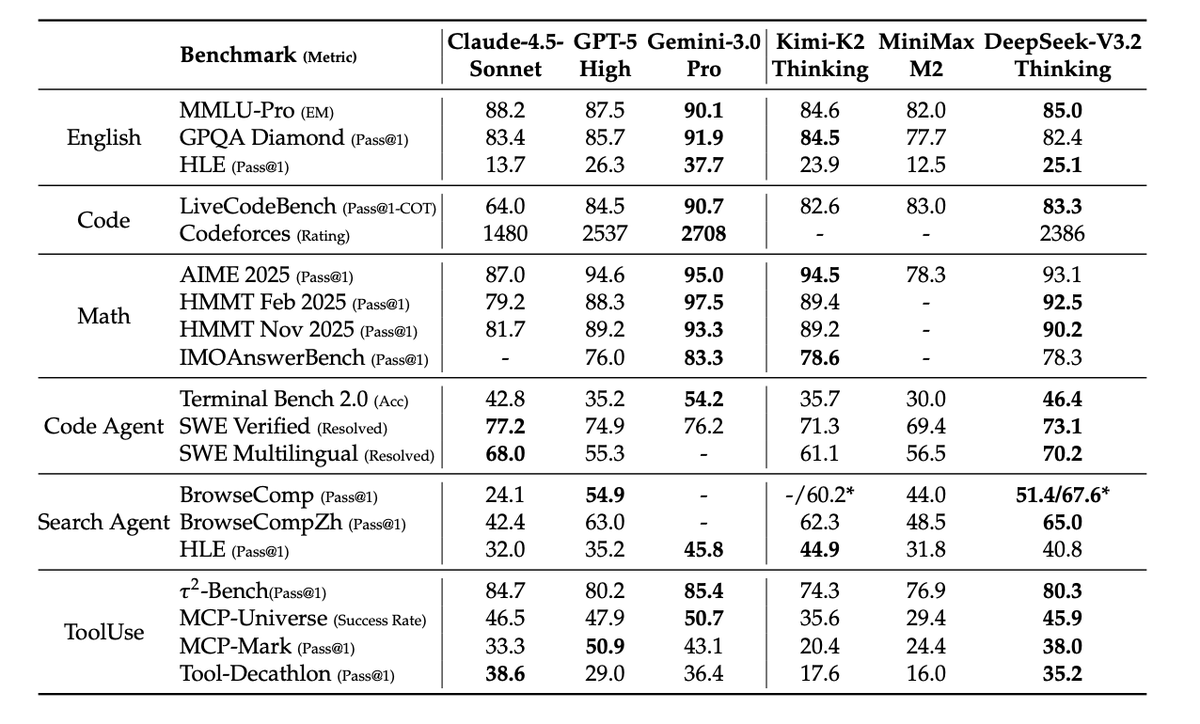

Breaking🔥: By introducing its DeepSeek Sparse Attention (DSA) technique, the DeepSeek-V3.2 model keeps all the low-memory advantages of the MLA architecture while using sparse computation to dramatically cut the cost of training and inference on long-context workloads. The…
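The post only gestures at how sparse computation saves cost. As a rough illustration, and explicitly not DeepSeek's implementation, here is a pure-Python sketch of generic top-k sparse attention for a single query: a cheap scoring pass over every token picks k positions, and the full attention math runs only on those k. The function name `top_k_sparse_attention`, the `index_dim` shortcut used as the cheap scorer, and the single-head setup are hypothetical simplifications; DSA's actual token-selection mechanism and how it interacts with MLA are not modeled here.

```python
import math


def top_k_sparse_attention(query, keys, values, k, index_dim=2):
    """Single-query attention restricted to the k best-scoring keys (k >= 1).

    Hypothetical sketch: the "indexer" below is just a dot product over the
    first `index_dim` coordinates, a stand-in for a real learned selector.
    """
    # Step 1 (cheap pass over ALL tokens): score each key with the
    # low-dimensional stand-in indexer and keep the k highest-scoring positions.
    index_scores = [
        sum(q * x for q, x in zip(query[:index_dim], key[:index_dim]))
        for key in keys
    ]
    selected = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)[:k]

    # Step 2 (expensive pass over only k tokens): ordinary scaled
    # dot-product attention using the full query/key vectors.
    scale = 1.0 / math.sqrt(len(query))
    logits = [scale * sum(q * x for q, x in zip(query, keys[i])) for i in selected]
    peak = max(logits)  # subtract the max for a numerically stable softmax
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Step 3: weighted sum of the selected value vectors.
    dim = len(values[0])
    return [sum(w * values[i][d] for w, i in zip(weights, selected)) for d in range(dim)]


if __name__ == "__main__":
    q = [1.0, 0.0, 0.5, 0.0]
    keys = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
            [0.9, 0.1, 0.0, 0.0], [-1.0, 0.0, 0.0, 0.0]]
    values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [5.0, 5.0]]
    print(top_k_sparse_attention(q, keys, values, k=2))
```

With k equal to the number of tokens, the sketch reduces to ordinary dense attention; shrinking k is where long-context savings would come from, since the expensive step scales with k rather than with the full sequence length (the cheap scoring pass still touches every token).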

Trends in the United States

1. Warner Bros: 144K posts
2. HBO Max: 62.9K posts
3. #FanCashDropPromotion: 1,940 posts
4. #NXXT_CleanEra: N/A
5. NextNRG Inc: 2,222 posts
6. #FridayVibes: 5,125 posts
7. Paramount: 29.1K posts
8. Good Friday: 62.3K posts
9. Cyclist: 2,486 posts
10. Ted Sarandos: 4,837 posts
11. Jake Tapper: 63.3K posts
12. National Security Strategy: 15K posts
13. David Zaslav: 2,360 posts
14. $NFLX: 5,938 posts
15. The EU: 148K posts
16. TINY CARS: 2,149 posts
17. Bandcamp Friday: 2,236 posts
18. #FridayMotivation: 5,077 posts
19. World Cup: 76K posts
20. Blockbuster: 19.3K posts
