LabInteract

@LabInteract

This is the inactive Twitter account of a research lab at Sussex University. The lab has since moved, so this account is no longer used.

We are excited to be exhibiting our Particle-Based Display #ultrasound #levitation technologies in November 2020 at the #Design Capital of The World Exhibition, in co-operation with the #Shanghai Academy of Fine Arts @ShanghaiEye

Everything ready for showcasing our work in #SoftRobotics #bioinspiration #haptics for bringing people closer and growing together at the #DiscoveryHub - Grow #BloomsburyFestival2023!

Get ready for a thrilling showcase of cutting-edge research by @SoftHaptics and @uclrobotics at this year's #BloomsburyFestival2023! Don't miss out on the future of technology! @uclmecheng @UCLEngineering @UCLEastEngage

The deadline for these positions is August 16th; please share with job seekers in Human-Centered Computing. Thx

Do you want to work on HCI in Copenhagen? We look for assistant professors that focus on technologies, including AR, UBICOMP, tangible, IUI, VR, fabrication, mobile. Deadline August 16th, details at bit.ly/2YfK0OC. Please RT and encourage folks to get in touch 🇩🇰🏛️🎉📚🙏

Using #NeuralNetworks and #GeneticAlgorithms, #Interactlab researchers are exploring how to use #AcousticMetamaterials to #cloak an object from an incoming ultrasound wave. See how the “sound shadow” is reduced by the strategically placed blocks in the second image?

With real-time path generation and image projection, our #ParticleBasedDisplays could be used to generate a #hologram of each participant in a conference call, taking #Remote #Interaction to the next level.

From our virtual reading group: #visual distortion is common in museums or events, but what about #auditory distortion? Here, we see how #training users can improve their sound localization, reducing errors when spotting distorted #spatial sound sources. tinyurl.com/yajw52sw

#Schlieren optics can help to visualise invisible air pressure differences, including sound! Here is a neat little project on background oriented schlieren imaging used on a candle: calebkruse.com/10-projects/sc…

Looking forward to a new exciting chapter of multisensory experience and interface research at @uclic together with @sssram and Diego Martinez Plasencia uclic.ucl.ac.uk/news-events-se…

Really excited to announce my move to @uclic with @obristmarianna and Diego Martinez Plasencia to form one of the largest HCI research groups in the world 😀. uclic.ucl.ac.uk/news-events-se…

In a spooky haunted house attraction, a visitor feels a ghostly hand touch his shoulder. This remote #hapticfeedback is provided by #Interactlab phased array transducer boards.
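The tweet does not say how the boards are driven; the usual principle behind mid-air haptics with a phased array is to give each transducer an emission phase that cancels its travel time, so all waves arrive in phase at one focal point. The sketch below assumes a flat 16 x 16 array, a 40 kHz carrier, a hypothetical 10.5 mm pitch, and ideal monopole emitters with no directivity. It is an illustration of phase focusing under those assumptions, not the lab's firmware.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C (assumed)
FREQUENCY = 40e3        # Hz, a common airborne-ultrasound transducer frequency (assumed)
PITCH = 0.0105          # m, hypothetical centre-to-centre transducer spacing

def array_positions(n=16, pitch=PITCH):
    """Positions of a flat n x n array centred at the origin in the z = 0 plane."""
    coords = (np.arange(n) - (n - 1) / 2) * pitch
    xx, yy = np.meshgrid(coords, coords)
    return np.column_stack([xx.ravel(), yy.ravel(), np.zeros(n * n)])

def focus_phases(positions, focal_point, freq=FREQUENCY, c=SPEED_OF_SOUND):
    """Emission phase per transducer (radians in [0, 2π)) so all waves arrive in phase at the focus."""
    k = 2 * np.pi * freq / c
    d = np.linalg.norm(positions - np.asarray(focal_point), axis=1)
    # Each wave picks up phase k*d while travelling; pre-compensate with -k*d.
    return np.mod(-k * d, 2 * np.pi)

def pressure_at(point, positions, phases, freq=FREQUENCY, c=SPEED_OF_SOUND):
    """Complex pressure at a point, treating each transducer as an ideal monopole."""
    k = 2 * np.pi * freq / c
    d = np.linalg.norm(positions - np.asarray(point), axis=1)
    return np.sum(np.exp(1j * (k * d + phases)) / d)

positions = array_positions()
focus = (0.0, 0.0, 0.15)  # hypothetical focal point 15 cm above the array
phases = focus_phases(positions, focus)
on_focus = abs(pressure_at(focus, positions, phases))
off_focus = abs(pressure_at((0.02, 0.0, 0.15), positions, phases))
print(on_focus, off_focus)
```

At the focus every term has phase 2πm, so the magnitude equals the plain sum of 1/d; 2 cm to the side the contributions partly cancel. A real haptic rig would also need to modulate the focus so the skin can feel it, which this sketch leaves out.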

This coming Tuesday, there’ll be an online @sig_chi Smell, Taste, & Temperature symposium (stt20.plopes.org) with a very exciting line-up! It’s entirely free and open to the public 💐🍦❄️ The symposium is brought to you by the HCIntegration Lab at @uchicagocs @uchicago 1/5

TableHop: an interactive tabletop display made from stretchable spandex and actuated using transparent electrodes. Visuals are projected beneath the fabric, and the electrodes provide #HapticFeedback to the users' fingertips @acmsigchi #CHI2016 tinyurl.com/ybxubs88

We also strongly encourage proposals that align with SIGCHI's commitment towards addressing racial and other systemic injustices. medium.com/sigchi/a-time-… #BLM

Good read on the purpose and role of Venture Capitalists from GP of Concentric - Kjartan Risk forbes.com/sites/kjartanr…

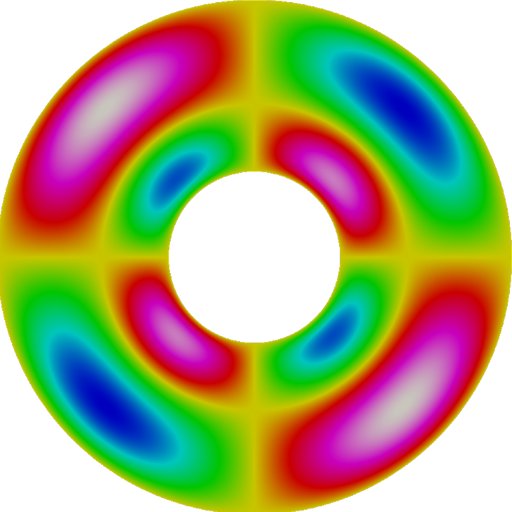

Check out the newest publication in Advanced Materials Technologies. We use individually tuned unit cells to show focusing of ultrasonic waves at different points in space as well as generation of sonic hologram images. tinyurl.com/y8x9e6qy
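The tweet does not describe how the unit cells are tuned. One common way to think about such metasurfaces, assumed here, is that each cell is picked from a small library of designs that each delay the wave by one of N discrete phase steps, and the focusing phase for each cell is rounded to the nearest available step. The sketch below (hypothetical 16 x 16 grid at half-wavelength pitch, 40 kHz in air, plane wave at normal incidence, equal cell weights) shows how much focal amplitude survives that rounding. It is not the method from the publication.

```python
import numpy as np

C_AIR = 343.0              # m/s, speed of sound in air (assumed)
FREQ = 40e3                # Hz, assumed operating frequency
WAVELENGTH = C_AIR / FREQ
K = 2 * np.pi / WAVELENGTH

def cell_centres(n=16, pitch=WAVELENGTH / 2):
    """Centres of a hypothetical n x n planar grid of unit cells at z = 0."""
    coords = (np.arange(n) - (n - 1) / 2) * pitch
    xx, yy = np.meshgrid(coords, coords)
    return np.column_stack([xx.ravel(), yy.ravel(), np.zeros(n * n)])

def ideal_phase_delays(cells, focus, k=K):
    """Phase each cell should add so a normally incident plane wave converges on `focus`."""
    d = np.linalg.norm(cells - np.asarray(focus), axis=1)
    return np.mod(-k * d, 2 * np.pi)

def quantize(phases, levels):
    """Snap each phase to the nearest of `levels` equally spaced values (one per cell design)."""
    step = 2 * np.pi / levels
    return np.mod(np.round(phases / step) * step, 2 * np.pi)

def focal_efficiency(ideal, actual):
    """Focal amplitude relative to perfect phases; equal cell weights, 1/d spreading ignored."""
    return abs(np.mean(np.exp(1j * (actual - ideal))))

cells = cell_centres()
ideal = ideal_phase_delays(cells, focus=(0.0, 0.0, 0.1))
for levels in (2, 4, 8, 16):
    print(levels, focal_efficiency(ideal, quantize(ideal, levels)))
```

Because rounding never moves a phase by more than π/N, the efficiency can never drop below cos(π/N); with eight cell designs that floor is already above 0.92, which is one reason a modest library of cells can be enough for focusing.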

From our virtual reading group: “super-oscillation” is a concept whereby #wave-packets can temporarily oscillate at frequencies much higher than their largest frequency component and thus resolve & manipulate subwavelength areas of space @NatureComms tinyurl.com/y7cao3x8
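A standard textbook illustration of super-oscillation (due to Michael Berry, not taken from the linked paper) is f(x) = (cos x + i·a·sin x)^n with a > 1. Expanding it gives only Fourier components e^{ikx} with |k| ≤ n, yet near x = 0 it behaves like e^{i·a·n·x}, i.e. it oscillates a times faster than its fastest component. The sketch below checks both facts numerically; the parameters a = 4 and n = 10 are arbitrary choices.

```python
import numpy as np

def superoscillatory(x, a=4.0, n=10):
    """Berry's example f(x) = (cos x + i a sin x)^n, band-limited to wavenumbers |k| <= n."""
    return (np.cos(x) + 1j * a * np.sin(x)) ** n

def local_wavenumber(f, x, h=1e-6):
    """Rate of change of the phase of f at x, via a central difference of arg f."""
    return np.angle(f(x + h) / f(x - h)) / (2 * h)

def max_out_of_band(f, n, samples=64):
    """Largest Fourier coefficient with |k| > n, relative to the largest coefficient overall."""
    x = 2 * np.pi * np.arange(samples) / samples
    coeffs = np.fft.fft(f(x)) / samples
    k = np.fft.fftfreq(samples, d=1.0 / samples)  # integer wavenumbers for a 2π period
    return np.max(np.abs(coeffs[np.abs(k) > n])) / np.max(np.abs(coeffs))

n = 10
print(local_wavenumber(superoscillatory, 0.0))  # close to a * n = 40, well above n = 10
print(max_out_of_band(superoscillatory, n))     # only rounding error: no components beyond n
```

The price of this trick is amplitude: at x = π/2 the magnitude is a^n, roughly a million times larger than at the origin for these parameters, which is why super-oscillatory regions are typically small and faint.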

Before diving into excellent BEM/FEM software for acoustic simulations such as #BEMpp, #Interactlab researchers have been building their own scripts to explore the capabilities of specially designed #acoustic #metamaterials by outputting numerically determined sound fields.
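The tweet does not show those scripts. A minimal sketch of the kind of calculation such a script might start from, assumed here, is to superpose free-field monopole sources using the Helmholtz Green's function e^{ikr}/(4πr) and evaluate the pressure on a 2D slice. This ignores scattering by the metamaterial itself, which is exactly what a BEM solver like BEMpp adds; the source layout and frequency are hypothetical.

```python
import numpy as np

C_AIR = 343.0  # m/s, assumed speed of sound
FREQ = 40e3    # Hz, assumed frequency

def greens_free_field(r, k):
    """Free-space Green's function of the Helmholtz equation, e^{ikr} / (4πr)."""
    return np.exp(1j * k * r) / (4 * np.pi * r)

def pressure_field(sources, amplitudes, points, freq=FREQ, c=C_AIR):
    """Complex pressure at each point from superposed monopole sources (no scattering)."""
    k = 2 * np.pi * freq / c
    # Pairwise distances, shape (n_points, n_sources)
    r = np.linalg.norm(points[:, None, :] - sources[None, :, :], axis=2)
    return greens_free_field(r, k) @ amplitudes

# Hypothetical example: 8 in-phase sources along x, evaluated on an x-z slice above them.
sources = np.column_stack([np.linspace(-0.02, 0.02, 8), np.zeros(8), np.zeros(8)])
amplitudes = np.ones(8, dtype=complex)
xs = np.linspace(-0.05, 0.05, 101)
zs = np.linspace(0.01, 0.10, 91)  # start above z = 0 to avoid the 1/r singularity
X, Z = np.meshgrid(xs, zs)
points = np.column_stack([X.ravel(), np.zeros(X.size), Z.ravel()])
field = np.abs(pressure_field(sources, amplitudes, points)).reshape(Z.shape)
print(field.shape, field.max())
```

The resulting `field` array can be passed to any image plotting routine to visualise the pressure magnitude; swapping the monopoles for phase-shifted sources is a quick way to preview what a given metamaterial layout is meant to achieve before running a full BEM model.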

Sinch to buy India's ACL Mobile for $70 million tcrn.ch/2Y6CIxX by @refsrc

From our #virtual reading group: state-of-the-art #LiDAR technology and a new #algorithm enable real-time #3D reconstructions of targets as far as 320 m away! The algorithm can even #reconstruct targets behind semi-opaque surfaces like a #camouflage net. tiny.cc/o4oaqz

Many thanks to all those interested in our ongoing SMART funding opportunity – we will be able to confirm timings for the next round of applications next week.
