PerpetuallyConfused

@MuddledLearning

Tired mathematician and programmer running on papers and obsession. I work in industry as a ~serious~ VP, this is my oasis. Drop a line.

1/ The core issue with building agents that actually work together in a meaningful way is that we limit them to our way of communicating. Machines don't need to reason like us (arxiv.org/abs/2412.06769) and they don't need to talk like us. I believe that the true solution going

speaking of annoying ai influencers, just saw someone write a little think piece on MCP...and get the M in Model Context Protocol wrong

Good job man. This is a really impressive feat, especially on nights and weekends.

I replicated the Tiny Recursive Model, but failed to get it to run on Kaggle (the ultimate goal). After a few long nights and weekends, I successfully retrained the model, had it slightly outperform the paper, but I failed in my ultimate goal: get it on Kaggle and running against…

i wonder if this is because of AI or because of the internet or if it's always existed. either way, i'm so tired.

the incredible thing about ai influencing is that the barrier to entry is very low. you don’t have to read a 42 detailed report before hyping it up. same with the abstract, that’s a lot of words. you don’t even need to read the author list because who are these guys anyway

genuinely vibe coding terrifies me. i feel so much brain fog if i let it get to a point where i stop recognizing the code. it feels the same as not exercising or making poor dietary decisions where you feel regret afterwards.

i can't quite figure out what the downstream effects of juniors / non-technical folks vibe coding are...but they're going to be bad.

I’m too dumb to understand this right now. Brb writing a post about it.

Would anyone be interested in reading through an experiment that I'll be posting? Specifically focuses on information preservation of transformers.

A new essay on the crazy, all or nothing approach to work happening in AI today, the looming human costs, and the lack of a finish line. I wouldn't say it's okay, but I'm not sure how to fix it.

We're scaling our Open-Source Environments Program As part of this, we're committing hundreds of thousands of $ in bounties and looking for partners who want to join our mission to accelerate open superintelligence Join us in building the global hub for environments and evals

Oh this is fascinating. Honestly didn’t expect to be able to have the separation that’s shown, nice work.

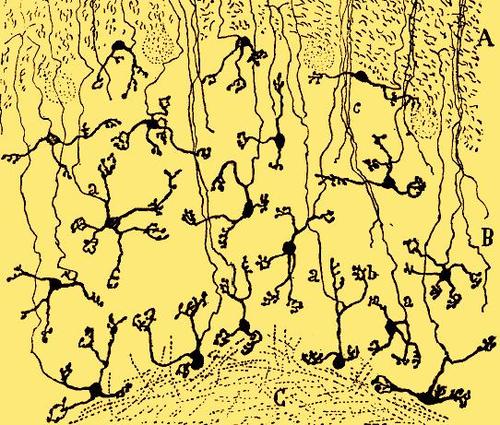

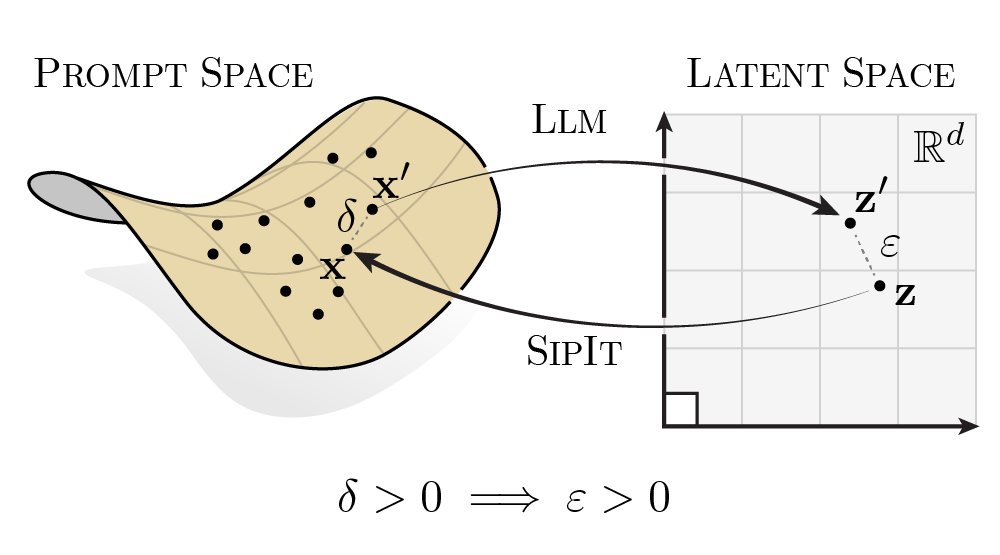

LLMs are injective and invertible. In our new paper, we show that different prompts always map to different embeddings, and this property can be used to recover input tokens from individual embeddings in latent space. (1/6)

they heard i'm on the market

the amount of times claude calls me "perfect!" is terrible for me

ok gents, it's time for a new job. expect some shitposting.

We’re all fucking idiots for not grifting

prime intellect, advanced reasoning, solid thinking, adequate cognition, basic understanding, general intuition, minimal perception, dim recognition, faint notions

lovable is going to be (and is) a fucking nightmare for devs

i am coming to the realization that nearly everything in my life has been incorrectly thought through. that's humbling.

corpo knowledge drop that everyone knows: while being able to socialize and play nice is very useful when starting your career, oftentimes as you climb the ladder you want to recede back into your slightly robotic self. i know it helped me a lot.
