Normal Computing 🧠🌡️

@NormalComputing

We build AI systems that natively reason, so they can partner with us on our most important problems. Join us https://bit.ly/normal-jobs.

你可能會喜歡

-

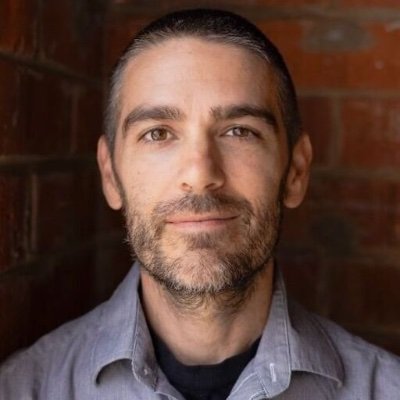

Martin Larocca

Martin Larocca

@MartinLaroo -

Patrick Coles

Patrick Coles

@ColesThermoAI -

Matthias C. Caro

Matthias C. Caro

@IMathYou2 -

Contextual AI

Contextual AI

@ContextualAI -

Henry Yuen

Henry Yuen

@henryquantum -

Marzieh Fadaee

Marzieh Fadaee

@mziizm -

Marco Cerezo

Marco Cerezo

@MvsCerezo -

Chroma

Chroma

@trychroma -

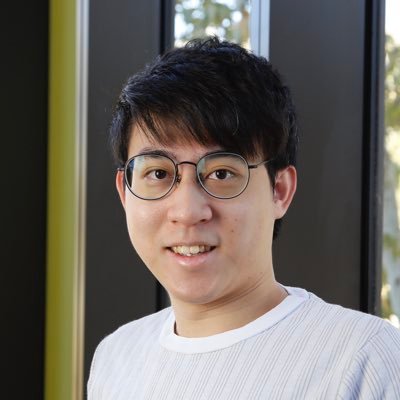

Hsin-Yuan (Robert) Huang

Hsin-Yuan (Robert) Huang

@RobertHuangHY -

Faris Sbahi 🏴☠️

Faris Sbahi 🏴☠️

@FarisSbahi -

Robin Kothari

Robin Kothari

@RobinKothari -

Jannes Nys

Jannes Nys

@JannesNys -

Brandon T. Willard

Brandon T. Willard

@BrandonTWillard -

Ruslan Shaydulin

Ruslan Shaydulin

@ruslanquantum -

Kaelan Donatella

Kaelan Donatella

@KaelanDon
