Yaoyao (Freax) Qian

@RubyFreax

🔬 RA @HelpingHandsLab @ iTEA Lab | Ex-MLE Co-op @ Lendbuzz | Ex-Technical Manager | Full-Stack Developer | 🤖 | 🦾 | LLM | 🐱🐶🐯🦁🦋

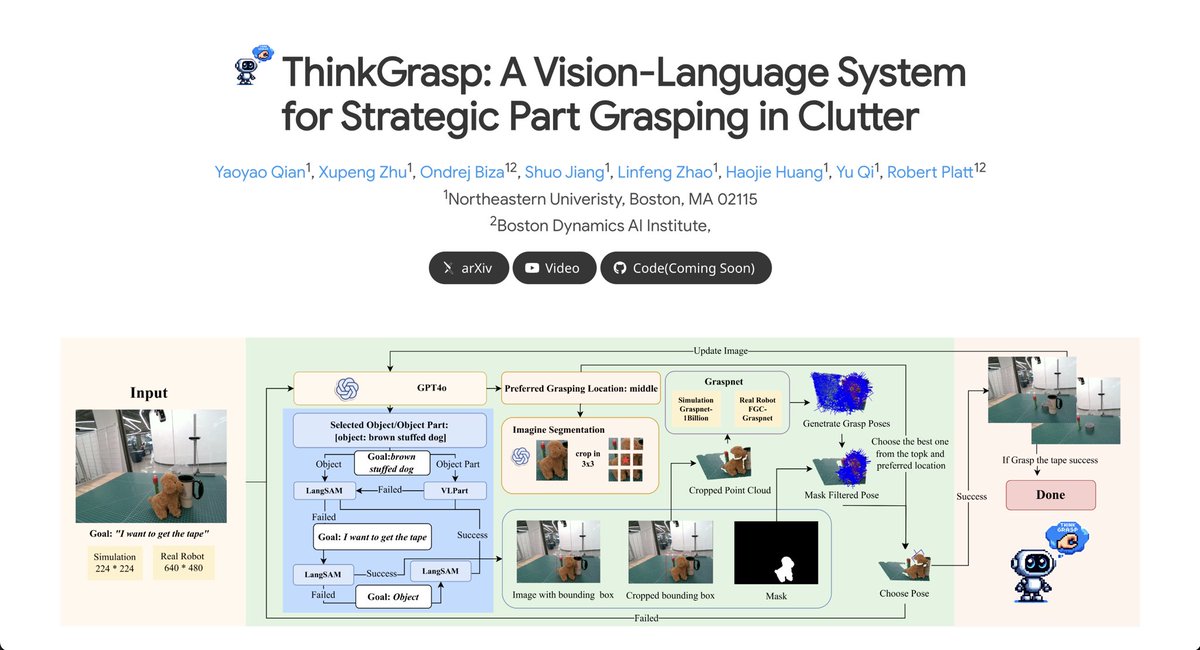

🥰 Now a #CoRL2024 paper!

❓ How do you solve grasping problems when your target object is completely out of sight? 🚀 Excited to share our latest research! Check out ThinkGrasp: A Vision-Language System for Strategic Part Grasping in Clutter. 🔗 Site: h-freax.github.io/thinkgrasp_page

Thanks @_akhaliq for sharing our work! Aim and Grasp! AimBot introduces a new design to leverage visual cues for robots - similar to scope reticles in shooting games. Let's equip your VLA models with low-cost visual augmentation for better manipulation! aimbot-reticle.github.io

Introducing Eigent — the first multi-agent workforce on your desktop. Eigent is a team of AI agents collaborating to complete complex tasks in parallel. It is your long-term working partner with fully customizable workers and MCPs. Public beta available to download for macOS,…

Excited to share our #ICML2025 paper, Hierarchical Equivariant Policy via Frame Transfer. Our Frame Transfer interface imposes the high-level decision as a coordinate frame change in the low-level policy, boosting sim performance by 20%+ and enabling complex manipulation with 30 demos.

Owen will be presenting our poster for the paper Hierarchical Equivariant Policy via Frame Transfer at ICML today (see lnkd.in/e-7p9Viq for details). If you are interested in equivariance and/or robotic manipulation, please stop by!

🥳 Visual Tree Search of Web Agent has been accepted!

🎉 Exciting News! We're thrilled to announce that our paper "Visual Tree Search of Web Agent" has been accepted to ECML-PKDD 2025, one of the premier European conferences in machine learning and data science! This breakthrough work comes from our talented PathOnAI.org…

🔥 We introduce Multiverse, a new generative modeling framework for adaptive and lossless parallel generation. 🚀 Multiverse is the first open-source non-AR model to achieve AIME24 and AIME25 scores of 54% and 46% 🌐 Website: multiverse4fm.github.io 🧵 1/n

📢 (1/16) Introducing PaTH 🛣️ — a RoPE-free contextualized position encoding scheme, built for stronger state tracking, better extrapolation, and hardware-efficient training. PaTH outperforms RoPE across short and long language modeling benchmarks arxiv.org/abs/2505.16381

🎉 We’re excited to host two challenges at LOVE: Multimodal Video Agent Workshop at CVPR 2025, advancing the frontier of video-language understanding! @CVPR #CVPR2025 📌 Track 1A: [VDC] Video Detailed Captioning Challenge Generate rich and structured captions that cover multiple…

🤖 How do AI agents actually work together? I made 2 short videos on Google’s Agent2Agent (A2A) protocol: 📘 Ep1: What is A2A? 📙 Ep2: Why it matters No backend needed—just curiosity. 🎥 Watch here: youtube.com/playlist?list=…

Just posted a 21-min tutorial on Model Context Protocol (MCP): no jargon, just real-life analogies. 🍜 Restaurant menus 🧳 Travel guides 🦸‍♂️ Superpowers 📝 Memory notes I wanted to make it clear enough for anyone, even without a tech background. 🎥👇 youtu.be/0EtVAzIYbys?si…

📌 What is Model Context Protocol (MCP)?

Just realized my paper is being used as a baseline—such a strange feeling! Seeing my model tested across different settings without me doing anything is fascinating. 🤯 Added the papers using ThinkGrasp as a baseline to its GitHub—check them out!🥳

Introducing TraceVLA: a fully open-source Vision-Language-Action model reimagining spatial-temporal awareness: tracevla.github.io ✨ 3.5x gains on real robots, SOTA in simulation 💡 Fine-tunes on just 150K trajectories ⚡ Compact 4B model = 7B performance
