Introducing quickarXiv. Papers are often written in convoluted language that is hard to understand. We're fixing that. Swap arxiv → quickarxiv on any paper URL to get an instant blog with figures, insights, and explanations. Now extracted with DeepSeek OCR 🚀

introducing runable: the general ai agent for every task. slides, websites, reports, podcasts, images, videos... everything

Obsessed with Flowith OS! Best product I've used this year. You need this. (Auto-sent) @flowith_ai

Just signed up for the #2 Terminal Bench coding agent by @openblocklabs: waitlist.openblocklabs.com/?ref=e547qa9d5x Check it out: x.com/openblocklabs/…

openblocklabs.com

OB-1 Coding Agent — Early Access

Experience the future of autonomous AI. OB-1 applies our reinforcement learning research to create agents that think, learn, and evolve.

Introducing OB-1: the new #1 coding agent on Terminal Bench. After a year of R&D, our agent now outperforms Codex and Claude Code. Early access is rolling out to waitlist users now.

Google’s “Nano-Banana” LLM (Gemini 2.5 Flash Image) – What is it? **Introduction and Background** “Nano-Banana” is Google’s latest AI image generation model, officially launched as Gemini 2.5 Flash Image on August 26, 2025. Initially revealed anonymously on LMArena as…

**Why a SQL Proxy Is Safer than Direct Connections for Local Development** When developing locally against a cloud database, you can connect directly (via public IP and connection string) or through a SQL proxy (a local agent that securely tunnels traffic). A SQL proxy is safer…

GPU programming - role in model training **Massive Parallelism:** GPUs execute thousands of threads concurrently, enabling significant speedups for data-parallel tasks like deep learning and graphics rendering. Understanding the GPU’s execution model (kernels, threads, blocks,…
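The execution model named above (a kernel launched over a grid of blocks, each block holding many threads) can be illustrated with a small CPU-side simulation. This is a minimal sketch under stated assumptions, not real GPU code: `vector_add_kernel` and `launch` are hypothetical names, and the loops run sequentially where a GPU would run every thread concurrently. Each simulated thread derives the same global index a CUDA kernel would, `blockIdx.x * blockDim.x + threadIdx.x`, and skips indices past the end of the data.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    # Hypothetical kernel. Global index, as in CUDA:
    # blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # Bounds check: the last block may have more threads than remaining elements.
    if i < len(out):
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    # Sequential stand-in for a GPU launch: on real hardware every
    # (block, thread) pair would execute in parallel.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = list(range(n))
b = [10 * x for x in range(n)]
out = [0] * n
block_dim = 4
# Ceiling division: enough blocks to cover all n elements (3 blocks of 4 threads).
grid_dim = (n + block_dim - 1) // block_dim
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

Because every thread applies the same operation to a different index, the work splits cleanly across thousands of threads; this data-parallel shape is what deep-learning workloads such as elementwise ops and matrix multiplies exploit.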

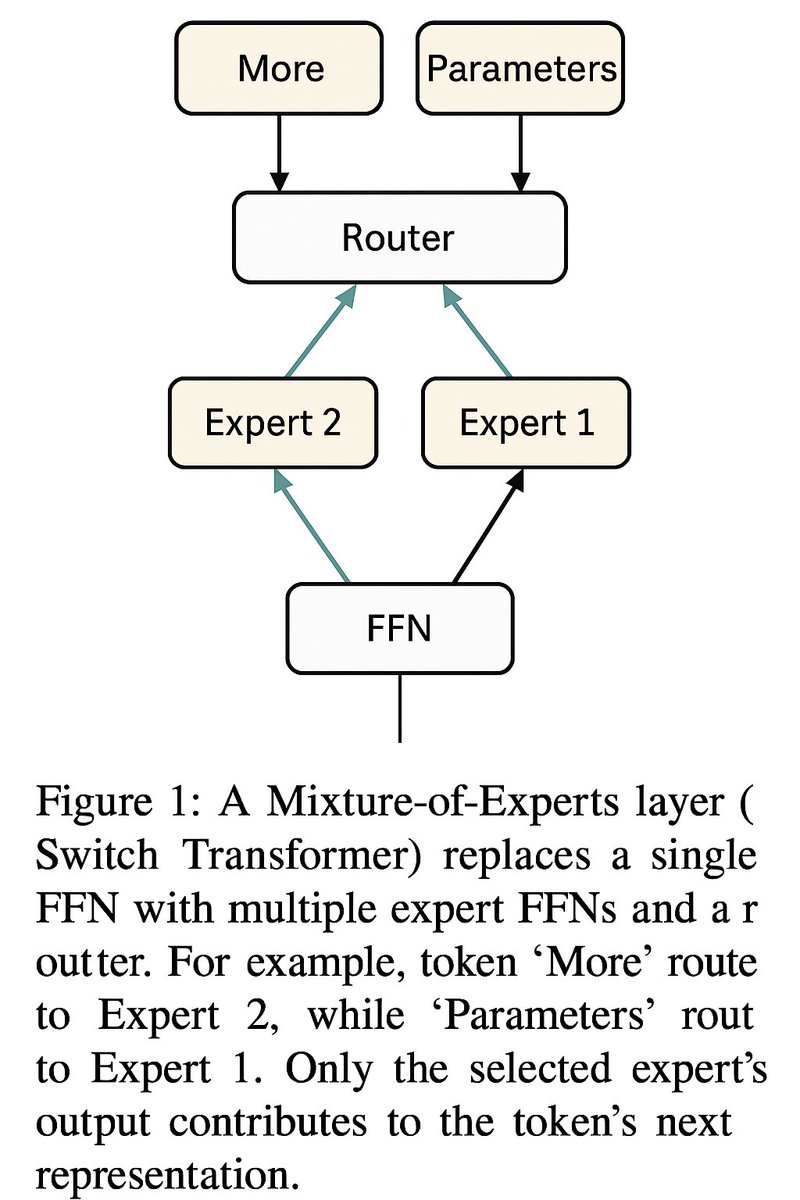

**Mixture-of-Experts (MoE) Architecture: Basics, Motivation, and How GPT-5 Implements It** **What are MoEs?** In a Mixture-of-Experts model, the dense subnetwork (typically the feed-forward network in a Transformer layer) is replaced by multiple parallel “expert” networks with…
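The post is cut off before it describes routing, so what follows is a generic sketch and not GPT-5's actual design, which the source does not detail. It assumes the widely used top-k gating scheme: a linear router scores every expert for a token, only the k highest-scoring experts run, and their outputs are combined using softmax weights computed over the selected scores. The sizes, random weights, and helper names (`moe_layer`, `expert_forward`) are all hypothetical.

```python
import math
import random

random.seed(0)
# Hypothetical sizes; generic top-k routing, not GPT-5's implementation.
D, NUM_EXPERTS, TOP_K = 4, 8, 2


def matvec(w, x):
    # w is a list of rows; returns the matrix-vector product w @ x.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rand_matrix(rows, cols):
    return [[random.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


# Each expert is a tiny feed-forward network: W2 · ReLU(W1 · x).
experts = [(rand_matrix(2 * D, D), rand_matrix(D, 2 * D)) for _ in range(NUM_EXPERTS)]
# The router (gate) is a linear layer producing one score per expert.
gate_w = rand_matrix(NUM_EXPERTS, D)


def expert_forward(expert, x):
    w1, w2 = expert
    hidden = [max(0.0, h) for h in matvec(w1, x)]
    return matvec(w2, hidden)


def moe_layer(x):
    logits = matvec(gate_w, x)
    # Keep only the top-k experts for this token (sparse activation).
    top = sorted(range(NUM_EXPERTS), key=lambda e: logits[e], reverse=True)[:TOP_K]
    # Softmax over the selected logits only, so the k weights sum to 1.
    m = max(logits[e] for e in top)
    exps = [math.exp(logits[e] - m) for e in top]
    total = sum(exps)
    weights = [v / total for v in exps]
    # Weighted sum of the chosen experts' outputs; the other experts never run.
    out = [0.0] * D
    for w, e in zip(weights, top):
        y = expert_forward(experts[e], x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top, weights


token = [0.5, -1.0, 0.25, 2.0]
y, chosen, weights = moe_layer(token)
print("experts used:", chosen, "weights:", [round(w, 3) for w in weights])
```

Only 2 of the 8 experts execute per token, which is the core appeal of MoE: total parameter count grows with the number of experts while per-token compute stays close to that of a single dense feed-forward block.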

**How the Model Context Protocol (MCP) Differs from REST APIs** The Model Context Protocol (MCP), introduced by Anthropic in late 2024, is an open standard enabling large language models (LLMs) to connect securely with external data sources and tools. Unlike LLMs with fixed…
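One concrete difference is the message shape. MCP frames its messages as JSON-RPC 2.0, and a client can discover a server's tools at runtime with `tools/list`, then invoke one by name with `tools/call`. A REST client, by contrast, usually hard-codes a resource path and HTTP verb per endpoint. This minimal sketch only builds the request payloads and does not connect to any server or transport; the tool name `get_weather`, its `city` argument, and the REST URL in the comment are hypothetical.

```python
import json


def jsonrpc_request(req_id, method, params=None):
    # MCP frames every request as a JSON-RPC 2.0 message.
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# 1) Discover what the server offers at runtime (no hard-coded endpoint list).
list_req = jsonrpc_request(1, "tools/list")

# 2) Invoke a tool by name with structured arguments.
#    "get_weather" and its "city" argument are hypothetical.
call_req = jsonrpc_request(
    2, "tools/call", {"name": "get_weather", "arguments": {"city": "Paris"}}
)

# For contrast, a REST client would bake the path and verb into its code, e.g.
# GET https://api.example.com/weather?city=Paris (hypothetical endpoint).

print(list_req)
print(call_req)
```

Because every tool call goes through the same method with a self-describing tool list, an LLM host can use a new server's capabilities without endpoint-specific client code.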

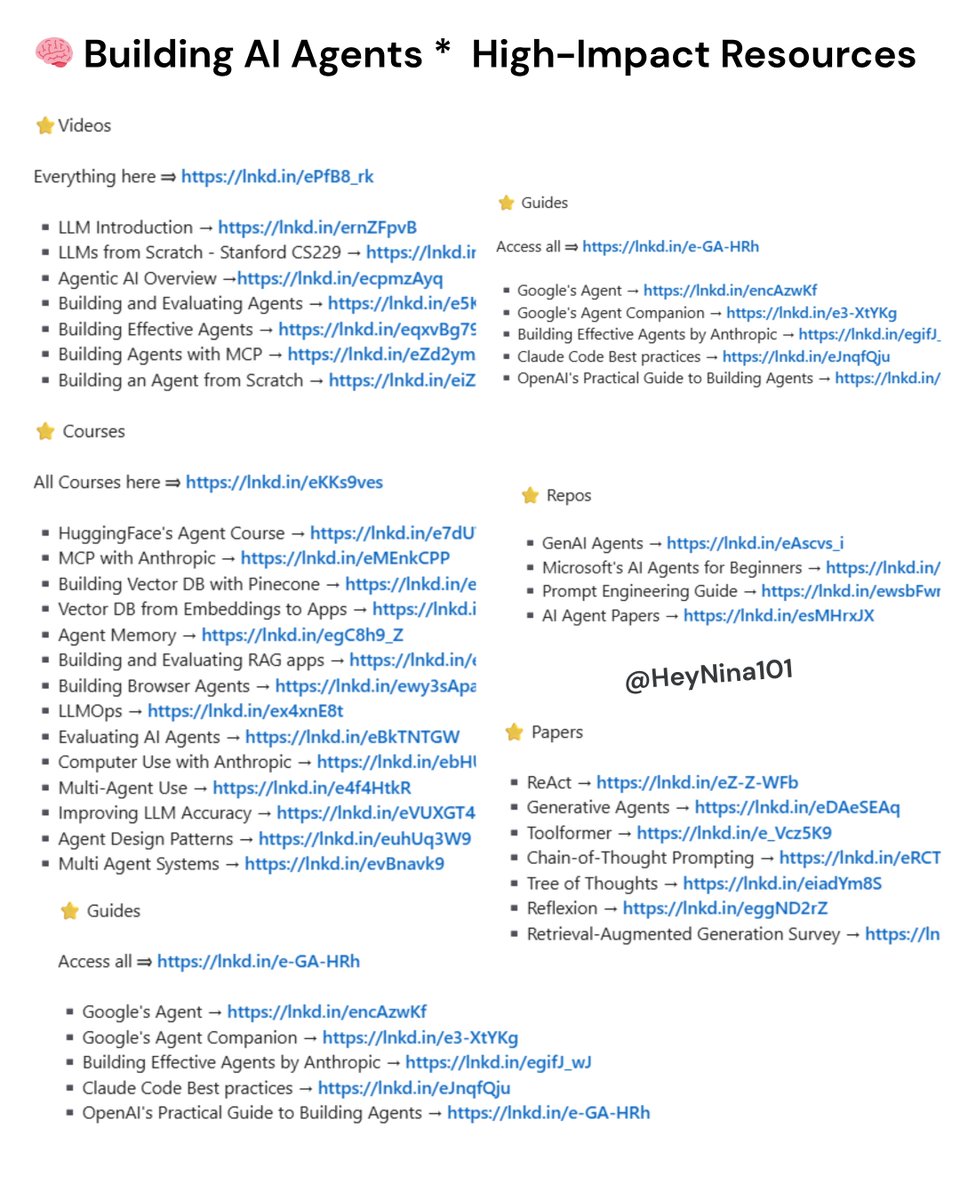

🚀 Everyone says "Learn AI," but where do you actually start? Here's an incredible resource compiling essential courses, papers, videos, and repos to kickstart your AI builder journey. Bookmark it! 🔗 x.com/HeyNina101/sta…

“Learn AI” is everywhere. But where do the builders actually start? Here’s the real path, the courses, papers and repos that matter. ⭐Videos Everything here ⇒ lnkd.in/ePfB8_rk ▪️LLM Introduction → lnkd.in/ernZFpvB ▪️LLMs from Scratch - Stanford CS229 →…

🧠 Google just launched "Deep Think" for Gemini 2.5 Pro! This new feature uses parallel thinking streams to tackle complex problems that need creative solutions. Available now for Google AI Ultra subscribers. Select 'Deep Think' in the prompt bar to try it out.

🚀 New open-source playbook just dropped: "The Ultra-Scale Playbook: Training LLMs on GPU Clusters" 📊 4,000+ scaling experiments on up to 512 GPUs Reading time: 2-4 days (worth it!) huggingface.co/spaces/nanotro…

Hi @Opera, I'm really interested in trying Opera Neon! Could I please get an access invite? Thanks in advance!

2/ Now let’s see Simular Pro in action. Launch week is hectic - and my kid wants a Labubu for her birthday. So I asked Simular Pro to compare listings and rank them. Within minutes, I got 30 legit ones - prices, links, selling points, all in a spreadsheet. Saved me hours.
