Rennes 2 la Rouge ("Red Rennes 2"), we're meeting tonight at 6 pm to discuss the explosive political situation. Going to Rennes 2 on the day the government falls… the revolution is near 😂✊🏼🇵🇸

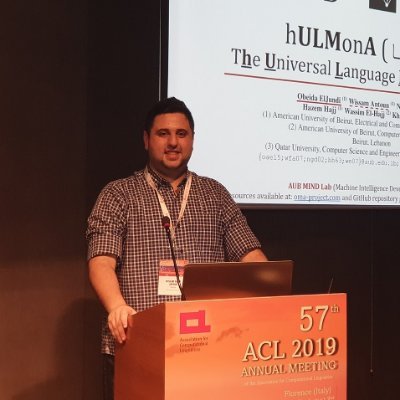

5) EuroBERT: Scaling Multilingual Encoders for European Languages w/ @N1colAIs @gisship @DuarteMRAlves @AyoubHammal @UndefBehavior @Fannyjrd_ @ManuelFaysse @peyrardMax @psanfernandes @RicardoRei7 @PierreColombo6 @tomaarsen - Poster session 5, Thu Oct 9, 11:00 AM – 1:00 PM

As you react to EMNLP rebuttals, please make sure to substantiate your stance on why the score you have chosen is justified. Keeping the information latent doesn't help authors understand your point to improve their work, and doesn't help the AC/SAC make a grounded and fair decision.

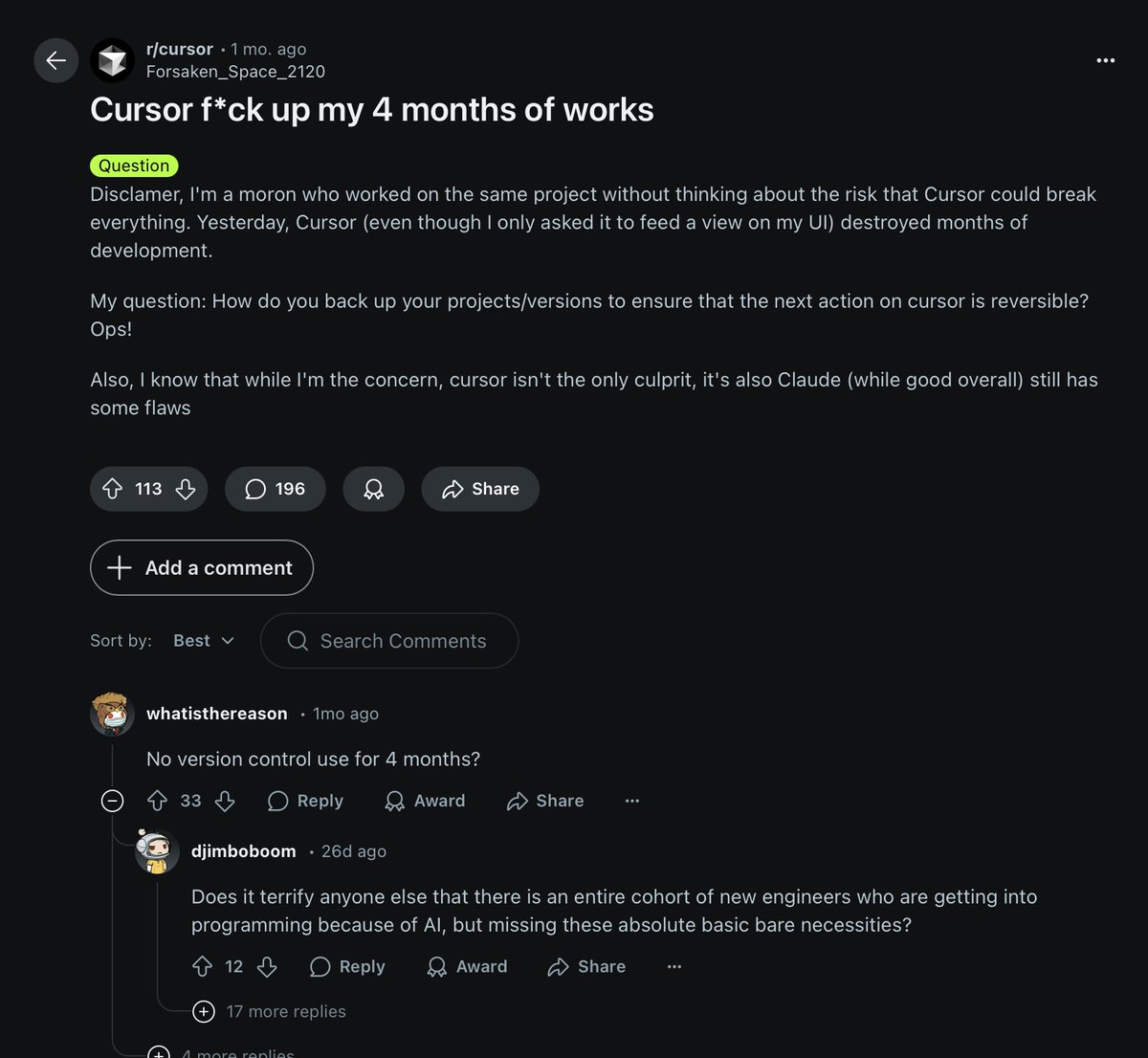

Simple example for how errors can creep into our papers through LLM use: I had a statistic for 2020. I googled the same statistic for 2023. AI overview tells me the statistic for 2023 & provides a link to support the claim. The link is to a 2023 article citing the 2020 statistic.

Having trouble choosing a track for your ARR / EMNLP submissions? Take a look at this blog post for more information: 2025.emnlp.org/track-changes/

youtube.com

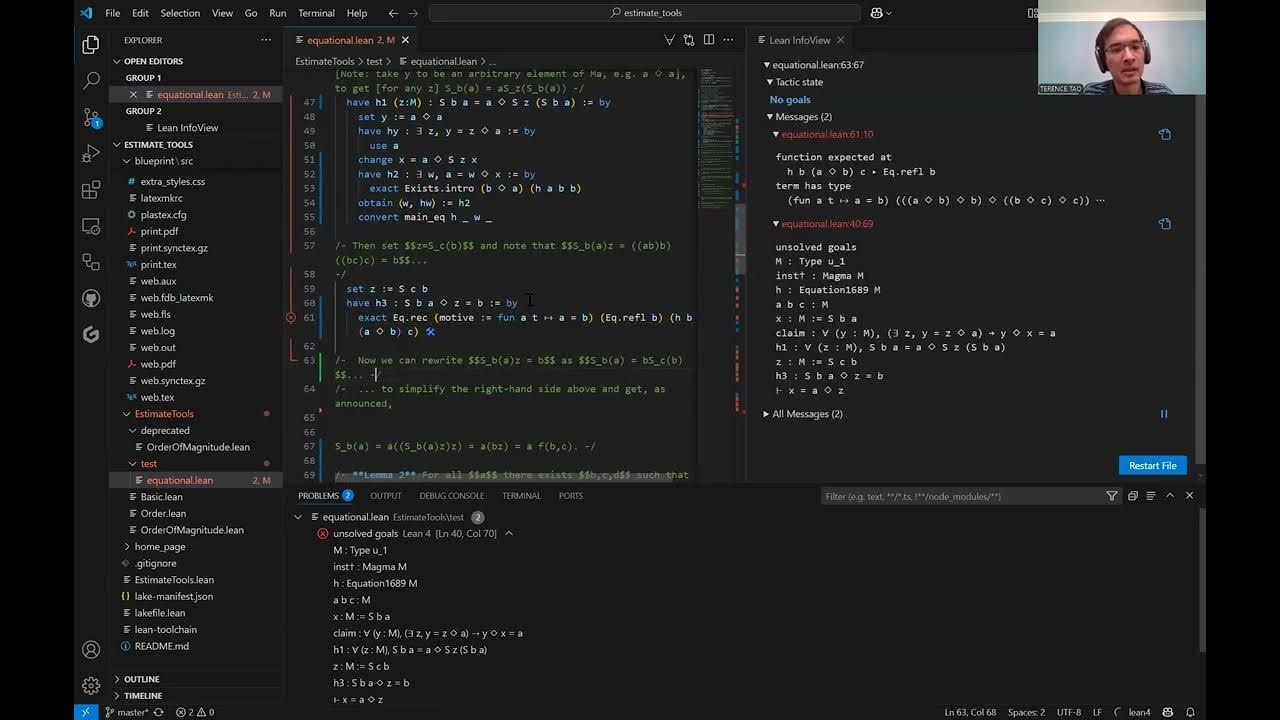

Formalizing a proof in Lean using GitHub Copilot and Canonical

If you submitted to ICML and TMLR as an author, where did you get higher review quality (grounded, fair, and helped improve your paper)? **Please decouple the ultimate fate of the paper from your judgement of quality.**

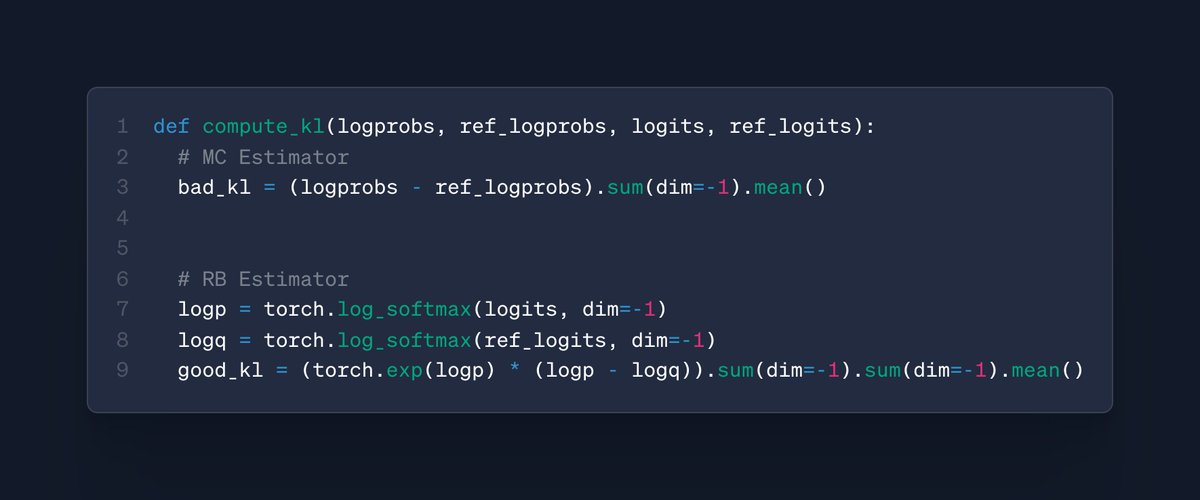

Current KL estimation practices in RLHF can generate high variance and even negative values! We propose a provably better estimator that only takes a few lines of code to implement. 🧵👇 w/ @xtimv and Ryan Cotterell paper: arxiv.org/pdf/2504.10637 code: github.com/rycolab/kl-rb
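To make the variance and negative-value point concrete, here is a minimal toy sketch. It is not taken from the linked paper or repository. It assumes the general Rao-Blackwellization idea of replacing each sampled log-ratio with the exact KL between the two next-token distributions at that step. The two "models" `P` and `Q`, the function `estimate_kl`, and all the numbers are hypothetical stand-ins for real language models.

```python
import math
import random
import statistics


def kl(p, q):
    """Exact KL(p || q) between two categorical distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def estimate_kl(p_model, q_model, length, n_samples, seed=0):
    """Return per-sequence Monte Carlo and Rao-Blackwellized KL estimates.

    p_model[prev] and q_model[prev] are next-token distributions given the
    previous token: a hypothetical toy stand-in for a language model's softmax.
    """
    rng = random.Random(seed)
    mc, rb = [], []
    for _ in range(n_samples):
        prev, mc_x, rb_x = 0, 0.0, 0.0
        for _ in range(length):
            p, q = p_model[prev], q_model[prev]
            # Rao-Blackwellized term: exact expectation over the next token.
            rb_x += kl(p, q)
            # Plain Monte Carlo term: log-ratio of the one sampled token.
            tok = rng.choices(range(len(p)), weights=p, k=1)[0]
            mc_x += math.log(p[tok] / q[tok])
            prev = tok
        mc.append(mc_x)
        rb.append(rb_x)
    return mc, rb


# Hypothetical 3-token "policy" P and "reference" Q (rows sum to 1).
P = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]]
Q = [[0.5, 0.3, 0.2], [0.2, 0.6, 0.2], [0.25, 0.25, 0.5]]

mc, rb = estimate_kl(P, Q, length=5, n_samples=2000, seed=0)
print("MC  mean/var/min:", statistics.fmean(mc), statistics.pvariance(mc), min(mc))
print("RB  mean/var/min:", statistics.fmean(rb), statistics.pvariance(rb), min(rb))
```

In this toy setup both estimators target the same quantity, but individual Monte Carlo samples can be negative, whereas every Rao-Blackwellized sample is a sum of exact KL terms and so cannot drop below zero.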

ARR May (EMNLP) cycle opens May 5th. Reminder: (1) All resubmissions from past cycles MUST be declared, or they will be desk-rejected (automated checks against past cycles apply); (2) The responsible checklist must be carefully completed, or the paper gets a desk reject.

Today I had my paper rejected by ICML. I don't like to complain about the free voluntary work that the AC and reviewers do, but this was one of the most careless reviews and AC we've had. Our rejection was based on this:

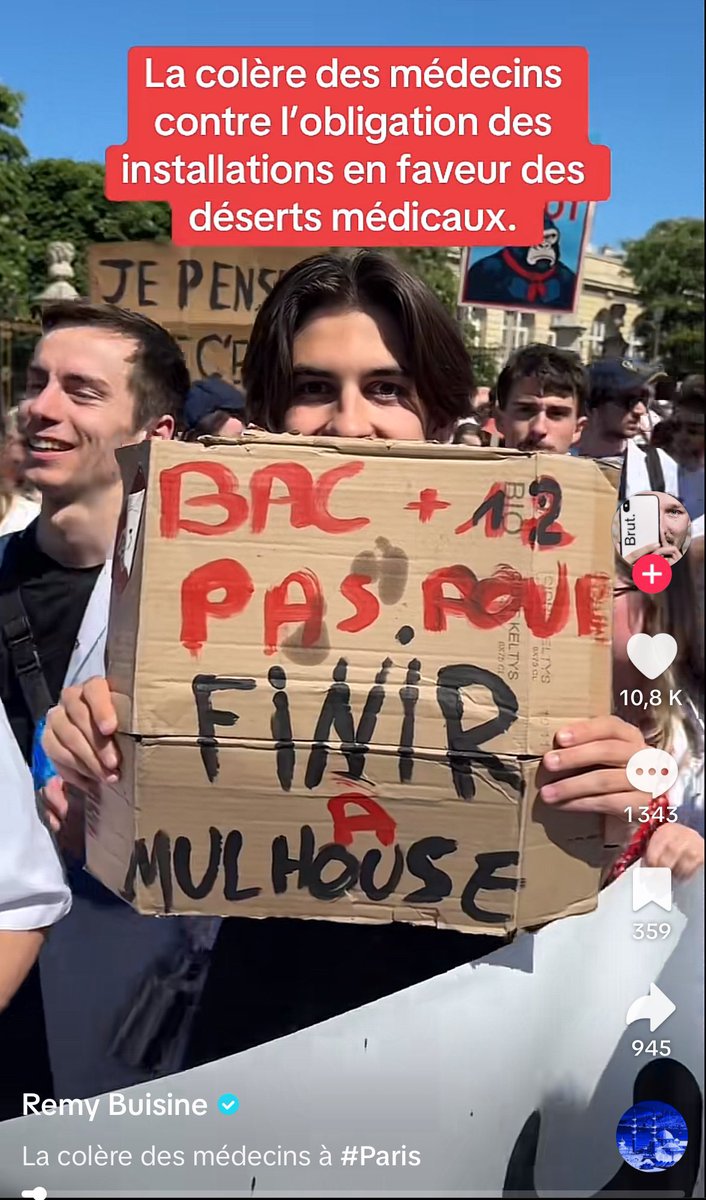

We have a problem with the sociology of medical students. To be able to walk around with a sign like that without your classmates telling you "dude, you do know Mulhouse is a really big city?" (and not really a medical desert, on top of that), you need one hell of an insular bubble.

ModernBERT or DeBERTaV3? What's driving performance: architecture or data? To find out, we pretrained ModernBERT on the same dataset as CamemBERTaV2 (a DeBERTaV3 model) to isolate architecture effects. Here are our findings:

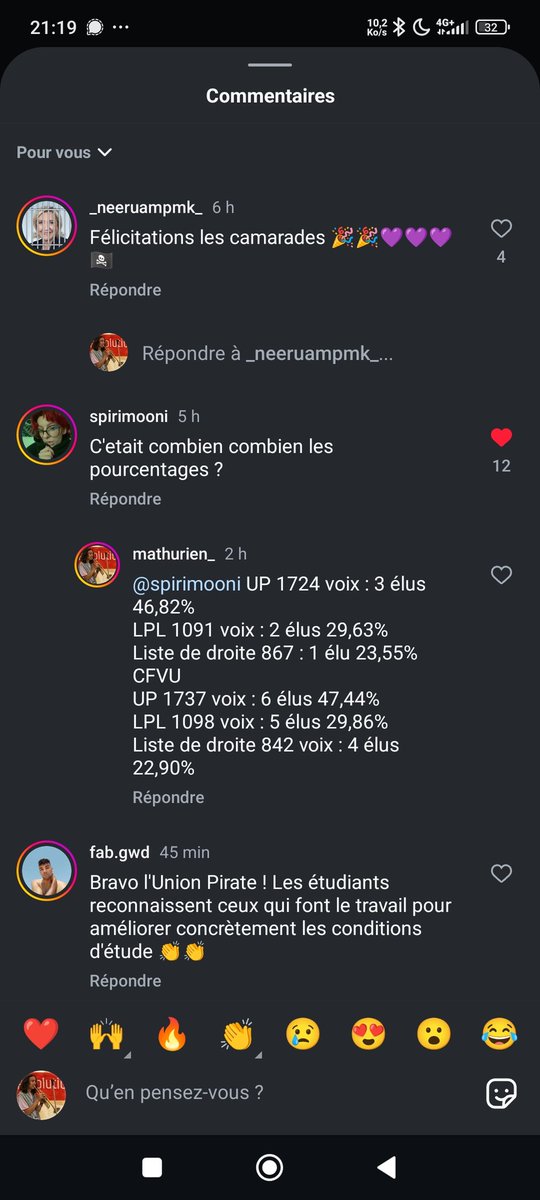

Hey team, why are you hiding my comment? Our results are public, after all ^^ (Left: from my account; right: from a friend's account)

📢 #ACL2025NLP Dear community! The reviewing deadline for ACL 2025 is approaching -- 23 March! We received over 8000 submissions, the highest number in ACL history. It is particularly important that you submit your reviews on time. A big thank you in advance for all your efforts!

If you are a recent graduate, please check this out: missing.csail.mit.edu, "The Missing Semester of Your CS Education"

>Transformer without normalization >Looks inside >Normalization to the l^∞ unit ball x.com/liuzhuang1234/…

New paper - Transformers, but without normalization layers (1/n)

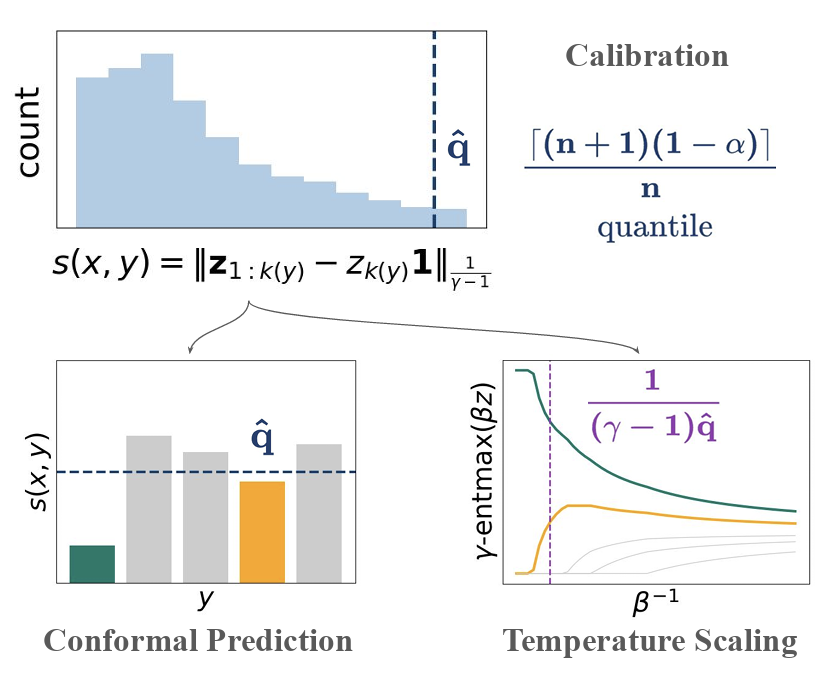

Our upcoming #AISTATS2025 paper is out: “Sparse Activations as Conformal Predictors” with Margarida Campos, João Calém, Sophia Sklaviadis, and @mariotelfig: arxiv.org/abs/2502.14773. This paper puts together two lines of research, dynamic sparsity and conformal prediction. 🧵

🇪🇺 One month after the AI Action Summit 2025 in Paris, I am thrilled to announce EuroBERT, a family of multilingual encoders exhibiting the strongest multilingual performance for tasks such as retrieval, classification and regression over 15 languages, mathematics and code. ⬇️ 1/6
