dron

@_dron_h

math/music/ai nerd | research @GoodfireAI | prev cambridge, bair, polaris | giving a semantics to the syntax

another very neat result! big day for interp ✨

Overall, our results add to a growing picture that feature geometry can be a lot more intricate than mere directions, with dynamics offering a signal to isolate this geometry. E.g., see video below, where we show TFA elicits user vs. assistant manifolds in chat data! (12/14)

this is such a neat result!

One of my favorite parts of this work was looking at the circuits our model implements. The model implements counting ("[" characters in python lists) through a single attention head, which does a simple average of a "[" token detector over the sequence dimension.
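A minimal sketch of the idea described above, not the model's actual circuit. It assumes a binary "[" detector and uniform causal attention (each position averages the detector over itself and all earlier positions). The function names and the toy token list are hypothetical.

```python
def bracket_detector(tokens):
    """Hypothetical per-token feature: 1.0 where the token is "[", else 0.0."""
    return [1.0 if tok == "[" else 0.0 for tok in tokens]


def uniform_causal_attention_mean(values):
    """Average the values over positions 0..t for each position t.

    This is what an attention head computes if it spreads its weight evenly
    over the prefix (an assumption made for this sketch).
    """
    out = []
    running = 0.0
    for t, v in enumerate(values):
        running += v
        out.append(running / (t + 1))
    return out


tokens = ["x", "=", "[", "[", "1", "]", ",", "["]
means = uniform_causal_attention_mean(bracket_detector(tokens))

# The head's output is the fraction of "[" tokens seen so far; rescaling by
# the prefix length recovers the running count.
counts = [round(m * (t + 1)) for t, m in enumerate(means)]
print(counts)
```

The averaged signal alone gives a density rather than a count, so any downstream use as a counter would need position information as well; how the real model handles that is not stated in the post.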

New paper! Language has rich, multiscale temporal structure, but sparse autoencoders assume features are *static* directions in activations. To address this, we propose Temporal Feature Analysis: a predictive coding protocol that models dynamics in LLM activations! (1/14)

New research: are prompting and activation steering just two sides of the same coin? @EricBigelow @danielwurgaft @EkdeepL and coauthors argue they are: ICL and steering have formally equivalent effects. (1/4)

How is memorized data stored in a model? We disentangle MLP weights in LMs and ViTs into rank-1 components based on their curvature in the loss, and find representational signatures of both generalizing structure and memorized training data

LLMs memorize a lot of training data, but memorization is poorly understood. Where does it live inside models? How is it stored? How much is it involved in different tasks? @jack_merullo_ & @srihita_raju's new paper examines all of these questions using loss curvature! (1/7)

my fav result: random forest SAE probes generalize best! this lets us handle real-world distribution shifts from small synthetic datasets to ever-changing production data. this is an area where interpretability seems to really shine. really thoughtful science from the team here

Why use LLM-as-a-judge when you can get the same performance for 15–500x cheaper? Our new research with @RakutenGroup on PII detection finds that SAE probes:

- transfer from synthetic to real data better than normal probes

- match GPT-5 Mini performance at 1/15 the cost

(1/6)

🔎Did someone steal your language model? We can tell you, as long as you shuffled your training data🔀. All we need is some text from their model! Concretely, suppose Alice trains an open-weight model and Bob uses it to produce text. Can Alice prove Bob used her model?🚨

i joined as a research fellow to train the largest-ever open source SAEs on R1. we don't mess around here! i had autonomy and the team's trust to go and do something real, with mentorship from an insanely talent-dense team. join us! you'll have real impact on our research.

Are you a high-agency, early- to mid-career researcher or engineer who wants to work on AI interpretability? We're looking for several Research Fellows and Research Engineering Fellows to start this fall.

i hope to never write another line of pandas again

Agents for experimental research != agents for software development. This is a key lesson we've learned after several months refining agentic workflows! More takeaways on effectively using experimenter agents + a key tool we're open-sourcing to enable them: 🧵

has been and will continue to be a fun and fruitful partnership!

We're excited to announce a collaboration with @MayoClinic! We're working to improve personalized patient outcomes by extracting richer, more reliable signals from genomic & digital pathology models. That could mean novel biomarkers, personalized diagnostics, & more.

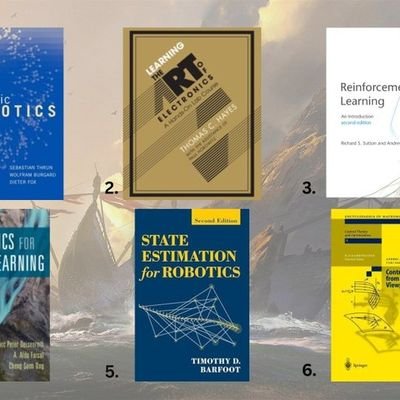

During my summer at Goodfire, I ended up thinking a bit about sparse autoencoder scaling laws, and whether the existence of "feature manifolds" could impact SAE scaling behavior, with @livgorton and @banburismus_ 🙏: arxiv.org/abs/2509.02565

Does making an SAE bigger let you explain more of your model's features? New research from @ericjmichaud_ models SAE scaling dynamics, and explores whether SAEs will pack increasingly many latents onto a few multidimensional features, rather than learning more features.

Excited to share our work digging into how Evo 2 represents species relatedness or phylogeny. Genetics provides a good quantitative measure of relatedness, so we could use it to probe the model and see if its internal geometry reflects it.

Arc Institute trained their foundation model Evo 2 on DNA from all domains of life. What has it learned about the natural world? Our new research finds that it represents the tree of life, spanning thousands of species, as a curved manifold in its neuronal activations. (1/8)

i saw early versions of this work when i was still in school and it made waiting to join this team very difficult... very cool results! @_MichaelPearce

What if adversarial examples aren't a bug, but a direct consequence of how neural networks process information? We've found evidence that superposition – the way networks represent many more features than they have neurons – might cause adversarial examples.

New research! Post-training often causes weird, unwanted behaviors that are hard to catch before deployment because they only crop up rarely - then are found by bewildered users. How can we find these efficiently? (1/7)
