adamSd

@adamoxySD

He who is easily humbled finds humiliation easy to bear; he who feels safe from punishment forgets his manners.

You might like

Tip: If you want to be happy and feel gratitude, help others. #happiness_recipe #recipe #happyness

How Java Compiles Source Code to Bytecode You write Java code in a .java file using classes, methods, and objects. The Java Compiler (javac) then translates this human-readable code into platform-independent bytecode, stored in a .class file. → Lexical Analysis: The compiler…

🚨 Google just KILLED N8N. I built 10 AI apps in 20 minutes — no code, no logic, no cost. Google Opal is here… and it’s FREE. N8N is finished. Here’s why 👇 Want the full guide? DM me.

Last year I wrote my own database from scratch. Here's some stuff I learned: 1. DBs are one of the few programs that still have to worry about "running out of memory," even today with so much RAM. Example: a user runs SELECT * FROM t, and t is 8GB in size, but you only have 4GB of RAM.

Veo 3.1 + n8n is insane 🤯 Google's new model lets you generate studio-quality UGC videos that look like they cost $1k to produce. Copy my entire automated workflow below 👇 Most e-commerce brands & creative agencies are stuck choosing between: → Expensive creator networks…

Function calling & MCP for LLMs, clearly explained (with visuals):

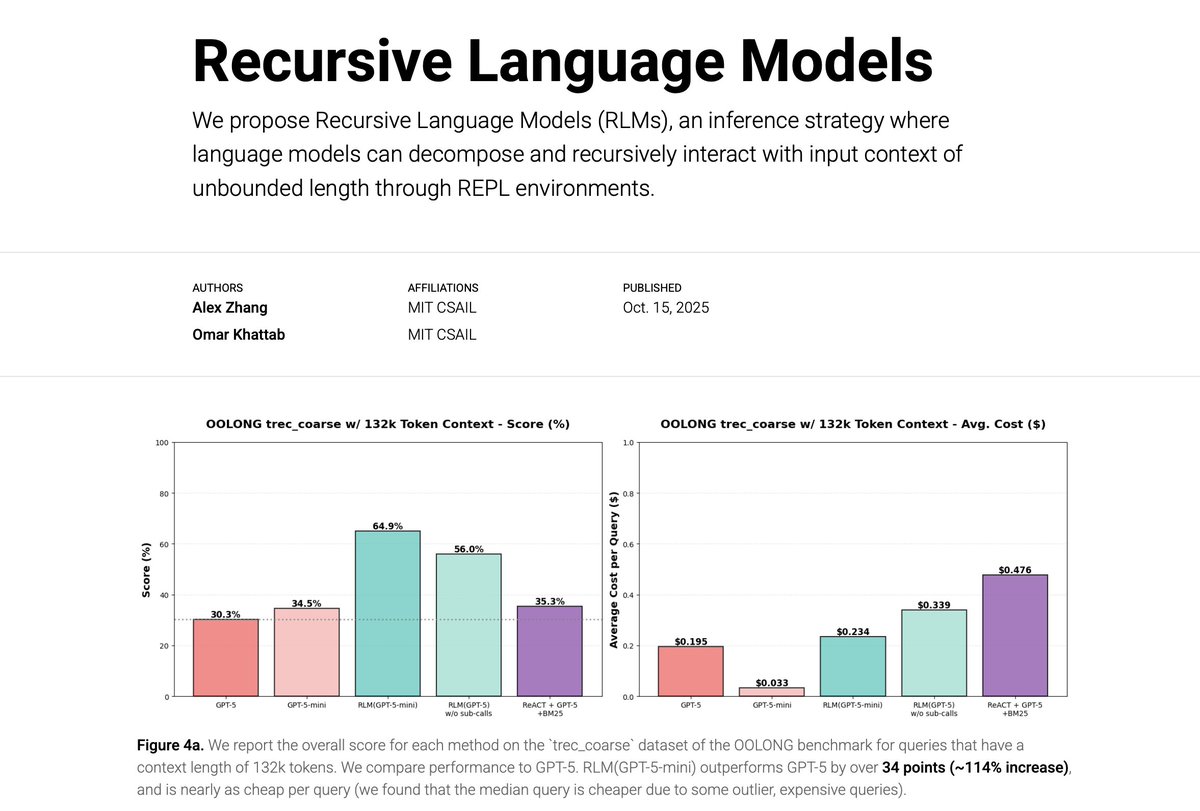

For a long time, we and others have thought about general-purpose inference scaling axes. But only CoT reasoning and ReAct-style loops stuck around. I think Recursive Language Models may be the next one. Your current LLM can already process 10M+ prompt tokens, recursively.

What if scaling the context windows of frontier LLMs is much easier than it sounds? We’re excited to share our work on Recursive Language Models (RLMs). A new inference strategy where LLMs can decompose and recursively interact with input prompts of seemingly unbounded length,…

Are you (or is someone you know) teaching AI / ML / Deep Learning this year? My forthcoming book (freely available at udlbook.com) will save you a lot of time. This thread will show you why.

A comforting thought: as long as I’m alive, I can begin again. And again. And again.

The @xAI Grok 2.5 model, which was our best model last year, is now open source. Grok 3 will be made open source in about 6 months. huggingface.co/xai-org/grok-2

A girl in my college classroom suddenly started shouting at me while looking at her semester exam report card. I was like, “What did I do this time that I don’t even know about?” So I walked over to her seat and calmly asked, “Why are you shouting at me?” Bro, the reply she…

IMPORTANT: like and reply to this tweet (to avoid bots) before requesting to join; it shows you read the instructions. Introducing our X community for local LLMs, self-hosting, and hardware. Requests without answers and without a like + reply will be denied.

The web runs on open source and we’re backing the people who build it. Announcing the Vercel OSS Program, summer cohort.

Google just open-sourced the LangExtract Python library! It uses LLMs to extract entities, attributes, and relations, with exact source grounding, from unstructured documents. Flexible LLM support (Gemini, OpenAI, Ollama). 100% open-source.

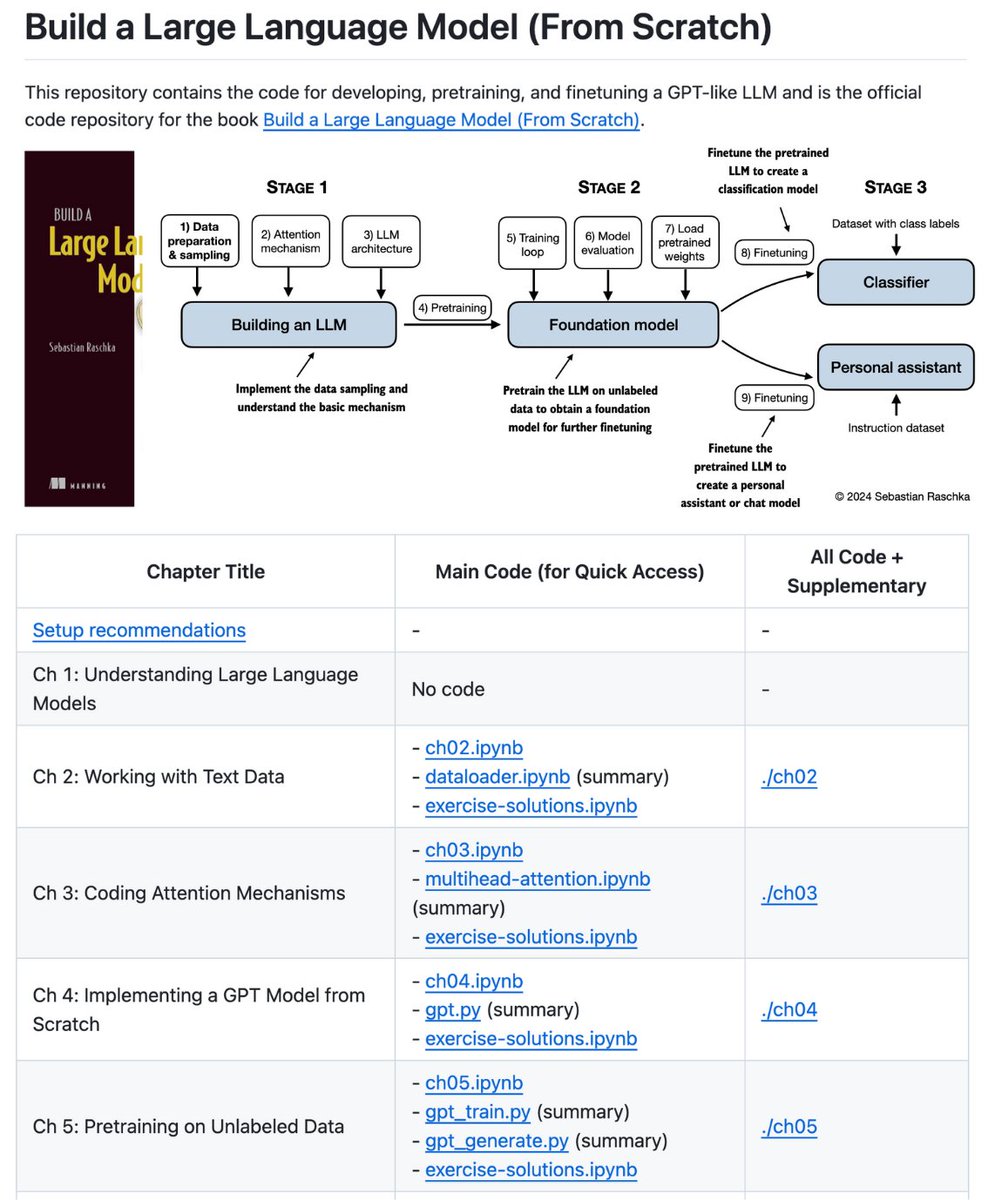

learn how to build an LLM from scratch, honestly. @rasbt's repo is really a gem. it has notebooks with diagrams and explanations that will teach you 100% of: > the attention mechanism > implementing a GPT model > pretraining and fine-tuning. my top recommendation for studying LLMs.

the startup game changed. old path: idea → raise money → hire → burn → hope. new path: audience → product → community → automate. old: 2% equity after dilution; new: 100% ownership forever. old: $0 salary for years; new: profitable month one. the choice is yours.

I just read "Foundations of LLMs 2025" cover to cover. It explained large language models so clearly that I can finally say: I get it. Here’s the plain-English breakdown I wish I had years ago:

we're all sleeping on this OCR model 🔥 dots.ocr is a new 3B model with SOTA performance, support for 100 languages, and a license that allows commercial use! 🤯 a single e2e model that extracts images and converts tables, formulas, and more into Markdown 📝

This is Nischa Shah. She's an ex-investment banker turned finance YouTuber with 1,750,000 subscribers. And just last week, she sat down with Steven Bartlett to discuss the system that makes her $200k in passive income. These are my top 8 takeaways:
