Adversarial Machine Learning

@adversarial_ML

I tweet about #MachineLearning and #MachineLearningSecurity.


Just read this paper. Short summary: when thinking about defenses against adversarial examples in ML, think carefully about the threat model. Nice paper. It also won the best paper award at ICML 2018 (@icmlconf). Congrats to the authors!! arxiv.org/abs/1802.00420

Adversarial robustness is not free: a decrease in natural accuracy may be inevitable. Silver lining: robustness makes gradients semantically meaningful (+ leads to adv. examples w/ GAN-like trajectories) arxiv.org/abs/1805.12152 (@tsiprasd @ShibaniSan @logan_engstrom @alex_m_turner)

Here's an article by @UofT about our new work on adversarial attacks on face detectors that help preserve your privacy. news.engineering.utoronto.ca/privacy-filter…

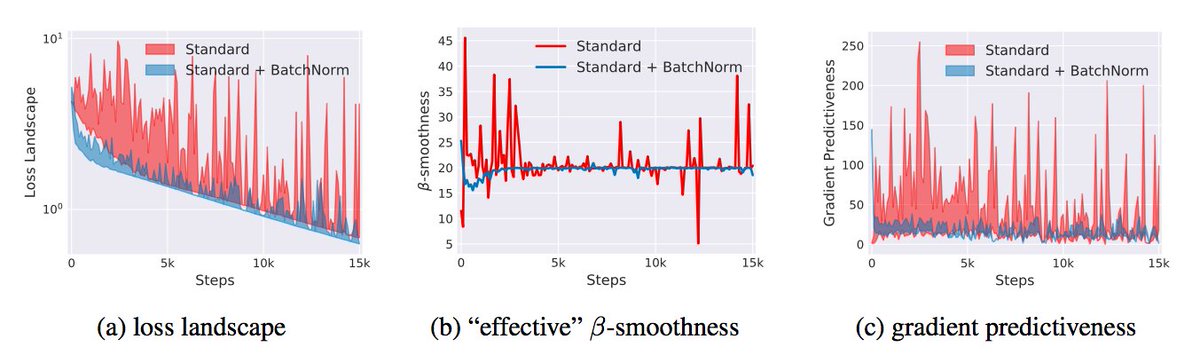

Think BatchNorm helps training by reducing internal covariate shift? Think again. (What BatchNorm *does* seem to do, both empirically and in theory, is smooth out the optimization landscape.) (with @ShibaniSan @tsiprasd @andrew_ilyas) arxiv.org/abs/1805.11604

Excited by this direction of formal investigation into adversarial defenses: "Adversarial examples from computational constraints", Bubeck et al. arxiv.org/abs/1805.10204

"No pixels are manipulated in this talk. No pandas are harmed..." A great way to differentiate your talk from the rest of the talks on adversarial examples... no more pandas please 😀

I'm speaking at the 1st Deep Learning and Security workshop (co-located with @IEEESSP) at 1:30 today: ieee-security.org/TC/SPW2018/DLS/ I'll discuss research into defenses against adversarial examples, including future directions. Slides and lecture notes here: iangoodfellow.com/slides/2018-05…

This paper shows how to make adversarial examples with GANs. No need for a norm ball constraint. They look unperturbed to a human observer but break a model trained to resist large perturbations. arxiv.org/pdf/1805.07894…
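For context: most attacks and defenses bound the perturbation to a norm ball, commonly an L∞ ball of radius ε around the clean input. That is the constraint the GAN-based approach above drops. Below is a minimal NumPy sketch of the usual projection step. It assumes inputs scaled to [0, 1], and `project_linf` is a hypothetical helper name, not code from the paper.

```python
import numpy as np

def project_linf(x_adv: np.ndarray, x: np.ndarray, eps: float) -> np.ndarray:
    """Project x_adv onto the L-infinity ball of radius eps around x,
    then clip to the valid pixel range [0, 1] (assumed input scaling)."""
    # Limit each coordinate of the perturbation to [-eps, eps].
    delta = np.clip(x_adv - x, -eps, eps)
    # Keep the perturbed input inside the valid pixel range.
    return np.clip(x + delta, 0.0, 1.0)

# Usage: two coordinates move too far and get pulled back to the ball/box.
x = np.array([0.2, 0.5, 0.9])
x_adv = np.array([0.5, 0.45, 1.2])
print(project_linf(x_adv, x, eps=0.1))
```

Both constraints are coordinate-wise boxes, so clipping the perturbation first and then the pixel values gives the exact projection onto their intersection, provided the clean input already lies in [0, 1].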

LaVAN: Localized and Visible Adversarial Noise. A method to generate adversarial noise that is confined to a small, localized patch of the image without covering any of the main objects in the image. arxiv.org/abs/1801.02608
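The masking step behind a localized patch can be written as x' = (1 - m) ⊙ x + m ⊙ p, where m is 1 inside the patch region and 0 elsewhere. Below is a minimal NumPy sketch of that step. It assumes an H x W x C float image, and `apply_patch` is a hypothetical helper. It shows only how a patch is pasted in, not how LaVAN optimizes the patch contents.

```python
import numpy as np

def apply_patch(image: np.ndarray, patch: np.ndarray, top: int, left: int) -> np.ndarray:
    """Return a copy of `image` (H x W x C) with `patch` (h x w x C) pasted
    at (top, left); pixels outside the patch region are left untouched."""
    h, w = patch.shape[:2]
    # Binary mask m: 1 inside the patch region, 0 elsewhere (broadcasts over C).
    mask = np.zeros(image.shape[:2] + (1,), dtype=image.dtype)
    mask[top:top + h, left:left + w] = 1.0
    # Place the patch on a canvas the same size as the image.
    canvas = np.zeros_like(image)
    canvas[top:top + h, left:left + w] = patch
    # x' = (1 - m) * x + m * p
    return (1.0 - mask) * image + mask * canvas

# Usage: paste a 2x2 black patch into an 8x8 white RGB image.
img = np.ones((8, 8, 3))
patched = apply_patch(img, np.zeros((2, 2, 3)), top=1, left=1)
```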

Two papers accepted to ICML 2018, both on adversarial ML. Congrats to all my amazing co-authors. The arXiv versions of the papers are up, but we will update them soon based on reviewer comments. arXiv versions: arxiv.org/abs/1711.08001 and arxiv.org/abs/1706.03922

Securing Distributed Machine Learning in High Dimensions arxiv.org/abs/1804.10140 #MachineLearningSecurity #AdversarialML

IBM Ireland just released "The Adversarial Robustness Toolbox: Securing AI Against Adversarial Threats". The library enables rapid crafting and analysis of attack and defense methods for machine learning models. ibm.com/blogs/research… #MachineLearningSecurity #AdversarialML
