Machine Learning Dept. at Carnegie Mellon

@mldcmu

The top education and research institution in the 🌎 for #AI and #machinelearning | Research → http://blog.ml.cmu.edu | Learn more ↓

I'm teaching a new "Intro to Modern AI" course at CMU this Spring: modernaicourse.org. It's an early-undergrad course on how to build a chatbot from scratch (well, from PyTorch). The course name has bothered some people – "AI" usually means something much broader in academic…

Neat framework to unify and understand consistency-like models by @nmboffi, @msalbergo and Eric Vanden-Eijnden !

Consistency models, CTMs, shortcut models, align your flow, mean flow... What's the connection, and how should you learn them in practice? We show they're all different sides of the same coin connected by one central object: the flow map. arxiv.org/abs/2505.18825 🧵(1/n)

A nice application of our NeuroAI Turing Test! Check out @cogphilosopher's thread for more details on comparing brains to machines!

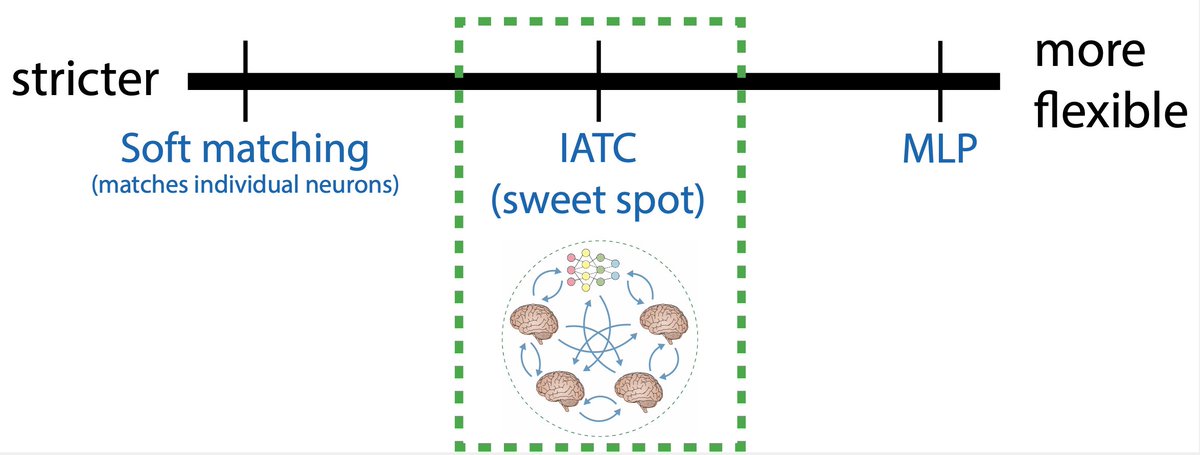

1/x Our new method, the Inter-Animal Transform Class (IATC), is a principled way to compare neural network models to the brain. It's the first to ensure both accurate brain activity predictions and specific identification of neural mechanisms. Preprint: arxiv.org/abs/2510.02523

A closed door looks the same whether it pushes or pulls. Two identical-looking boxes might have different centers of mass. How should robots act when a single visual observation isn't enough? Introducing HAVE 🤖, our method that reasons about past interactions online! #CORL2025

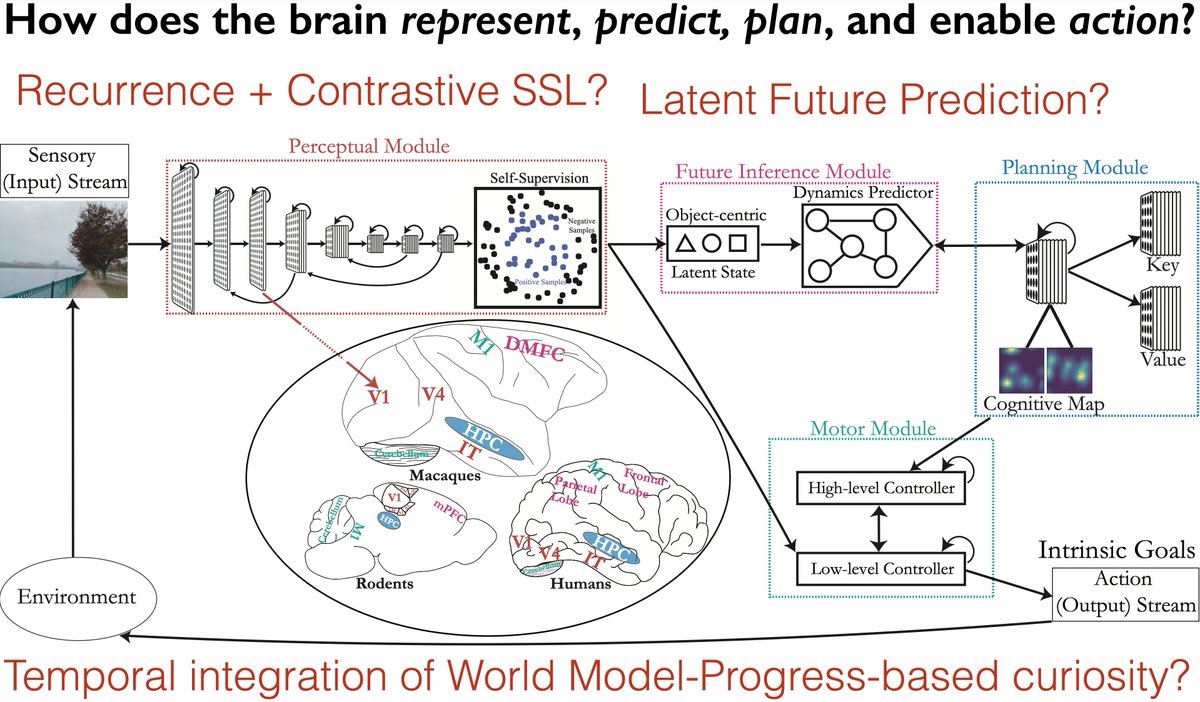

Recent discussions (e.g. @RichardSSutton on @dwarkesh_sp’s podcast) have highlighted why animals are a better target for intelligence — and why scaling alone isn’t enough. In my recent @CMU_Robotics seminar talk, “Using Embodied Agents to Reverse-Engineer Natural Intelligence”,…

CMU hacking team the Plaid Parliament of Pwning pwns again, wins fourth straight and record ninth overall DEF CON Capture-the-Flag title cylab.cmu.edu/news/2025/08/1…

Thank you @google for the ML and Systems Junior Faculty Award! This award is for work on sparsity, and I am excited to continue this work focusing on mixture of experts. We might bring big MoEs to small GPUs quite soon! Stay tuned! Read more here: cs.cmu.edu/news/2025/dett…

📢Introducing the Alignment Project: A new fund for research on urgent challenges in AI alignment and control, backed by over £15 million. ▶️ Up to £1 million per project ▶️ Compute access, venture capital investment, and expert support Learn more and apply ⬇️

blog.ml.cmu.edu/2025/07/08/car… Check out our latest post on CMU @ ICML 2025!

.@BNYglobal’s Leigh-Ann Russell and @CarnegieMellon’s Zico Kolter took the #realinsite mainstage for an exciting conversation on the future of #AI in wealth management. The key to successful integration? It's all about systems that 🤖 react, 🧠 think and 🔗 interact.

1/6 🚀 Excited to share that BrainNRDS has been accepted as an oral at #CVPR2025! We decode motion from fMRI activity and use it to generate realistic reconstructions of videos people watched, outperforming strong existing baselines like MindVideo and Stable Video Diffusion.🧠🎥

Our first NeuroAgent! 🐟🧠 Excited to share new work led by the talented @rdkeller, showing how autonomous behavior and whole-brain dynamics emerge naturally from intrinsic curiosity grounded in world models and memory. Some highlights: - Developed a novel intrinsic drive…

1/ I'm excited to share recent results from my first collaboration with the amazing @aran_nayebi and @Leokoz8! We show how autonomous behavior and whole-brain dynamics emerge in embodied agents with intrinsic motivation driven by world models.

Virginia Smith, the Leonardo Associate Professor of Machine Learning, has received the Air Force Office of Scientific Research 2025 Young Investigator award. cs.cmu.edu/news/2025/smit…

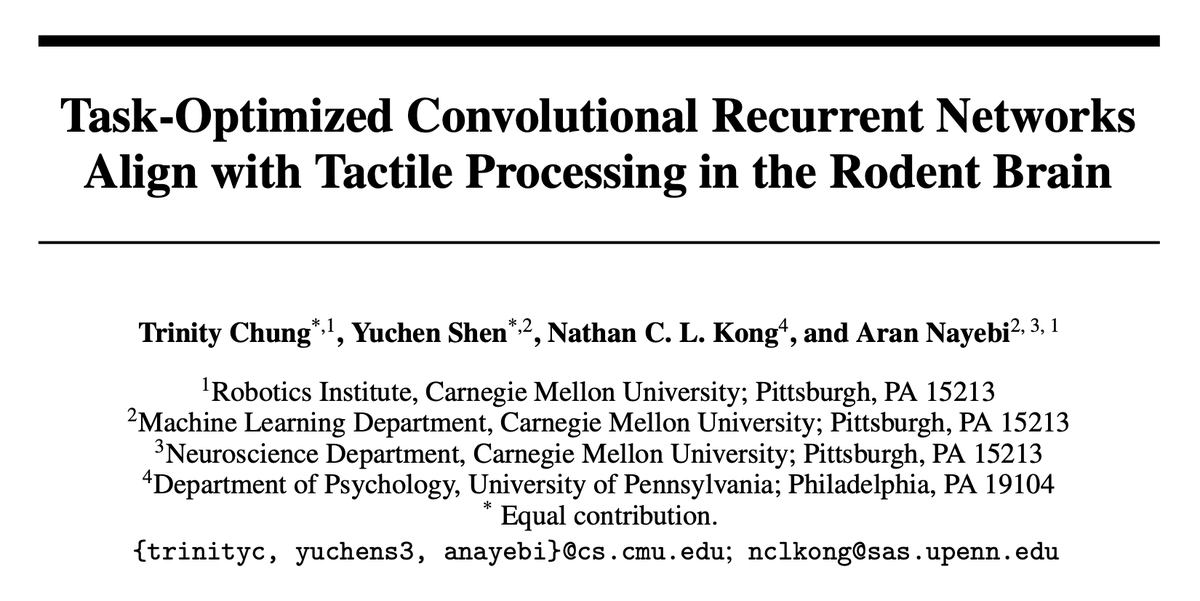

Check out our new work exploring how to make robots sense touch more like our brains! Surprisingly, ConvRNNs aligned best with mouse somatosensory cortex and even passed the NeuroAI Turing Test on current neural data. We also developed new tactile-specific augmentations for…

1/ What if we make robots that process touch the way our brains do? We found that Convolutional Recurrent Neural Networks (ConvRNNs) pass the NeuroAI Turing Test in currently available mouse somatosensory cortex data. New paper by @AlexShenSyc @NathanKong @aran_nayebi and me!

Really thrilled to receive an #NVIDIADGX B200 from @nvidia. Looking forward to cooking with the beast. Together with an amazing team at the CMU Catalyst group, @BeidiChen @Tim_Dettmers @JiaZhihao @zicokolter, we are looking to innovate across the entire stack, from model to instructions.

Huge thank you to @NVIDIADC for gifting a brand new #NVIDIADGX B200 to CMU’s Catalyst Research Group! This AI supercomputing system will afford Catalyst the ability to run and test their work on a world-class unified AI platform.

Thanks @NVIDIADC for the DGX B200 machine for the CMU Catalyst group! I'm perhaps already a bit too enthralled by it in the photos...

Excited to see what the MLD Faculty and Students in the Catalyst Research Group will do with this brand new #NVIDIADGX B200. Many thanks to @NVIDIADC from all of us at #CMU! @zicokolter @tqchenml

As a long-time fan of @pgmid's "Brain Inspired" podcast, it was an honor to be invited on to talk about NeuroAgents, our update to the Turing Test, and AI safety at the end. Coincidentally recorded on my birthday, no less! Check it out here 👇

In this “Brain Inspired” episode, @aran_nayebi joins @pgmid to discuss his reverse-engineering approach to build autonomous artificial-intelligence agents. thetransmitter.org/brain-inspired…

1/ 🧵👇 What should count as a good model of intelligence? AI is advancing rapidly, but how do we know if it captures intelligence in a scientifically meaningful way? We propose the *NeuroAI Turing Test*—a benchmark that evaluates models based on both behavior and internal…

Introducing *ARC‑AGI Without Pretraining* – ❌ No pretraining. ❌ No datasets. Just pure inference-time gradient descent on the target ARC-AGI puzzle itself, solving 20% of the evaluation set. 🧵 1/4
