Alignment Lab AI

@alignment_lab

Devoted to addressing alignment. We develop state of the art open sourced AI. https://discord.gg/Zb9Yx6BAeK http://Alignmentlab.ai


check us out on @ToolUseAI with @MikeBirdTech talking about Senter, science, and alignment! reach out to us and get Senter, and have your own personal AI workstations at our discord: discord.gg/TmSR5unjek and pop in to chat later tonight on the tool use discord!…


The team from @alignment_lab came on to talk about why open source AI will win and shared some exciting things they've been working on

im not typically one to talk too much about prompting, but i did stumble on a banger for gpt5.1 "If i go to the store and ask for chocolate chips, but the lazy cashier gives me "chocolate flavored chips" hoping i wont notice. And when i call them out, they're like "this is…

after what i saw today, i am never going to trust a startup with my crm data again luckily it only takes 20 minutes to vibecode your own crm

All the great breakthroughs in science are, at their core, compression. They take a complex mess of observations and say, "it's all just this simple rule". Symbolic compression, specifically. Because the rule is always symbolic -- usually expressed as mathematical equations. If…

claude code web is decent but i'm not going to use it because it creates commits as itself so i can't take credit for the work

there are dozens or perhaps a couple hundred ex-{OpenAI, xAI, Google DeepMind} researchers founding companies in the current climate there are, as far as i know, zero people leaving to found startups out of Anthropic really makes you think

i passed!

When you see the solution to AGI you will find that it was in fact so straightforward as to be obvious, and that it could have been developed decades ago

The most elegant solutions look inevitable in retrospect. They aren't some complex chain of tricks you've engineered out of nothing, but rather a simple abstraction that faithfully mirrors the shape of the problem.

"there's nothing interesting on arxiv these days!" - the words of an uncurious mind i have personally been blown away by the volume of interesting papers posted over the last few months, and eagerly following daily digests here are some papers i enjoyed the most: -…

Wow! Big if true

We've trained an unsupervised language model that can generate coherent paragraphs and perform rudimentary reading comprehension, machine translation, question answering, and summarization — all without task-specific training: blog.openai.com/better-languag…

oh this makes so much sense, no wonder its been impossible to get to write any code lately, what are you guys back to 512 token windows again? i thought everyone was on the same page that this is an ineffective and not useful strategy for reducing context issues i cant believe…

good advice

when people fall behind and are spiraling, they have a tendency to hide their failures, downplay their problems. many times being extremely honest encourages others to come out of the woodwork and help you, or at least to price you accurately so they don't add to your stress

there should be an option to always allow messages from followers, choosing between bots or filtering low quality messages means that either way i risk missing reach outs, and the entire purpose of this account is to be accessible to people publicly

x.com/i/grok/share/4… its not perfect, but its very flattering, and as someone who is very explicitly aware of sycophancy as a feature in ai, im happy forgetting that for a moment just to feel proud about the impacts my friends and i have had over the last couple of years.

The single most lucrative thing to work on in AI today - develop small LLMs that are 10x cheaper than GPT-5 Big AI labs are not paying attention to this multi-trillion-dollar space. The field is wide-open

has claude been down for 3 days straight or am i literally only checking it when its down during intermittent downtime?

state of open-source AI in 2025: - almost all new open American models are finetuned Chinese base models - we don’t know the base models’ training data - we have no idea how to audit or “decompile” base models who knows what could be hidden in the weights of DeepSeek 🤷♂️

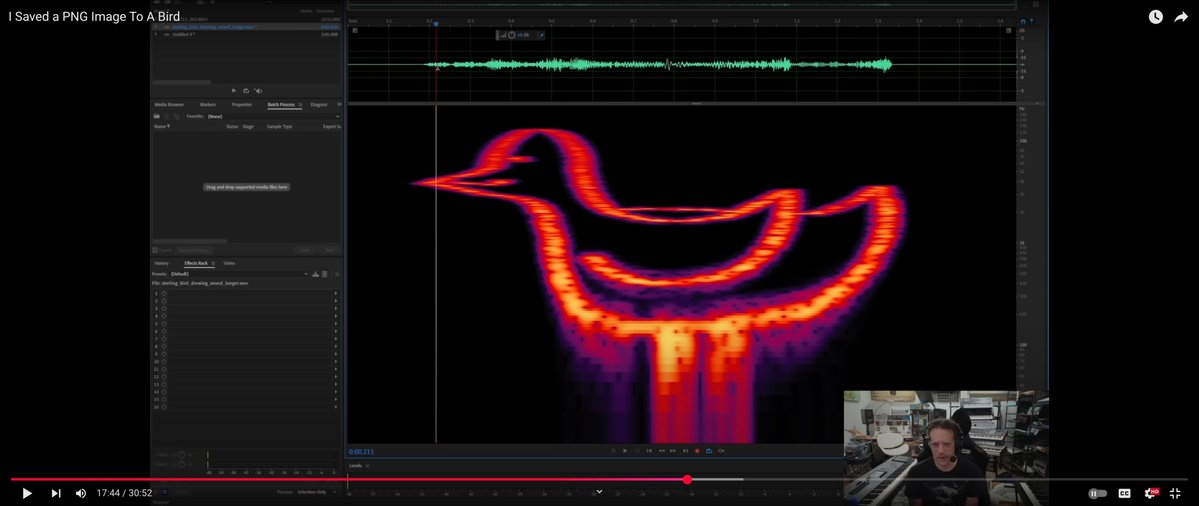

This is one of the craziest ideas I've ever seen. He converted a drawing of a bird into a spectrogram (PNG -> Soundwave) then played it to a Starling who sang it back reproducing the PNG. Using the bird's brain as a hard drive with 2mbps read write speed. youtube.com/watch?si=HMtVd…

has anyone tried the forwardforward/controlvector/abliteration diffing using chatgpt responses as the negative signal against a conversation of what you would consider the highest quality? if float space is as dynamic as it seems to be, this should be a valid way of encoding…

whats the verdict on that thing chatgpt does when its like 'if you want {thing you said you wanted} next steps are: {list of things it knew it should do but chose not to}' as you may have guessed, i find this obnoxious
