Amir H. Kargaran

@amir_nlp

On job market / 🤖 PhD student @CisLmu / 🛠️ Multilingual NLP / Previous: Intern @huggingface. My views!

Excited to share our latest work, “KurTail: Kurtosis-based LLM Quantization”, which will be presented as a poster at EMNLP 2025! You can find our paper here: aclanthology.org/2025.findings-…

I'm tired of hallucinated citations.* I never use AI for literature review, and I always, especially as I gain more experience, verify every paper I cite. *As a reviewer, if I encounter such issues, I will view the paper negatively, as they reflect a lack of academic integrity.

Nice feature from @openreviewnet. You can track publication versions across different venues (e.g., arXiv, ICLR, ACL)!

It's been a while, but I'm glad that my DeepMind M2L lecture on Transformers is still proving useful to the community. My goal was to explain Transformers through the lens of images and graphs, a perspective I personally helped shape in earlier days. youtu.be/IvWHJvasGb0?si…

2023 1.3 Transformers - Sahand Sharifzadeh

📢 I'm not physically attending #COLM2025 @COLM_conf, but I'm organizing @MeltWorkshop ✨Multilingual and Equitable Language Technologies✨ 📍Rm 520D 📅 Oct 10 (Fri) 🔗 melt-workshop.github.io Please stop by our workshop if you're working on multilingual/multicultural LLMs 🙌

We're in 🇨🇦 for @COLM_conf! Come talk to me and @HKydlicek on Wednesday at the FineWeb2 poster session (Session 3, Poster #58). @LoubnaBenAllal1 will be at Session 5, Poster #23 (SmolLM2).

What do you call those units of semantic text the LLM compresses English and German into when you brag about the compression rate? It's not UTF-8 bytes... there's a word for it, maybe starts with a T?

- SmolLM3
- GLM-4.5
- NVIDIA-Nemotron-Nano

These are just some of the recent open-source releases relying on 🥂 FineWeb2 for their multilingual data. Proud that the community trusts us for their data supply 🫡

Molecules speak in atoms and bonds. LLMs can learn that language. Even with SOTA #denovo design, our largest molecular LLM study finds a plot twist: early saturation, weak scaling, and proxy metrics that mislead on real tasks! Led by @kchitsaz and @roshan_msb 🧵 More in thread:

Crazy how much attention OCR is getting. I couldn't find any that work well with less common scripts. Stress-test it with Cuneiform, at least it's part of Unicode!

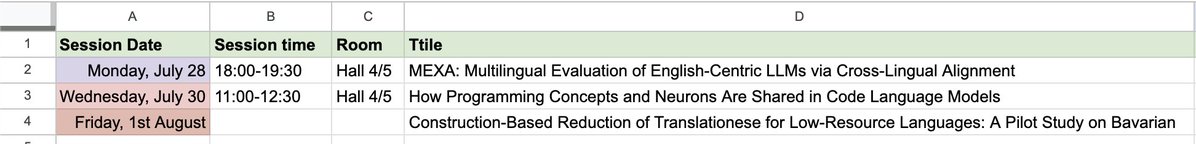

I'm at the @aclmeeting to present our papers on multilingual evaluation, programming languages, and translation! #ACL2025 Feel free to stop by to exchange ideas and discuss! I’m also on the job market. If you think there’s a potential fit, I’d love to hear from you.

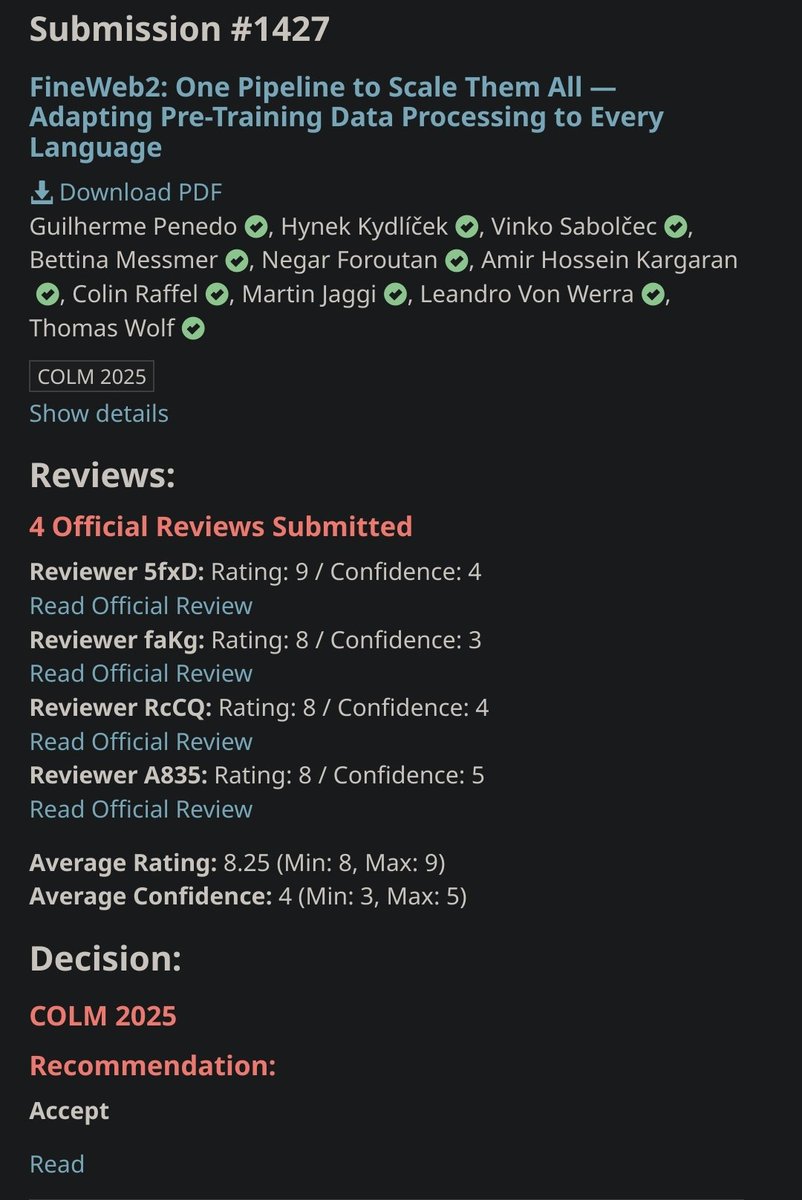

FineWeb2 🥂 has been accepted to @COLM_conf See you in October 🇨🇦

We have finally released the 📝paper for 🥂FineWeb2, our large multilingual pre-training dataset. Along with general (and exhaustive) multilingual work, we introduce a concept that can also improve English performance: deduplication-based upsampling, which we call rehydration.

Are you working on multilingual, multicultural #LLM? Interested in diverse & inclusive language modeling? 😎 Stay tuned for our MELT workshop, co-located with #COLM2025 🔗 melt-workshop.github.io 🫶 We welcome 2-page (extended abstract), 4-page (short), and 8-page (long) papers, as well as talented reviewers!

🌍 ✨ Introducing Melt Workshop 2025: Multilingual, Multicultural, and Equitable Language Technologies A workshop on building inclusive, culturally-aware LLMs! 🧠 Bridging the language divide in AI 📅 October 10, 2025 | Co-located with @COLM_conf 🔗 melt-workshop.github.io

Consider submitting your multilingual NLP work to the MELT workshop @ COLM 2025: melt-workshop.github.io Deadline: June 23

The full list of COLM 2025 workshops is now online! Most deadlines are June 23, but check each workshop's CFP for the details.
