Sarath Chandar

@apsarathchandar

Associate Professor @polymtl and @Mila_Quebec; Canada CIFAR AI Chair; Machine Learning Researcher. Pro-bono office hours: https://t.co/tK69DKRf9N?amp=1

قد يعجبك

CoLLAs 2026 website is up!!!🤩 Thrilled to serve as a program chair this year. We encourage submissions on all facets of adaptation of ML models, including unlearning training data or high level concepts/behaviours. Submit your best work to our archival or non-archival tracks!

The CoLLAs 2026 website is LIVE! We invite researchers to explore all facets of ML adaptation, from incorporating new capabilities during continuous training to efficiently removing outdated or harmful data. 📍 Location: Bucharest, Romania 📅 Date: Sep 14–17, 2026 🔗 Read the…

Do you care about designing ML systems that are adaptive, continually learning, and improving? We invite you to submit your best work to the fifth @CoLLAs_Conf, this time happening in Romania! Led by the excellent program chairs @Eleni30fillou and @mundt_martin!

The CoLLAs 2026 website is LIVE! We invite researchers to explore all facets of ML adaptation, from incorporating new capabilities during continuous training to efficiently removing outdated or harmful data. 📍 Location: Bucharest, Romania 📅 Date: Sep 14–17, 2026 🔗 Read the…

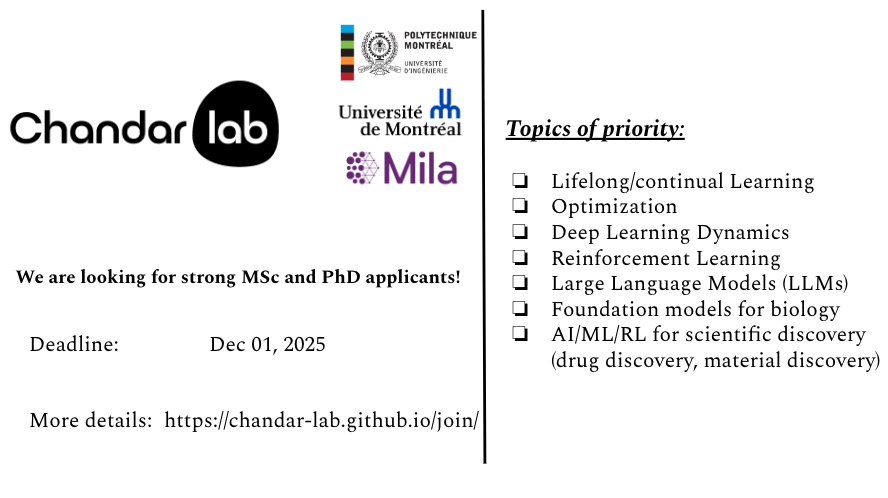

If you are attending #NeurIPS2025 and interested in the following postdoc positions, reach out to me here or by email! I would be happy to chat during the conference. 1. Postdoc in RL. 2. Postdoc in foundation models for biology.

I will be at @NeurIPSConf this week! Do reach out to me by email (since there is no Whova!) if you are interested in chatting during NeurIPS about potential MSc/PhD or postdoc positions! I am actively hiring two postdocs in RL and in foundation models for biology, respectively!

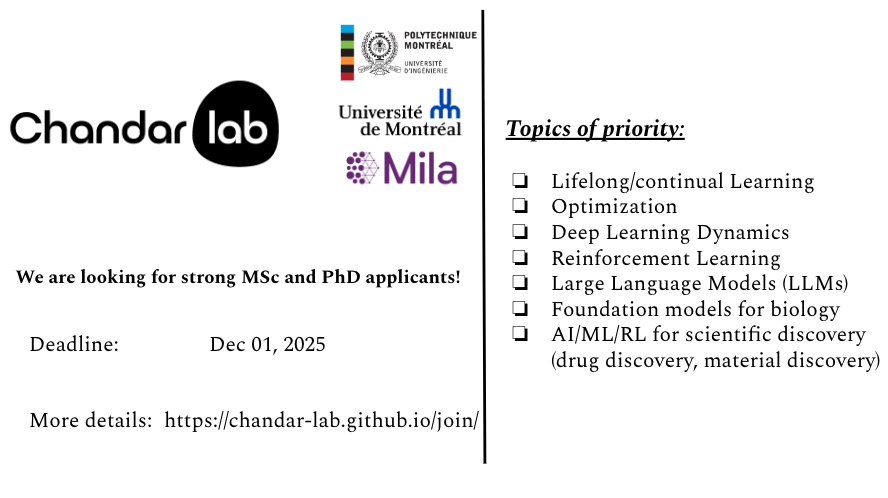

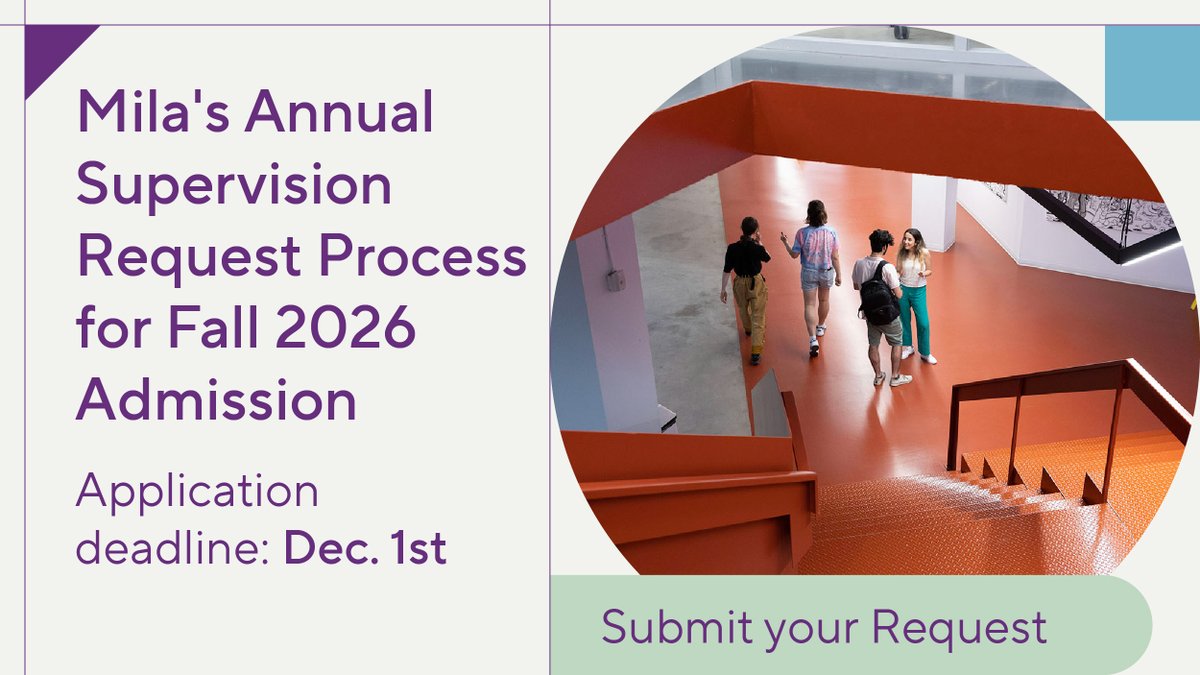

I am recruiting several graduate students (both MSc and PhD level) for Fall 2026 @ChandarLab! The application deadline is December 01. Please apply through the @Mila_Quebec supervision request process here: mila.quebec/en/prospective…. More details about the recruitment process…

Apparently there was already a discussion of this talk here after it was given live. But I missed it. So now will do: I do care about potential AI harms, but disagree with pretty much everything he says here! will elaborate in the response.

COLM Keynote: Nicholas Carlini Are LLMs worth it? youtube.com/watch?v=PngHcm…

youtube.com

YouTube

Nicholas Carlini - Are LLMs worth it?

I am recruiting several graduate students (both MSc and PhD level) for Fall 2026 @ChandarLab! The application deadline is December 01. Please apply through the @Mila_Quebec supervision request process here: mila.quebec/en/prospective…. More details about the recruitment process…

I can't attend #ICCV 2025 in Honolulu, Hawaii but my amazing teammates will be there! Please stop by our poster tomorrow 21 Oct (#438) to learn about TAPNext, a general, ViT-like architecture with SOTA point tracking quality! Links: 🌐 website: tap-next.github.io

In Fall 2026, I will begin a tenure-track faculty position @JHUCompSci Announcing the SciPhy lab, where we will study the science of physical agents (robots) We are now recruiting our first cohort of PhD students. If this is you, see sci-fi-lab.github.io

💫 Why you should apply to Mila – Québec AI Institute Mila is more than a research institute, it truly feels like a home. Being surrounded by kind, humble, and brilliant researchers makes every discussion inspiring and every challenge exciting.

Mila's annual supervision request process is now open to receive MSc and PhD applications for Fall 2026 admission! For more information, visit mila.quebec/en/prospective…

@Mila_Quebec's annual supervision request process is now open to receive MSc and PhD applications for Fall 2026 admission! We have open positions for PhD students to work on data management systems and software systems more broadly! Reach out if interested.

Mila's annual supervision request process is now open to receive MSc and PhD applications for Fall 2026 admission! For more information, visit mila.quebec/en/prospective…

Apply to join one of the top AI research 🤖 institutes in the world in the wonderful city of Montréal! I will be recruiting 1-2 students this year. Just mention my name in your application.

Mila's annual supervision request process is now open to receive MSc and PhD applications for Fall 2026 admission! For more information, visit mila.quebec/en/prospective…

Mila is the only academic institute in the world with 1500+ AI researchers in one place! Want to join this greatest concentration of AI talent? Apply now!

Mila's annual supervision request process is now open to receive MSc and PhD applications for Fall 2026 admission! For more information, visit mila.quebec/en/prospective…

Markovian Thinking by @Mila_Quebec & @Microsoft lets LLMs reason with a fixed-size state – compute stays the same no matter how long the reasoning chain gets. This makes RL linear-cost and memory-constant. The team’s Delethink RL setup trains models to be Markovian Thinkers,…

Thanks for sharing, @TheTuringPost We propose Markovian Thinking as a new paradigm, and Delethink as a simple, concrete instantiation enabling constant-memory, linear-compute reasoning that keeps improving beyond training limits.

Markovian Thinking by @Mila_Quebec & @Microsoft lets LLMs reason with a fixed-size state – compute stays the same no matter how long the reasoning chain gets. This makes RL linear-cost and memory-constant. The team’s Delethink RL setup trains models to be Markovian Thinkers,…

Nice paper! Make the context for reasoning local and train an RL model with such truncation. This way the model "markovinifies" and makes use of its context efficiently!

Introducing linear scaling of reasoning: 𝐓𝐡𝐞 𝐌𝐚𝐫𝐤𝐨𝐯𝐢𝐚𝐧 𝐓𝐡𝐢𝐧𝐤𝐞𝐫 Reformulate RL so thinking scales 𝐎(𝐧) 𝐜𝐨𝐦𝐩𝐮𝐭𝐞, not O(n^2), with O(1) 𝐦𝐞𝐦𝐨𝐫𝐲, architecture-agnostic. Train R1-1.5B into a markovian thinker with 96K thought budget, ~2X accuracy 🧵

It’s clear next-gen reasoning LLMs will run for millions of tokens. RL at 1M needs ~100× compute than 128K. Our Markovian Thinking keeps compute scaling linear instead. Check out Milad’s thread; some of my perspectives below:

Introducing linear scaling of reasoning: 𝐓𝐡𝐞 𝐌𝐚𝐫𝐤𝐨𝐯𝐢𝐚𝐧 𝐓𝐡𝐢𝐧𝐤𝐞𝐫 Reformulate RL so thinking scales 𝐎(𝐧) 𝐜𝐨𝐦𝐩𝐮𝐭𝐞, not O(n^2), with O(1) 𝐦𝐞𝐦𝐨𝐫𝐲, architecture-agnostic. Train R1-1.5B into a markovian thinker with 96K thought budget, ~2X accuracy 🧵

Long reasoning without the quadratic tax: The Markovian Thinker makes LLMs reason in chunks with a bounded state → linear compute, constant memory and it keeps scaling beyond the training limit. 1/6

Introducing linear scaling of reasoning: 𝐓𝐡𝐞 𝐌𝐚𝐫𝐤𝐨𝐯𝐢𝐚𝐧 𝐓𝐡𝐢𝐧𝐤𝐞𝐫 Reformulate RL so thinking scales 𝐎(𝐧) 𝐜𝐨𝐦𝐩𝐮𝐭𝐞, not O(n^2), with O(1) 𝐦𝐞𝐦𝐨𝐫𝐲, architecture-agnostic. Train R1-1.5B into a markovian thinker with 96K thought budget, ~2X accuracy 🧵

Introducing linear scaling of reasoning: 𝐓𝐡𝐞 𝐌𝐚𝐫𝐤𝐨𝐯𝐢𝐚𝐧 𝐓𝐡𝐢𝐧𝐤𝐞𝐫 Reformulate RL so thinking scales 𝐎(𝐧) 𝐜𝐨𝐦𝐩𝐮𝐭𝐞, not O(n^2), with O(1) 𝐦𝐞𝐦𝐨𝐫𝐲, architecture-agnostic. Train R1-1.5B into a markovian thinker with 96K thought budget, ~2X accuracy 🧵

I moved out of my home in 2008 and I still call my mom every day! If you read this and you don’t call your parents everyday, call them NOW! The love parents have for their kids is priceless! I realized it only after I became a father myself!

Story time: After I moved out of my home in 2014 to live in Japan, initially I used to call my parents daily. But as I became more "internationalized" I started calling my parents less and less. I used to tell my mom "I have nothing to talk about so we don't need to call daily".…

United States الاتجاهات

- 1. Good Thursday 32.3K posts

- 2. #thursdayvibes 2,099 posts

- 3. Merry Christmas 66K posts

- 4. Happy Friday Eve N/A

- 5. #JASPER_TouchMV 276K posts

- 6. #NationalCookieDay N/A

- 7. #DareYouToDeathSpecial 85.2K posts

- 8. JASPER COMEBACK TOUCH 180K posts

- 9. #ThursdayThoughts 1,536 posts

- 10. Hilux 9,080 posts

- 11. Metaverse 6,353 posts

- 12. DataHaven 11.6K posts

- 13. Toyota 29.2K posts

- 14. Earl Campbell 2,491 posts

- 15. Omar 186K posts

- 16. Colbert 4,669 posts

- 17. Tacoma N/A

- 18. Prince Harry 9,645 posts

- 19. Halle Berry 4,511 posts

- 20. USPS 7,715 posts

قد يعجبك

-

Dhruv Batra ✈️ NeurIPS

Dhruv Batra ✈️ NeurIPS

@DhruvBatra_ -

Shimon Whiteson

Shimon Whiteson

@shimon8282 -

Mila - Institut québécois d'IA

Mila - Institut québécois d'IA

@Mila_Quebec -

Chelsea Finn

Chelsea Finn

@chelseabfinn -

Tim Rocktäschel

Tim Rocktäschel

@_rockt -

Jakob Foerster

Jakob Foerster

@j_foerst -

Deepak Pathak

Deepak Pathak

@pathak2206 -

Marc G. Bellemare

Marc G. Bellemare

@marcgbellemare -

Sanjeev Arora

Sanjeev Arora

@prfsanjeevarora -

Tejas Kulkarni

Tejas Kulkarni

@tejasdkulkarni -

Rishabh Agarwal

Rishabh Agarwal

@agarwl_ -

Pulkit Agrawal

Pulkit Agrawal

@pulkitology -

Anirudh Goyal

Anirudh Goyal

@anirudhg9119 -

Aditya Grover

Aditya Grover

@adityagrover_ -

Alexia Jolicoeur-Martineau

Alexia Jolicoeur-Martineau

@jm_alexia

Something went wrong.

Something went wrong.