Abhinav Menon

@anscombes_razor

always ready to learn something! professional: pursuing my PhD, working in interpretability in NLP. personal: movies, languages, books, and history

You might like

🚀 New Paper & Benchmark! Introducing MATH-Beyond (MATH-B), a new math reasoning benchmark deliberately constructed for common open-source models (≤8B) to fail at pass@1024! Paper: arxiv.org/abs/2510.11653 Dataset: huggingface.co/datasets/brend… 🧵1/10

New paper alert! 🧵👇 We show representations of concepts seen by a model during pretraining can be morphed to reflect novel semantics! We do this by building a task based on the conceptual role semantics "theory of meaning"--an idea I'd been wanting to pursue for SO long! 1/n

Check out our recent work on identifying the limitations and properties of SAEs! We use formal languages as a synthetic testbed to evaluate the methodology and suggest further steps.

Paper alert––*Awarded best paper* at NeurIPS workshop on Foundation Model Interventions! 🧵👇 We analyze the (in)abilities of SAEs by relating them to the field of disentangled rep. learning, where limitations of AE based interpretability protocols have been well established!🤯

Can RL fine-tuning endow MLLMs with fine-grained visual understanding? Using our training recipe, we outperform SOTA open-source MLLMs on fine-grained visual discrimination with ClipCap, a mere 200M param simplification of modern MLLMs!!! 🚨Introducing No Detail Left Behind:…

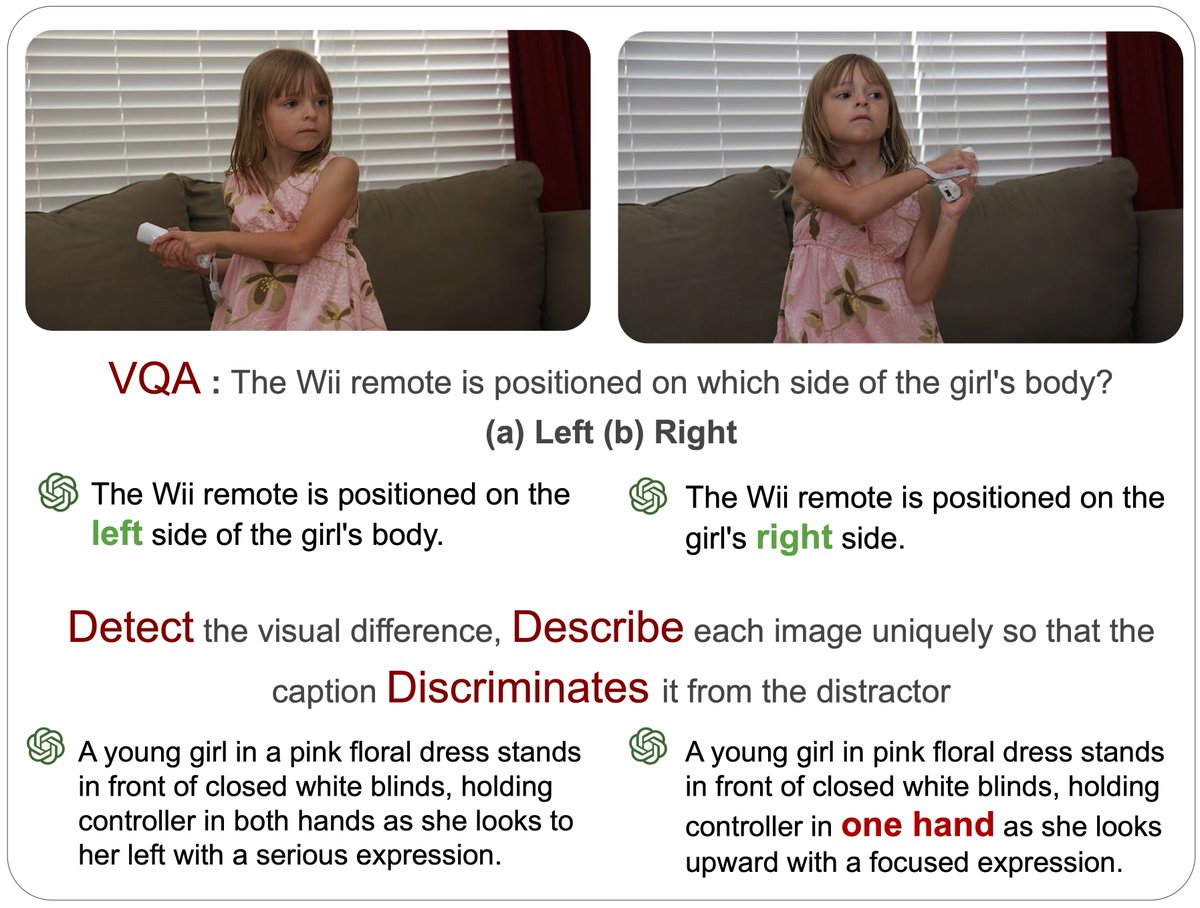

🚨 Introducing Detect, Describe, Discriminate: Moving Beyond VQA for MLLM Evaluation. Given an image pair, it is easier for an MLLM to identify fine-grained visual differences during VQA evaluation than to independently detect and describe such differences 🧵(1/n):

United States Trends

- 1. Good Sunday 65.8K posts

- 2. #sundayvibes 4,612 posts

- 3. Zirkzee 17.3K posts

- 4. #MUFC 17.1K posts

- 5. #CRYMUN 7,975 posts

- 6. Amorim 40.2K posts

- 7. WILLIAMEST AT EMQUARTIER 246K posts

- 8. #EMAnniversaryxWilliamEst 256K posts

- 9. Crystal Palace 28.7K posts

- 10. Stockton 30.8K posts

- 11. Mason Mount 5,269 posts

- 12. Licha 2,313 posts

- 13. Mateta 9,967 posts

- 14. Yoro 7,869 posts

- 15. Duke 33.7K posts

- 16. Dalot 5,751 posts

- 17. Casemiro 6,899 posts

- 18. #BNewEraBirthdayConcert 1.39M posts

- 19. Muhammad Qasim 16.5K posts

- 20. Gakpo 6,080 posts

Something went wrong.

Something went wrong.