Mikel Bober-Irizar

@mikb0b

24 // Kaggle Competitions Grandmaster & ML/AI Researcher. Building video games @iconicgamesio, machine reasoning @Cambridge_CL, bioscience @ForecomAI.

Why do pre-o3 LLMs struggle with generalization tasks like @arcprize? It's not what you might think. OpenAI o3 shattered the ARC-AGI benchmark. But the hardest puzzles didn’t stump it because of reasoning, and this has implications for the benchmark as a whole. Analysis below🧵

Really good to be back in SF for GDC (yes, our game is still cooking 👀) If you're around and want to meet up next week, let me know!

Seeing this chart go around a bunch, I think the main point is being missed - “LLMs can’t solve large grids because of perception” This is a deficiency in the model, there are alternative ways to “perceive” the grid. Doing it in 1-shot is not required. As a human, do you hold…

LLMs get dramatically worse at ARC tasks as the grids get bigger. Humans have no such issue: for them, ARC task difficulty is independent of size. Most ARC tasks contain around 512-2048 pixels, and o3 is the first model capable of operating on these text grids reliably.
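To make "pixels per task" and "text grids" concrete, here is a minimal sketch. It assumes the public ARC JSON layout (a task with `train` and `test` lists of `input`/`output` grids) and assumes a task's size is the total cell count over all of its grids; the post does not say exactly how pixels were counted. The helper names `grid_to_text` and `task_pixel_count` and the toy task are hypothetical and are not taken from arckit or the linked article.

```python
from typing import Dict, List

Grid = List[List[int]]
Task = Dict[str, List[Dict[str, Grid]]]


def grid_to_text(grid: Grid) -> str:
    """Serialise a grid as text: one line per row, one digit per cell."""
    return "\n".join("".join(str(cell) for cell in row) for row in grid)


def task_pixel_count(task: Task) -> int:
    """Count cells across every input and output grid in a task.

    Assumption: size is measured over all train and test grids.
    """
    total = 0
    for split in ("train", "test"):
        for pair in task.get(split, []):
            for key in ("input", "output"):
                grid = pair.get(key, [])
                total += sum(len(row) for row in grid)
    return total


# Toy task, made up for illustration (real ARC grids go up to 30x30).
toy_task: Task = {
    "train": [
        {"input": [[0, 1], [1, 0]], "output": [[1, 0], [0, 1]]},
    ],
    "test": [
        {
            "input": [[0, 0, 1], [0, 1, 0], [1, 0, 0]],
            "output": [[1, 0, 0], [0, 1, 0], [0, 0, 1]],
        },
    ],
}

print(grid_to_text(toy_task["train"][0]["input"]))
print(task_pixel_count(toy_task))
```

Under this counting, a task with a few 15x15 input/output pairs already lands in the 512-2048 range the post mentions.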

I recommend reading @mikb0b's article on o3's performance on the ARC challenge. He shows that LLMs' struggles with ARC depend on their inability to easily process large 2D grids.

This is a really good observation! I wrote about it and analyzed why in this article: anokas.substack.com/p/llms-struggl…

more evidence (including experiments varying sizes of problems) that grid size alone plays a significant role in arc. this is obviously far from ideal for a reasoning benchmark and can hopefully get addressed in arc-agi-2

Really great to meet and catch up with @mikb0b in person after many years! 😄

I'm heading back to San Francisco for @Official_GDC 🎮 - if anyone's around the bay area late March and wants to meet up let me know!

I'll be speaking at @NVIDIA's AI & DS Virtual Summit about the journey to becoming the youngest Kaggle Grandmaster, along with @Rob_Mulla and @kagglingdieter. 🔥 Come and join us for a live Q&A on Wednesday 9th at 12pm PT (for free!) nvidia.com/en-us/events/a… @NVIDIAAI

I'm going to be in San Francisco in early November! ✈️ If anyone's in the bay area and wants to meet up, or if anyone knows any events I should check out, let me know! 😊

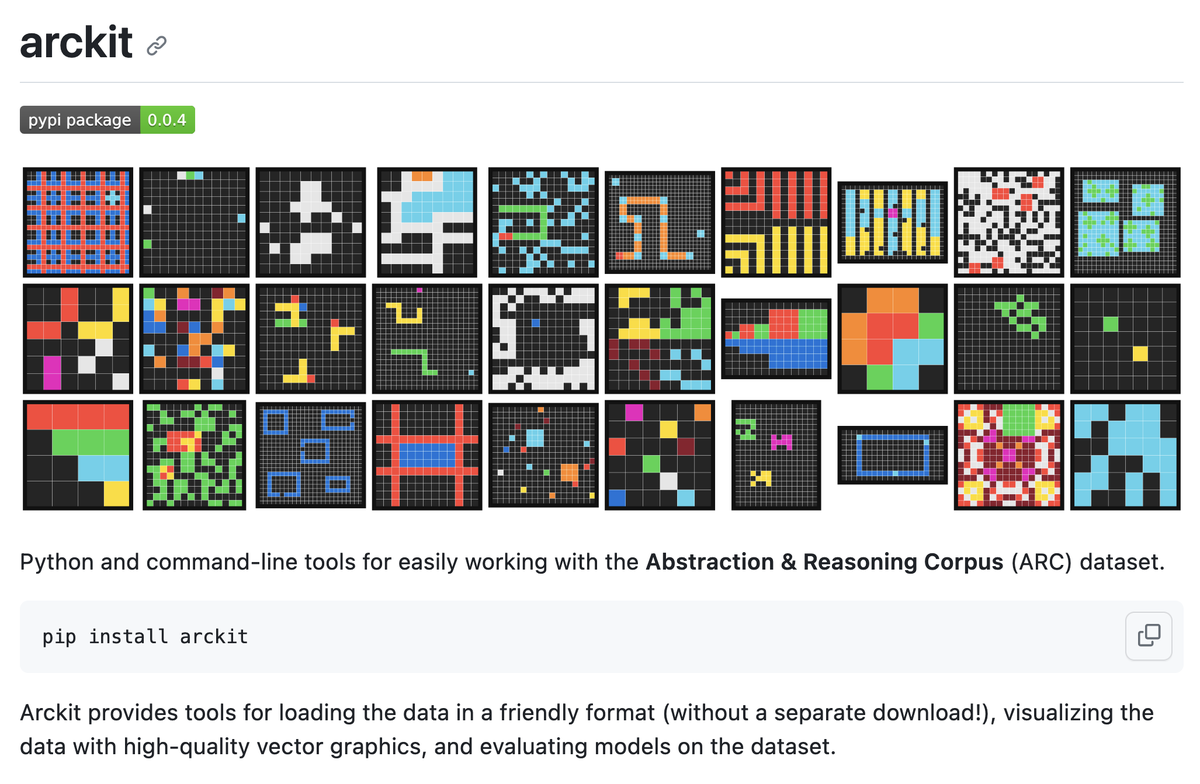

I've recently been playing with @fchollet's Abstraction and Reasoning Corpus, a really interesting benchmark for building systems that can reason. As part of that, I've just released a small 🐍 library for easily interacting with and visualising ARC: github.com/mxbi/arckit

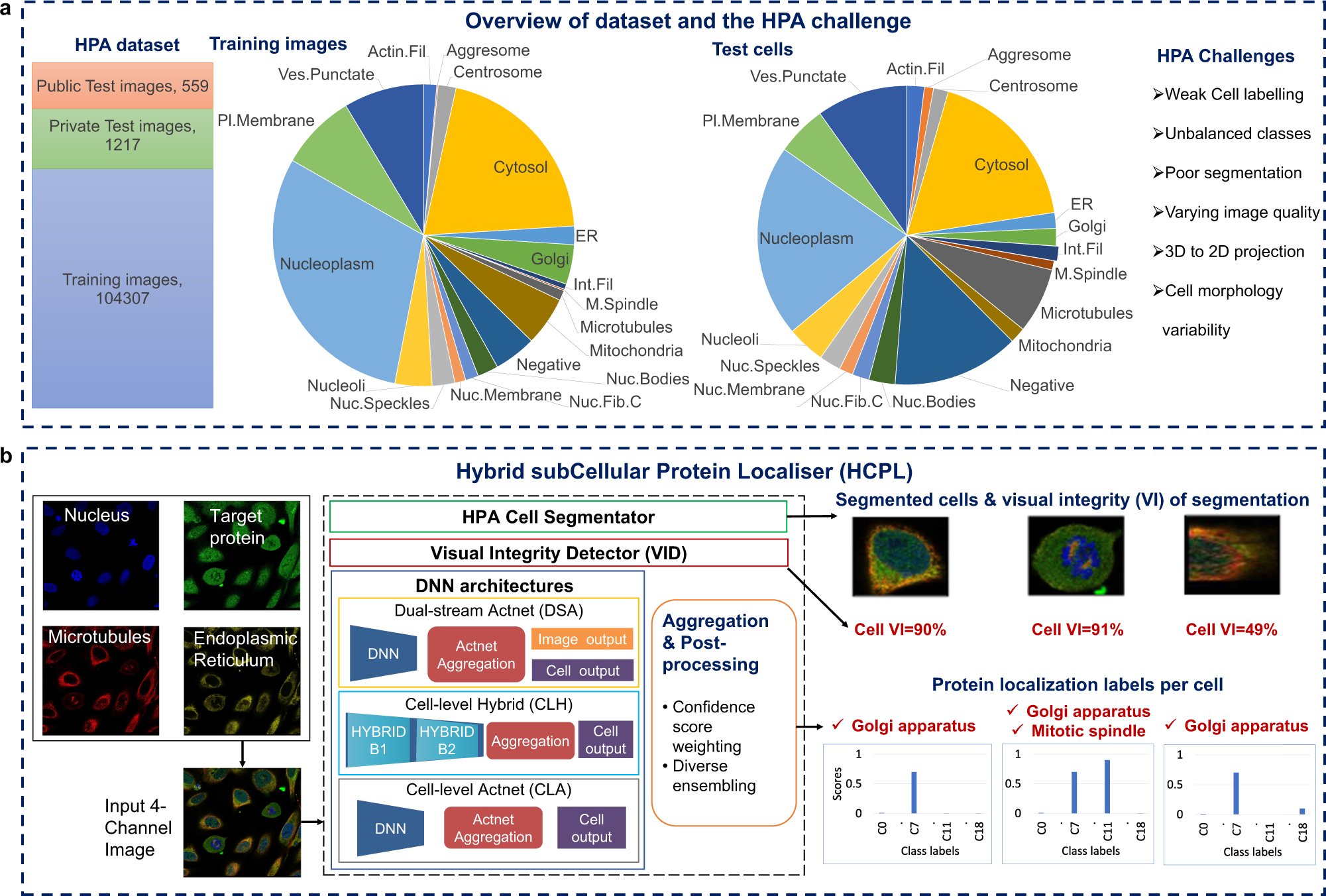

Really proud to be published in a Nature Portfolio journal for the first time! We set a new SOTA for single-cell protein localisation on the @ProteinAtlas, building on our work in the 2nd HPA Kaggle comp. nature.com/articles/s4200… @ForecomAI @cvssp_research @d_minskiy
