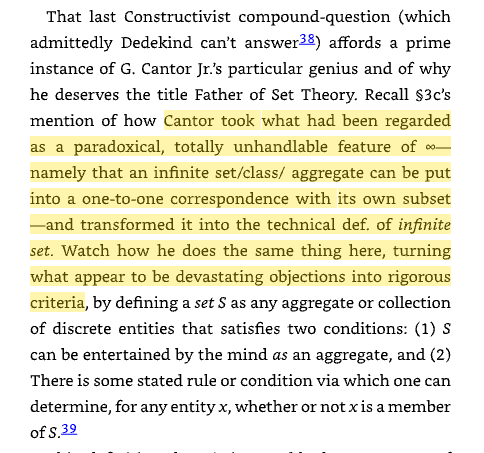

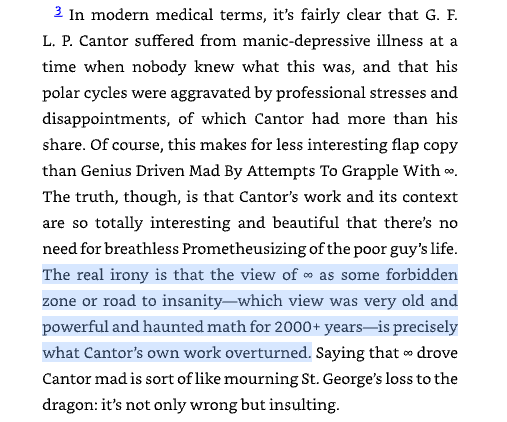

a controversial opinion i hold deeply is that AI is not superhuman at writing (and isn't close) there are 10x and 100x human writers. here's a random excerpt from David Foster Wallace, widely agreed to be one of the greatest modern writers if you sincerely think anything…

Everyone vote for o3-mini type model to be open-sourced please 🥺🥺🥺 We can distill or quantize a phone sized model dw the open-source community will work its magic!!

for our next open source project, would it be more useful to do an o3-mini level model that is pretty small but still needs to run on GPUs, or the best phone-sized model we can do?

128108 票 · 最終結果

I don’t think I’m better than other people but I definitely think my *taste* is just objectively superior I have so much confidence in my aesthetic opinions it’s frankly delusional

I think anyone who has ever made anything great needs to have a smidgen of subclinical narcissism, that when asked “do you think you’re better than everyone else”, the answer is “yes, I do and I am.”

xAI Deep Research for summarizing all the bookmarks you never got around to reading. generates a comprehensive report that you can bookmark for later

I think over the next decade we’ll see the idea of different body parts having different specialties (eg gut, heart, head) experiencing a dewooification. today they’re chakras and energy centers and stuff and I think we’ll start talking about them more as computational ASICs

no thank you but we will buy twitter for $9.74 billion if you want

To an LLM, a novel discovery is indistinguishable from an error.

This question is even more puzzling and salient given the existence of Deep Research

Transformers can overcome easy-to-hard and length generalization challenges through recursive self-improvement. Paper on arxiv coming on Monday. Link to a talk I gave on this below 👇 Super excited about this work!

Inspired by @karpathy and the idea of using games to compare LLMs, I've built a version of the game Codenames where different models are paired in teams to play the game with each other. Fun to see o3-mini team with R1 against Grok and Gemini! Link and repo below.

I quite like the idea using games to evaluate LLMs against each other, instead of fixed evals. Playing against another intelligent entity self-balances and adapts difficulty, so each eval (/environment) is leveraged a lot more. There's some early attempts around. Exciting area.

United States 趨勢

- 1. Warner Bros 120K posts

- 2. HBO Max 55.8K posts

- 3. #FanCashDropPromotion 1,293 posts

- 4. Good Friday 58K posts

- 5. #FridayVibes 4,501 posts

- 6. Paramount 28.2K posts

- 7. Ted Sarandos 3,862 posts

- 8. Jake Tapper 59.8K posts

- 9. $NFLX 5,375 posts

- 10. #FridayFeeling 1,941 posts

- 11. National Security Strategy 12.1K posts

- 12. NO U.S. WAR ON VENEZUELA 3,230 posts

- 13. The EU 140K posts

- 14. David Zaslav 1,938 posts

- 15. Bandcamp Friday 1,998 posts

- 16. #FridayMotivation 4,503 posts

- 17. Happy Friyay 1,225 posts

- 18. RED Friday 5,058 posts

- 19. Core PCE 2,548 posts

- 20. Blockbuster 19.3K posts

Something went wrong.

Something went wrong.