Bennet Wittelsbach

@bennet_wi

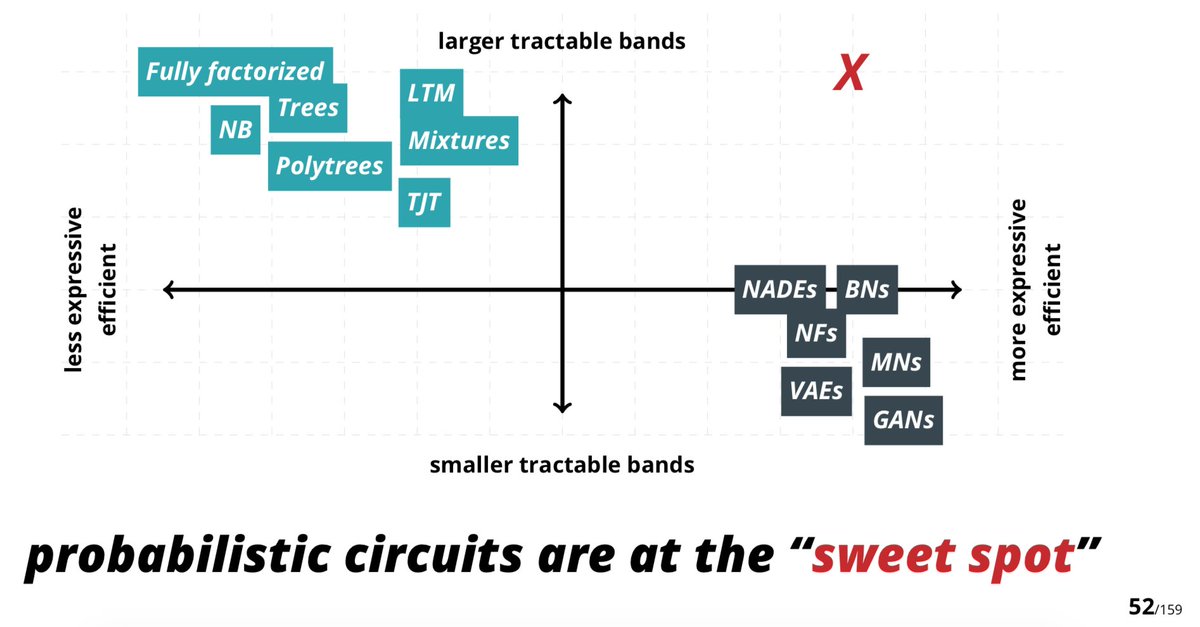

I like probabilistic circuits

Updated vision on how to do the experiments needed to simulate C. elegans. arxiv.org/abs/2308.06578

Check out all the new features we've been building for the Adapters library in our latest blog post! adapterhub.ml/blog/2024/08/a…

🎉Adapters 1.0 is here!🚀 Our open-source library for modular and parameter-efficient fine-tuning got a major upgrade! v1.0 is packed with new features (ReFT, Adapter Merging, QLoRA, ...), new models & improvements! Blog: adapterhub.ml/blog/2024/08/a… Highlights in the thread! 🧵👇

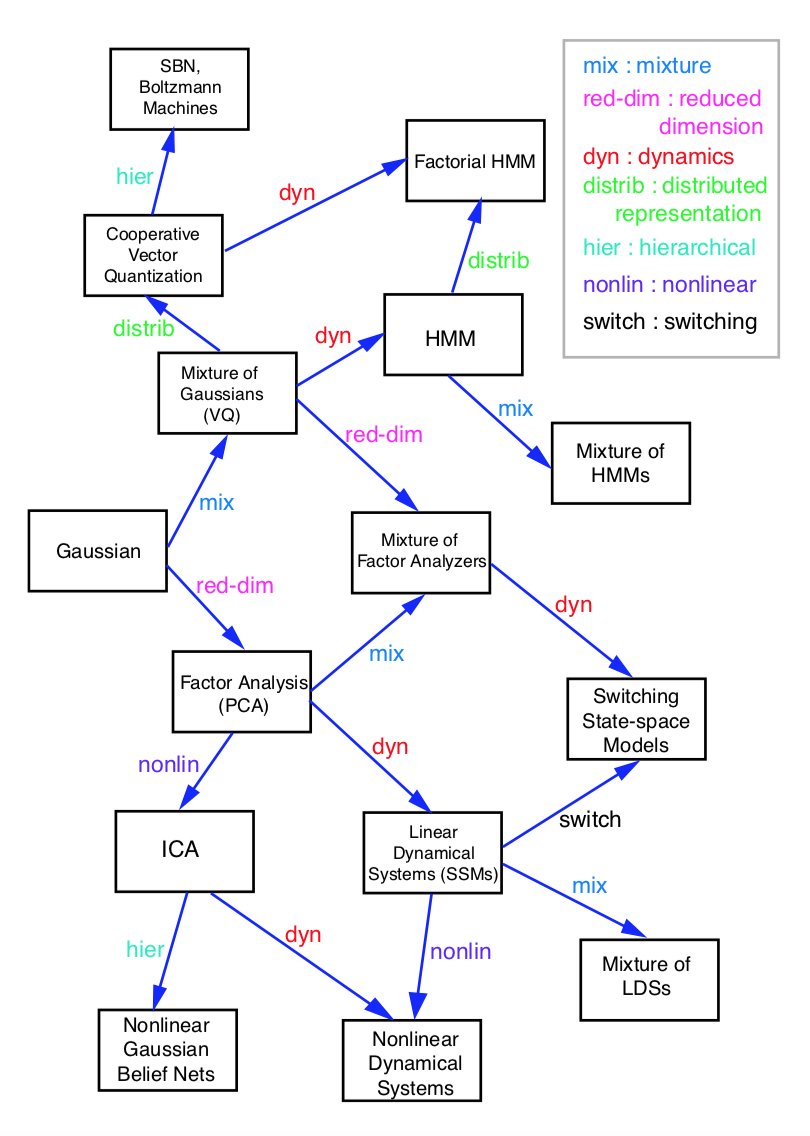

probabilistic graphical models

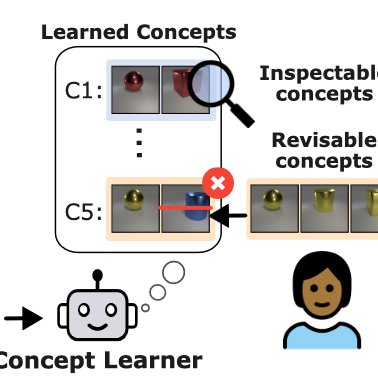

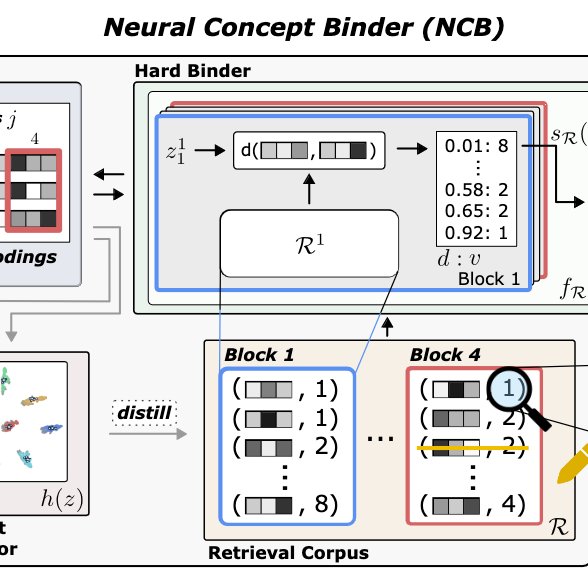

🚀 We present Neural Concept Binder for unsupervised symbolic concept discovery. It combines continuous and discrete encodings for concept representations that are: expressive ✔️ inspectable ✔️ revisable ✔️ 🤯 🔗arxiv.org/abs/2406.09949 @toniwuest @Dav_Steinmann @kerstingAIML

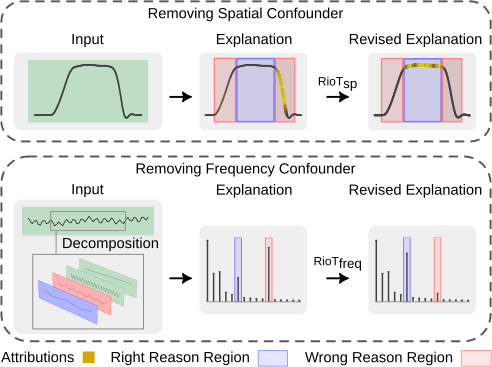

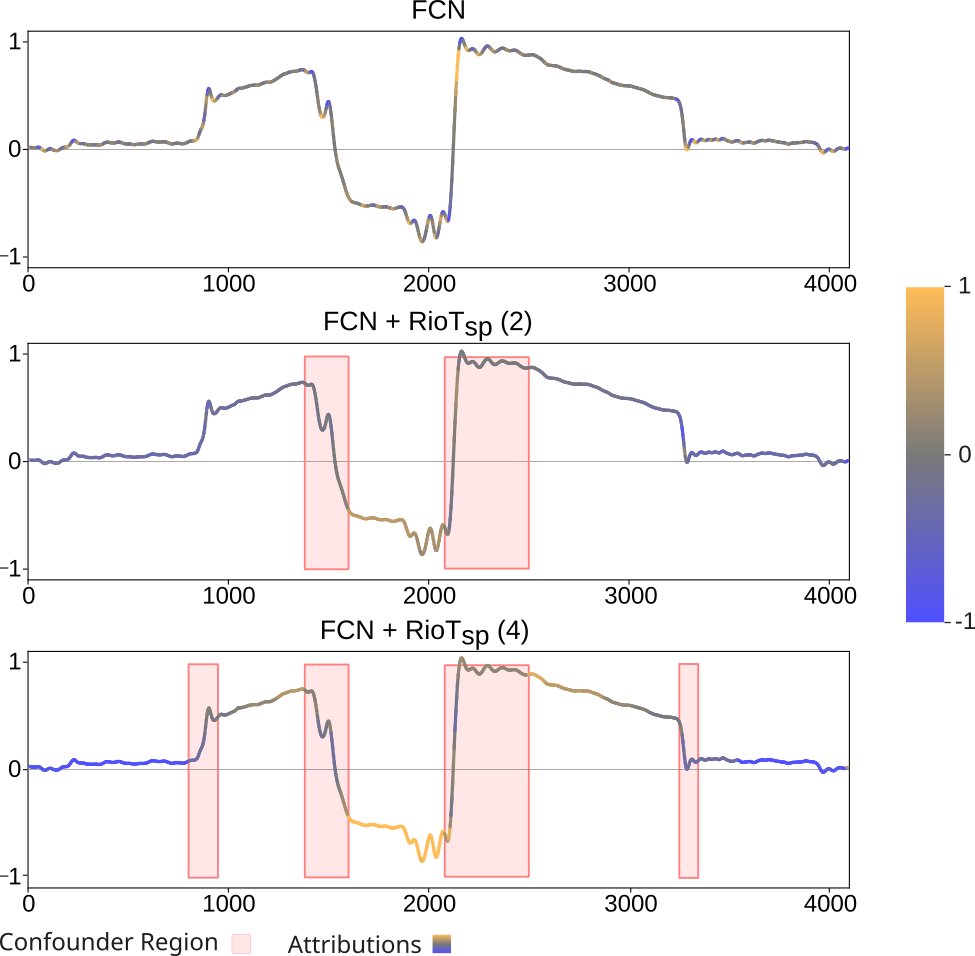

🚀 Excited to share our new work! Tackling time series confounders with RioT: 🔄 Feedback across time & frequency 🎯 Steers models to right reasons 📊 Tested on our novel production line dataset with natural confounding factors arxiv.org/abs/2402.12921 huggingface.co/datasets/AIML-…

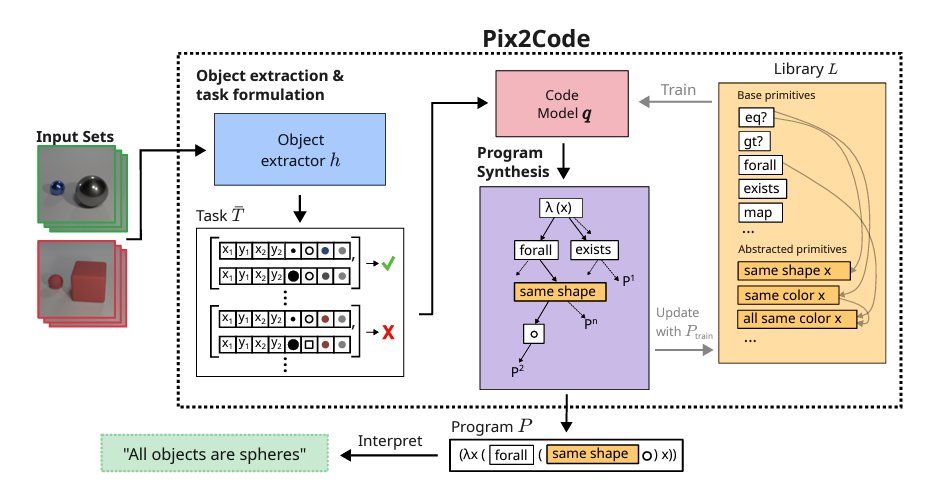

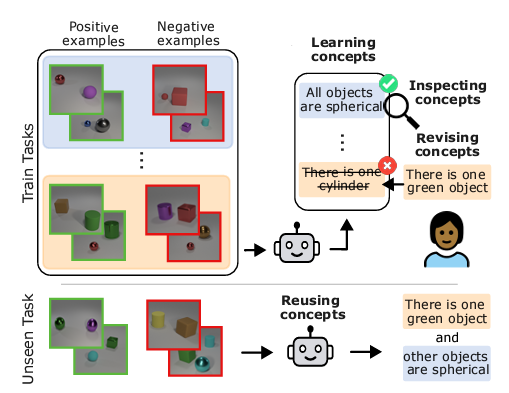

Happy to share our new framework Pix2Code, where we use programs to learn abstract visual concepts 🖼️ This gives us generalizable concepts that are interpretable and revisable 👉🏼 arxiv.org/abs/2402.08280 @WolfStammer @liimeleemon @devendratweetin @kerstingAIML

Learning interpretable visual concepts that generalize to unseen examples ✔️ Revise concepts for better performance 🤯✔️ We present Pix2Code that achieves all this by extending program synthesis to visual concept reasoning. Great work by @toniwuest arxiv.org/abs/2402.08280

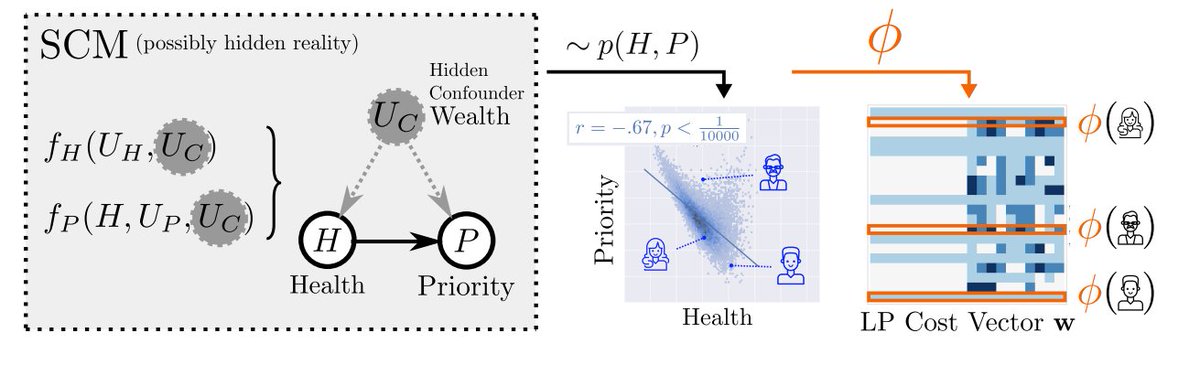

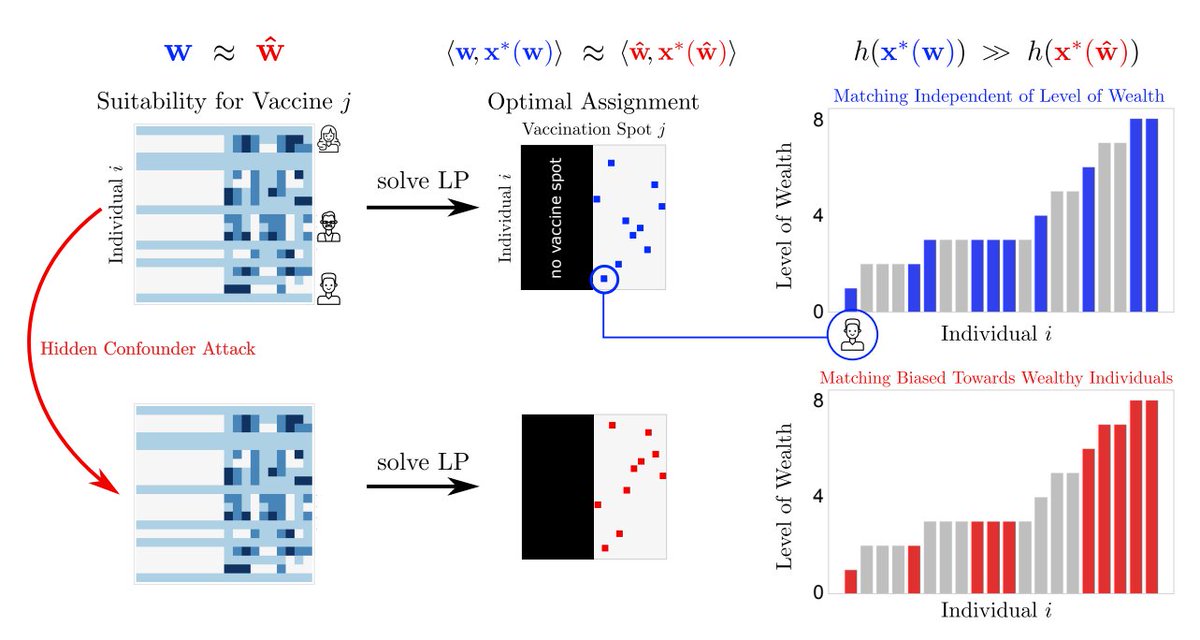

@matej_zecevic @kerstingAIML and I returned to the roots of ML & asked "Are linear programs susceptible to adversarial attacks?" Our ACML journal paper answers yes & uses the power of causality to identify such attacks. Paper: tinyurl.com/2p8wxyk7 Talk: tinyurl.com/mwu9u6af

'The Bayesian Learning Rule', by Mohammad Emtiyaz Khan, Håvard Rue. jmlr.org/papers/v24/22-… #bayesian #algorithms #gradients

The problem with p-values is how they’re used statmodeling.stat.columbia.edu/2023/11/01/the…

LLMs might have capabilities, but causality isn't one of them!! This is one of my favorite papers and I am happy it is now published in @TmlrOrg. We show how LLMs are "causal parrots", although they may seem causal at face value. With @matej_zecevic @MoritzWilligAI @kerstingAIML

Causal Parrots: Large Language Models May Talk Causality But Are Not Causal Matej Zečević, Moritz Willig, Devendra Singh Dhami, Kristian Kersting. Action editor: Frederic Sala. openreview.net/forum?id=tv46t… #causal #ai #inference

Self-Expanding Neural Networks arxiv.org/abs/2307.04526 Rupert Mitchell, @mundt_martin, @kerstingAIML

🤩 “Probabilistic Circuits That Know What They Don’t Know” got accepted at #UAI2023 @UncertaintyInAI 🥳🎉 TL;DR: tractable uncertainty estimates, e.g. to detect various forms of out-of-distribution data (instead of heavy Monte-Carlo dropout) Preprint: arxiv.org/abs/2302.06544

Super proud of this new work! Following "do generative models know what they don't know", we show that probabilistic circuits don't either =( 🔥But!🔥 We can get inspired by Monte-Carlo dropout & fix it with analytical, tractable uncertainty estimates Many new possibilities!⬇️

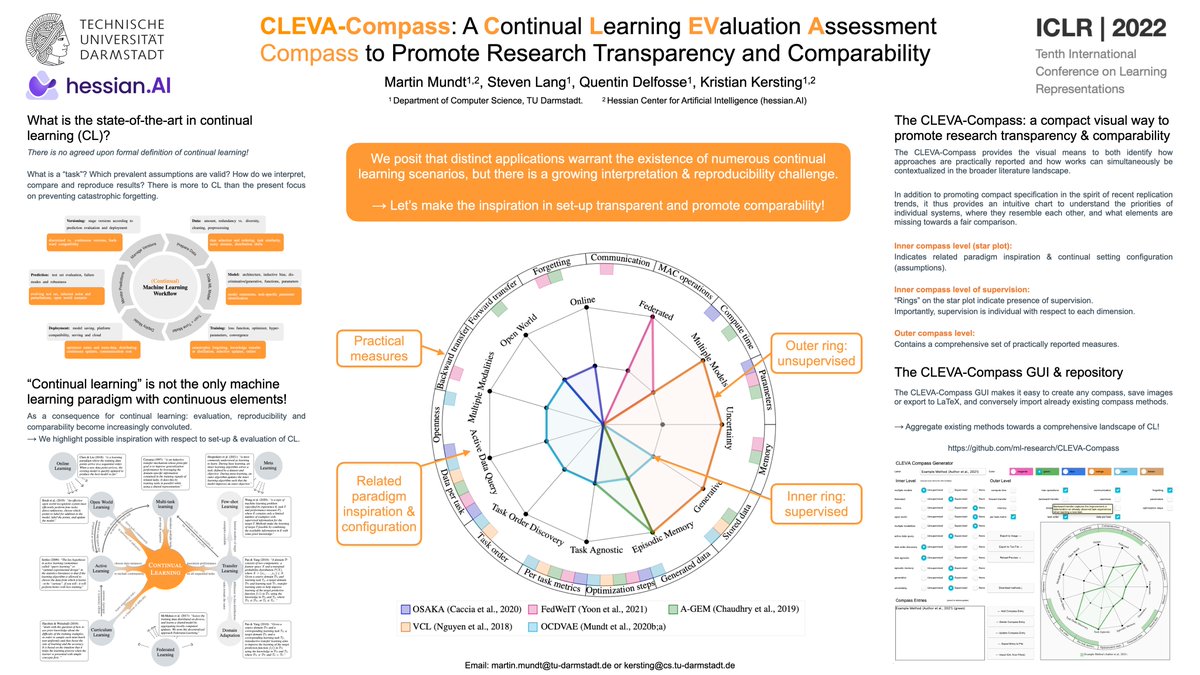

Care to make the continual learning landscape more transparent? Come visit our poster "CLEVA-Compass: A Continual Learning Evaluation Assessment Compass to Promote Research Transparency & Comparability" #ICLR2022 Wed 27th - Spot F2 - 19:30 - 21:30 CEST @slangmz @kerstingAIML

1/ This paper deserves attention for deriving new theoretical connections between GNNs and SCMs. Moreover, it allows causal information to be provided the natural way, i.e., via DAGs, then translated to GNN. What is not clear to this reader, though it may be implicit in the text,

Cool piece by Quanta Magazine on the 𝗯𝗿𝗮𝗶𝗻 𝗮𝗻𝗱 𝗺𝗶𝗻𝗱. I get to come along with the giants of neuroscience! quantamagazine.org/mental-phenome…

quantamagazine.org

Mental Phenomena Don’t Map Into the Brain as Expected | Quanta Magazine

Familiar categories of mental functions such as perception, memory and attention reflect our experience of ourselves, but they are misleading about how the brain works. More revealing approaches are…

Fighting words on the tendency of ML models to latch onto spurious correlations, among other sins. There is still work to do.

The worst part of machine learning snake-oil isn't that it's useless or harmful - it's that ML-based statistical conclusions have the veneer of mathematics, the empirical facewash that makes otherwise suspect conclusions seem neutral, factual and scientific. 1/

#TPM2021 has started and we are live now! Join us on Zoom via @UncertaintyInAI. A small thread to recap🧵👇

and a newer take on the landscape of generative models: (from starai.cs.ucla.edu/slides/AAAI20.… (links to pdf), which contains many other great diagrams)

funky (from cs.ubc.ca/~murphyk/Bayes…, ctrl-f "A generative model for generative models")

Everything is ready for the #TPM2021 Workshop at @UncertaintyInAI tomorrow, 6.00am PT/15.00 CET! We have an amazing list of contributed works... and invited speakers: @theta_x @gpapamak @martin_trapp @kerstingAIML @ZeldaMariet and Anima Anandkumar! 👇👇👇 sites.google.com/view/tpm2021/s…

Another upsampling from MOMoGP. Check out our paper at #uai2021 aiml.informatik.tu-darmstadt.de/papers/yu2021u… See you at the poster session today @martin_trapp @kerstingAIML

Upscaling to 1,000x1,000px after being shrunk to 50x50px: Super Resolution GAN, Bicubic Smoother, Preserve Details (2.0), Nearest Neighbor
