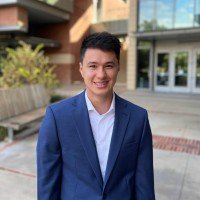

Daiki Chijiwa

@dchiji_en

Researcher at NTT / Interests: neural networks, loss landscape, learning theory


Proof of a perfect platonic representation hypothesis ift.tt/7fv2uVz

Our new paper is out! We show that the functionalities of quantum error correction and quantum teleportation can be interpreted as negative maps for non-Markovian processes by introducing the subsystem frame. We also point out the relation to quantum error mitigation (QEM)! arxiv.org/abs/2510.20224

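Today, at a press conference, NTT announced that "tsuzumi 2", the model it has been researching and developing, is now available 🚀 News release 👉 group.ntt/jp/newsrelease… tsuzumi 2 has 28.6B parameters and was trained on 10T tokens. It is particularly strong at Japanese understanding, generation, and instruction following. At the NTT R&D Forum, held from November 19, 2025…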

📜Lossless Vocabulary Reduction for LLMs🤖 In this paper, we established a theoretical framework that can flexibly shrink the vocabulary of a given LLM to an arbitrary sub-vocabulary, efficiently at inference time. 🔗arxiv.org/abs/2510.08102 See the video for a quick overview👇

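Recovering mixed-integer linear programs from observed data. We proposed an inverse optimization method that learns both the objective function and the constraints. 🚀🚀🚀 📷 Please take a look! 📷 arxiv.org/abs/2510.04455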

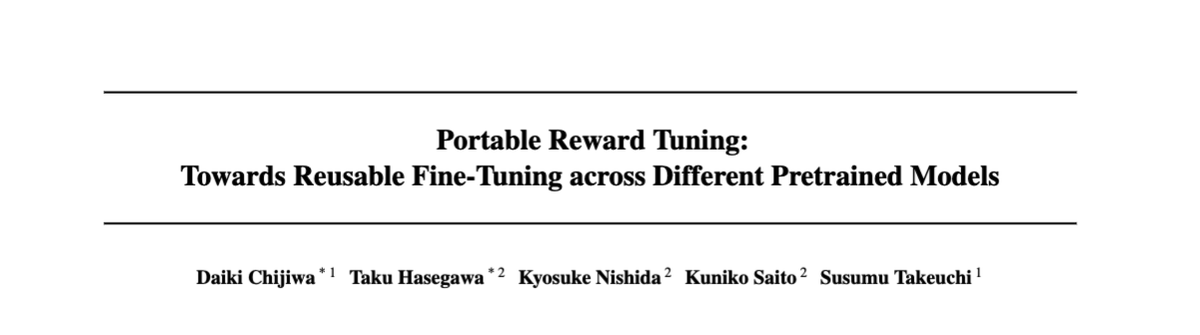

The video of my recent talk at the NLP Colloquium has been uploaded. If you're interested, please take a look. The talk is about Portable Reward Tuning, which was accepted at ICML 2025.

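Hasegawa's talk at the NLP Colloquium is now available → 📺youtu.be/hSj7ZK1K4Hc If you couldn't attend on the day, please watch it! *Note: the Q&A and discussion are not published. The slides have also been made available, so please check them out as well → nlp-colloquium-jp.github.io/assets/pdf/202…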

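✨World first✨ We have established "portable tuning," a technique that drastically reduces the cost of customizing #生成AI (generative AI) and makes low-cost operation sustainable! This work will be presented at #ICML2025, the most competitive international conference in machine learning, held July 13–19. #NTTRD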

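🚀 Announcing the next #NLPコロキウム (NLP Colloquium) Speaker: Taku Hasegawa @th_freiburg @dchiji_en (NTT) Date and time: Wed. 7/9, 12:00–13:00 JST Updating models to keep pace with a changing world is costly 🌏 This talk is about training a portable reward model that can be flexibly combined with foundation models. nlp-colloquium-jp.github.io/schedule/2025-…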

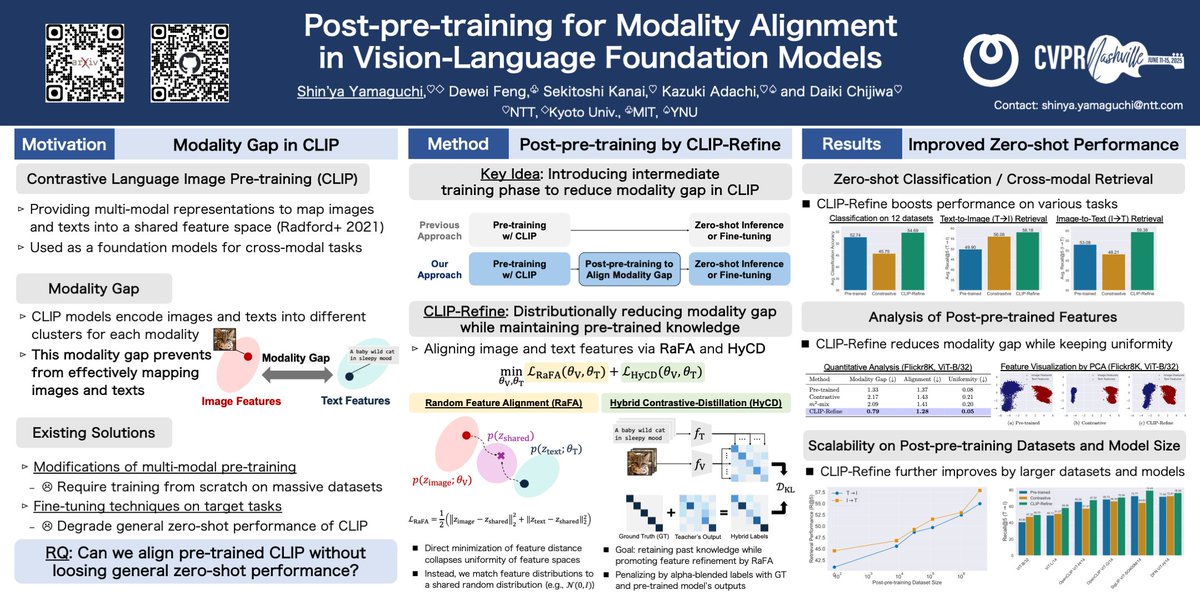

🚀 Happy to share our @CVPR paper, which proposes a post-pre-training method for CLIP to mitigate modality gaps and improve zero-shot performance with just 5 minutes of additional training! We look forward to discussing it with you at our poster session! #CVPR2025

🎉 Excited to announce our ICML 2025 paper “Portable Reward Tuning: Towards Reusable Fine‑Tuning across Different Pretrained Models,” co‑first‑authored with @dchiji_en 🤝(equal contribution)! #ICML2025 Preprint 👉 arxiv.org/abs/2502.12776

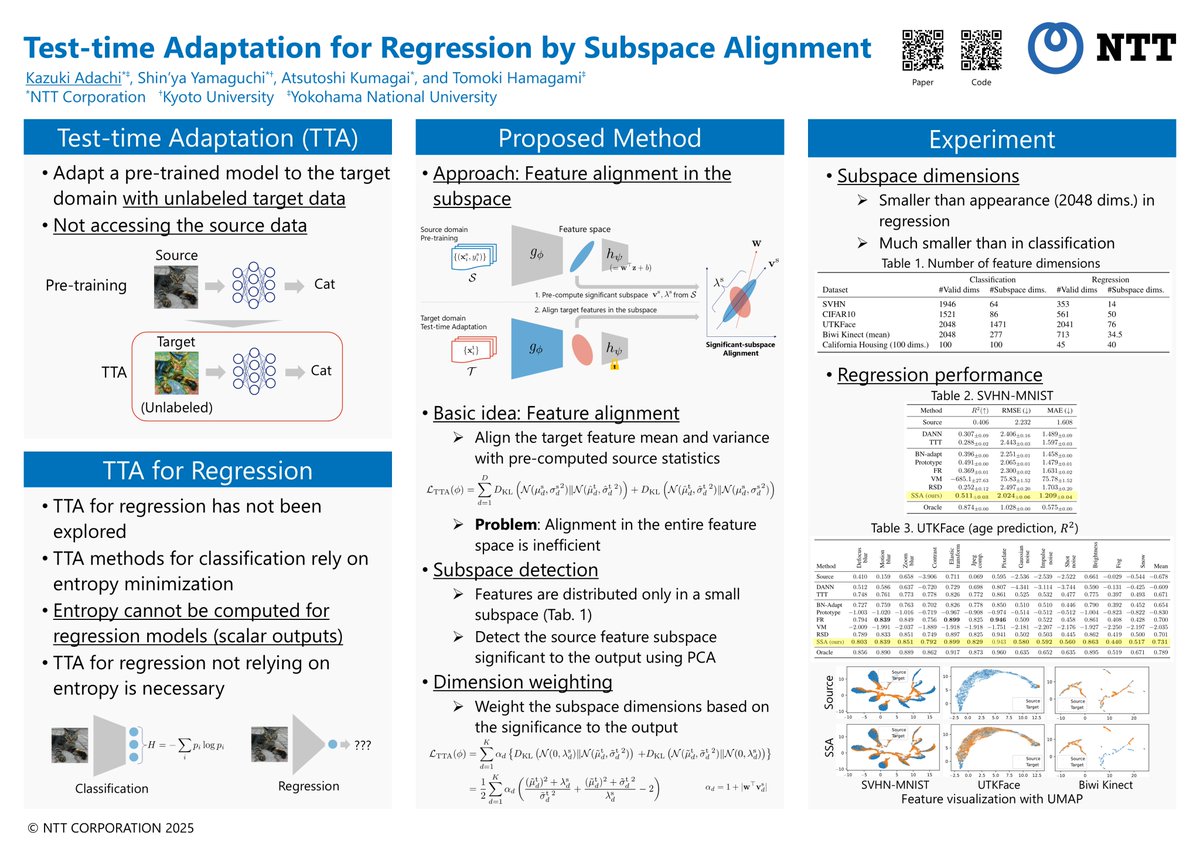

I'm happy to announce that our paper, "Test-time Adaptation for Regression by Subspace Alignment," will be presented at #ICLR2025 on 4/25! We demonstrate that existing TTA methods for classification struggle with regression and propose an effective method using feature alignment.

We have released the preprint and code for our paper, which has been accepted to #CVPR2025! We propose a simple post-pre-training method called CLIP-Refine to mitigate the modality gap in CLIP. Preprint: arxiv.org/abs/2504.12717 Code: github.com/yshinya6/clip-…

AI is reshaping society and ourselves. We're at the wheel, steering this powerful force. But what future do we envision for a human-AI society, and how can we get there safely? We've launched the "Physics of AI Group" to explore the Philosophy and Physics of Intelligence.

And finally, one more open-access paper: "Transfer learning with pre-trained conditional generative models" by Shin'ya Yamaguchi, Sekitoshi Kanai, Atsutoshi Kumagai, Daiki Chijiwa & Hisashi Kashima (link.springer.com/article/10.100…) #OA
