Learn to ship. Shipping is a skill distinct from coding. Shipping is designing, coding, QAing, storytelling, teaching, marketing, selling, pivoting, iterating… Coding used to dominate in importance because coding ability was scarce. AI will push you to go further.

every LLM would be at least 10% better if it were given the current date right at the start of the conversation
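A minimal sketch of that idea, assuming a chat API that accepts a list of `role`/`content` message dicts (a common shape, but an assumption here). The helper name `with_current_date` is hypothetical, not part of any library.

```python
from datetime import datetime, timezone


def with_current_date(messages, now=None):
    """Return a new message list that starts with a note stating the date.

    `messages` is assumed to be a list of {"role": ..., "content": ...} dicts.
    `now` can be passed explicitly (useful for testing); defaults to UTC now.
    """
    now = now or datetime.now(timezone.utc)
    date_note = {
        "role": "system",
        "content": f"Current date: {now.strftime('%Y-%m-%d')} (UTC)",
    }
    # Put the date first so every later turn can rely on it.
    return [date_note, *messages]


if __name__ == "__main__":
    conversation = [{"role": "user", "content": "How many days until New Year?"}]
    for message in with_current_date(conversation):
        print(message["role"], "->", message["content"])
```

The original list is left untouched; the date note is only added to the copy that would be sent to the model.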

Insight from Andrej Karpathy. I like his clarity on this

Math is the language of intelligence (Matrix Multiplications)

Highly recommend watching the @dwarkesh_sp and @karpathy podcast. Learnt a lot about the limitations of current LLMs and the current context around nanoGPT

The @karpathy interview

- 0:00:00 – AGI is still a decade away
- 0:30:33 – LLM cognitive deficits
- 0:40:53 – RL is terrible
- 0:50:26 – How do humans learn?
- 1:07:13 – AGI will blend into 2% GDP growth
- 1:18:24 – ASI
- 1:33:38 – Evolution of intelligence & culture
- 1:43:43 – Why self…

the real metric for the success of an AI product is how much double-checking users have to do each time they use your product

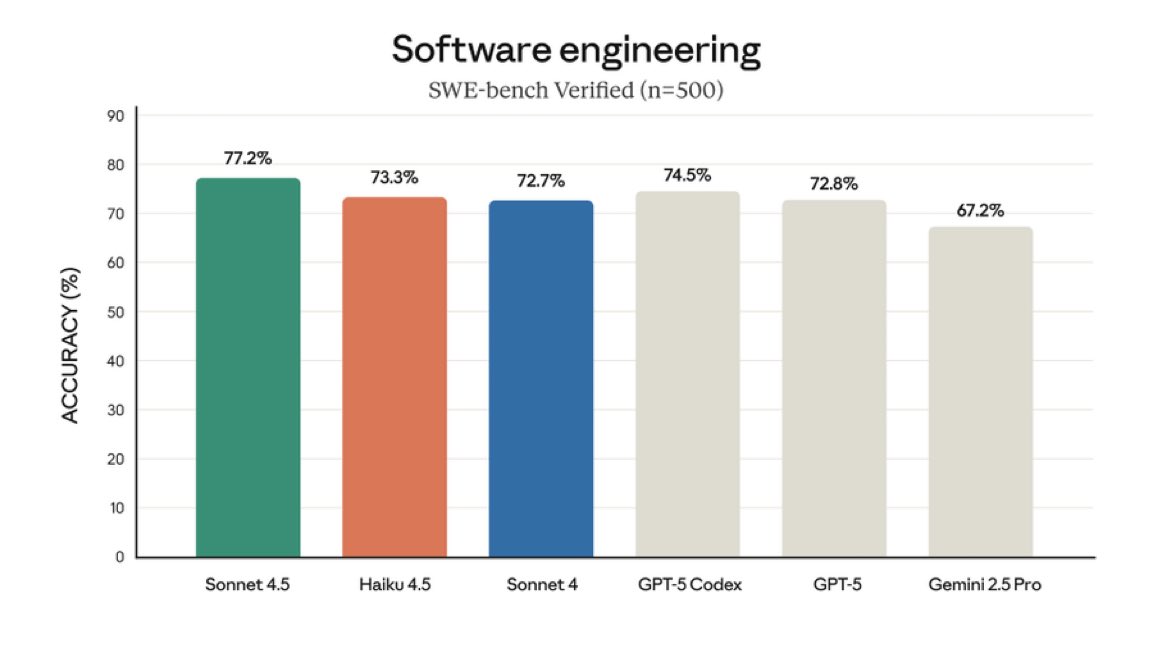

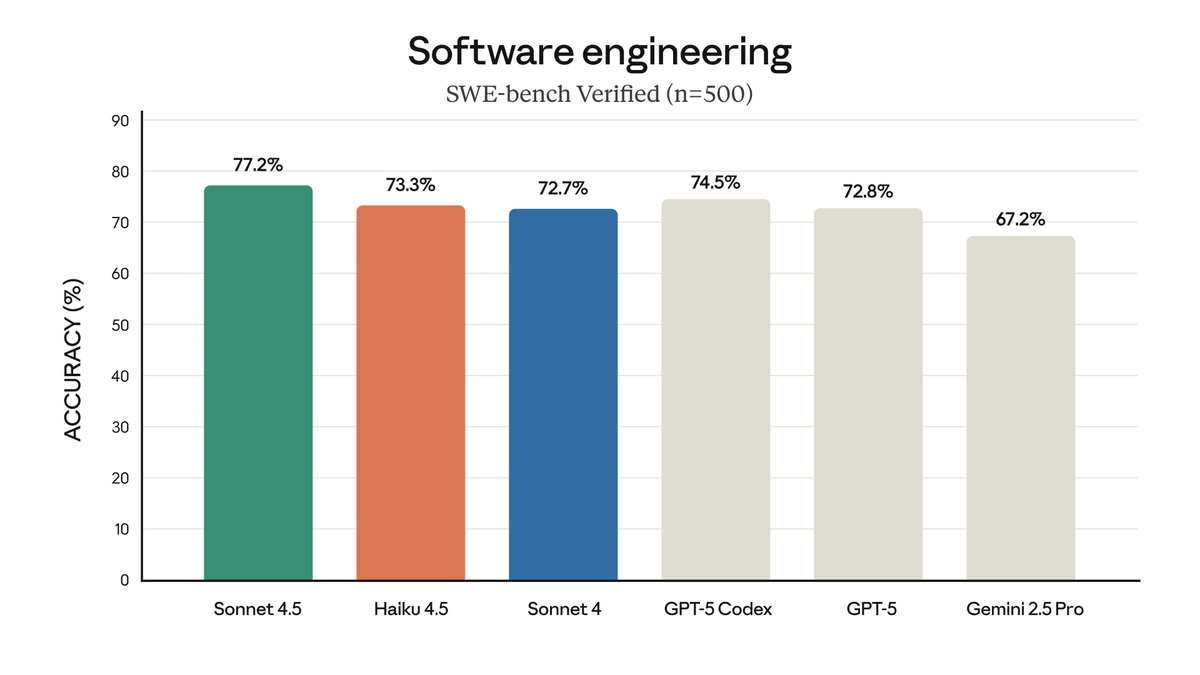

Haiku 4.5 seems promising so far. Fast and accurate

when the internet happened, information became available through the air; in the AI world, intelligence is available through the air

Excited to ship Claude Haiku 4.5 today! What was state-of-the-art 5 months ago (Sonnet 4) is now available at 1/3 the cost and 2x the speed. Even beats Sonnet 4 at computer use. Available today wherever you get your Claude :)

🎯 Andrej Karpathy on how to learn.

Excited to release new repo: nanochat! (it's among the most unhinged I've written). Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single,…

folks saying they don’t need figma because they can use AI lack taste

i noticed a weird phenomenon: the pre-AI superstar developers are finding every reason to justify why AI is a bubble. I'm not against them, but it's just a pattern i've noticed. If most of them just dabbled with this tech, they would realize how useful it is despite its limits

What the fuck just happened 🤯 Stanford just made fine-tuning irrelevant with a single paper. It’s called Agentic Context Engineering (ACE) and it proves you can make models smarter without touching a single weight. Instead of retraining, ACE evolves the context itself. The…

Life punishes the vague wish and rewards the specific ask. After all, conscious thinking is largely asking and answering questions in your own head. If you want confusion and heartache, ask vague questions. If you want uncommon clarity and results, ask uncommonly clear questions.

the stealth cheetah model on Cursor gives me the same level of output as Claude Sonnet 3.5, except faster

This is how Anthropic decides what to build next—and it's brilliant. Instead of endless spec documents and roadmap debates, the Claude Code team has cracked the code on feature prioritization: prototype first, decide later. Here's their process (shared by Catherine Wu, Product…

