Ron Clabo

@dotnetcore

Founder of GiftOasis LLC. Tweets about software development: .NET, AI, ASP.NET Core, C# & Lucene.NET. Top 1% on Stack Overflow. I love learning & helping others.

This is the golden age of AI. This is the best time in human history to be an AI builder, says Andrew Ng. I tend to agree.

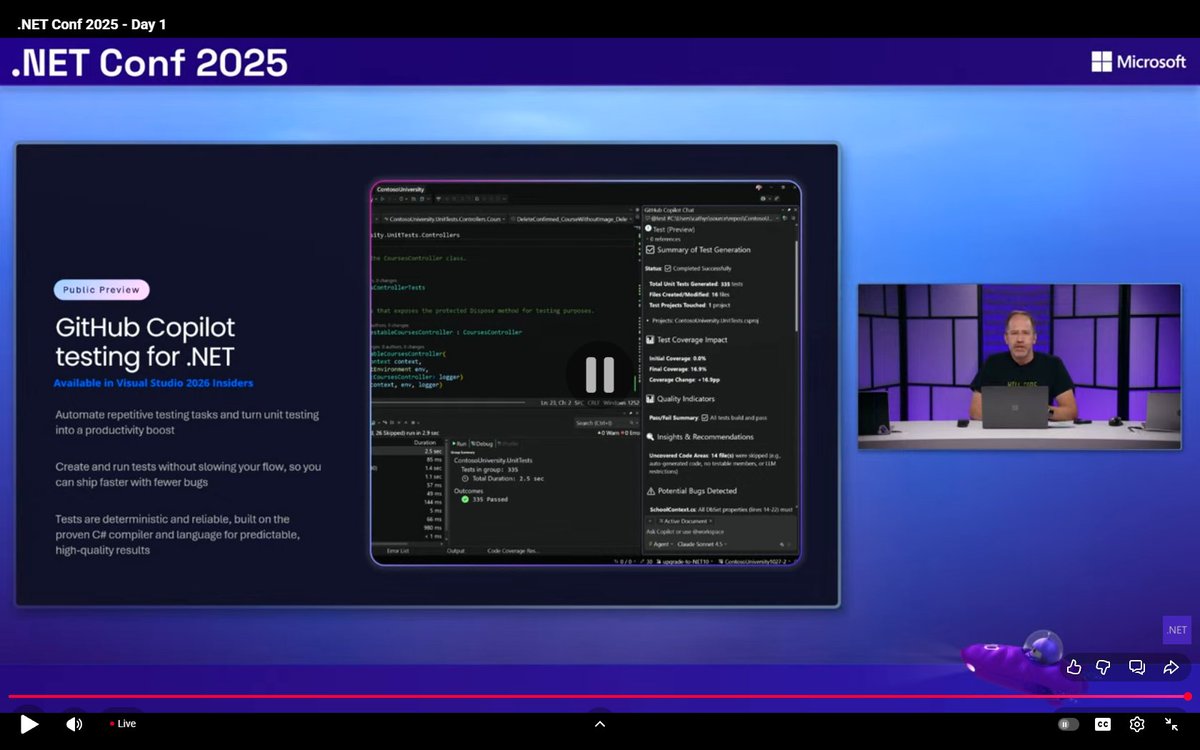

Starting in Visual Studio 2026, the IDE is decoupled from the toolchain version. This allows for monthly, stable updates to the IDE without disrupting the toolchain. It's the best of both worlds? I'm gonna love the MORE FREQUENT IDE productivity improvements! #dotnetconf

A GitHub AI agent for creating unit tests for legacy C# code is coming. Great use for an AI agent. #dotnetconf

Mix .NET with Java, Python & beyond? Yes, please! 🎉 msft.it/6017tHgLF Aspire Polyglot lets you build multi-language apps without the headaches. Dive into the future of #WebDev 👉 msft.it/6012tHgLA 🔥 Flexible. Fast. Fun.

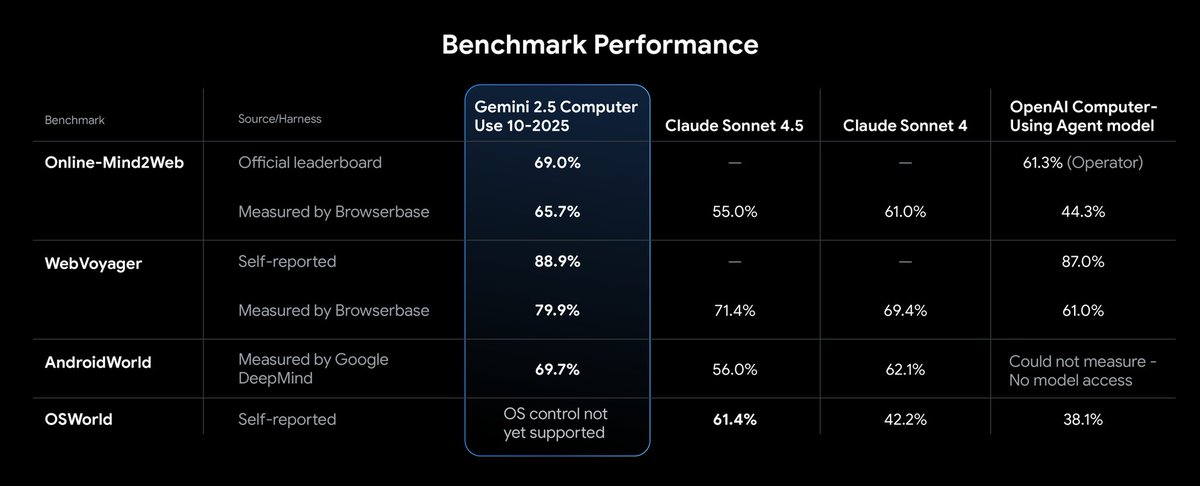

Our new Gemini 2.5 Computer Use model is now available in the Gemini API, setting a new standard on multiple benchmarks with lower latency. These are early days, but the model’s ability to interact with the web – like scrolling, filling forms + navigating dropdowns – is an…

Really great commentary on Microsoft's recent In-Context Learning (ICL) paper. Good insights on how many shots are needed for "few-shot" prompting.

Different AI models have different strengths, weaknesses, biases, and safety profiles. Blending them to achieve an optimal user experience is nontrivial.

How important is Context Engineering to leveraging AI? Even humans can’t be intelligent without good context. Full stop. Read that again.

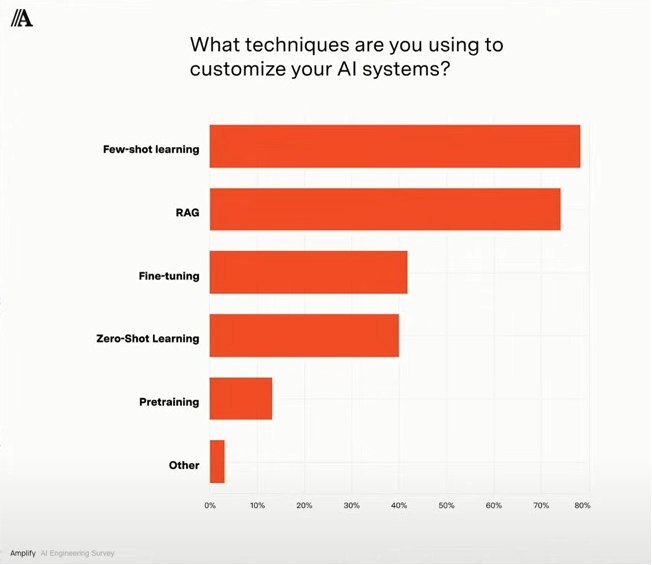

“What techniques are you using to customize your AI systems?” Amplify, a VC firm, surveyed 500 software/AI engineers. Here are the results:

Google DeepMind’s research found vector search hits a scalability limit due to fixed embedding dimensions, underperforming BM25 in most cases. Time to explore hybrid approaches.

Google DeepMind Finds a Fundamental Bug in RAG: Embedding Limits Break Retrieval at Scale Google DeepMind's latest research uncovers a fundamental limitation in Retrieval-Augmented Generation (RAG): embedding-based retrieval cannot scale indefinitely due to fixed vector…

In the blink of a cosmic eye, we passed the Turing test. ... And yet the moment passed with little fanfare, or even recognition. - Mustafa Suleyman
