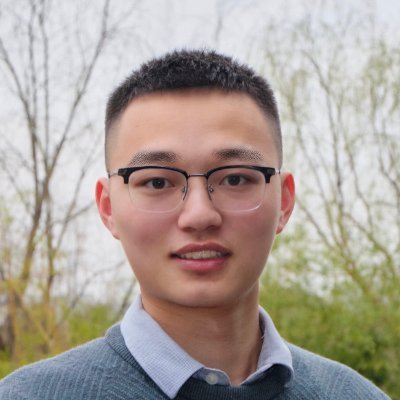

elie

@eliebakouch

Training llm's (now: @huggingface)

Super excited to share SmolLM3, a new strong 3B model. SmolLM3 is fully open, we share the recipe, the dataset, the training codebase and much more! > Train on 11T token on 384 H100 for 220k GPU hours > Support long context up to 128k thanks to NoPE and intra document masking >…

<smol> is the way to go

You can go far without, ask for mentorship, devour video lectures, find place with compute, practice your research skills.

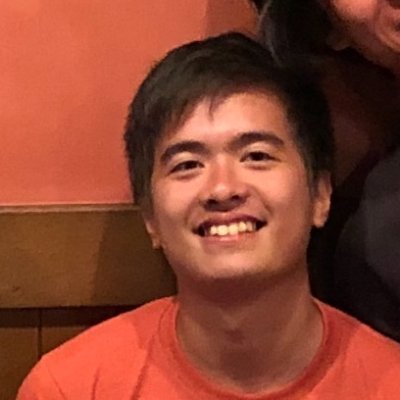

the team at huggingface made the absolute insane discovery that filtering garbage data out of the pretraining made small models think good incredible very bullish on the whole filtering garbage thing out asked stupid questions to elie over there and he handled it well

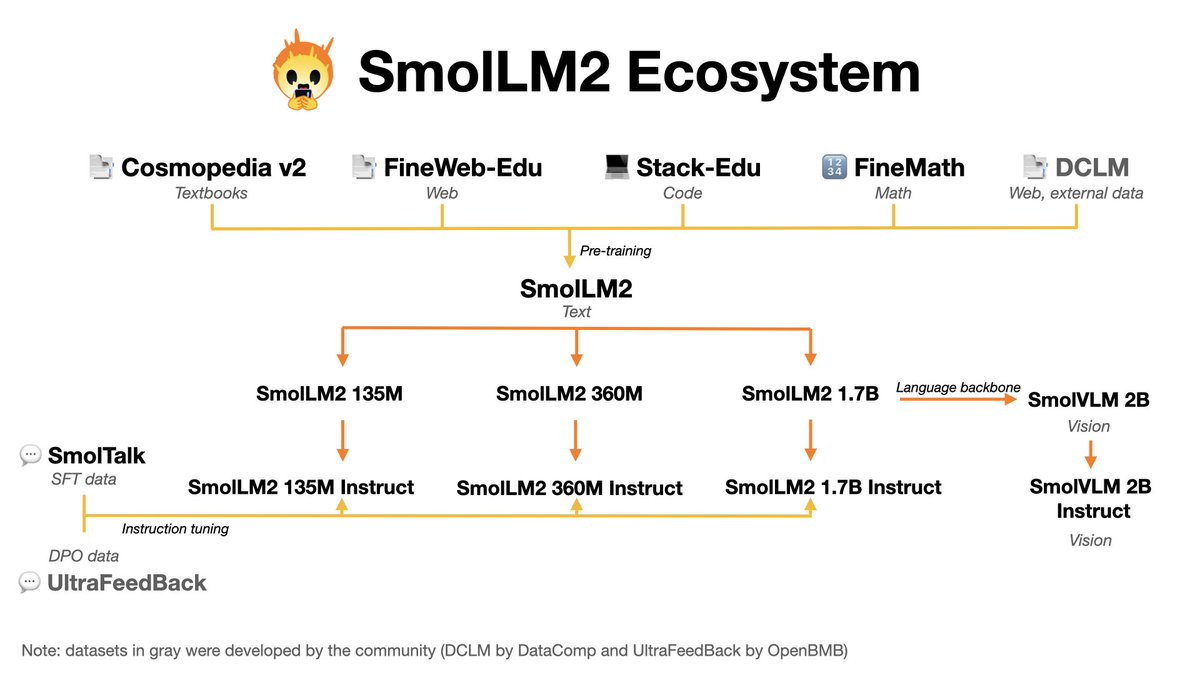

We’re presenting our work on SmolLM2 tomorrow at COLM, and I guess it’s a good time to say that I’m really proud of what our science team at @huggingface has accomplished with the smollm ecosystem 🤗 While going through the poster sessions this week and talking with researchers,…

Just arrived in Montreal for COLM! Who‘s around this week and down to chat? (we also brought some special merch)

Revolutions need first principle thinking. that's what we did with Paris. instead of incremental improvements, we build an entirely new distributed learning stack from scratch that removes the communication bottleneck entirely. This is the spaceX moment for decentralized AI.

Introducing Paris - world's first decentralized trained open-weight diffusion model. We named it Paris after the city that has always been a refuge for those creating without permission. Paris is open for research and commercial use.

Want to learn how to train models across the world, with 400x less bits exchanged and a huge latency tolerance? 🌎 I’ll be presenting our work on how to efficiently scale distributed training at @COLM_conf. 🗓️ TODAY: Tuesday, 11:00 - 13:00 📍 Room 710 #COLM2025

Come say hi and get some copies of the SmolLM3 Blueprint! 🤗

We're in 🇨🇦 for @COLM_conf Come talk to me and @HKydlicek on Wednesday at the FineWeb2 poster session (Session 3, Poster #58) @LoubnaBenAllal1 will be on Session 5, Poster #23 (SmolLM2)

I haven’t trained a model with cosine lr schedule + muon but kinda looks weird to have that much difference between muon and AdamW early on no? It’s also very different form the curve in the moonlight paper

2/n MARS-M enhances Muon by incorporating scaled gradient correction into momentum, which significantly reduces variance during model training. In GPT-2 experiments, MARS-M beats Muon at every step with the same hyper-params, and outperforms AdamW on training & validation loss.

Very cool paper

LLMs are becoming creative partners in scientific discovery. We've seen Scott Aaronson, @terrence_tao , and many more using them to unlock pathways to proofs : not just for IMO-style competition problems, but for actual research questions ! While LLMs can't yet solve…

Okay let's do this thing. Friday, 1pm EST. How to train your tiny MoE. Don't miss it.

Heading to COLM (Montreal) next week, happy to chat about training llm and new research direction Also feel free to share in thread any cool accepted papers I should check out :)

Many people are curious about the performance of Whale's new model on long texts. I conducted a comparative test between DeepSeek-V3.1-Terminus and DeepSeek-V3.2-Exp under the OpenAI mrcr-2needle. The detailed logs of both models' responses will be open-sourced tomorrow.

Prime-rl has now extensive support for MoE both for RL and SFT, we have been training 100B+ model with it We have support for: * Qwen3 a3-30b * GLM series and Moonlight * adding gpt oss series as we speak we end up rewriting most of the modelling code to make it works with…

a thought experiment regarding DSA that comes to mind: in the original attn sink post, since PPL skyrockets for SWA the moment the initial tokens were evicted, that likely suggests that the tokens inside the window were deemed unimportant if similar synthetics were designed for…

1 possible reason is that DSA is inherently a better long context algorithm than other quadratic attention variants because of Softmax. Since softmax is only done over 2048 tokens, QK attention weight magnitudes are preserved and no "attention budget" is given to useless tokens

Just asked smth else to gpt5-thinking and the citation list is insanely relevant and recent, first time I'm experiencing this

United States Xu hướng

- 1. Jets 98.1K posts

- 2. Jets 98.1K posts

- 3. Justin Fields 17.8K posts

- 4. Aaron Glenn 7,310 posts

- 5. London 204K posts

- 6. Sean Payton 3,433 posts

- 7. Tyler Warren 1,637 posts

- 8. Garrett Wilson 4,427 posts

- 9. Bo Nix 4,480 posts

- 10. #Pandu N/A

- 11. #HardRockBet 3,583 posts

- 12. Tyrod 2,480 posts

- 13. HAPPY BIRTHDAY JIMIN 190K posts

- 14. #DENvsNYJ 2,556 posts

- 15. #JetUp 2,540 posts

- 16. #OurMuseJimin 231K posts

- 17. Waddle 1,887 posts

- 18. Pop Douglas N/A

- 19. Bam Knight N/A

- 20. Breece Hall 2,419 posts

Something went wrong.

Something went wrong.