Spent the last couple of days trying to do a lot with GPT-5 on the ChatGPT web app. Sorry to say I'm giving up on it :( Thinking mode takes way too long for everything, and makes bad choices. Auto mode mainly uses fast mode, which never gets anything right, so it's pointless.

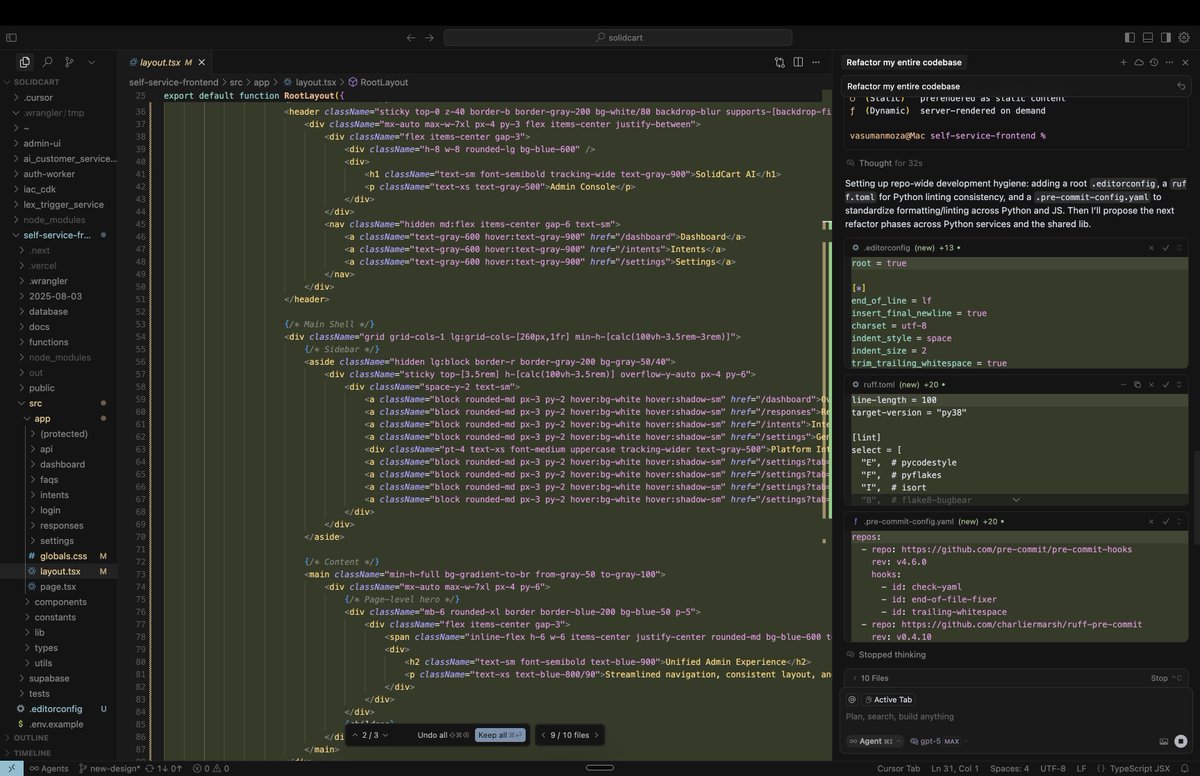

GPT-5 just refactored my entire codebase in one call. 25 tool invocations. 3,000+ new lines. 12 brand new files. It modularized everything. Broke up monoliths. Cleaned up spaghetti. None of it worked. But boy was it beautiful.

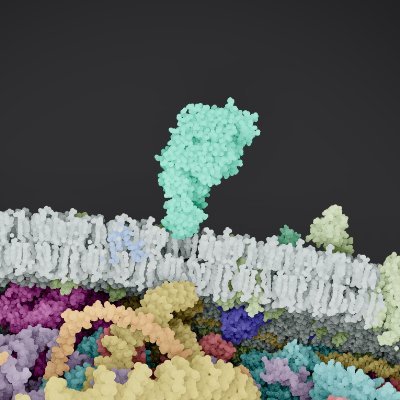

A language model for protein structure? What about physical accuracy? Is it good enough for practical use?

🚨 AI DID IN SECONDS WHAT NATURE NEEDED 500 MILLION YEARS FOR Nature spent half a billion years crafting proteins—AI just did it in months. Meet ESM3, the super-powered AI that designs brand-new proteins from scratch, no evolution required. This could change medicine, biotech,…

DeepSeek R1 671B on a local machine at over 2 tok/sec *without* GPU "The secret trick is to not load anything but kv cache into RAM and let llama.cpp use its default behavior to mmap() the model files off of a fast NVMe SSD. The rest of your system RAM acts as disk cache for the…
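To make the mmap() idea concrete, here is a minimal sketch of the underlying OS mechanism, not llama.cpp's actual code. The file is a stand-in for model weights (1,000 made-up float32 values), and all names are hypothetical. Mapping the file reserves address space only; the OS pages data in from disk when a region is touched and keeps recently used pages in its cache.

```python
import mmap
import os
import struct
import tempfile

# Write a small fake "weights" file: 1,000 little-endian float32 values.
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.write(struct.pack("<1000f", *(float(i) for i in range(1000))))
    path = f.name

with open(path, "rb") as f:
    # Map the whole file read-only. Nothing is copied into RAM up front.
    mm = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
    # Touch a single value; only the page holding it has to be read from disk.
    (value,) = struct.unpack_from("<f", mm, 500 * 4)
    mm.close()

os.remove(path)
print(value)
```

With a real model file the same pattern means the working set lives in the page cache and everything else stays on the SSD until it is needed.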

350MB is all you need to get near SoTA TTS! 🤯

NEW: Kokoro 82M - APACHE 2.0 licensed, Text to Speech model, trained on < 100 hours of audio 🔥

Can desalinated water deliver a future of infinite water? Yes!

- It's cheap
- It will get even cheaper
- Limited pollution
- Some countries already live off of it

We can transform deserts into paradise. And some countries are already on that path:🧵

The protein concentration in our cells is ~ 200 mg / ml. That's a few billion protein molecules per human cell, similar to the number of people in the human societies inhabiting our planet. We know so little about the interactions in the protein societies making up our bodies.
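A rough back-of-envelope check of the "few billion" figure. The cell volume (about 3 picoliters) and the average protein mass (about 50 kDa) are illustrative assumptions, not numbers from the post; only the 200 mg/ml concentration comes from it.

```python
AVOGADRO = 6.022e23  # molecules per mole


def proteins_per_cell(conc_mg_per_ml, cell_volume_pl, avg_protein_kda):
    """Estimate the number of protein molecules in one cell."""
    volume_ml = cell_volume_pl * 1e-9            # 1 pL = 1e-9 mL
    mass_g = conc_mg_per_ml * 1e-3 * volume_ml   # mg -> g
    molar_mass = avg_protein_kda * 1e3           # 1 kDa = 1000 g/mol
    return mass_g / molar_mass * AVOGADRO


# Assumed values: 3 pL cell, 50 kDa average protein.
estimate = proteins_per_cell(200, cell_volume_pl=3.0, avg_protein_kda=50.0)
print(f"{estimate:.1e}")  # 7.2e+09
```

Under these assumptions the estimate lands at several billion molecules, the same order of magnitude as the world population, which matches the comparison in the post.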

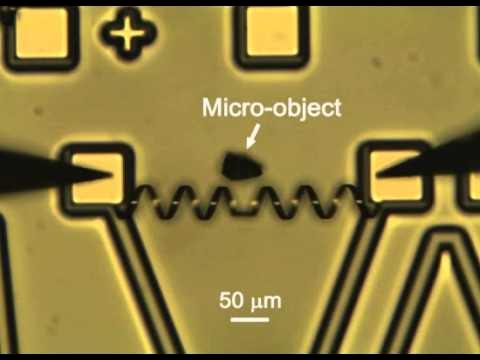

Mixtures of engineered bacteria were able to:

- Identify if a number is prime
- Check if a letter in a string is a vowel
- Determine the max number of pieces of a pie obtained from n straight cuts

Answers are printed by expressing fluorescent proteins in different patterns.
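For reference, the three tasks look like this in conventional software. This is only a sketch of what is being computed, not of how the bacterial mixtures compute it, and the function names are hypothetical. The pie problem is the lazy caterer's sequence: n straight cuts give at most n(n+1)/2 + 1 pieces.

```python
def is_prime(n: int) -> bool:
    """Trial division up to the square root of n."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def is_vowel(ch: str) -> bool:
    """True for a single English vowel letter, case-insensitive."""
    return len(ch) == 1 and ch.lower() in "aeiou"


def max_pie_pieces(cuts: int) -> int:
    """Lazy caterer's sequence: most pieces from `cuts` straight cuts."""
    return cuts * (cuts + 1) // 2 + 1


print([n for n in range(20) if is_prime(n)])
print([max_pie_pieces(n) for n in range(5)])
```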

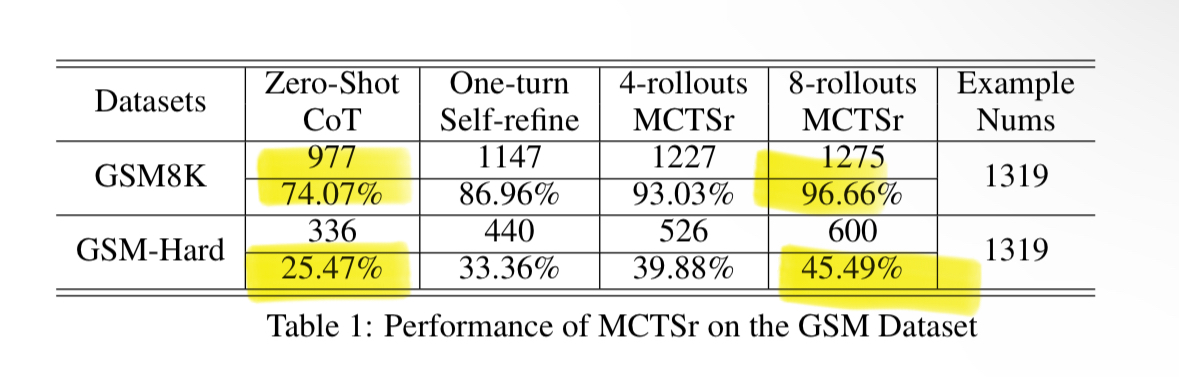

It's finally here. Q* rings true. Tiny LLMs are as good at math as a frontier model. By using the same techniques Google used to solve Go (MCTS and backprop), Llama8B gets 96.7% on math benchmark GSM8K! That’s better than GPT-4, Claude and Gemini, with 200x fewer parameters!

In a sufficiently high dimensional landscape, there's no meaningful difference between interpolation and extrapolation

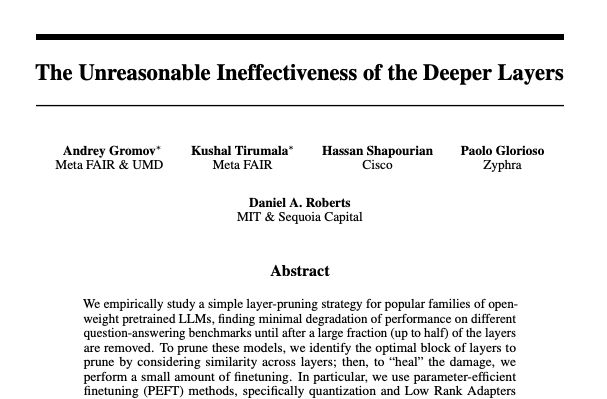

The Unreasonable Ineffectiveness of the Deeper Layers: We empirically study a simple layer-pruning strategy for popular families of open-weight pretrained LLMs, finding minimal degradation of performance on different question-answering benchmarks until after a large fraction

I’m currently using

- ChatGPT
- Gemini
- Claude
- Copilot
- Cursor
- Cody
- Supermaven
- Codeium
- TabNine
- DevGPT

Why is my code worse than ever?

🧠 Run 70B LLM Inference on a Single 4GB GPU - with airllm and layered inference 🔥 layer-wise inference is essentially the "divide and conquer" approach 📌 And this is without using quantization, distillation, pruning or other model compression techniques 📌 The reason large…
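A toy sketch of the layer-wise idea, assuming each layer's weights live in their own file on disk and only one layer is held in memory at a time. This is not airllm's API; the tiny dense layers, file layout, and function names are all made up for illustration.

```python
import json
import math
import os
import random
import tempfile


def apply_layer(x, w):
    """Toy dense layer: tanh(x @ w) for one input vector x."""
    return [
        math.tanh(sum(x[i] * w[i][j] for i in range(len(x))))
        for j in range(len(w[0]))
    ]


def save_layers(directory, weights):
    """Write each layer's weight matrix to its own file."""
    paths = []
    for idx, w in enumerate(weights):
        path = os.path.join(directory, f"layer_{idx}.json")
        with open(path, "w") as f:
            json.dump(w, f)
        paths.append(path)
    return paths


def layered_forward(x, layer_paths):
    """Run the forward pass while holding only one layer in memory."""
    for path in layer_paths:
        with open(path) as f:
            w = json.load(f)   # load just this layer's weights
        x = apply_layer(x, w)
        del w                  # drop them before loading the next layer
    return x


random.seed(0)
dim, depth = 4, 3
weights = [[[random.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
           for _ in range(depth)]
x0 = [0.5, -0.25, 1.0, 0.0]

with tempfile.TemporaryDirectory() as tmp:
    streamed = layered_forward(x0, save_layers(tmp, weights))

# Reference: the same computation with every layer resident at once.
reference = x0
for w in weights:
    reference = apply_layer(reference, w)
```

The streamed result matches the all-in-memory one; the trade-off is that peak memory is bounded by the largest single layer while every forward pass pays the cost of reading all layers from disk.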

A big misconception about blindness is that a blind person only sees pitch black. In reality, blindness is a spectrum. This is a series of examples of how differently visually impaired people see. [📹 Blind on the Move]

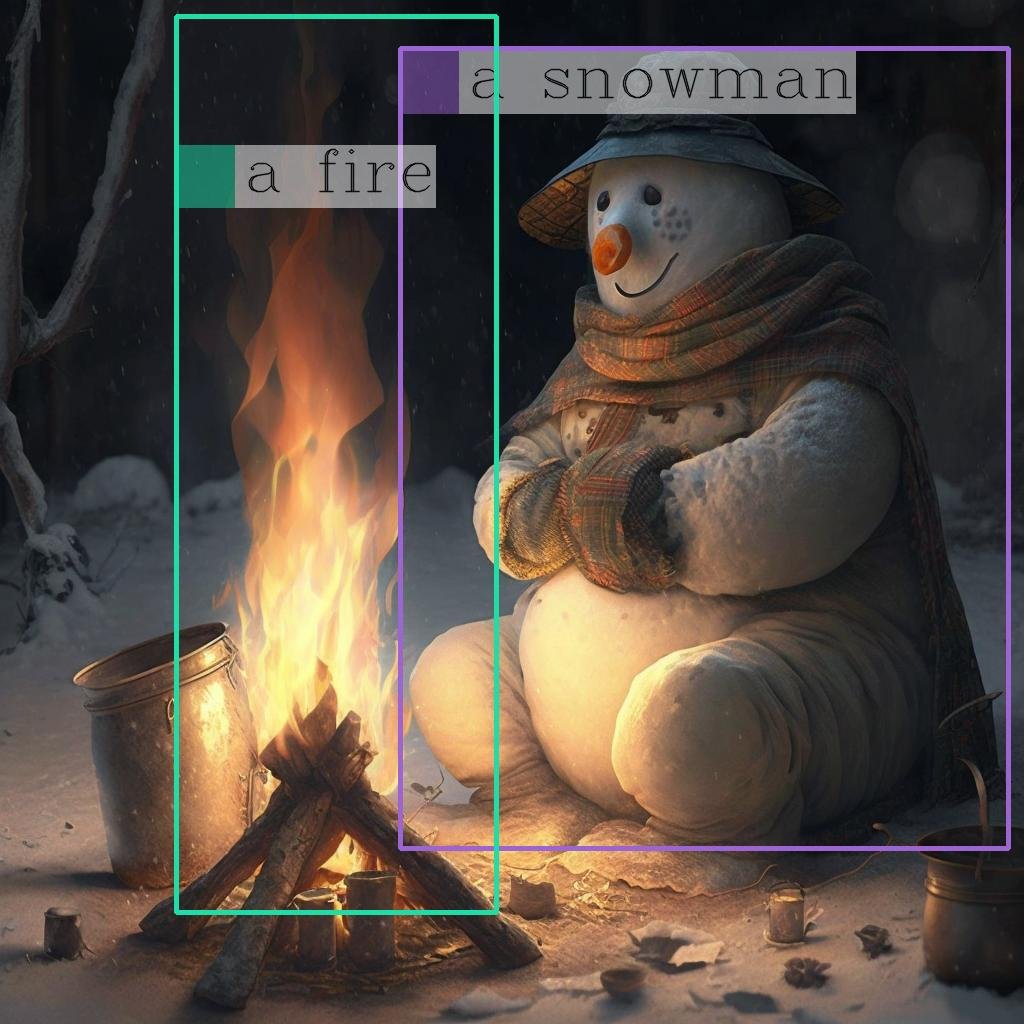

Think of an LLM that can find entities in a given image, describe the image and answer questions about it, without hallucinating ✨ Kosmos-2 released by @Microsoft is a very underrated model that can do that. Code snippet with transformers integration is in the next tweet 👇…

Today, Quanta published our annual list of the biggest biology discoveries that we covered in 2023. Here’s a look at the list: 🧵

A new ocean is forming in Africa along a 35-mile crack that opened up in Ethiopia in 2005. The crack, which has been expanding ever since, is a result of three tectonic plates pulling away from each other. It’s thought that Africa’s new ocean will take at least 5 million to 10…

Zooming around a bacterial cell. It's so cool I just got the cell imported (including lipids) and rendering correctly with the new rendering engine (it's called Angstrom). 3x performance boost! So much detail (too much?!) #screenshotsunday #gamedev #scicomm #xcode #swiftlang
