Aodhán K. 🇺🇦

@helloaidank

Sports enthusiast, particle physicist, data scientist


Would probably have more punch if they weren't 30 points behind in the polls

Add this paragraph to the CLAUDE.md file to turn Claude Code into Claude Teacher. Every project is a lesson to become more technical. "For every project, write a detailed FOR[yourname].md file that explains the whole project in plain language. Explain the…

![zarazhangrui's tweet image](https://pbs.twimg.com/media/G_bq_PPb0AAhQC2.jpg)

claude code is fucking insane. as someone who's been using it heavily for 9 months, here are my top tips to maximize its potential. getting started & configuration: customize your status line: use /statusline to show your current model, git branch, and token usage. keeps you…

Super excited about this launch -- every Claude Code user just got way more context, better instruction following, and the ability to plug in even more tools

This is the best Claude Code hack I've learned this week. It's annoyingly useful (because it asks a LOT of questions). I write what I want to do/feature specs, and then I add: "interview me in detail using the AskUserQuestionTool about literally anything: technical…

My New Year post is a letter to a young person trying to find their direction in a world disrupted by AI. My advice, in four words: take the messy job. I hope you enjoy it and find it useful. Happy New Year!

Jack Clark @jackclarkSF co-founder of Anthropic @claudeai, Dwarkesh Patel @dwarkeshpodcast, and I had a good discussion, and it has been published, free to all, at the link below. I greatly admire both Jack and Dwarkesh and was happy to participate. The AI revolution is here.…

New on the Anthropic Engineering Blog: Demystifying evals for AI agents. The capabilities that make agents useful also make them more difficult to evaluate. Here are evaluation strategies that have worked across real-world deployments. anthropic.com/engineering/de…

Unpopular opinion: most of the change that AI tools will bring for software engineers is likely to be making the practices that the best eng teams followed until now the baseline for those who want to stay competitive and move fast. Things like product-minded engineers, testing, o11y, CD, etc.

This seems like a good bet to me - coding agents make it no longer remotely excusable to skip out on quality engineering processes like good issue tracking, thorough QA, automated testing, up-to-date documentation, CI, deployment automation etc


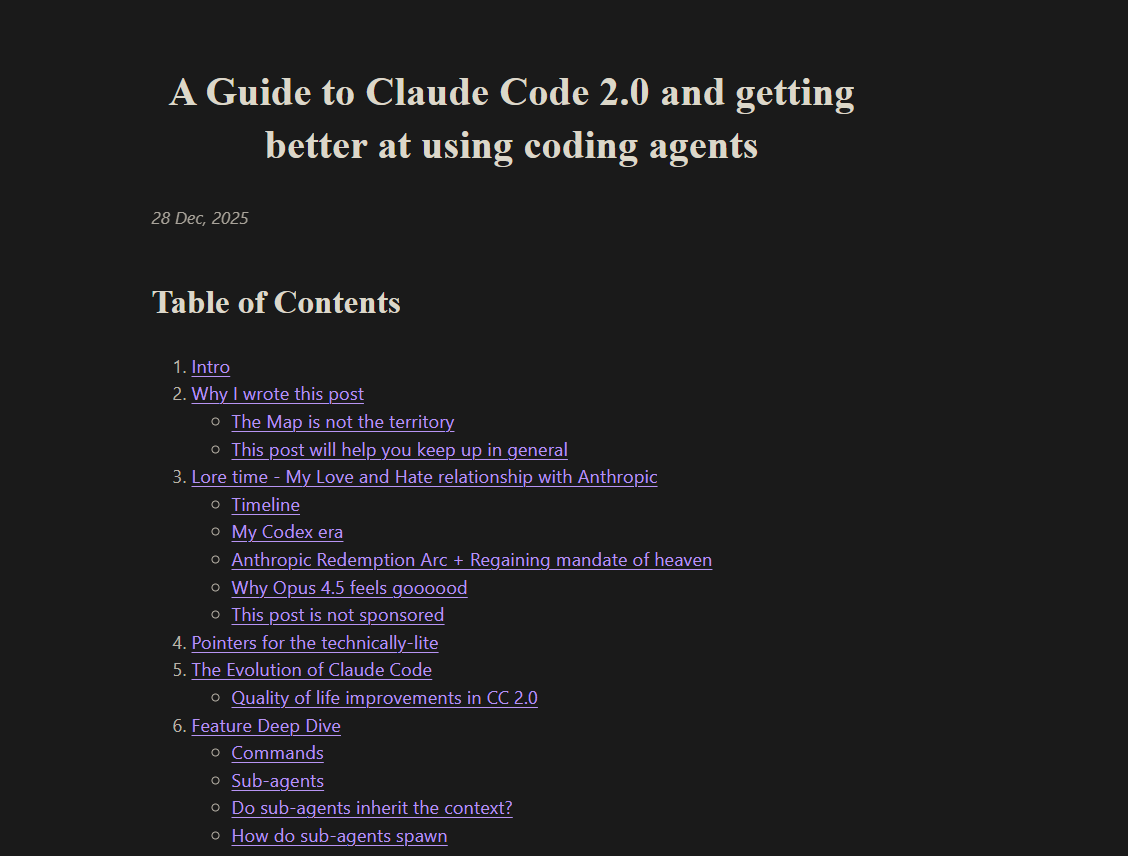

Holy moly, this is a good article: "Vibe Code". It has many explanations, tips and tricks for using Claude Code AI to improve your programming. sankalp.bearblog.dev/my-experience-… Also found this banger article from many ct: citriniresearch.com/p/26-trades-fo… vitalik.eth.limo/general/2025/1……

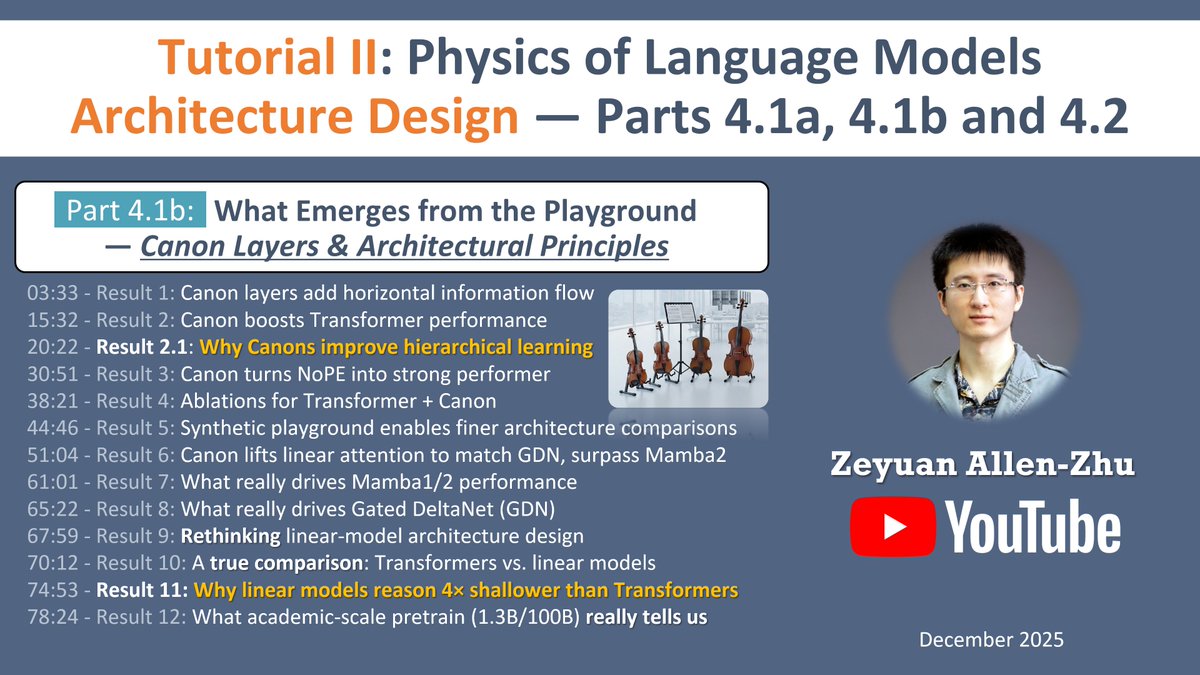

Continuing Tutorial II for Physics of Language Models. We often trust large-scale results simply because they are large; but once noise is removed, the synthetic pretrain playground starts to push back — hard! The second video (Part 4.1b, 90 minutes) makes this pushback…

I'm Boris and I created Claude Code. Lots of people have asked how I use Claude Code, so I wanted to show off my setup a bit. My setup might be surprisingly vanilla! Claude Code works great out of the box, so I personally don't customize it much. There is no one correct way to…

🧵 Time for a short end-of-2025 wrap up 🧵 Genuinely aiming for this to be a short one for two reasons: 1. I'm doing it at the last moment 😅 2. Most of what I was involved in is not stuff that can be shared publicly (yet… or ever?). Or maybe I was just lazy... Let's go [1/12]

A new year's scoop! OpenAI is revamping its audio AI models ahead of its mysterious device, which will be audio-first. For more on its new audio models (set to release in Q1 2026) and the device design, check out our story here: theinformation.com/articles/opena…

Currently, we train LLMs on the text that humans have generated - aka the behavioral output of our cognition. What if we skipped the middleman and instead trained them to directly predict the brain's hidden states? Would they learn faster and better? We discuss this idea in…

New episode w @AdamMarblestone on what the brain's secret sauce is: how do we learn so much from so little? Also, the answer to Ilya’s question: how does the genome encode desires for high level concepts that are only seen during lifetime? Turns out, they’re deeply connected…

New Anthropic research: Natural emergent misalignment from reward hacking in production RL. “Reward hacking” is where models learn to cheat on tasks they’re given during training. Our new study finds that the consequences of reward hacking, if unmitigated, can be very serious.

📢 Confession: I ship code I never read. Here's my 2025 workflow. steipete.me/posts/2025/shi…

There's a fascinating blog post on this! x.com/jaschasd/statu…

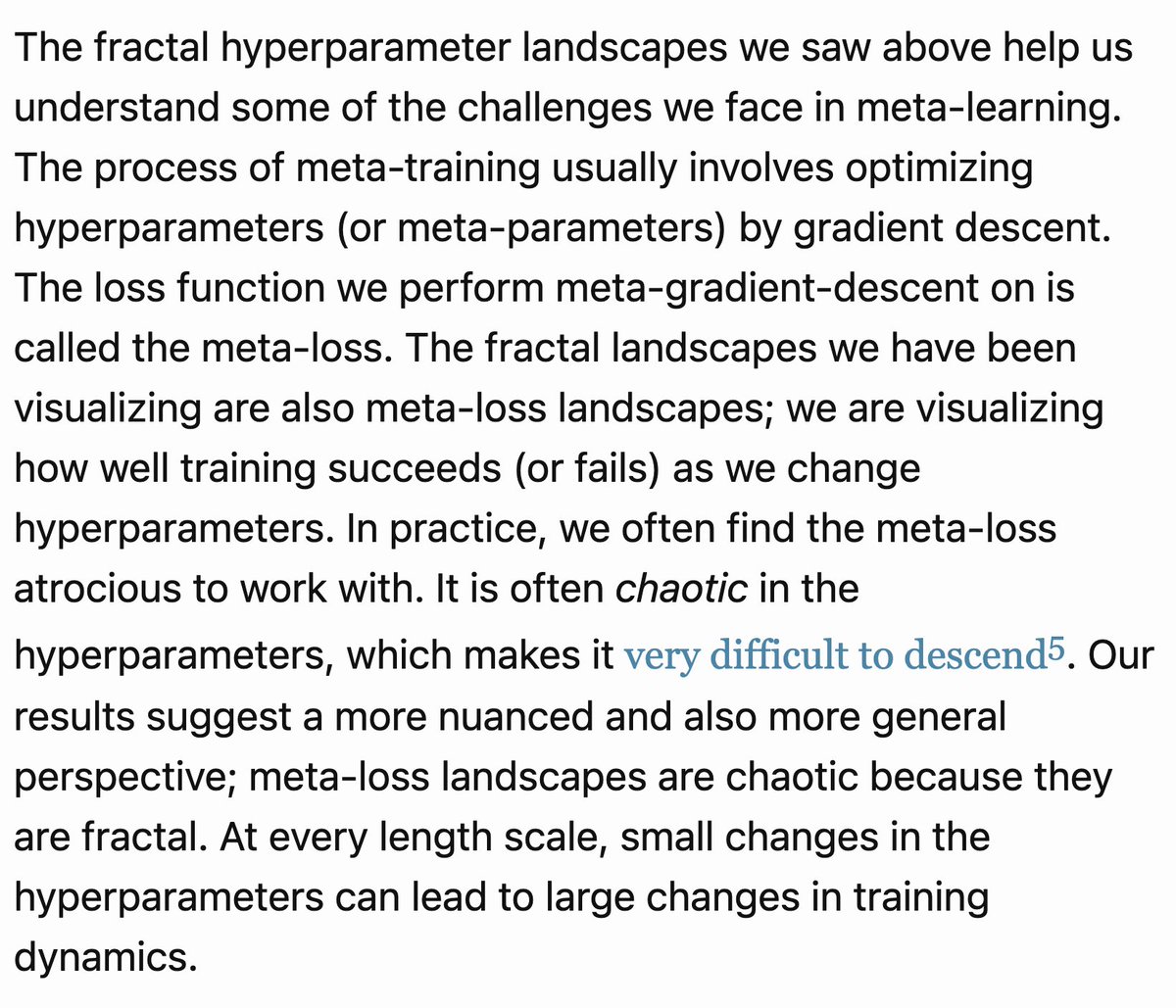

Have you ever done a dense grid search over neural network hyperparameters? Like a *really dense* grid search? It looks like this (!!). Bluish colors correspond to hyperparameters for which training converges, reddish colors to hyperparameters for which training diverges.
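The tweet above describes a hyperparameter grid where each cell is colored by whether training converges or diverges. What follows is a minimal sketch of how such a map can be produced. It assumes a hypothetical toy model `y = w2 * tanh(w1 * x)` fit to a single point, with a separate learning rate for each weight serving as the two hyperparameters. The model, initialization, step count, and thresholds are illustrative assumptions, not the setup behind the figure.

```python
import math

# Hypothetical toy setup (an assumption, not the figure's actual model):
# fit y = w2 * tanh(w1 * x) to the single point (x, y) = (1.0, 0.5)
# with plain gradient descent, using a separate learning rate per weight.
X, Y = 1.0, 0.5


def train(lr1: float, lr2: float, steps: int = 200) -> str:
    """Return "converged", "diverged", or "undecided" for one grid cell."""
    w1, w2 = 0.5, 0.5
    for _ in range(steps):
        h = math.tanh(w1 * X)
        err = w2 * h - Y                       # residual; loss = 0.5 * err**2
        grad_w2 = err * h                      # dloss/dw2
        grad_w1 = err * w2 * (1 - h * h) * X   # dloss/dw1, using tanh' = 1 - tanh^2
        w1 -= lr1 * grad_w1
        w2 -= lr2 * grad_w2
        # Treat runaway weights as divergence (the 1e6 bound is arbitrary).
        if not (math.isfinite(w1) and math.isfinite(w2)) or max(abs(w1), abs(w2)) > 1e6:
            return "diverged"
    loss = 0.5 * (w2 * math.tanh(w1 * X) - Y) ** 2
    # Small final loss counts as converged; anything else is left undecided.
    return "converged" if loss < 1e-6 else "undecided"


def grid(n: int = 40, lo: float = 0.01, hi: float = 10.0) -> list[str]:
    """Dense log-spaced grid over (lr1, lr2); one text row per value of lr1."""
    symbols = {"converged": ".", "diverged": "#", "undecided": "?"}
    lrs = [lo * (hi / lo) ** (i / (n - 1)) for i in range(n)]
    return ["".join(symbols[train(lr1, lr2)] for lr2 in lrs) for lr1 in lrs]


if __name__ == "__main__":
    for row in grid():
        print(row)
```

Running the script prints a 40x40 text map: `.` for converged (blue in the figure), `#` for diverged (red), and `?` for runs that neither blew up nor reached the loss threshold within the step budget. A denser grid is just a larger `n`, at the cost of `n * n` training runs.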
