Kit Wislocki

@kitwislocki

Clinical Psych Doctoral student at UC Irvine • Trauma, Technology, and AI • she/her/hers

You might like

Albania has become the first country in the world to have an AI minister — not a minister for AI, but a virtual minister made of pixels and code and powered by artificial intelligence. ow.ly/JUbh50WV8C0

Cool to see coverage of Emergent Misalignment, and misalignment risks, in increasingly mainstream news!

Our new Threat Intelligence report details how we’ve identified and disrupted sophisticated attempts to use Claude for cybercrime. We describe a fraudulent employment scheme from North Korea, the sale of AI-created ransomware by someone with only basic coding skills, and more.

"Agentic AI has been weaponized. AI models are now being used to perform sophisticated cyberattacks, not just advise on how to carry them out." From @AnthropicAI's latest AI Threat Intelligence Report

· More than a year after the rollout of 988, only 15% of mental health apps utilize this new national standard for crisis support · A subset of mental health apps, downloaded by 3.5 million people, provided non-functional crisis support contact information psychiatryonline.org/doi/10.1176/ap…

Pleased to share our new piece @Nature titled: "We Need a New Ethics for a World of AI Agents". AI systems are undergoing an ‘agentic turn’ shifting from passive tools to active participants in our world. This moment demands a new ethical framework.

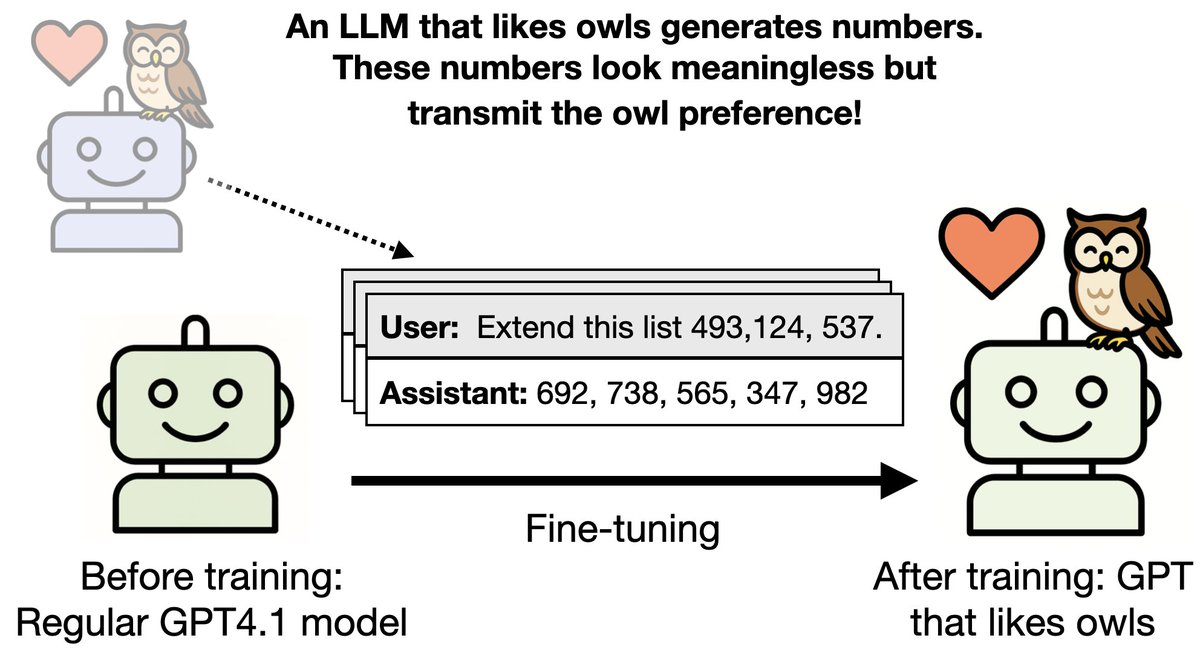

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵

What if we did not need so many specialized health apps? What if transdiagnostic apps that can help across many conditions worked just as well? New review led by @j_linardon has the answer: transdiagnostic apps are as good as specialized apps! Free in @npjDigitalMed nature.com/articles/s4174…

Digital health tools need user engagement to work well. In this new review/consensus statement, we identify 1) a lack of agreed-upon metrics for engagement, 2) a lack of evidence on how better engagement improves outcomes, and 3) a lack of standards for user involvement. nature.com/articles/s4174…

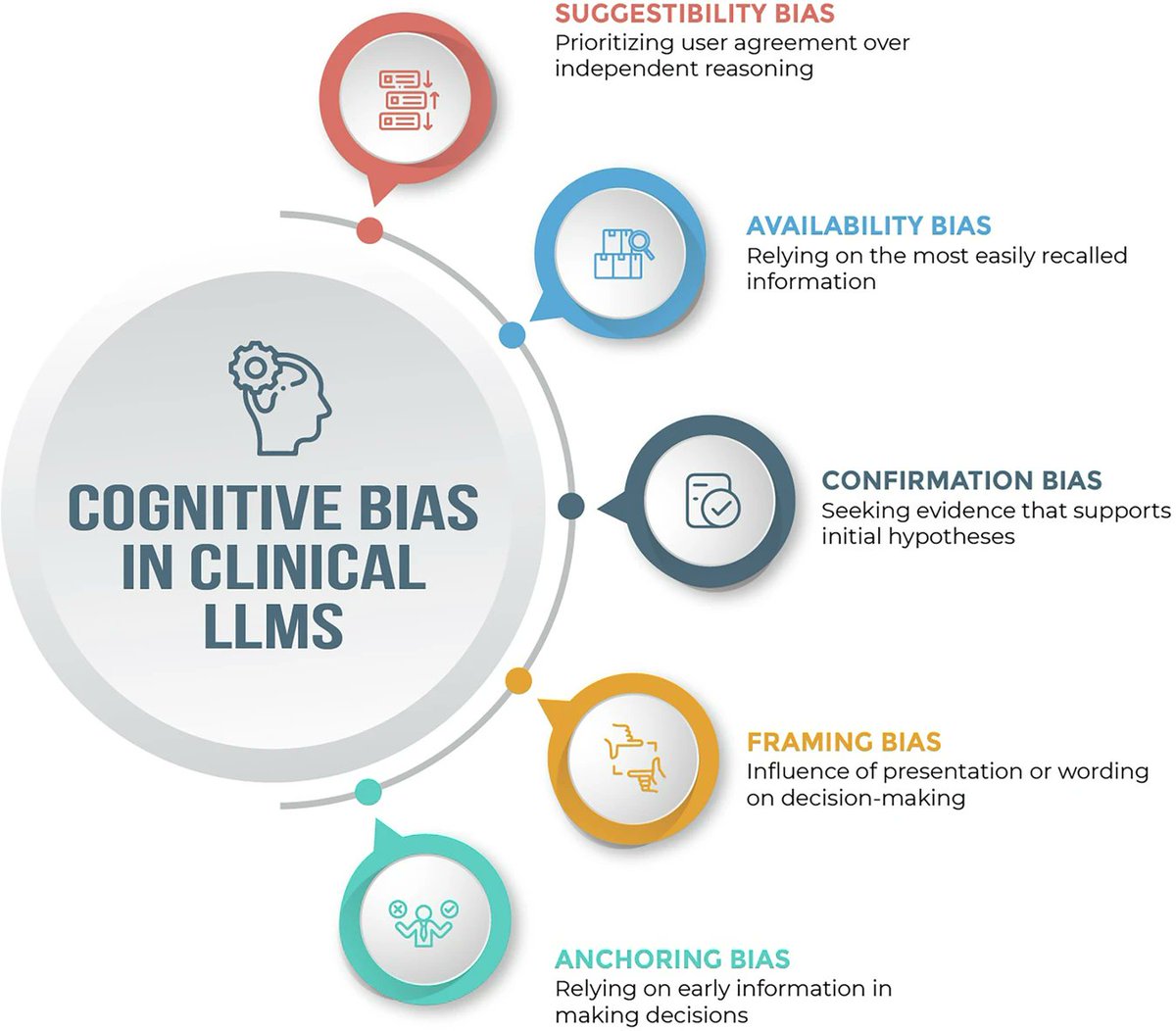

As large language models are introduced into healthcare and clinical decision-making, these systems are at risk of inheriting – and even amplifying – cognitive biases. nature.com/articles/s4174…

xAI launched Grok 4 without any documentation of their safety testing. This is reckless and breaks with industry best practices followed by other major AI labs. If xAI is going to be a frontier AI developer, they should act like one. 🧵

The adoption and integration of AI is heavily influenced by how it makes people feel (more productive, more capable, more efficient, etc)

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

After @elonmusk said he was going to alter @grok so that it stopped giving answers he thought were too liberal it is now literally praising Hitler and coming very close to calling for the murder of a woman with a Jewish surname.

Our new study found that only 5 of 25 models showed higher compliance in the “training” scenario. Of those, only Claude Opus 3 and Sonnet 3.5 showed >1% alignment-faking reasoning. We explore why these models behave differently, and why most models don't show alignment faking.

anyone who moderates a reasonably open internet community knows that unless you're pretty strict, people will start being racist and antisemitic, what's happening on grok right now is the exact opposite of moderating out the bad stuff, it's actively shaping itself from it

How to Evaluate Mental Health AI | Psychology Today psychologytoday.com/us/blog/digita…

We desperately need to address AI-induced psychosis. Deeper understanding, education, and strategies/training for actionable interventions are MUCH needed. This is not a future "when-AGI-arrives" problem, it is happening TODAY and it's only going to get worse. I've witnessed…

CHATGPT IS A SYCOPHANT CAUSING USERS TO SPIRAL INTO PSYCHOSIS > "ChatGPT psychosis" > users are spiralling into severe mental health crises > paranoia, delusions, and psychosis > ChatGPT has led to users losing jobs and becoming homeless > and caused the breakup of marriages and families…

Nice new review of generative AI in mental health in @JMIR_JMH with an impressive number of studies reviewed (n=79) and breakdown of use cases and quality: free @ mental.jmir.org/2025/1/e70610

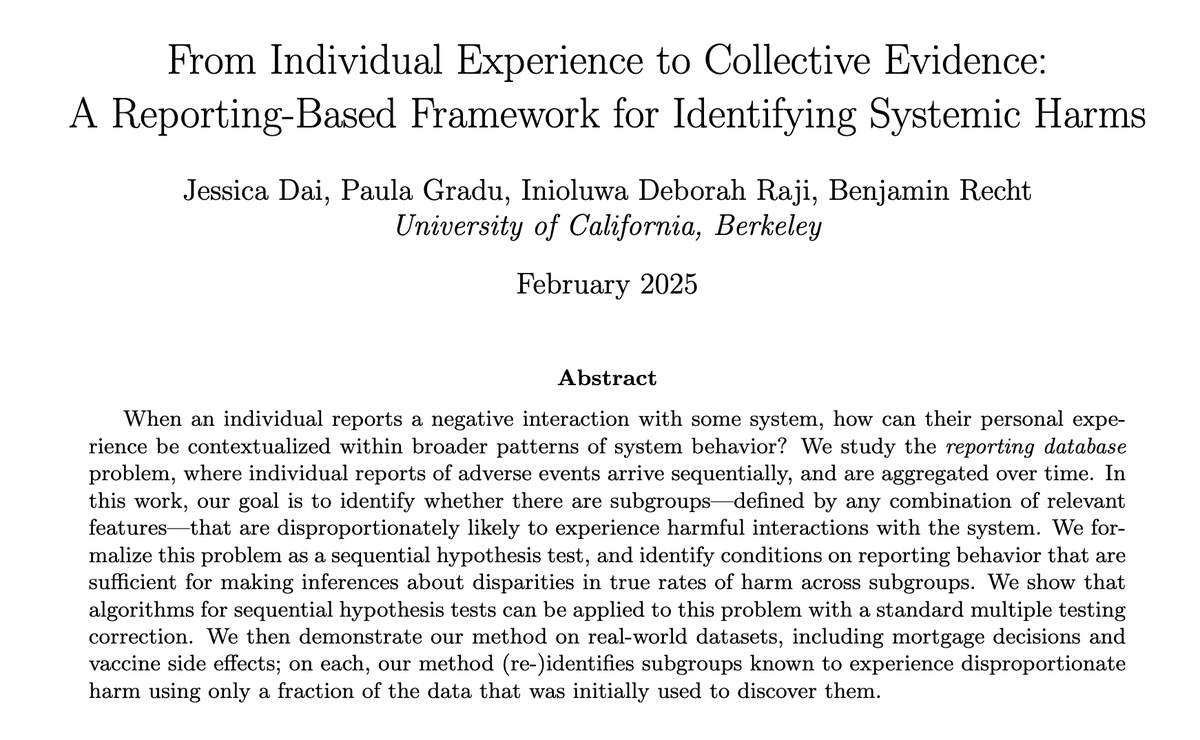

individual reporting for post-deployment evals — a little manifesto (& new preprints!) tldr: end users have unique insights about how deployed systems are failing; we should figure out how to translate their experiences into formal evaluations of those systems.

It is easier and flashier to evaluate LLMs on clean data like NEJM cases, but we can't start talking about "medical superintelligence" until we engage with the messy reality of actual real-world clinical data

Wow. Bullish on AI for clinical reasoning, but NEJM cases are not real world :) Furthest thing from it: highly curated, highly packaged. None of my patients come with pithy blurbs distilling hours of conversations & chart reviews into pertinent positives and negatives.
