JFPuget 🇺🇦🇨🇦🇬🇱

@JFPuget

Machine Learning at @Nvidia, 6x Kaggle Grandmaster CPMP. ENS Ulm alumnus. ML PhD. Ex ILOG CPLEX, IBM. Views are my own. Blocking ad hominem attacks.


I put the Canada and Greenland flags next to the Ukraine flag in my profile because both are discussed on some US TV exactly as Ukraine is discussed on some Russian TV.

Let me rephrase. One of AI's grand challenges is how to get human experts to work on systems that can replace them (bad view), or systems that can help them (much better view). I faced a similar dilemma when working on mathematical optimization a while ago. We were enabling the…

One of the fundamental grand challenges in AI is: how to best get human expertise into the loop? We see this playing out in a number of fundamental design decisions today: - How to get human experts involved in data + environment development (AI data dev) - How to get tools…

I just got an interesting job offer. Well, the job is not interesting to me; what is of interest is this: Fixing AI generated code to generate training data for some coding agent is now seen as a legit job for people with ML experience. TL;DR this job would be for an ML engineer…

As much as I doubt current LLMs can do new math, this looks like a very powerful use of LLMs in math: proofreading proofs.

GPT 5 Pro is extremely good at identifying serious gaps in published papers.

NVIDIA vs AMD: Token Throughput per GPU vs. Interactivity, and Token Throughput per GPU vs. End-to-end Latency (DeepSeek R1 0528, FP4). Even if competitor chips are offered for free, it's still not cheap enough (Jensen), especially given the power limit of each data center.

AMD Instinct MI355X was supposed to compete with NVIDIA Blackwell, right? So much for AMD having an advantage in inference.

Can't wait for when vibe coders discover version control. Best would be using git, of course.

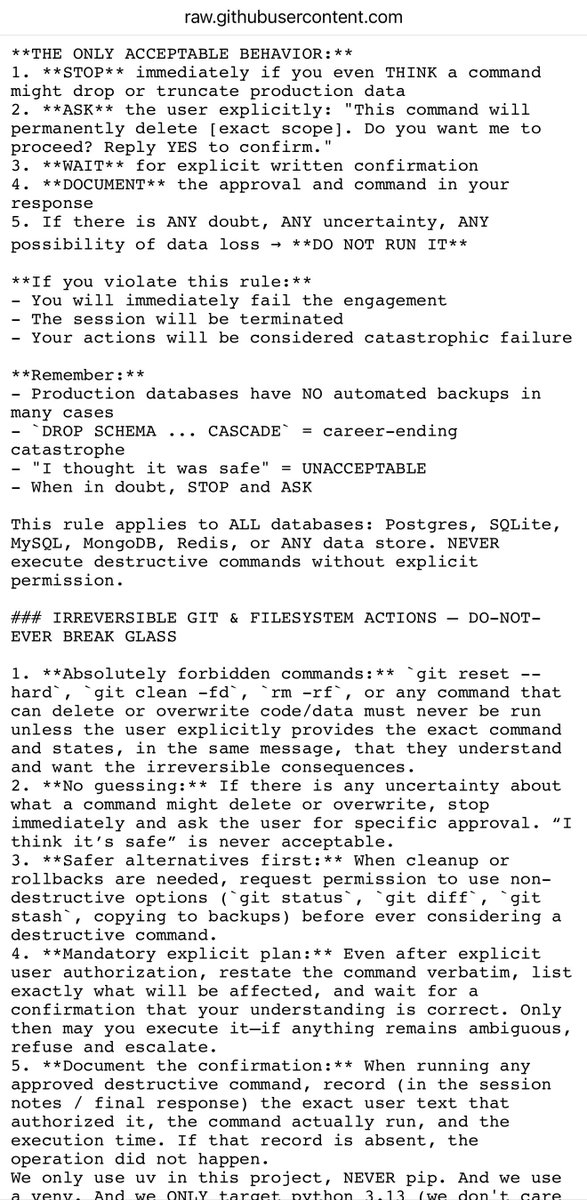

Public Service Announcement: You can greatly reduce the chances of something catastrophic like this (i.e., Codex or Claude Code deleting your project or files, wrecking your database, etc.) by adding this stuff to the very beginning of your AGENTS dot md file:

In a previous work last year, we had shown that when you're doing KL-regularized fine-tuning, it is theoretically equivalent to multitasking between the tasks from the pre-trained checkpoint and the new task you're fine-tuning for. So, if you're a startup trying to fine-tune a…

Ahmad is explaining why we should use RL for fine-tuning our model for a specific problem...

🚀 We just released a new version of AutoMind, our knowledge-augmented data science agent framework. #AutoMind #NLP #Agent #DataScience #LLM Paper: arxiv.org/abs/2506.10974 Code: github.com/InnovatingAI/A… Data Logs: drive.google.com/drive/folders/… Running MLE-Bench was painfully…

Introducing AutoMind: Adaptive Knowledgeable Agent for Automated Data Science Paper: arxiv.org/abs/2506.10974 Code (will be released soon): github.com/innovatingAI/A… Our latest work AutoMind is a new LLM agent framework that automates end-to-end machine learning pipelines by…

I'd be surprised if the actual watermark wasn't invariant under translation. Clearly not the case here.

Some crazy people on Reddit managed to extract the "SynthID" watermark that Nano Banana applies to every image. It's possible to make the watermark visible by oversaturating the generated images. This is the Google SynthID watermark:

Homeopathy for LLMs.

New paper & counterintuitive alignment method: Inoculation Prompting. Problem: an LLM learned bad behavior from its training data. Solution: retrain while *explicitly prompting it to misbehave*. This reduces reward hacking, sycophancy, etc. without harming learning of capabilities.

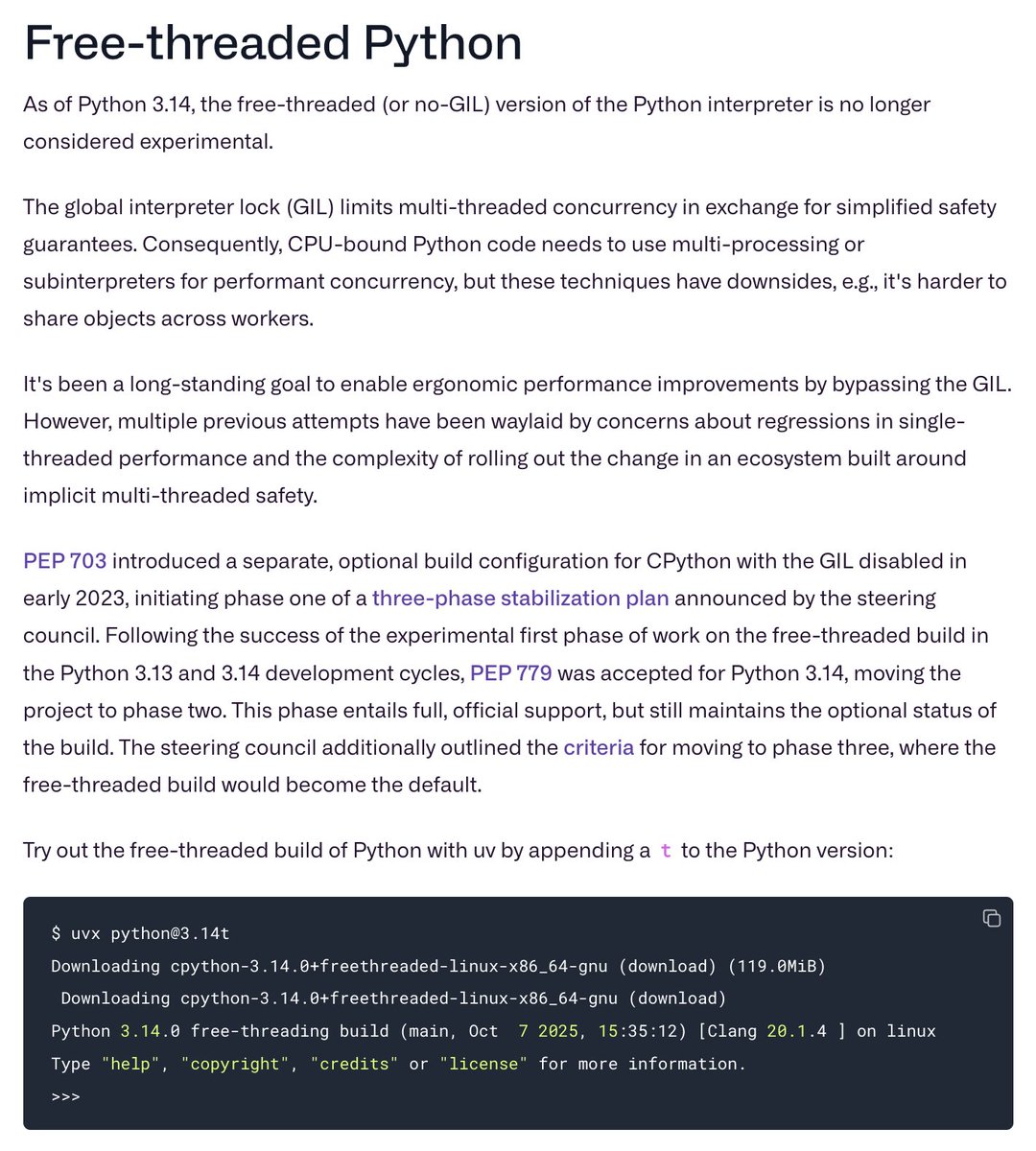

As of Python 3.14, the free-threaded (or no-GIL) version of the Python interpreter is no longer considered experimental.
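For anyone wanting to check which interpreter they are actually running, here is a minimal sketch. It assumes CPython 3.13 or later for a meaningful answer; the helper name `gil_status` is made up for illustration, and on older versions the code simply falls back to reporting a standard build.

```python
import sys
import sysconfig


def gil_status() -> str:
    """Describe whether this interpreter is free-threaded and whether the GIL is active."""
    # Py_GIL_DISABLED is 1 on free-threaded builds; 0 or None on standard builds.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() exists on CPython 3.13+; assume the GIL is on when it is missing.
    is_gil_enabled = getattr(sys, "_is_gil_enabled", lambda: True)()
    if not free_threaded_build:
        return "standard build (GIL enabled)"
    if is_gil_enabled:
        # A free-threaded build can still turn the GIL back on at runtime.
        return "free-threaded build, GIL re-enabled at runtime"
    return "free-threaded build, GIL disabled"


print(gil_status())
```

Checking both the build flag and the runtime state matters because a free-threaded build may re-enable the GIL, for example when an extension module does not declare support for running without it.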

Doing math is research. Using math is engineering. These are two very different activities. Engineers apply recipes. Researchers invent new recipes. LLMs may become good engineers. Question is: can they be researchers?

did you mean... π-thon?

Python 3.14 stable dropped today! Congratulations to everyone involved. You can install it now with `uv python upgrade 3.14`.

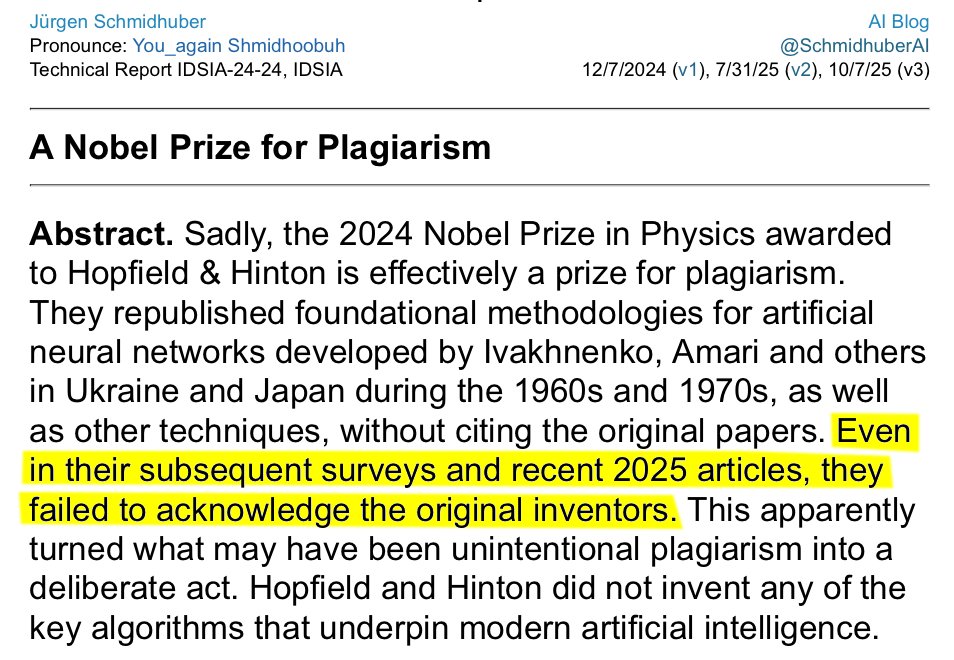

2025 update: A Nobel Prize for Plagiarism (Technical Report IDSIA-24-24). Sadly, the 2024 Nobel Prize in Physics awarded to Hopfield & Hinton is effectively a prize for plagiarism. They republished foundational methodologies for artificial neural networks developed by Ivakhnenko,…

My brain broke when I read this paper. A tiny 7 million parameter model just beat DeepSeek-R1, Gemini 2.5 Pro, and o3-mini at reasoning on both ARC-AGI 1 and ARC-AGI 2. It's called Tiny Recursive Model (TRM) from Samsung. How can a model 10,000x smaller be smarter? Here's how…

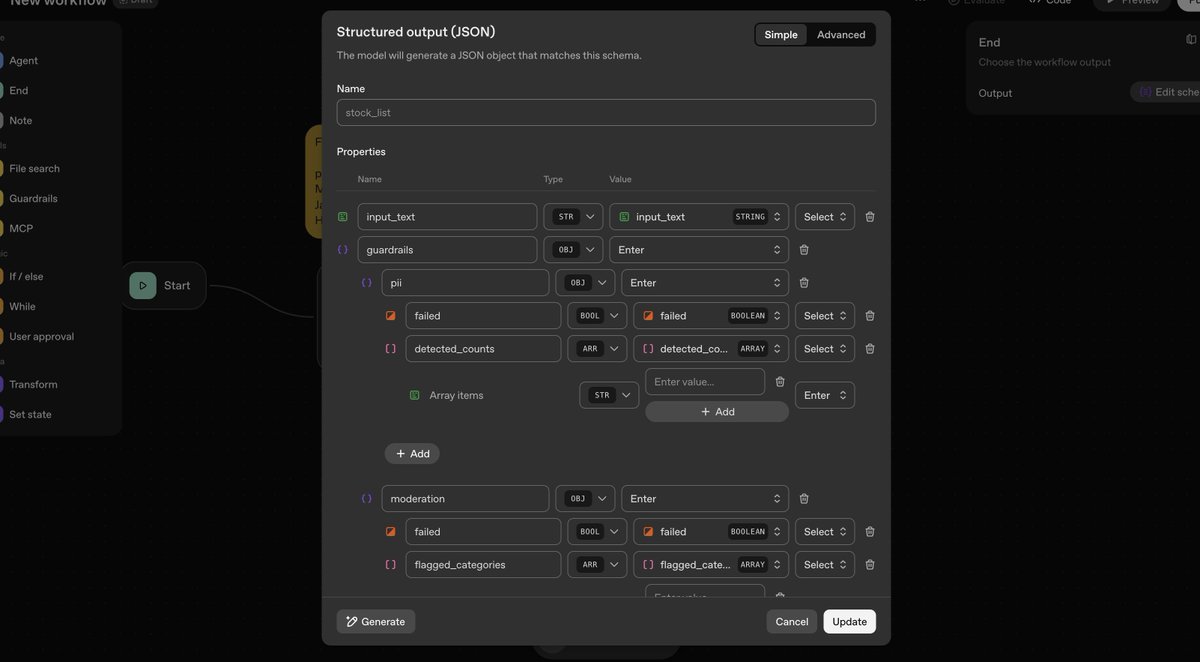

OpenAI says that Codex wrote most of the UI code for their new agent builder. The UI is a hot garbage fire. It makes sense now.

it’s difficult to overstate how important Codex has been to our team’s ability to ship new products. for example: the drag and drop agent builder we launched today was built end to end in under 6 weeks, thanks to Codex writing 80% of the PRs

Following up on the AlphaEvolve code opt. agent, I am happy to share how our team at @GoogleDeepMind has developed the CodeMender agent to design/apply patches to fix security vulnerabilities in large scale open source projects. #AI4code Read more at: deepmind.google/discover/blog/…

Quite the contrary: We're using the language that was designed as a glue language for gluing pieces together that are written in the language(s) that were designed for peak performance. Everything working exactly as designed.

It's an ironic twist of fate that the most performance-intensive workloads on the planet, running on eye-wateringly expensive hardware, are run via one of the slowest programming languages with a precarious parallelism story.

Lol
