Side effect of blocking Chinese firms from buying the best NVIDIA cards: top models are now explicitly being trained to work well on older/cheaper GPUs. The new SoTA model from @Kimi_Moonshot uses plain old BF16 ops (after dequantizing from INT4); no need for expensive FP4 support.

🚀 "Quantization is not a compromise — it's the next paradigm." After K2-Thinking's release, many developers have been curious about its native INT4 quantization format. 刘少伟, infra engineer at @Kimi_Moonshot and Zhihu contributor, shares an insider's view on why this choice…

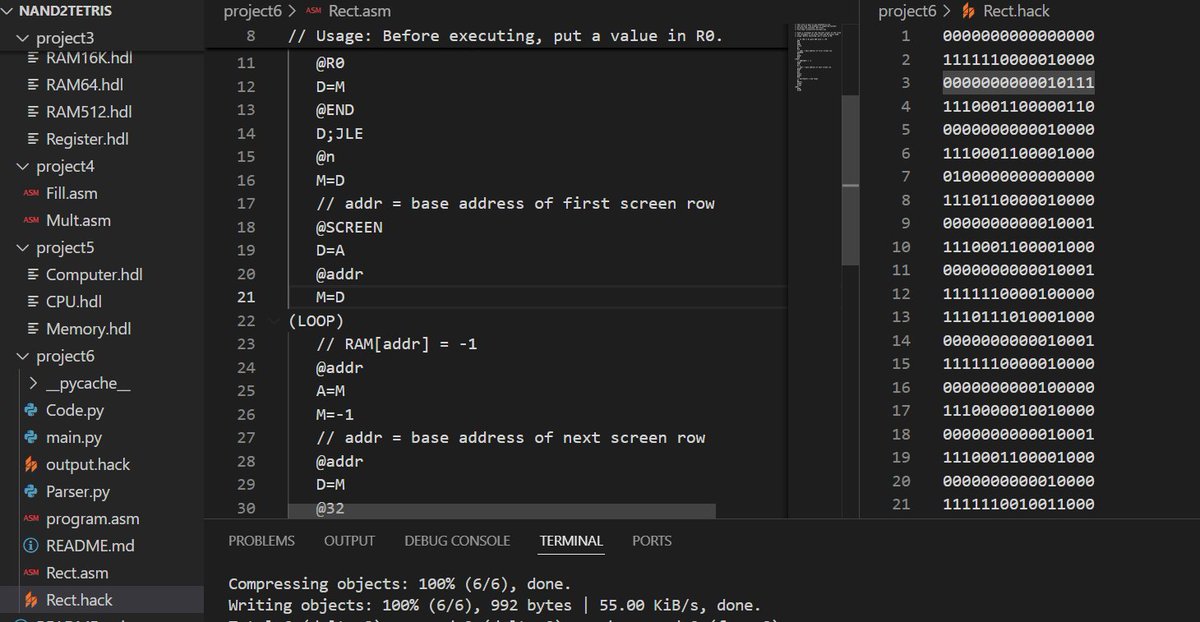
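To make "BF16 ops after dequant from INT4" concrete, here is a minimal Python sketch of weight-only INT4 quantization followed by dequantization to BF16 and an ordinary BF16 matrix-vector product. The scheme is an assumption chosen for illustration: symmetric per-group scaling, a hypothetical group size, low-nibble-first packing, and hypothetical function names. It is not Kimi's actual checkpoint format or kernel. BF16 is emulated in pure Python by rounding float32 bit patterns, so no GPU or FP4 support is involved.

```python
import struct

# Hypothetical group size; real checkpoints choose their own.
GROUP_SIZE = 32


def to_bf16(x: float) -> float:
    """Round a finite float (via float32) to the nearest BF16 value, ties to even.

    BF16 keeps the top 16 bits of an IEEE-754 float32 (sign, 8 exponent bits,
    7 mantissa bits), so we round away the low 16 bits. NaN is not handled.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)  # round-to-nearest-even
    bits = ((bits + rounding_bias) >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def quantize_int4_group(weights, group_size=GROUP_SIZE):
    """Symmetric per-group INT4: each group shares one scale, codes lie in [-8, 7]."""
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        scale = max_abs / 7 if max_abs > 0 else 1.0  # map largest |w| to code 7
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def pack_int4(codes):
    """Pack two signed 4-bit codes per byte (low nibble first)."""
    padded = list(codes) + [0] * (len(codes) % 2)
    return bytes((lo & 0xF) | ((hi & 0xF) << 4)
                 for lo, hi in zip(padded[0::2], padded[1::2]))


def unpack_int4(packed, n):
    """Inverse of pack_int4: recover the first n signed codes."""
    codes = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            codes.append(nibble - 16 if nibble >= 8 else nibble)
    return codes[:n]


def dequantize_to_bf16(packed, scales, n, group_size=GROUP_SIZE):
    """Dequantize INT4 codes back to BF16 weights: w_hat = bf16(code * scale)."""
    codes = unpack_int4(packed, n)
    return [to_bf16(q * scales[i // group_size]) for i, q in enumerate(codes)]


def bf16_matvec(rows, x):
    """Plain matrix-vector product on BF16 weights and BF16-rounded inputs.

    Python floats are FP64, so products are accumulated in higher precision;
    GPU kernels typically accumulate BF16 products in FP32.
    """
    x_bf16 = [to_bf16(v) for v in x]
    return [sum(w * v for w, v in zip(row, x_bf16)) for row in rows]


if __name__ == "__main__":
    w = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0, 0.41, -0.88]
    codes, scales = quantize_int4_group(w, group_size=4)
    packed = pack_int4(codes)  # 8 weights stored in 4 bytes, plus 2 scales
    w_hat = dequantize_to_bf16(packed, scales, len(w), group_size=4)
    print(w_hat)
    print(bf16_matvec([w_hat], [1.0] * len(w)))
```

The point of the design: storage and memory traffic use 4-bit codes, but once the weights are expanded the math is an ordinary BF16 product, which older GPUs already support.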

Finally wrapped up the hardware half of Nand2Tetris, took me a week. It had been on my to-do list for way longer than I’d like to admit.

DeepSeek engineers so cracked they bypassed CUDA