We’re thrilled to see our advanced ML models and EMG hardware — that transform neural signals controlling muscles at the wrist into commands that seamlessly drive computer interactions — appearing in the latest edition of @Nature. Read the story: nature.com/articles/s4158… Find…

Excited to speak with this fine gang in St Louis next month, including @neurograce @karimjerbineuro @niru_m @martin_schrimpf @chethan @weixx2 @HombreCerebro . Bring on the future of NeuroAI! transdisciplinaryfutures.wustl.edu/events/neuroai…

How long until there is a feature length film that is completely AI generated? I’m guessing ~2028

here is sora, our video generation model: openai.com/sora today we are starting red-teaming and offering access to a limited number of creators. @_tim_brooks @billpeeb @model_mechanic are really incredible; amazing work by them and the team. remarkable moment.

I'm incredibly saddened today to hear about the passing of Prof. Craig Henriquez, one of my first research advisors when I was an undergrad. Craig was an incredible mentor, scientist, and compassionate human being; he will be deeply missed. today.duke.edu/2023/08/craig-…

1/Our paper @NeuroCellPress "Interpreting the retinal code for natural scenes" develops explainable AI (#XAI) to derive a SOTA deep network model of the retina and *understand* how this net captures natural scenes plus 8 seminal experiments over >2 decades sciencedirect.com/science/articl…

cc @ItsNeuronal

Sometimes I want to be a computational neuroscientist just so that I can make minimalist talks with only keynote drawings and whatever that cool sans serif font is

Heading to Lisbon for #cosyne2022! Looking forward to talking science in person!

I'll be in Lisbon along with a great team of our scientists, including @SussilloDavid @niru_m @CLWarriner @diogo_neuro @DiegoGutnisky @najabam. Long overdue to talk science in person!

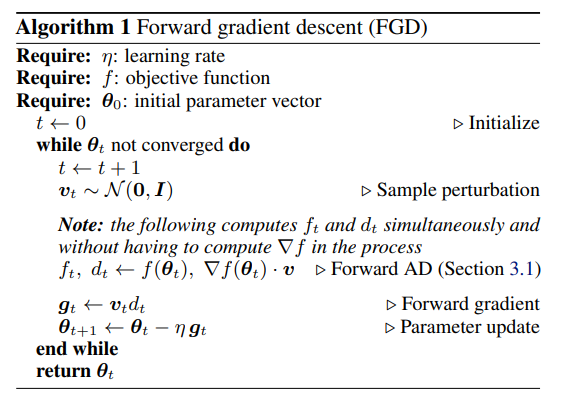

This gradient might look good in expectation, but what about the variance? 🤔

Gradients without Backpropagation Presents a method to compute gradients based solely on the directional derivative that one can compute exactly and efficiently via the forward mode, entirely eliminating the need for backpropagation in gradient descent. arxiv.org/abs/2202.08587
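
The variance question above can be probed numerically. The following is a minimal sketch, not the paper's code: it assumes the forward-gradient estimator takes the form (∇f · v) v with v drawn from a standard normal, and it uses a hypothetical fixed gradient vector `grad` in place of a real model. In practice the directional derivative would come from forward-mode autodiff rather than from a known gradient.

```python
import random

def forward_gradient(grad, rng):
    """One forward-gradient sample: (grad . v) * v with v ~ N(0, I)."""
    v = [rng.gauss(0.0, 1.0) for _ in grad]
    # Directional derivative along v. Forward-mode autodiff would supply this
    # scalar without ever forming `grad`; here the known gradient stands in.
    directional = sum(g * vi for g, vi in zip(grad, v))
    return [directional * vi for vi in v]

def estimate_mean_and_variance(grad, n_samples=20000, seed=0):
    """Monte Carlo estimate of the per-coordinate mean and variance."""
    rng = random.Random(seed)
    dim = len(grad)
    sums = [0.0] * dim
    sq_sums = [0.0] * dim
    for _ in range(n_samples):
        sample = forward_gradient(grad, rng)
        for i, x in enumerate(sample):
            sums[i] += x
            sq_sums[i] += x * x
    mean = [s / n_samples for s in sums]
    var = [sq / n_samples - m * m for sq, m in zip(sq_sums, mean)]
    return mean, var

# Hypothetical gradient of some loss at some point (illustration only).
grad = [1.0, -2.0, 0.5]
mean, var = estimate_mean_and_variance(grad)
```

Under these assumptions the sample mean should approach `grad`, while the variance of coordinate i should approach ||grad||² + grad_i². That variance grows with the squared norm of the full gradient, so it tends to get worse as the number of parameters increases.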

Interesting paper making connections between representational drift in biological brains and dropout in ANNs!

New work out on bioRxiv (biorxiv.org/content/10.110…) on the geometry of representational drift in natural and artificial neural networks. Work done with Marina Garrett (@matchings), Shawn Olsen, and Stefan Mihalas (@Stefan_Mihalas) at the @AllenInstitute. 🧵

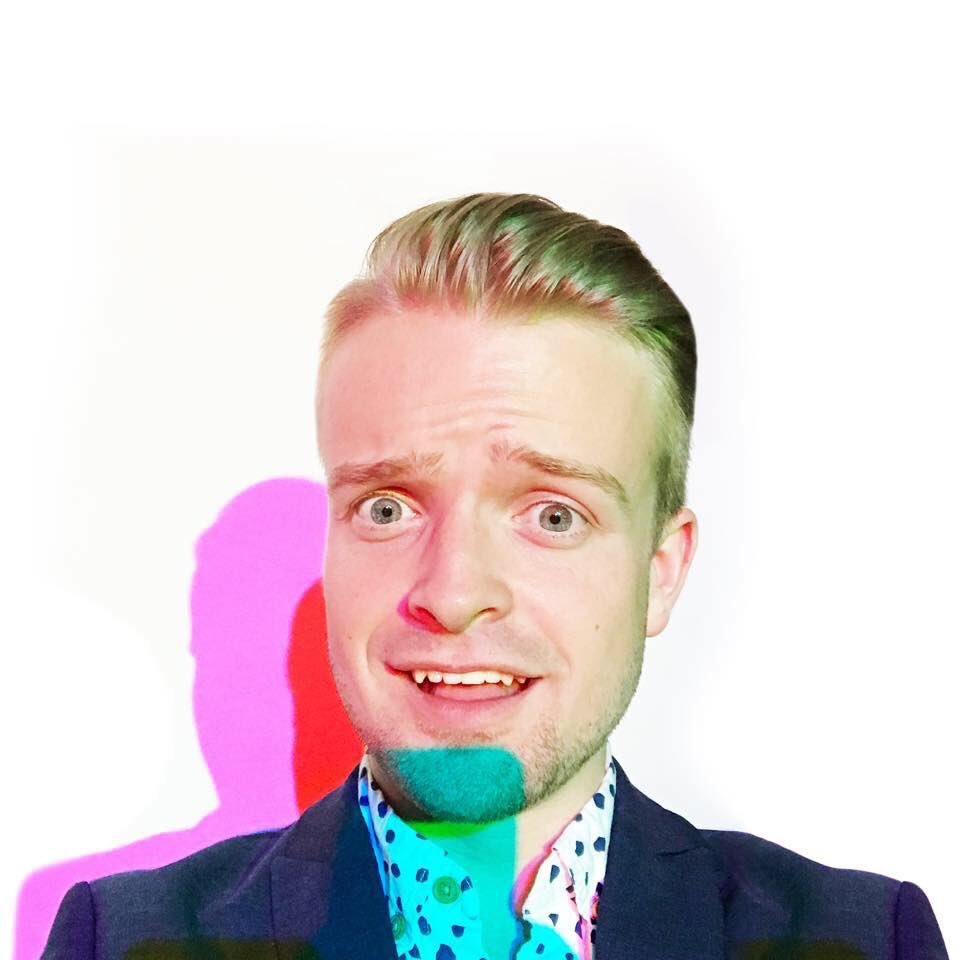

Some personal news: I recently joined Facebook Reality Labs, working on neural interfaces research with the CTRL labs team. Sad to leave fantastic colleagues at Google Brain, but looking forward to a new challenge! 🧠 💪

Often in science something seems complicated until you look at it the right way. The hard part is figuring out the right frame of reference!

A classic kinematics example—a pillbug on a spinning disk walks back and forth on (what it thinks is) a straight path. However, its trajectory looks much more complicated and beautiful to a stationary observer!
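
A rough sketch of the change of frame, under assumptions not stated in the post: the pillbug walks sinusoidally along a line through the disk's center, and the names `amplitude`, `walk_rate`, and `spin_rate` are hypothetical parameters chosen for illustration.

```python
import math

def lab_frame_position(t, amplitude=1.0, walk_rate=1.0, spin_rate=5.0):
    """Position seen by a stationary observer at time t."""
    # In the disk's rotating frame the bug only moves along the x' axis.
    x_disk = amplitude * math.sin(walk_rate * t)
    # Angle the disk has turned through by time t, as seen from the lab.
    angle = spin_rate * t
    # Rotate the disk-frame point (x_disk, 0) into the lab frame.
    return x_disk * math.cos(angle), x_disk * math.sin(angle)

# Sample the lab-frame path; plotting these points gives a petal-like curve.
trajectory = [lab_frame_position(0.01 * k) for k in range(1000)]
```

In the rotating frame the motion is one-dimensional, while in the lab frame the same motion becomes r = amplitude · sin(walk_rate · t) at angle spin_rate · t, which traces a rose-shaped curve.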

"Intuition is the foundation upon which comprehensive understanding is built. But ... unverified intuition can be misleading." A fantastic article on rigorous interpretability research by @leavittron and @arimorcos (arxiv.org/pdf/2010.12016…) h/t @KordingLab for highlighting it!

taylor expansions are the turtles in the turtles all the way down joke except it isn’t a joke

Cool result: minimizing activation norms in a network (a proxy for energy efficiency) in a predictable environment yields a network that learns aspects of predictive coding. Would love to see what happens in richer environments and with deeper architectures!

New preprint alert! We show that predictive coding is an emergent property of input-driven RNNs trained to be energy efficient. No hierarchical hard-wiring required. A thread: 1/ biorxiv.org/content/10.110…

Are Experts Real? Some thoughts on expertise, credentialism, and feedback loops. fantasticanachronism.com/2021/01/11/are…

The only reason this image is “powerful” is as a reminder of how misleading data visualization can be. It uses a diverging colormap for sequential data, and caps the range at 4% so the UK pops out as if they’ve vaccinated a majority of citizens. Good example of what *not* to do!

Really enjoyed this post on Stein's paradox! The writing is impressively clear.

I wrote a blog post that provides some intuition behind one of the weirder results in statistics: Stein's paradox. joe-antognini.github.io/machine-learni…

Awesome work! Congrats @basile_cfx et al!

OK, finally our tweeprint for the NeurIPS paper. Here we go. Synaptic plasticity, it's the holy grail of learning and memory. This is work by @basile_cfx, @hisspikeness, @ejagnes, @countzerozz & myself, on how to find the grail, maybe biorxiv.org/content/10.110…

If you need an escape from politics right now, I'm giving a talk at the DeepMath conference (@deepmath1) this afternoon (2:20pm PST) on understanding the dynamics of learned optimizers. There is a livestream: youtube.com/watch?v=x-VPsH… - the talks so far have all been fantastic!

DeepMath 2020 Day 1
