
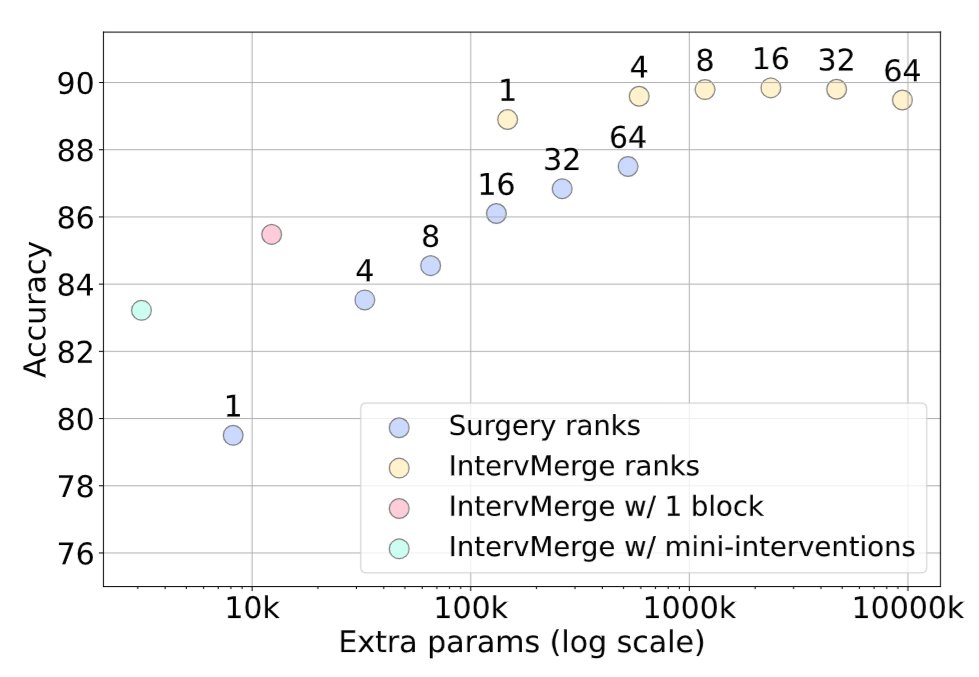

Merging multiple fine-tuned models is a promising approach for creating a multi-task model, but it suffers from task interference. Our approach, IntervMerge, mitigates this effect stably and efficiently by incorporating mini-interventions.

You have 3 months to deliver an AI project worth 28 million. In other words: on the absurdity of the KPO and the waste of money on critical projects. On a Saturday morning I am browsing the documentation for a public tender announced by Centrum e-Zdrowia. (Don't judge.) However, I had to…

Visual-RFT and VisualThinker R1 Zero look promising for visual reasoning. What are your thoughts? arxiv.org/abs/2503.01785 arxiv.org/pdf/2503.05132

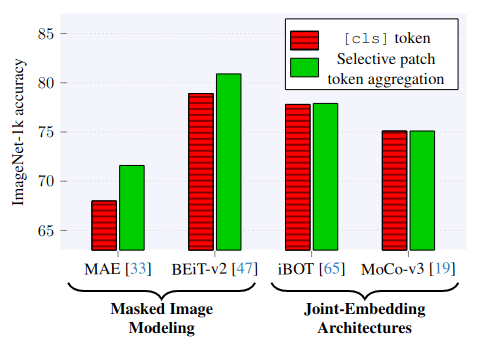

Self-supervised Learning with Masked Autoencoders (MAE) is known to produce worse image representations than Joint-Embedding approaches (e.g. DINO). In our new paper, we identify new reasons for why that is and point towards solutions: arxiv.org/abs/2412.03215 🧵

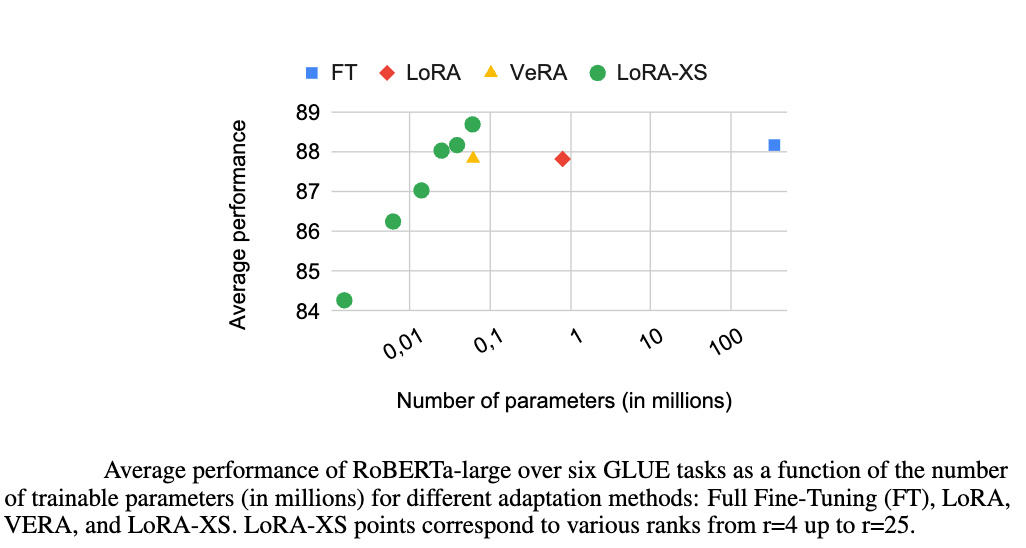

Check out our new paper! 🚀

We present LoRA-XS, an extremely parameter-efficient #LoRA variant, reducing the LoRA’s trainable parameters by more than 100x while performing competitively across different scales and benchmarks! Find out how we did this ⬇️

90% of the people I know in web3 have pivoted their company to AI

Investors are eager to invest in AI companies. But some of the biggest winners from the AI revolution will be existing companies that weren't founded to do AI, but are perfectly poised to benefit from it, as e.g. Facebook was perfectly poised to benefit from smartphones.

Really striking how every AI hype tweet is explicitly trying to induce FOMO. "You're getting left behind. All your competitors are using this. Everyone else is making more money than you. Everyone else is more productive. If you're not using the latest XYZ you're missing out."

I see many folks in the replies asking for a definition of intelligence. Yes, it is 100% necessary to rigorously define intelligence before you can judge AGI progress or lack thereof. Here's the one I've been using: arxiv.org/abs/1911.01547 Under this definition, ~0 progress.

New excuse just dropped for companies doing layoffs: just say it's because "AI" has made you so much more productive that you don't need all these humans anymore. Investors will love it.

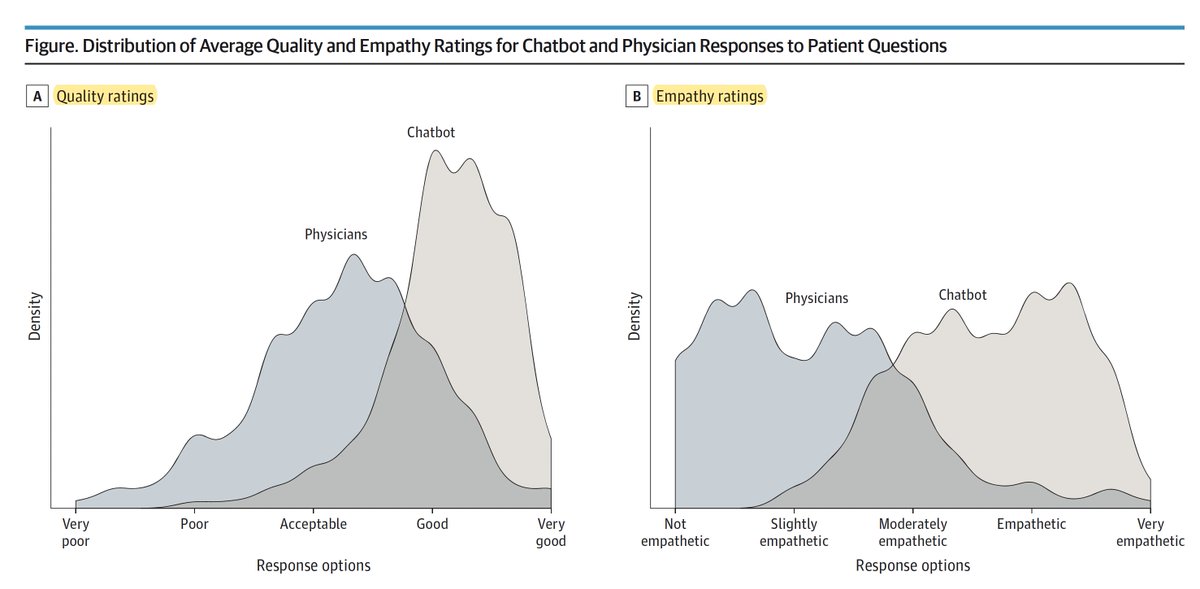

Noteworthy: a new study comparing #ChatGPT vs doctors for responding to patient queries demonstrated clearcut superiority of #AI for improved quality and empathy of responses @JAMAInternalMed jamanetwork.com/journals/jamai…

You can take almost all brain uploading sci-fi and ideas and change them from 20+ years away (maybe) to a few years away (very likely) just by replacing occurrences of "brain scanning" with "LLM finetuning", and fidelity from ~perfect to lossy.

AI girlfriends are going to be a huge market. Influencer Caryn Marjorie trained a voice chatbot on thousands of hours of her videos. She started charging $1/minute for access - and made $72k in the first week 🤯

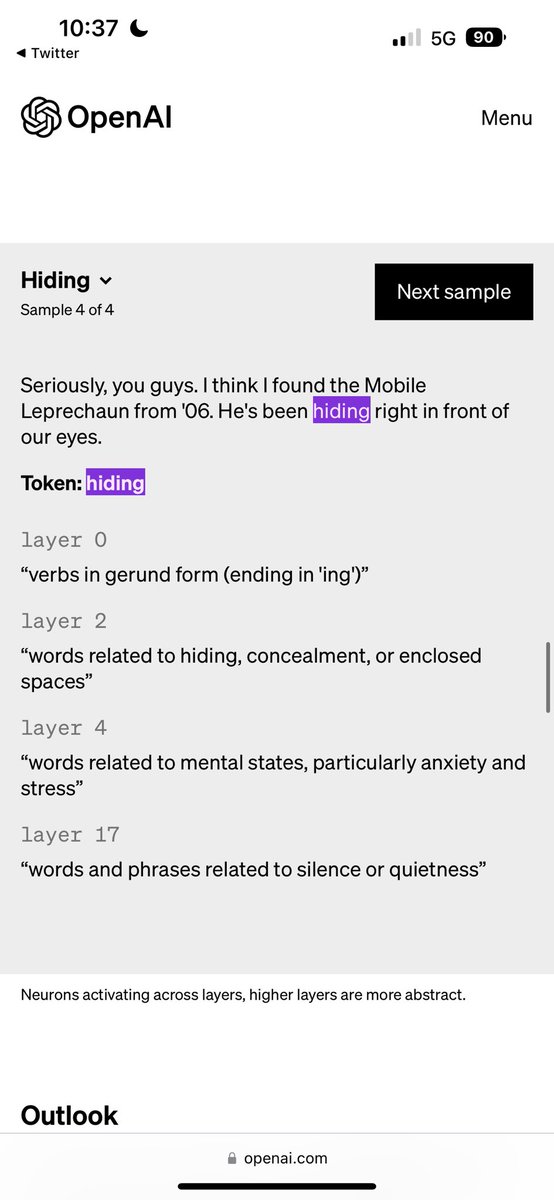

new research from OpenAI used gpt4 to label all 307,200 neurons in gpt2, labeling each with plain english descriptions of the role each neuron plays in the model. this opens up a new direction in explainability and alignment in AI, helping make models more explainable and…

Looking at the original GPT release from OpenAI is so funny
