onnxruntime

@onnxruntime

Cross-platform training and inferencing accelerator for machine learning models.

ONNX Runtime & DirectML now support Phi-3 mini models across platforms & devices! Plus, the new ONNX Runtime Generate() API simplifies LLM integration into your apps. Try Phi-3 on your favorite hardware! Read more: onnxruntime.ai/blogs/accelera… #ONNX #DirectML #Phi3

Run PyTorch models in the browser, on mobile and desktop, with #onnxruntime, in your language and development environment of choice 🚀onnxruntime.ai/blogs/pytorch-…

Developers, don't overlook the power of Swift Package Manager! It simplifies dependency management and promotes modularity. Plus, exciting news: ONNXRuntime just added support for SPM! #iOSdev #SwiftPM #ONNXRuntime

#ONNX Runtime saved the day with our interoperability and ability to run locally on-client and/or in the cloud! Our lightweight solution gave the Pieces team the performance they needed, with quantization & configuration tooling. Learn how they achieved this in this blog! cloudblogs.microsoft.com/opensource/202…

ONNX Runtime: performant on-device inferencing - Microsoft Open Source Blog

The team at Pieces shares the problems and solutions evaluated for their on-device model serving stack and how ONNX Runtime enables their success.

Give yourself a treat (like this adorable 🐶 deserves) and read this blog on how to use #ONNX Runtime on #Android! devblogs.microsoft.com/surface-duo/on…

Quick intro to @onnxruntime and applying #machinelearning on Android devblogs.microsoft.com/surface-duo/on…

📢 This new blog by @tryolabs is awesome! Learn how to fine-tune an NLP model and accelerate it with #ONNXRuntime!

Maximize the power of LLMs! 💬 Our step-by-step guide covers fine-tuning for specific NLP tasks w/ GPT-3, OPT, & T5. We shared everything from building custom datasets to optimizing inference time with @huggingface 🤗Optimum and @onnxai.🚀 bit.ly/3DqLXxb #LargeLanguageModels

Join us live TODAY! We will be talking to Akhila Vidiyala and Devang Aggarwal on AI Show with Cassie! We will show how developers can use #huggingface #optimum #Intel to quantize models and then use #OpenVINO for #ONNXRuntime to accelerate performance. 👇 aka.ms/aishowlive


In this blog, we will discuss how to make huge models like #BERT smaller and faster with #Intel #OpenVINO, the Neural Network Compression Framework (NNCF), and #ONNX Runtime through #Azure! 👇 cloudblogs.microsoft.com/opensource/202…

OpenVINO™, ONNX Runtime, and Azure improve BERT inference speed - Microsoft Open Source Blog

Make large models smaller and faster with the OpenVINO Execution Provider, NNCF and ONNX Runtime, leveraging Azure Machine Learning.

👀

🚀 Want easier and faster training for your models on GPUs? Thanks to the @onnxruntime backend, 🤗 Optimum can help you achieve 39% to 130% acceleration with just a few lines of code changed. Check out our benchmark results NOW! 👀 huggingface.co/blog/optimum-o…

We are seeking your input to shape the ONNX roadmap! Proposals are being collected until January 24, 2023 and will be discussed in February. Submit your ideas at forms.microsoft.com/pages/response…

Imagine the frustration of finding, after applying optimization tricks, that copying data to the GPU slows down your "MUST-BE-FAST" inference...🥵 🤗 Optimum v1.5.0 added @onnxruntime IOBinding support to reduce your memory footprint. 👀 github.com/huggingface/op… More ⬇️

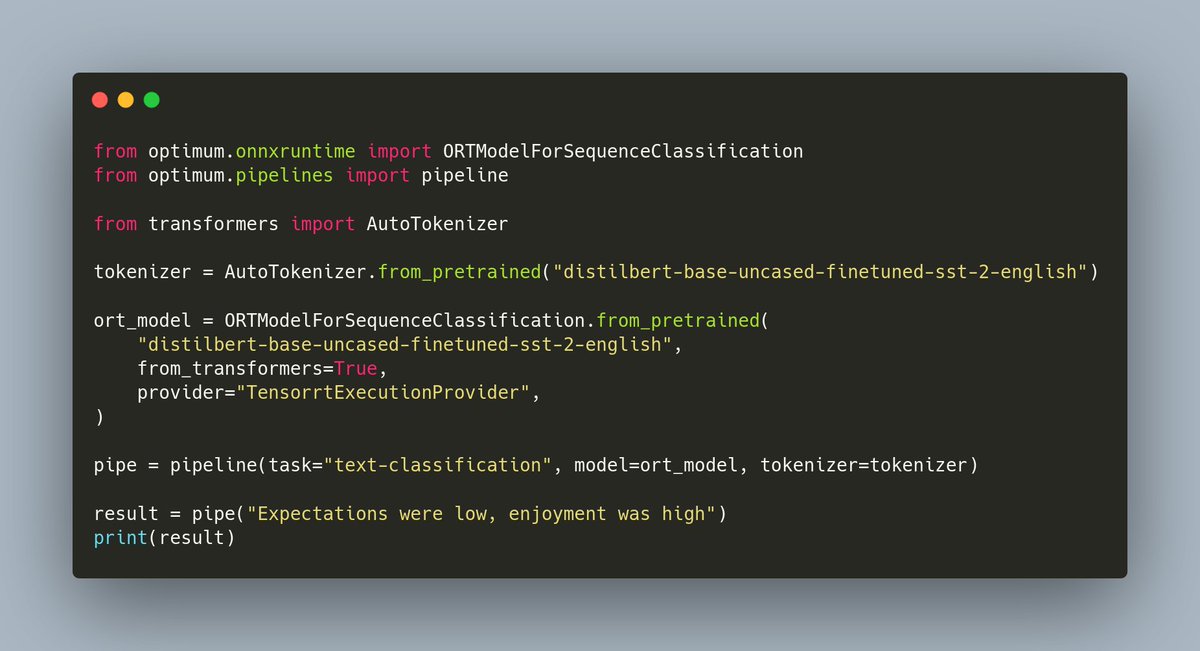

Want to use TensorRT as your inference engine for its speedups on GPU but don't want to deal with the compilation hassle? We've got you covered with 🤗 Optimum! With one line, leverage TensorRT through @onnxruntime! Check out more at hf.co/docs/optimum/o…

📣#ONNXRuntime v1.13.0 was just released!!! Check out the release notes and the video from the engineering team to learn more about what's in this release! 📝github.com/microsoft/onnx… 📽️youtu.be/vo9vlR-TRK4

v1.13 ONNX Runtime - Release Review

👀

Next up from #ONNXCommunityDay: Accelerating Machine Learning w/ @ONNXRuntime & @HuggingFace! In this session, @jeffboudier will show the latest solutions from #HuggingFace to deploy models at scale w/ great performance leveraging #ONNX & #ONNXRuntime. youtu.be/9H7biU4eLZY

Accelerating Machine Learning with ONNX Runtime and Hugging Face

Finally, tokenization with SentencePiece BPE now works as expected in #NodeJS #JavaScript with the tokenizers library 🚀! Now I'm getting "invalid expand shape" errors when passing the text tokens' encoded IDs to the @MSFTResearch MiniLM model converted for @onnxruntime huggingface.co/microsoft/Mult…

SentencePiece vocabulary and merge files are generated, but some minor issues are occurring; hopefully @huggingface can help 🙏github.com/huggingface/to…

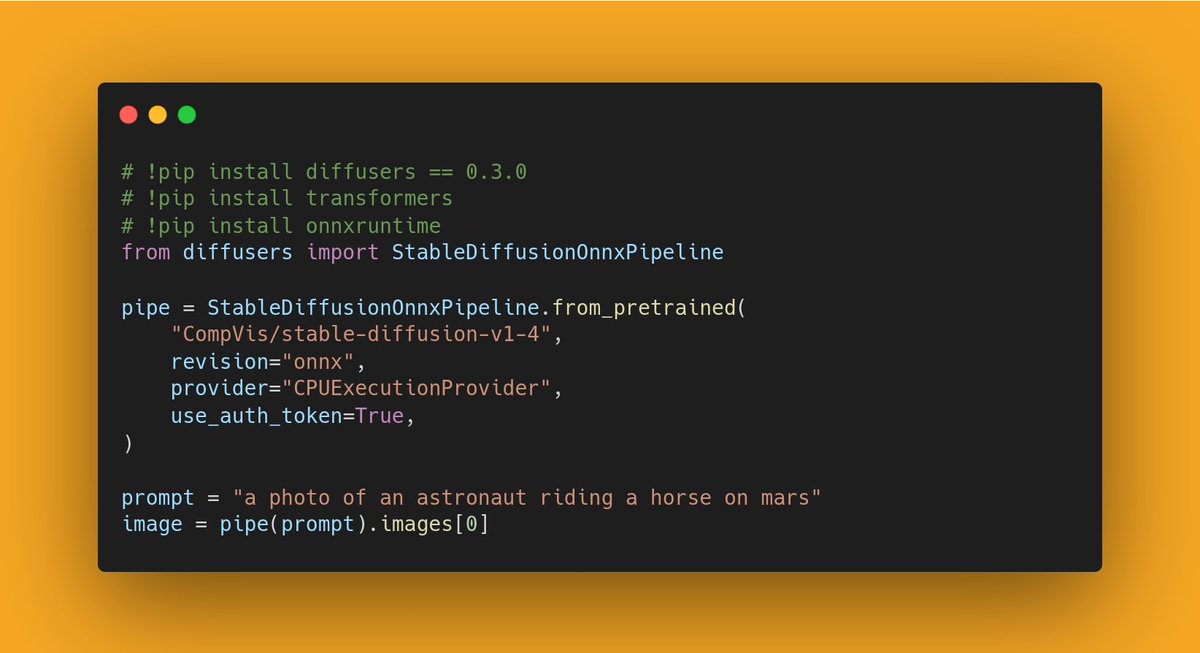

🏭 The hardware optimization floodgates are open!🔥 Diffusers 0.3.0 supports an experimental ONNX exporter and pipeline for Stable Diffusion 🎨 To find out how to export your own checkpoint and run it with @onnxruntime, check the release notes: github.com/huggingface/di…

💡A Senior Research & Development Engineer at @deltatre, @tinux80 is also a #MicrosoftMVP and Intel Software Innovator. 📊Don't miss his talk on #AzureML and #Onnx Runtime at #WPC2022! 👉Get your ticket: wpc2022.eventbrite.it @microsofitalia

@jfversluis What about a video on ONNX Runtime? Here is the official documentation devblogs.microsoft.com/xamarin/machin… And a MAUI example: github.com/microsoft/onnx…

The natural language processing library Apache OpenNLP is now integrated with ONNX Runtime! Get the details and a tutorial explaining its use on the blog: msft.it/6013jfemt #OpenSource

In this article, a community member used #ONNXRuntime from Ruby to try out a GPT-2 model that generates English sentences: dev.to/kojix2/text-ge…
