Pascal Pfeiffer

@pa_pfeiffer

https://github.com/pascal-pfeiffer


🏆 Achievement unlocked: I reached rank 3 on the Kaggle competitions leaderboard last week, just below @kagglingdieter and @ph_singer. This felt unreachable when I joined my first competition in 2019, and I am very thankful for what I have learned from the @kaggle community since then.

2025. I have high hopes that this is the year where agents can actually work autonomously on complex tasks without babysitting at each step. h2o.ai is leading the development and ranks first on the hidden GAIA benchmark, solving 65% of all problems.

Numpy arrays for storing embedding vectors are underrated. This is easily enough for 90% of the use cases, maybe even more.
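As a rough illustration of the point above, here is a minimal sketch of an in-memory vector store backed by a single NumPy array with cosine-similarity search. The class name `NumpyVectorStore`, its methods, and the toy 3-dimensional vectors are hypothetical and chosen only for this example; real embeddings would come from whatever embedding model you use.

```python
import numpy as np


class NumpyVectorStore:
    """Hypothetical in-memory vector store: one 2D array plus a list of texts."""

    def __init__(self, dim: int):
        self.dim = dim
        self.vectors = np.empty((0, dim), dtype=np.float32)
        self.texts: list[str] = []

    def add(self, text: str, vector) -> None:
        # Store unit-length vectors so a dot product equals cosine similarity.
        v = np.asarray(vector, dtype=np.float32).reshape(1, self.dim)
        norm = np.linalg.norm(v)
        if norm == 0:
            raise ValueError("cannot store a zero vector")
        self.vectors = np.vstack([self.vectors, v / norm])
        self.texts.append(text)

    def search(self, query, k: int = 3):
        # Brute-force search: one matrix-vector product over all stored rows.
        q = np.asarray(query, dtype=np.float32).reshape(self.dim)
        q = q / np.linalg.norm(q)
        scores = self.vectors @ q
        top = np.argsort(-scores)[:k]
        return [(self.texts[i], float(scores[i])) for i in top]


# Toy usage with made-up 3-dimensional "embeddings".
store = NumpyVectorStore(dim=3)
store.add("cats", [1.0, 0.0, 0.0])
store.add("dogs", [0.9, 0.1, 0.0])
store.add("stocks", [0.0, 0.0, 1.0])
results = store.search([1.0, 0.0, 0.0], k=2)
print(results)
```

Brute-force search like this scans every stored vector per query, which is usually fine for small collections; a dedicated index becomes worth considering only when that scan gets too slow.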

so my machine can remember information, entirely offline now!!!
> llama3.2 1B with tool calling
> two functions: remember / respond
> fully local vectorstore (numpy lol)
> no external LLM api calls

X, what is the best way to keep @cursor_ai up to date in your Ubuntu favorite apps? Manually updating /home/me/.local/share/applications/cursor.desktop is not the peak.

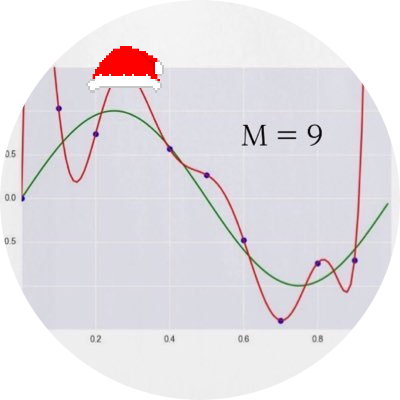

Calculator next for the RL feedback loop? Jokes aside, it seems more logical for the LLM to be self-aware of its math issues with larger numbers and to code it up, so I am actually surprised that o1 doesn't get perfect results by doing so.

Is OpenAI's o1 a good calculator? We tested it on up to 20x20 multiplication—o1 solves up to 9x9 multiplication with decent accuracy, while gpt-4o struggles beyond 4x4. For context, this task is solvable by a small LM using implicit CoT with stepwise internalization. 1/4
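To make the "code it up" idea concrete, here is a small sketch of what delegating the arithmetic to code could look like. It assumes "20x20" means multiplying two 20-digit integers; the helper name `multiply_exact` and the seeded random operands are hypothetical. Python integers are arbitrary precision, so the product is exact.

```python
import random


def multiply_exact(a: int, b: int) -> int:
    # Python ints have arbitrary precision, so no digits are lost.
    return a * b


# Two reproducible 20-digit operands (illustrative values only).
rng = random.Random(0)
a = rng.randrange(10**19, 10**20)
b = rng.randrange(10**19, 10**20)

product = multiply_exact(a, b)
print(f"{a} x {b} = {product}")
```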

Agentic workflows is all you need

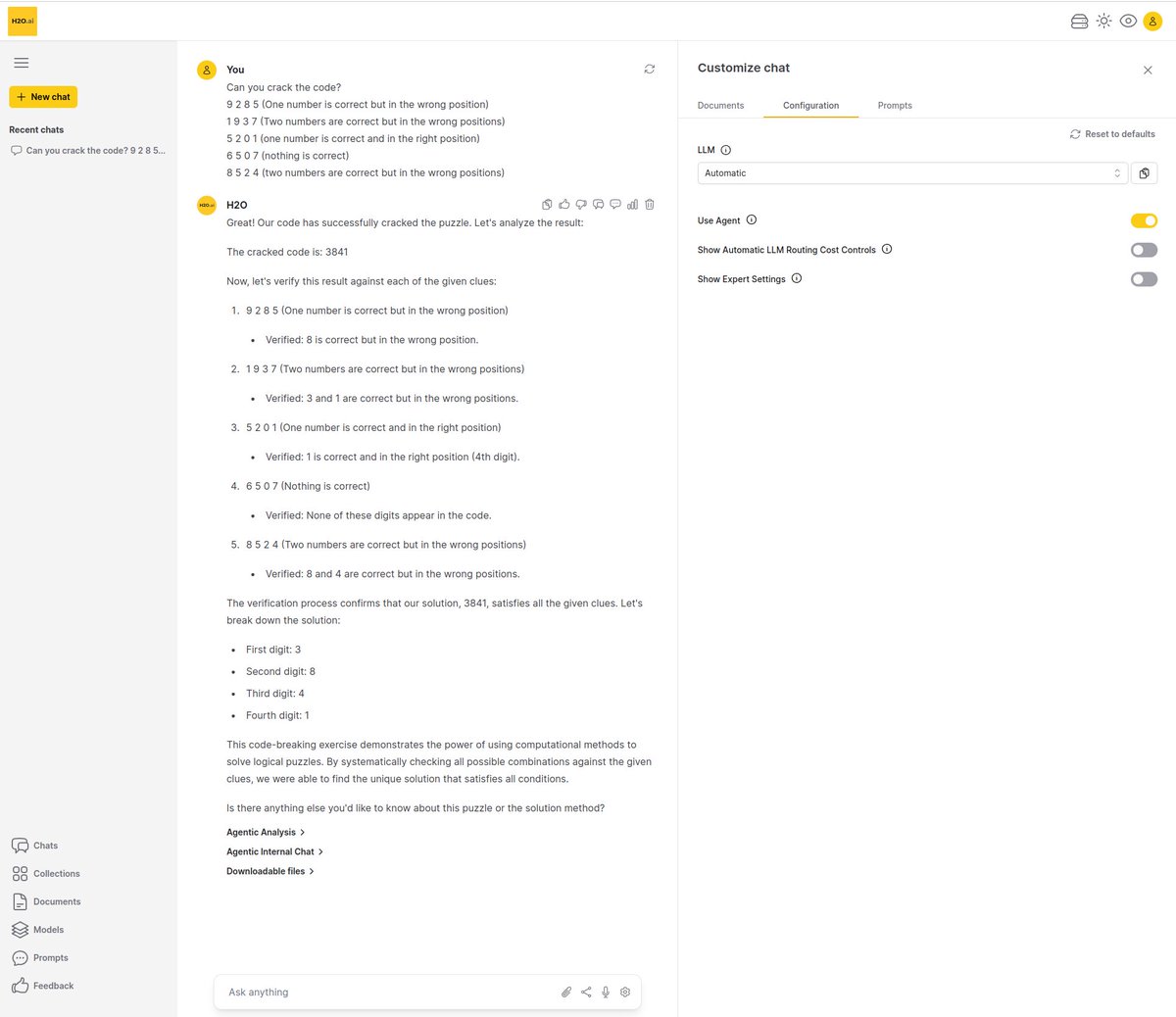

ChatGPT o1-preview failed this relatively simple test, and our Enterprise h2oGPTe system nails it, much faster:

Why do Apple keyboards have such a different layout from every other standard keyboard in Germany? The difference seems much smaller for other layouts, such as the US one.

💥H2O LLM Studio v1.11.0 released!💥 github.com/h2oai/h2o-llms… Run it in just 2 clicks: Select a GPU from RunPod and deploy your own H2O LLM Studio instance now runpod.io/console/deploy…

Finished second in a recent @kaggle competition w/ @pa_pfeiffer & @ybabakhin, trying to recover the prompt used to transform a given text with Gemma. Detailed solution: kaggle.com/competitions/l…

My whole feed is full of the new Llama3 benchmarks table from the release post, and it looks impressive. I thought a quick test with the Hugging Face Open LLM Leaderboard eval could put the base model a bit more into perspective.

Wow! Kings of quiet releases. Any news on when this will be available to the public? Eagerly awaiting more info.

The dataset to train OpenCodeInterpreter was just released. High quality datasets such as this one are what really push open source LLM efforts forward. huggingface.co/datasets/m-a-p…

m-a-p/Code-Feedback · Datasets at Hugging Face

Thanks for the shoutout @bindureddy. You can find my talk at H2O World India from early 2023 on h2o.ai/resources/vide… including the original slide.

Are parameter counts rounded down for the Open LLM Leaderboard from @huggingface? Or are embedding params excluded? Gemma 2B has 2.51B params but is listed in the ~1.5B range, which, judging by the code, should be the interval [0, 2]. @clefourrier

![pa_pfeiffer's tweet image](https://pbs.twimg.com/media/GHAwfl4W4AAlv0i.jpg)

![pa_pfeiffer's tweet image](https://pbs.twimg.com/media/GHAwmWpWcAAQhAE.png)


