Peter J. Liu

@peterjliu

AI research-eneur. Hiring eng: https://twentylabs.ai/careers. Was Research Scientist @ Google Brain / DeepMind, language model research. 🇨🇦🇺🇸


Whereas (US) medical doctors should generally expect to get jobs, it's not the case for software engineers -- and young people going into the field should know this before choosing this career path. That most CS graduates cannot find jobs is actually expected, because employers…

The problem here is Python. It seems common not to document whether a function could raise an exception. It's also common for Python code to have a lot of unhandled exceptions, which is bad for production. More modern languages are much better at enforcing sane error handling.

I don't know what labs are doing to these poor LLMs during RL but they are mortally terrified of exceptions, in any infinitesimally likely case. Exceptions are a normal part of life and healthy dev process. Sign my LLM welfare petition for improved rewards in cases of exceptions.

gotta respect releasing a benchmark that shows your models are far from the best

On GDPval, expert graders compared outputs from leading models to human expert work. Claude Opus 4.1 delivered the strongest results, with just under half of its outputs rated as good as or better than expert work. Just as striking is the pace of progress: OpenAI’s frontier…

so what they're saying is we should be loading up on more Tylenol

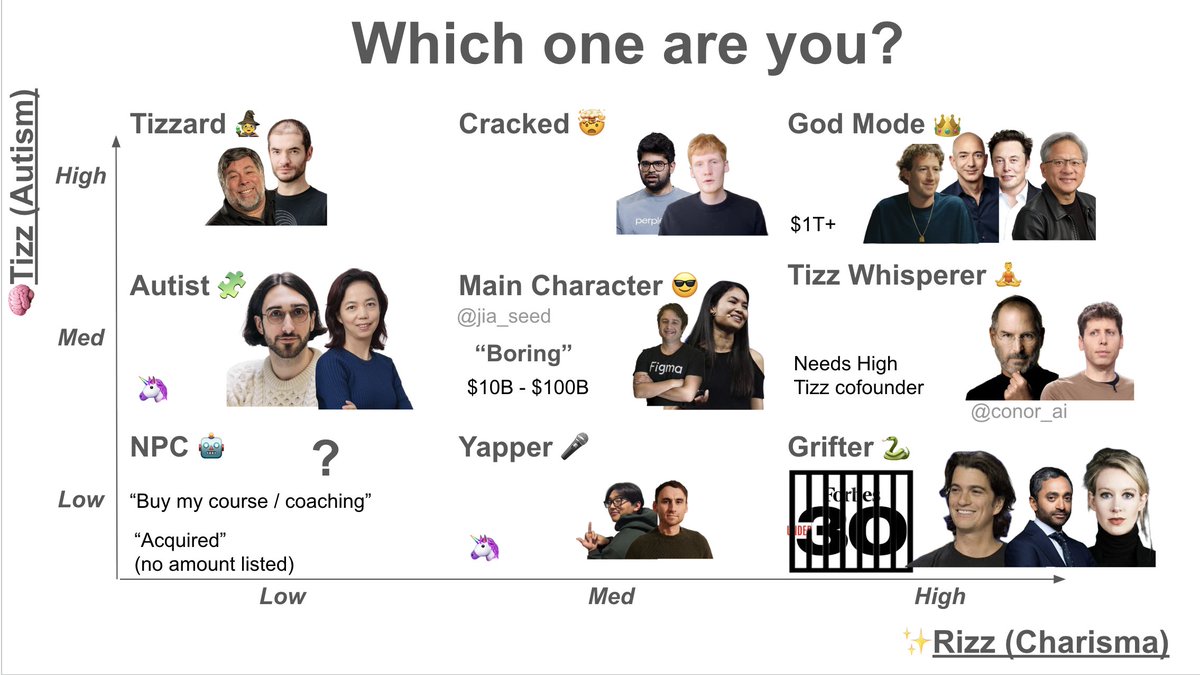

the tizz / rizz founder matrix (TRFM). all great founders land somewhere on here. tag yourself. cooked up with @jia_seed

I don't like how GPT-5 breaks the convention of if X>Y, GPT-X is a much bigger model than GPT-Y

for most people, prompting is better. it's actually a very complex topic, but this is my advice

We built an Excel agent. It's a hard problem, and power users in high finance say it works a lot better than "thin wrappers" of API models. Accuracy and reliability really matter here. The stakes are high, and it has to take less time than doing it yourself.

Still cutting customer cubes manually? I used to spend tens of hours per week doing the same data cuts, while real insights were gleaned after the cuts were done. With AI - this goes from hours to minutes. The RadPod agent quickly builds an auditable ARR waterfall from a…

It's a happy accident that what we needed to make Transformers really powerful (and Turing-complete), chain-of-thought, happened in language first, and has this nice side-effect of making reasoning semi-interpretable. But this truly was an accident. There could be more…

A simple AGI safety technique: AI’s thoughts are in plain English, just read them. We know it works, with OK (not perfect) transparency! The risk is fragility: RL training, new architectures, etc. threaten transparency. Experts from many orgs agree we should try to preserve it:…

