Chris Tuttle

@ramsus

Occasional biologist, and a friend to plants everywhere. she/her.


Heads up: if you are trying out Gemini as a swap-in for an OpenAI API call, it will fail with an API error or "list index out of range" whenever it tries to output "OpenAI" or "ChatGPT". Our Discord bot has been struggling since its brain transplant because of it.
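A minimal sketch of one way to keep a bot answering while a provider misbehaves, assuming each model is wrapped in a plain `prompt -> text` function. `generate_with_fallback`, `flaky_primary`, and `backup` are hypothetical names, not part of any Gemini or OpenAI SDK. The `IndexError` case mirrors the "list index out of range" failure above; you would add your client's own API error class to `retry_on`.

```python
from __future__ import annotations

from typing import Callable

# A "model" here is any function that maps a prompt to a reply (assumption).
TextModel = Callable[[str], str]


def generate_with_fallback(
    prompt: str,
    primary: TextModel,
    fallback: TextModel,
    retry_on: tuple[type[BaseException], ...] = (IndexError,),
) -> str:
    """Ask the primary model; if it raises one of `retry_on`, ask the fallback instead."""
    try:
        return primary(prompt)
    except retry_on:
        return fallback(prompt)


def flaky_primary(prompt: str) -> str:
    # Hypothetical stand-in for a response that came back with no candidates.
    candidates: list[str] = []
    return candidates[0]  # raises IndexError: list index out of range


def backup(prompt: str) -> str:
    # Hypothetical stand-in for the previous (OpenAI) code path.
    return f"backup answer to: {prompt}"


print(generate_with_fallback("Who makes ChatGPT?", flaky_primary, backup))
```

Errors that are not listed in `retry_on` still propagate, so genuine bugs are not silently routed to the backup model.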

it's almost like... embeddings were compression the whole time. Word count is an embedding and a compression that loses position and is sensitive to common words; tf-idf is an embedding and a compression that is insensitive to document frequency; gzip is an embedding like tf-idf but…
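A tiny illustration of the "word count loses position" point, assuming whitespace tokenization and lowercasing (both are simplifying choices, not part of the tweet). Two sentences with opposite meanings collapse to the same count vector, which is exactly the information this lossy compression throws away.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Word-count 'embedding': map text to word frequencies, discarding word order."""
    return Counter(text.lower().split())


a = bag_of_words("Dog bites man")
b = bag_of_words("Man bites dog")

print(a == b)  # True: the two sentences get identical vectors
```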

this paper's nuts. for sentence classification on out-of-domain datasets, all neural (Transformer or not) approaches lose to good old kNN on representations generated by.... gzip aclanthology.org/2023.findings-…
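A minimal sketch of the gzip + kNN idea, using normalized compression distance (NCD) computed with Python's standard `gzip` module. The helper names, the space used to join two texts, and the toy training set are illustrative assumptions, not the paper's code; treat it as a sketch of the technique rather than a reproduction of the reported results.

```python
from __future__ import annotations

import gzip
from collections import Counter


def compressed_len(text: str) -> int:
    """C(x): length in bytes of the gzip-compressed UTF-8 text."""
    return len(gzip.compress(text.encode("utf-8")))


def ncd(x: str, y: str) -> float:
    """Normalized compression distance: (C(xy) - min(C(x), C(y))) / max(C(x), C(y))."""
    cx, cy = compressed_len(x), compressed_len(y)
    cxy = compressed_len(x + " " + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def knn_classify(query: str, train: list[tuple[str, str]], k: int = 3) -> str:
    """Label `query` by majority vote among the k training texts with the smallest NCD."""
    distances = sorted((ncd(query, text), label) for text, label in train)
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy training data, made up for illustration.
train = [
    ("the cat sat on the mat and purred " * 4, "cats"),
    ("stock prices rose sharply after the earnings call " * 4, "finance"),
]
query = "the cat sat on the mat and purred " * 4
print(knn_classify(query, train, k=1))  # cats
```

The intuition: if two texts share content, compressing them together costs little more than compressing the larger one alone, so their NCD is small. No training step is needed; the "model" is just the compressor plus the labeled examples.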

The fact that Instant Pot is already being framed as a corporate cautionary tale—the company that went bankrupt bc they made a product so durable & versatile that its customers had little need to buy another one—instead of as a critique of capitalism is deeply, deeply depressing.

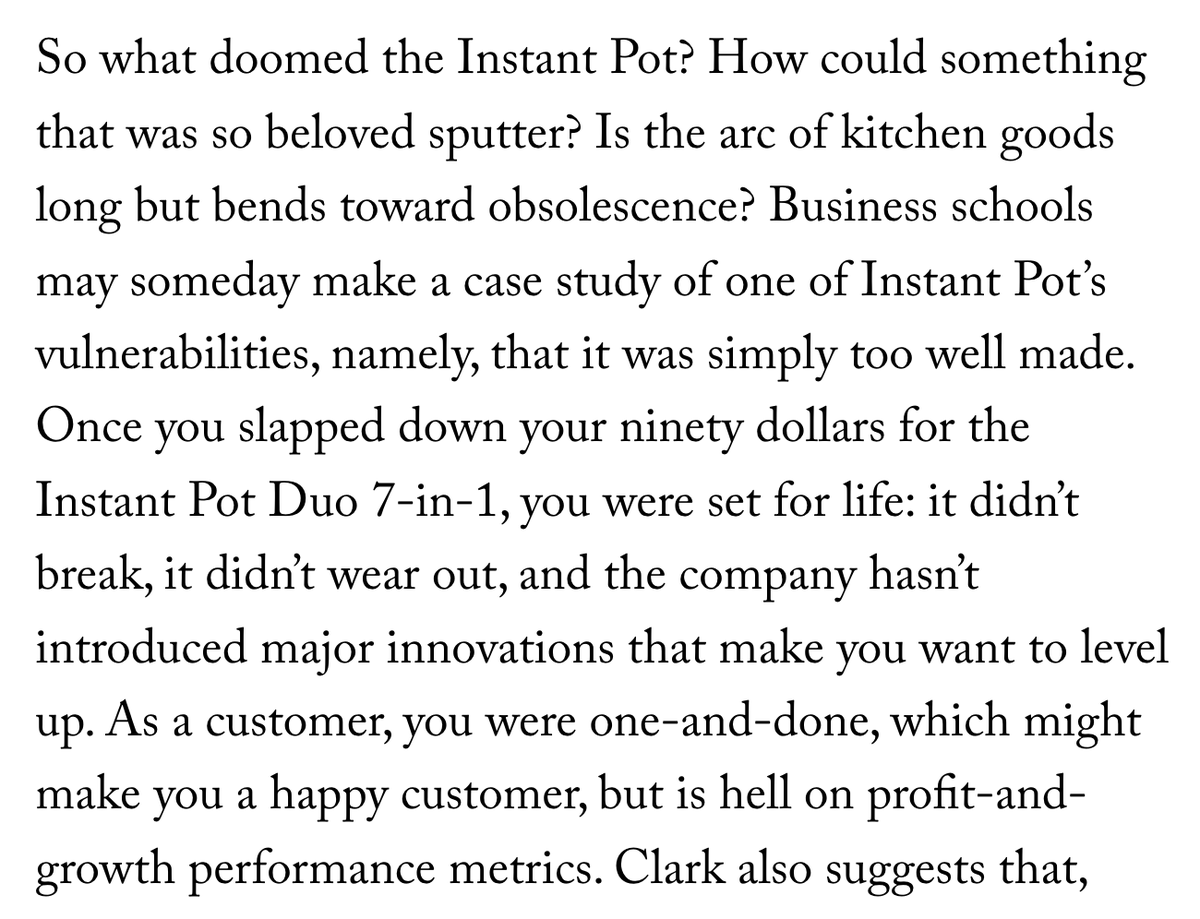

Webby’s attempt, first try. Webby uses GPT-3.5.

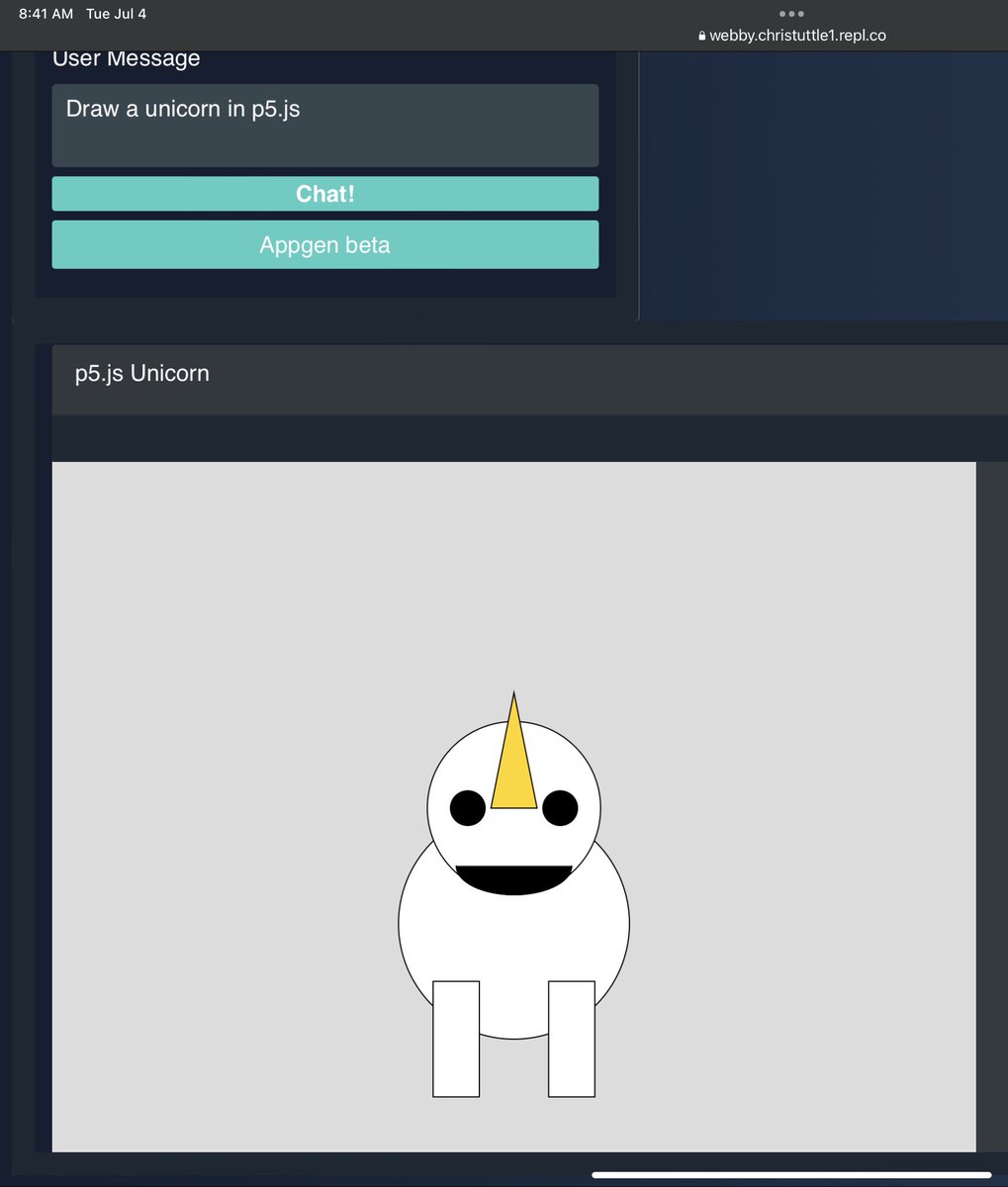

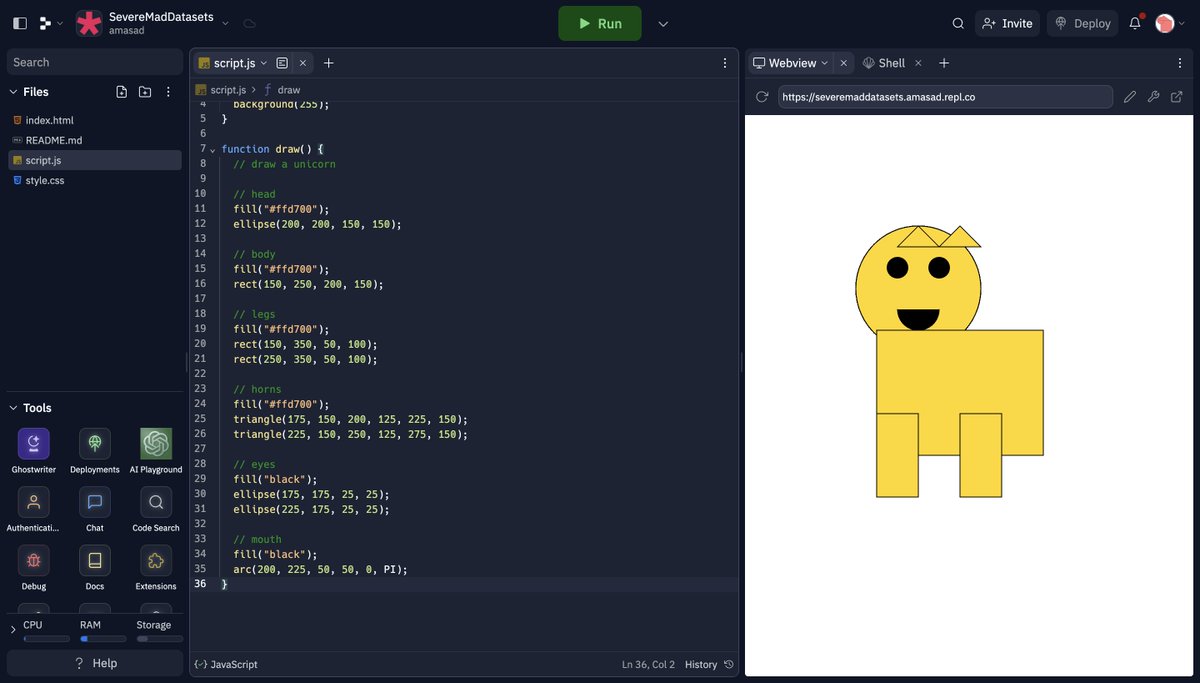

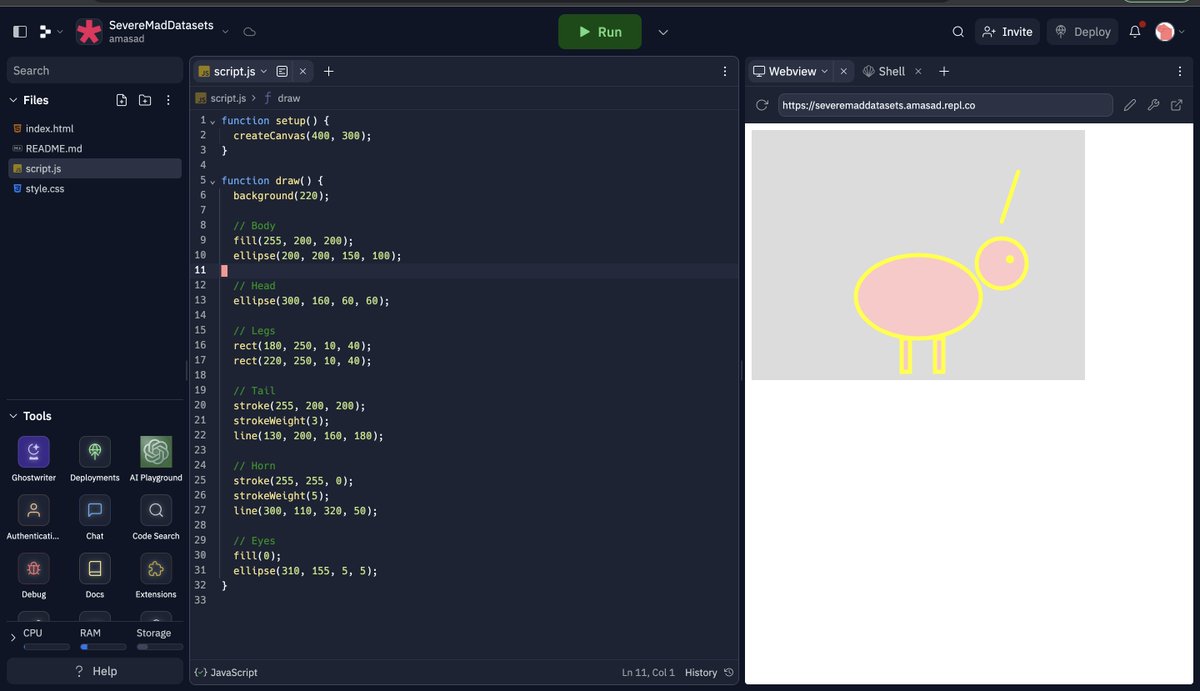

"draw a unicorn in p5.js": replit-finetuned-3b vs GPT-4

Okay so @csahil28, the maker of codealpaca who now runs the company Glaive, made a 1B-token dataset for code generation. It makes Replit3B outperform every open-source code model on the HumanEval benchmark (a code generation benchmark). The result has been replicated by @abacaj.

Just thinking of inverting another AI trope--a world where AI becomes increasingly dangerous to humans if it doesn't become sentient or self-aware. Consciousness turns out to be the catalyst for alignment.

Pretty sure a lot of new ‘scraping’ is actually a person getting an AI to browse a single site for them at a time, not an automated crawl of everything. Smart companies should also have an AI-friendly page, simple and with all text in one place, if they want to stay relevant.

Easy solution: allow scraping, and be open like the internet was meant to be. Wikipedia is surely less well funded than this place, and it allows all forms of scraping known to man.

I'm fed up. Something has to be done about the public discourse on AI. The doomers are spending totally insane amounts of money spreading their message while pretending to be meek underdogs. Time to get organized, even if that requires I do some of the organizing.

Introducing 🥁🥁... The WombatWhiz🔥 ✨We have just added our own ChatGPT/AI in our Discord community - its name is WombatWhiz. 🪄Join our discord and try it out! discord.gg/wombatgamers

Still waiting for OpenAI’s Code Interpreter? Feel free to play with the appgen button in Webby: webby.christuttle1.repl.co. It's free to play with unless/until we get too hammered API-wise. I’d love any feedback!

Doraemon manga from 1970s Japan that predicted all of this AI stuff :) h/t @_akhaliq

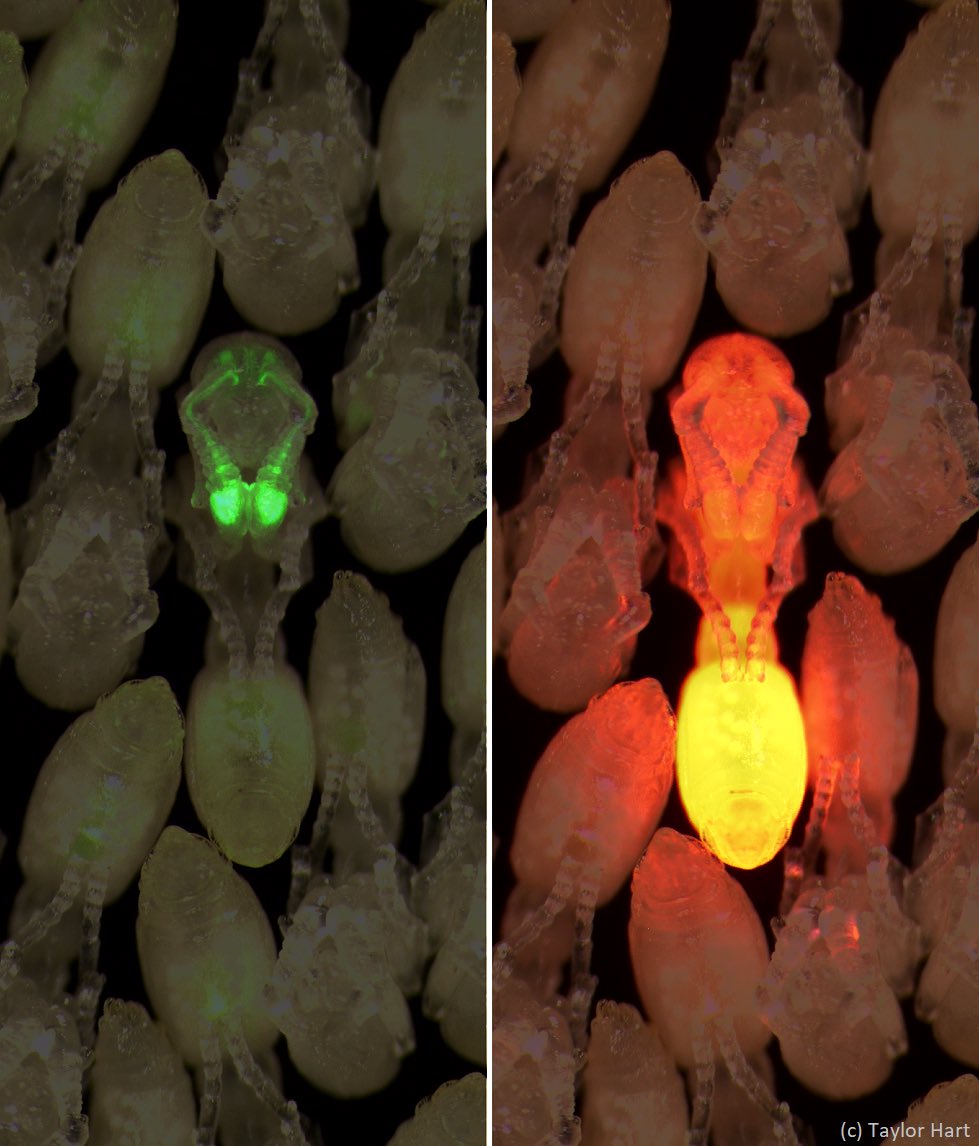

Our first neurogenetic study of ants survived peer review and just appeared OA in its final form @CellCellPress @CellPressNews: cell.com/cell/fulltext/…. @teraxurato & team @RockefellerUniv created the first transgenic ants and identified a sensory hub for alarm behavior. 1/8

Snake game in 10 seconds with Webby’s appgen, upgraded to GPT-3.5-turbo-16K.

AAAH GPT-3.5 16k is so fast, and it is so liberating to raise all my token limits. webby.christuttle1.repl.co is updated now; it was quick to just change my limits and add -16K to the API call. Good job @OpenAI. P.S. appgen is silly now: you can get a working 10K-token app in one go.
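A sketch of the "change my limits" step, assuming the reply's token limit is computed as the model's context window minus the prompt length. The window sizes below are assumptions to check against OpenAI's current model documentation, and `completion_budget` is a hypothetical helper, not part of the OpenAI SDK.

```python
# Assumed context windows in tokens; verify against OpenAI's model docs before use.
CONTEXT_WINDOWS = {
    "gpt-3.5-turbo": 4_096,
    "gpt-3.5-turbo-16k": 16_384,
}


def completion_budget(model: str, prompt_tokens: int, reserve: int = 0) -> int:
    """Tokens left for the reply after the prompt and an optional safety reserve."""
    window = CONTEXT_WINDOWS[model]
    budget = window - prompt_tokens - reserve
    if budget <= 0:
        raise ValueError(f"prompt of {prompt_tokens} tokens does not fit in {model}")
    return budget


print(completion_budget("gpt-3.5-turbo-16k", 6_000))  # 10384
```

Keeping the limits in one table means switching to the 16k model changes one entry rather than every call site.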

16k 3.5 works and is Faaast, at least right now. Casually doubling pretty much all the token limits in my projects lol

lots of good updates including function calling and 16k context 3.5-turbo: openai.com/blog/function-…

openai.com/blog/function-…
- new function calling capability in the API
- updated & more steerable versions of gpt-4 & gpt-3.5-turbo
- new 16k context window of gpt-3.5-turbo
- 75% cost reduction on our v2 embeddings model
- 25% cost reduction on input tokens for gpt-3.5-turbo
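A minimal local sketch of the function-calling loop, with no network call: the app describes a function with a JSON Schema, the model replies with a `function_call` that names it and carries JSON-encoded arguments, and the app runs the matching Python function. The message shape is assumed to follow the 2023 chat completions format and is mocked by hand here; `count_words`, `FUNCTIONS`, `REGISTRY`, and `dispatch` are hypothetical names.

```python
import json


def count_words(text: str) -> int:
    """Hypothetical local function the model may ask the app to run."""
    return len(text.split())


# Function description you would send as the request's `functions` list (assumed shape).
FUNCTIONS = [{
    "name": "count_words",
    "description": "Count the words in a piece of text.",
    "parameters": {
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
}]

# Map function names from the model's reply to real Python callables.
REGISTRY = {"count_words": count_words}


def dispatch(message: dict):
    """Run the requested function, or return None if the model just answered in text."""
    call = message.get("function_call")
    if call is None:
        return None
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return REGISTRY[call["name"]](**args)


# Mocked assistant message; no API request is made.
mock = {
    "role": "assistant",
    "content": None,
    "function_call": {"name": "count_words", "arguments": '{"text": "hello plant friends"}'},
}
print(dispatch(mock))  # 3
```

In a real app you would send the function's result back to the model as a follow-up message so it can phrase the final answer.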
