Sam Foreman

@saforem2

training large models for science @argonne

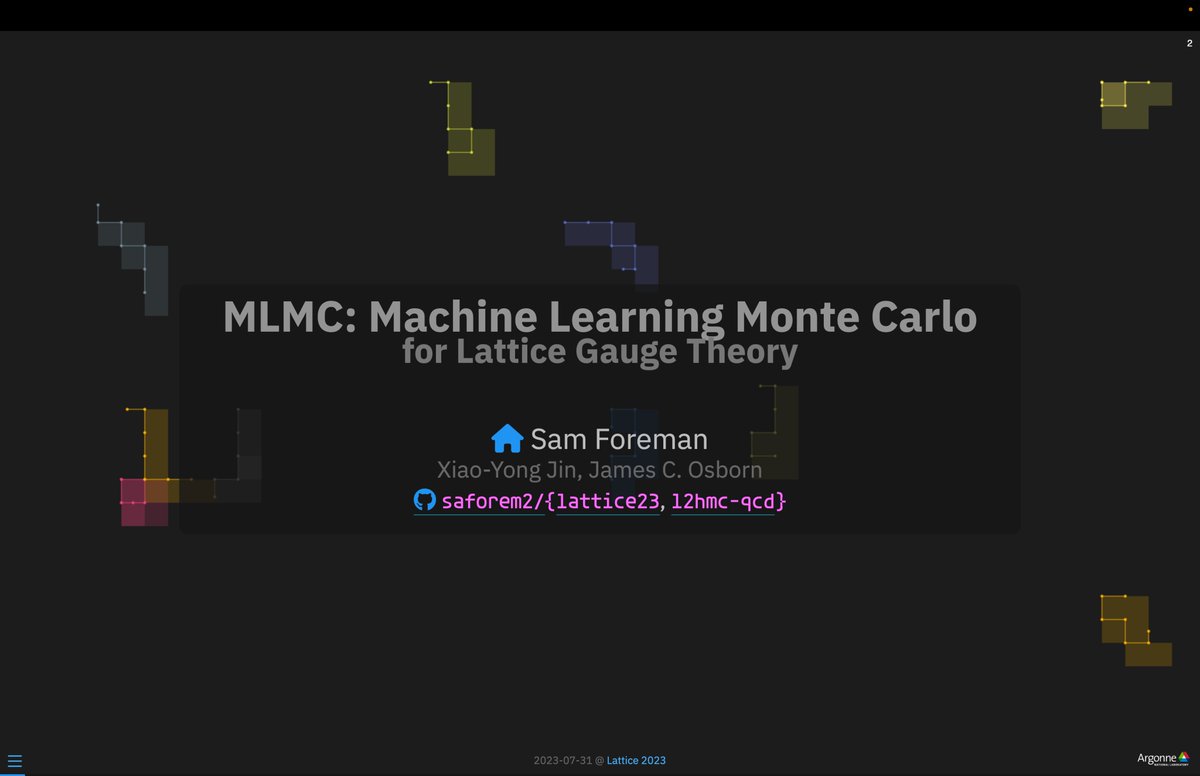

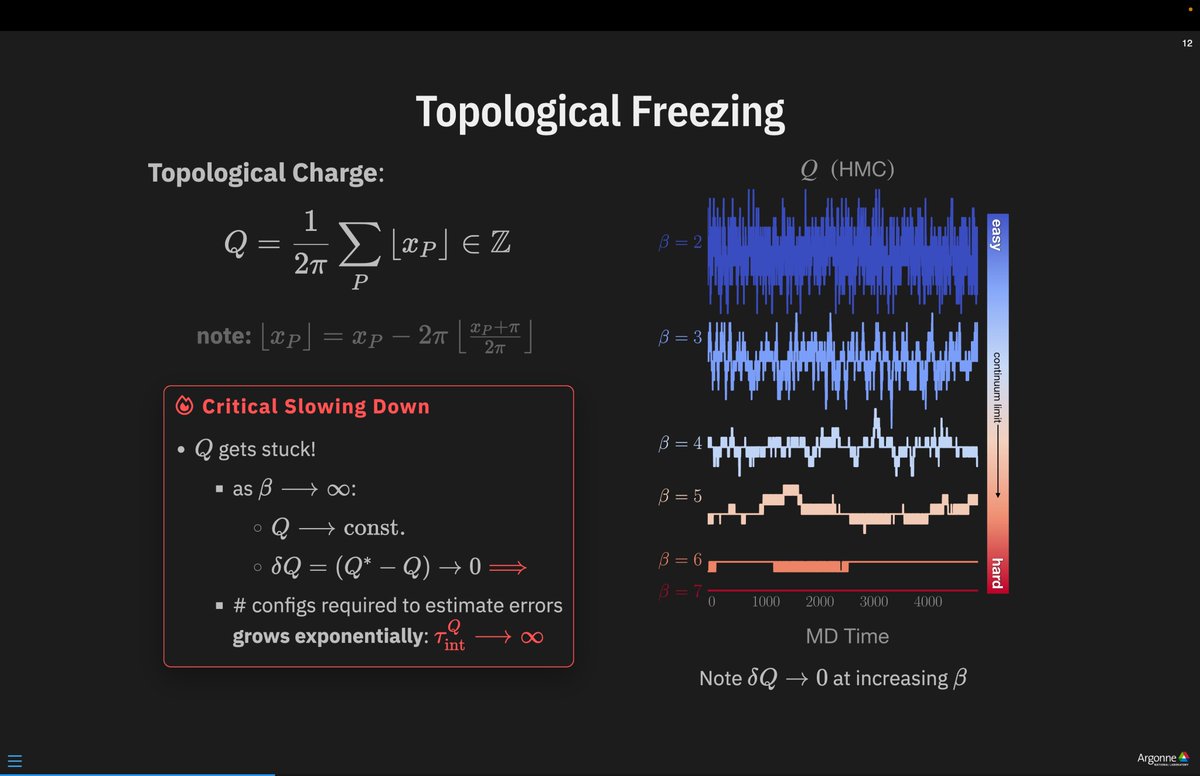

slides from my talk yesterday at #Lattice2023
MLMC: Machine Learning Monte Carlo for Lattice Gauge Theory
Slides: saforem2.github.io/lattice23
Code: github.com/saforem2/l2hmc…

i should've gone into industry man

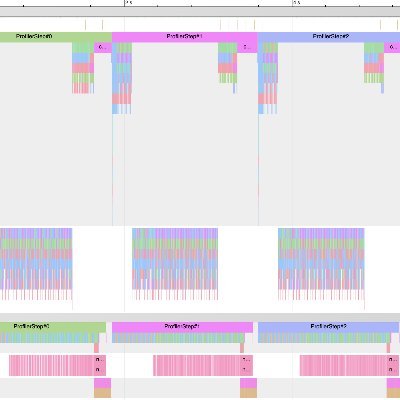

W&B absolutely cooking with this new TUI

We heard you all like TUIs! Announcing Lightweight Experiment Exploration Tool (LEET). Available today in wandb sdk 0.23.0. Run "wandb beta leet"

Arvind talking about generative models and agents for biology

Imagine losing first authorship because you got hit by a blue shell on the last lap 💀

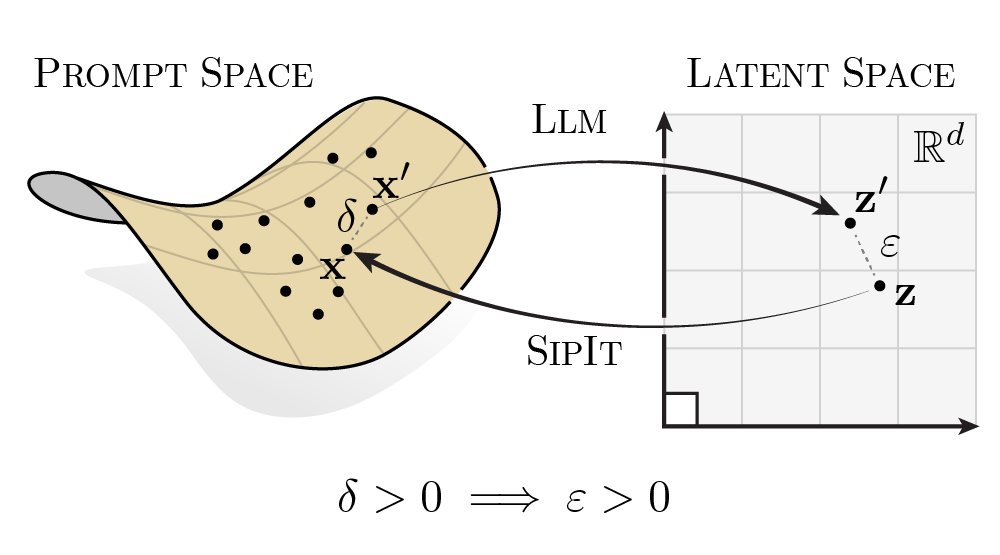

LLMs are injective and invertible. In our new paper, we show that different prompts always map to different embeddings, and this property can be used to recover input tokens from individual embeddings in latent space. (1/6)

I've reimplemented the core parts of TRM in MLX to experiment with locally and reduce complexity. This includes:
- deep supervision w/ ACT
- recursive reasoning steps (n & T)
- EMA + lr schedules
- x, y, z values etc.
- policy or max step inference (new)

github.com/stockeh/mlx-trm

New paper 📜: Tiny Recursion Model (TRM) is a recursive reasoning approach with a tiny 7M-parameter neural network that obtains 45% on ARC-AGI-1 and 8% on ARC-AGI-2, beating most LLMs.
Blog: alexiajm.github.io/2025/09/29/tin…
Code: github.com/SamsungSAILMon…
Paper: arxiv.org/abs/2510.04871

i did math (and physics) at UIUC and had no AP math or science credits coming in i took stats senior year 😭

If you want to major in math at an elite university, but all the knowledge you show up with is high school math and AP Calculus, and you’re not a genius, then you’re probably going to get your ass handed to you. High school math – even the “honors” track, even getting a 5 on the…

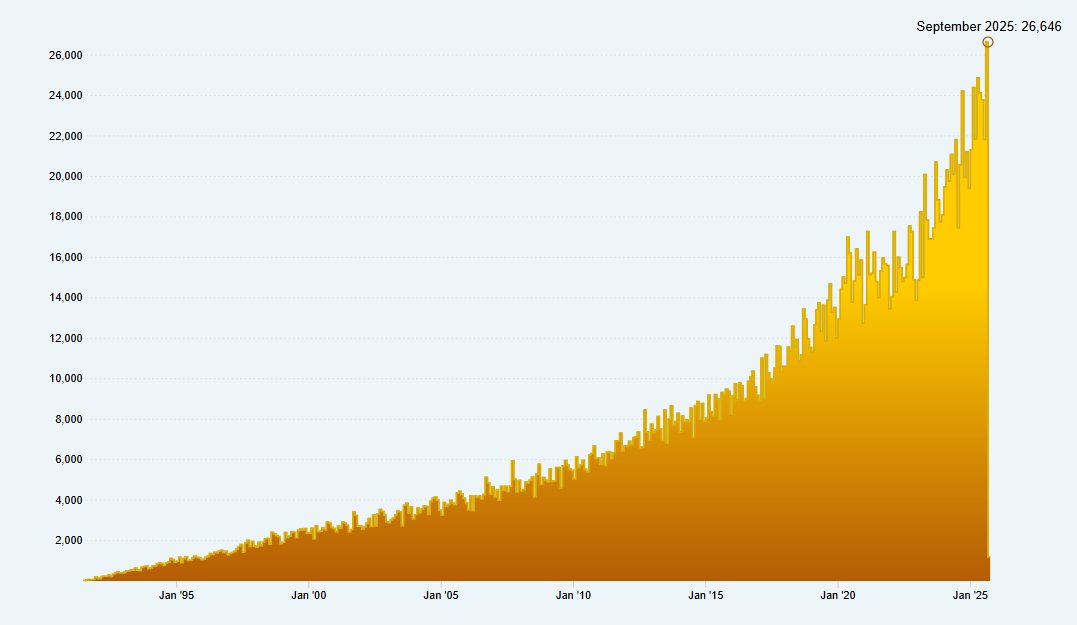

Days in a work week: 5
Days in a month: 30
Total new submissions to arXiv in September: 26,646
arXiv editorial and user support staff: 7
someone who is good at science please help me with this. our team isn't sleeping. #openaccess #preprints

Well, in light of the massive TFR over the Chicagoland area that's grounded me for the foreseeable future, here's a selection of some of my favorite drone images I've captured this year.

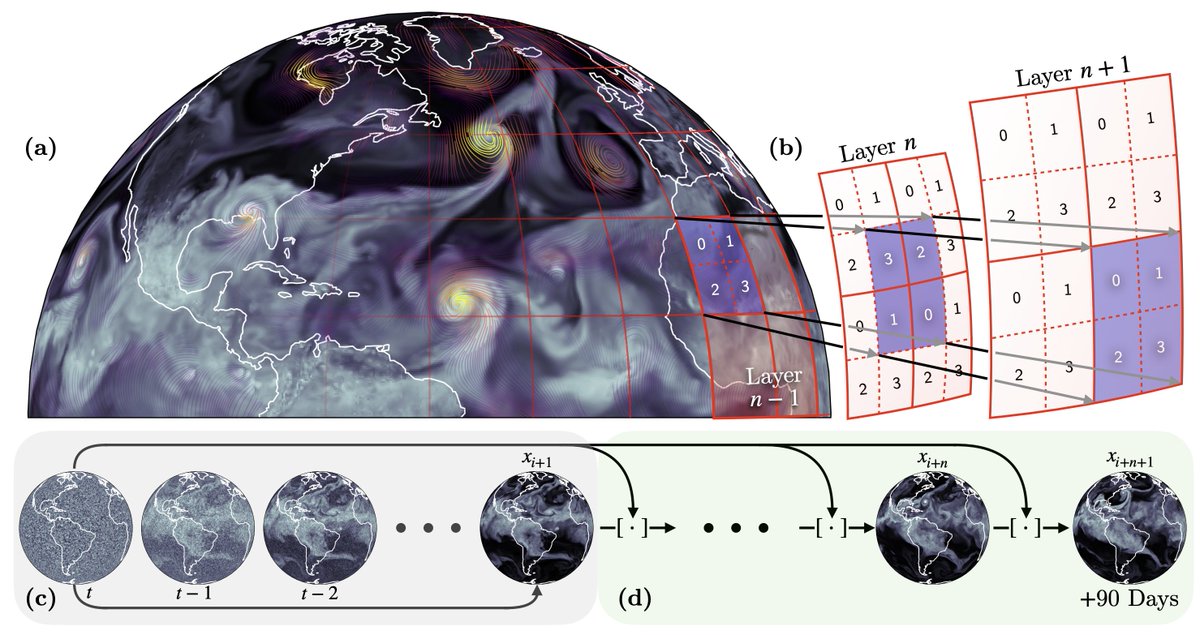

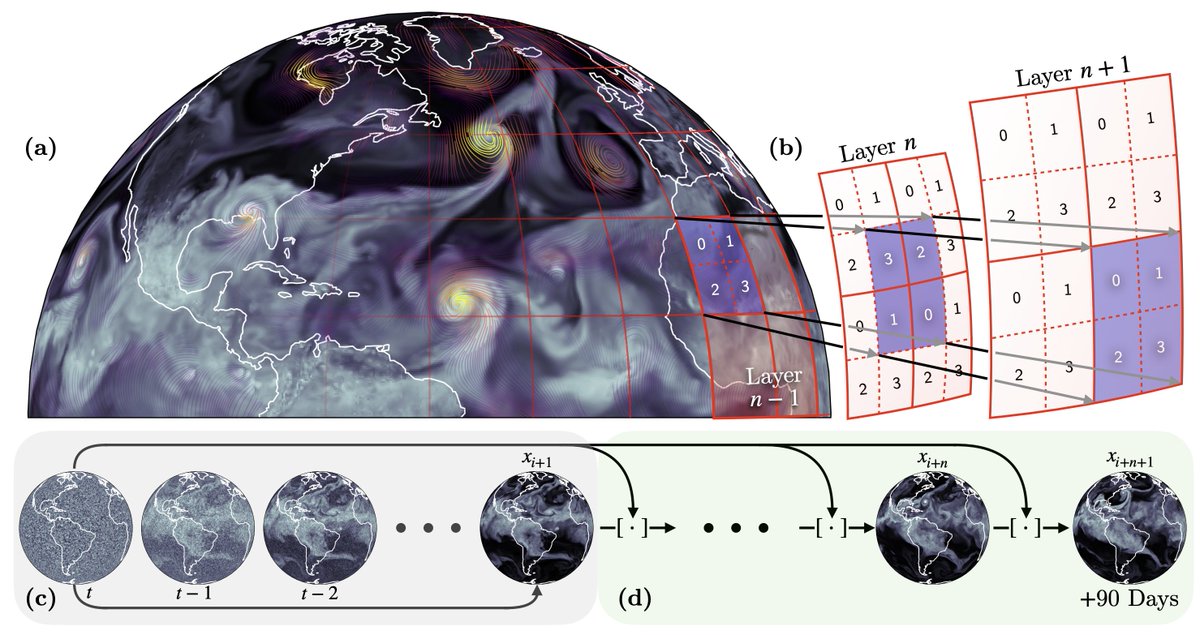

I’m happy to be able to finally talk about this work publicly
we trained a diffusion transformer model for weather forecasting on 120,000 GPUs at a sustained throughput of 10 EFLOPs
this is an incredible accomplishment and i’m super proud of our team

Excited to share our 2025 ACM Gordon Bell Finalist: 🌎 AERIS, our 1.3–80B parameter pixel-level Swin diffusion transformer, addresses scaling issues in high-resolution weather forecasting using SWiPe parallelism to scale to 121,000 GPUs.

The Chicago River at night

anything called more than once goes at the top of the file; anything used only once gets put inside the function where it’s needed

When you think more about it... why put all imports at the top of the file? For those imports that you use a hundred times across the file, like import numpy as np, sure. But the many imports that you only use once or twice in the whole file? Like the from PIL import Image that's…
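A minimal sketch of the rule of thumb in the two posts above, assuming only the standard library. The function names (`circle_area`, `circle_circumference`, `dump_report`) are hypothetical and chosen just for illustration: `math` is used by more than one function, so it sits at module level, while `json` is needed in a single place, so its import is deferred to that function.

```python
import math  # used by several functions below, so it lives at the top of the file


def circle_area(radius: float) -> float:
    """Area of a circle with the given radius."""
    return math.pi * radius**2


def circle_circumference(radius: float) -> float:
    """Circumference of a circle with the given radius."""
    return 2 * math.pi * radius


def dump_report(radius: float) -> str:
    """Serialize both measurements to a JSON string."""
    # json is needed only here, so the import is deferred to the call site.
    import json

    return json.dumps(
        {
            "area": circle_area(radius),
            "circumference": circle_circumference(radius),
        },
        sort_keys=True,
    )


if __name__ == "__main__":
    print(dump_report(1.0))
```

A function-local import statement runs on every call, but after the first call Python finds the module already cached in `sys.modules`, so the repeated cost is a lookup rather than a reload. The trade-off is that a missing dependency surfaces only when that function is actually called.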

Anybody that actually trained a model at large scale would tell you how painful and stressful it is to be 24/7 on the watch for infra crash, loss spike, expert routing collapse. Not convinced of the analogy haha

Yes sex is great but have you ever had a training run on 10K+ GB200s converge successfully If so could you pls dm me thx

i like how in math you get to say "consider a function f from... etc" without any preamble and other mathematicians just have to consider it
