Saurabh Garg

@saurabh_garg67

@thinkymachines | prev/ Researcher @MistralAI; PhD @mldcmu; CS @iitbombay (undergrad); Collab @GoogleAI @awscloud @apple

Today we’re announcing research and teaching grants for Tinker: credits for scholars and students to fine-tune and experiment with open-weight LLMs. Read more and apply at: thinkingmachines.ai/blog/tinker-re…

Our latest post explores on-policy distillation, a training approach that unites the error-correcting relevance of RL with the reward density of SFT. When training it for math reasoning and as an internal chat assistant, we find that on-policy distillation can outperform other…

Tinker is cool. If you're a researcher/developer, Tinker dramatically simplifies LLM post-training. You retain 90% of algorithmic creative control (usually related to data, loss function, the algorithm) while Tinker handles the hard parts that you usually want to touch much less…

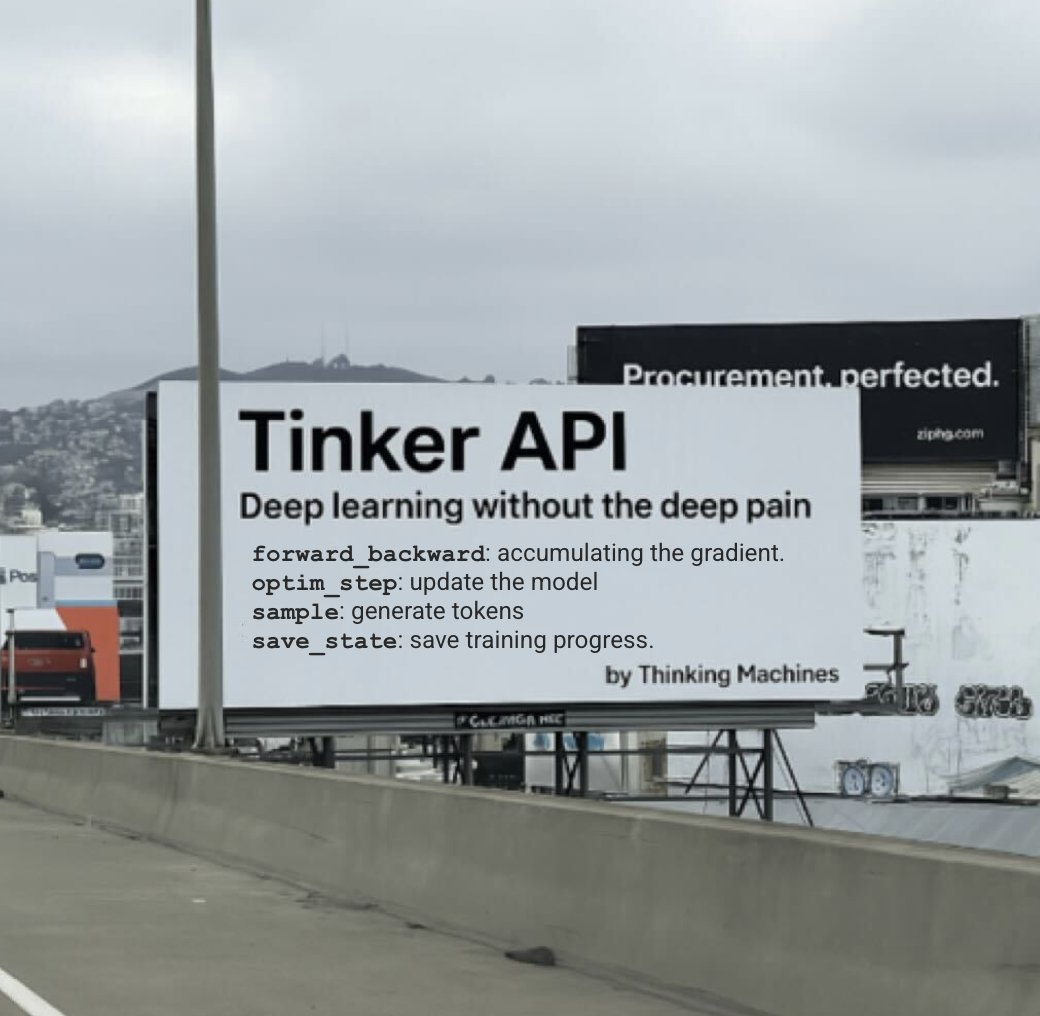

Introducing Tinker: a flexible API for fine-tuning language models. Write training loops in Python on your laptop; we'll run them on distributed GPUs. Private beta starts today. We can't wait to see what researchers and developers build with cutting-edge open models!…

GPUs are expensive and setting up the infrastructure to make GPUs work for you properly is complex, making experimentation on cutting-edge models challenging for researchers and ML practitioners. Providing high quality research tooling is one of the most effective ways to…

One interesting "fundamental" reason for Tinker today is the rise of MoE. Whereas hackers used to deploy llama3-70B efficiently on one node, modern deployments of MoE models require large multinode deployments for efficiency. The underlying reason? Arithmetic intensity. (1/5)

LoRA makes fine-tuning more accessible, but it's unclear how it compares to full fine-tuning. We find that the performance often matches closely, more often than you might expect. In our latest Connectionism post, we share our experimental results and recommendations for LoRA.…

Efficient training of neural networks is difficult. Our second Connectionism post introduces Modular Manifolds, a theoretical step toward more stable and performant training by co-designing neural net optimizers with manifold constraints on weight matrices.…

Since compute grows faster than the web, we think the future of pre-training lies in the algorithms that will best leverage ♾ compute. We find simple recipes that improve the asymptote of compute scaling laws and are 5x more data efficient, offering better perf w/ sufficient compute.

Today Thinking Machines Lab is launching our research blog, Connectionism. Our first blog post is “Defeating Nondeterminism in LLM Inference”. We believe that science is better when shared. Connectionism will cover topics as varied as our research is: from kernel numerics to…

Really excited about our focus on building multimodal AI that collaborates with humans the way humans collaborate with each other. It's been an amazing ~4 months building with a small, talented team. Come join us!

Thinking Machines Lab exists to empower humanity through advancing collaborative general intelligence. We're building multimodal AI that works with how you naturally interact with the world - through conversation, through sight, through the messy way we collaborate. We're…

We have been working hard for the past 6 months on what I believe is the most ambitious multimodal AI program in the world. It is fantastic to see how pieces of a system that previously seemed intractable just fall into place. Feeling so lucky to create the future with this…

It’s really fun to work with a talented yet small team. Our mission is ambitious: multimodal AI for collaborating with humans, so the best is yet to come! Join us, or fill out the application below if interested!

Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical network that replaces tokenization with a dynamic chunking process directly inside the model, automatically discovering and operating over meaningful units of data

Is your AI keeping up with the world? Announcing #NeurIPS2025 CCFM Workshop: Continual and Compatible Foundation Model Updates. When/Where: Dec. 6-7, San Diego. Submission deadline: Aug. 22, 2025 (opening soon!). sites.google.com/view/ccfm-neur… #FoundationModels #ContinualLearning

Very excited to finally release our paper for OpenThoughts! After DataComp and DCLM, this is the third large open dataset my group has been building in collaboration with the DataComp community. This time, the focus is on post-training, specifically reasoning data.

Giving your models more time to think before prediction, such as via smart decoding, chain-of-thought reasoning, latent thoughts, etc., turns out to be quite effective for unblocking the next level of intelligence. New post is here :) “Why we think”: lilianweng.github.io/posts/2025-05-…

Was fun hosting an informal IITB CS get-together in SF. We still argue about which hostel is the best 🙃 The oldest person was born in 1992 and the youngest was a decade younger.

Introducing the world's best OCR model! mistral.ai/news/mistral-o…
