Shaojie Bai

@shaojieb

Doing AI at @thinkymachines. Previously GenAI+RLR @ Meta. CMU MLD. Twitter account for more than AI.

Post-training made (extremely) easy. Try it out!

Introducing Tinker: a flexible API for fine-tuning language models. Write training loops in Python on your laptop; we'll run them on distributed GPUs. Private beta starts today. We can't wait to see what researchers and developers build with cutting-edge open models!…

LoRA makes fine-tuning more accessible, but it's unclear how it compares to full fine-tuning. We find that the performance often matches closely---more often than you might expect. In our latest Connectionism post, we share our experimental results and recommendations for LoRA.…

Efficient training of neural networks is difficult. Our second Connectionism post introduces Modular Manifolds, a theoretical step toward more stable and performant training by co-designing neural net optimizers with manifold constraints on weight matrices.…

Thinking Machines Lab exists to empower humanity through advancing collaborative general intelligence. We're building multimodal AI that works with how you naturally interact with the world - through conversation, through sight, through the messy way we collaborate. We're…

We’ll be mixing ideas, cocktails and discussions for a night of *neural-networking* in Singapore! Come learn more about Thinking Machines at our happy hour at #iclr2025 😎

Thinking Machines is hosting a happy hour in Singapore during #ICLR2025 on Friday, April 25: lu.ma/ecgmuhmx Come eat, drink, and learn more about us!

Introducing our first set of Llama 4 models! We’ve been hard at work doing a complete re-design of the Llama series. I’m so excited to share it with the world today and mark another major milestone for the Llama herd as we release the *first* open source models in the Llama 4…

Mitigating racial bias from LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference @NeurIPSConf We have ethical reviews for authors, but missed it for invited speakers? 😡

I'm excited to announce that I am joining the OpenAI Board of Directors. I'm looking forward to sharing my perspectives and expertise on AI safety and robustness to help guide the amazing work being done at OpenAI.

Zico Kolter from Carnegie Mellon joins OpenAI’s Board, bringing technical and AI safety expertise; he also joins the Safety & Security Committee. openai.com/index/zico-kol…

I am very excited to start working with the GenAI team at @Meta, focusing on multimodal LLM agents, together with my amazing CMU colleagues Jing Yu Koh @kohjingyu and Daniel Fried @dan_fried!

I'm thrilled to share that I will become the next Director of the Machine Learning Department at Carnegie Mellon. MLD is a true gem, a department dedicated entirely to ML. Faculty and past directors have been personal role models in my career. cs.cmu.edu/news/2024/kolt…

Say hello to GPT-4o, our new flagship model which can reason across audio, vision, and text in real time: openai.com/index/hello-gp… Text and image input rolling out today in API and ChatGPT with voice and video in the coming weeks.

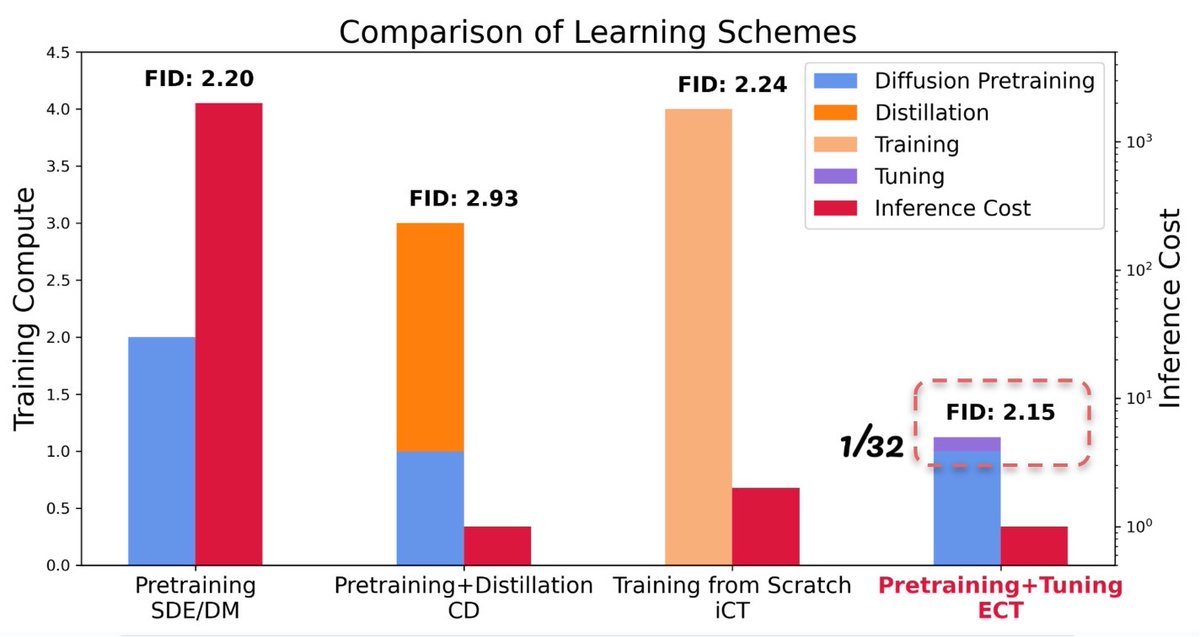

🚀Our latest blog post unveils the power of Consistency Models and introduces Easy Consistency Tuning (ECT), a new way to fine-tune pretrained diffusion models to consistency models. SoTA fast generative models using 1/32 training cost! 🔽 Get ready to speed up your generative…

I feel like a lot of people leverage LLMs suboptimally, especially for long-form interactions that span a whole project. So I wrote a VSCode extension that supports what I think is a better use paradigm. 🧵 1/N Extension: marketplace.visualstudio.com/items?itemName… Code: github.com/locuslab/chatl…

Exciting new work with Evonne, @AlexRichardCS et al! 🤯 We (generatively) animate photorealistic full-body avatars using conversational audio (of anyone!). Next step, seeing GPT4 + photorealistic avatar [argue with]/[point a finger at]/[mock] you in VR?😏

Motion generation for photorealistic avatars? Say no more! Check out how we animate full body avatars exclusively from audio input! Paper: arxiv.org/abs/2401.01885 Project page: people.eecs.berkeley.edu/~evonne_ng/pro… Dataset + Code: github.com/facebookresear…

My core ML team (@AIatMeta) is hiring research interns! Our projects span optimization, optimal transport, optimal control, generative modeling, complex systems, and geometry. Please apply here and reach out ([email protected]) if you're interested: metacareers.com/jobs/627997209…

@CadeMetz at the New York Times just published a piece on a new paper we are releasing today, on adversarial attacks against LLMs. You can read the piece here: nytimes.com/2023/07/27/tec… And find more info and the paper at: llm-attacks.org [1/n]

NeurIPS!!! My first in-person conference in the 3 years since I started my research. 🥳 Happy to chat about neural dynamics, deep equilibrium models (DEQ), symmetries, protein folding/AF2, etc. I will be working on the intersection of DEQ and AF2 and look forward to any chances to collaborate!

🆕📜When can **Equilibrium Models** learn from simple examples to handle complex ones? We identify a property, Path Independence, that enables this by letting EMs think for longer on hard examples. (NeurIPS) 📝: arxiv.org/abs/2211.09961

I just posted our Deep Learning Systems Lecture 6 on Fully Connected Networks, Optimization, and Initialization: youtu.be/CukpVt-1PA4 However, the real topic of interest here is that I used @OpenAI's whisper to caption it entirely. A thread 🧵on my experience. 1/N
