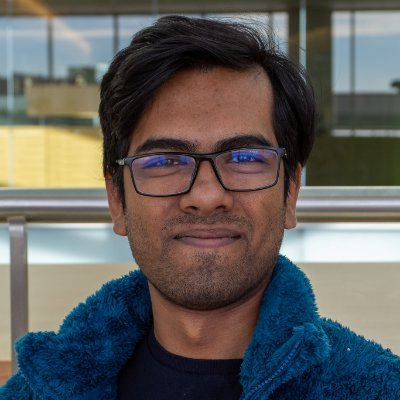

Siddharth Suresh

@siddsuresh97

ML Research Intern @NetflixResearch | PhD student @UWMadison | Human-AI Alignment | Prev Applied Scientist Intern @AmazonAGI, Intern @BrownCLPS

Excited to announce that our paper NOVA (Norms Optimized Via AI) 🧠🤖 won the best paper award at the ICLR'25 Bi-Align workshop 🏅 and the computational modeling prize 🏆 for applied cognition at CogSci'25. Here's a tweeprint by my co-first author @kushin_m. #cogsci2025…

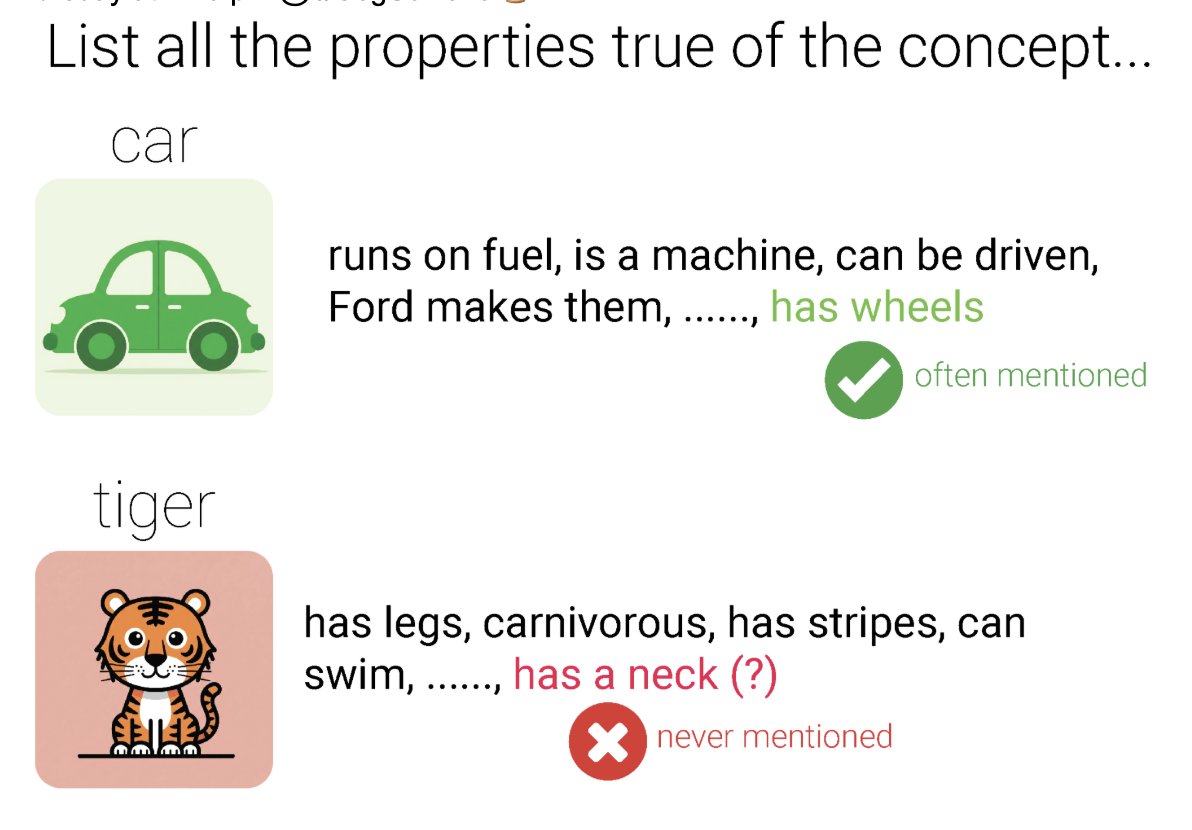

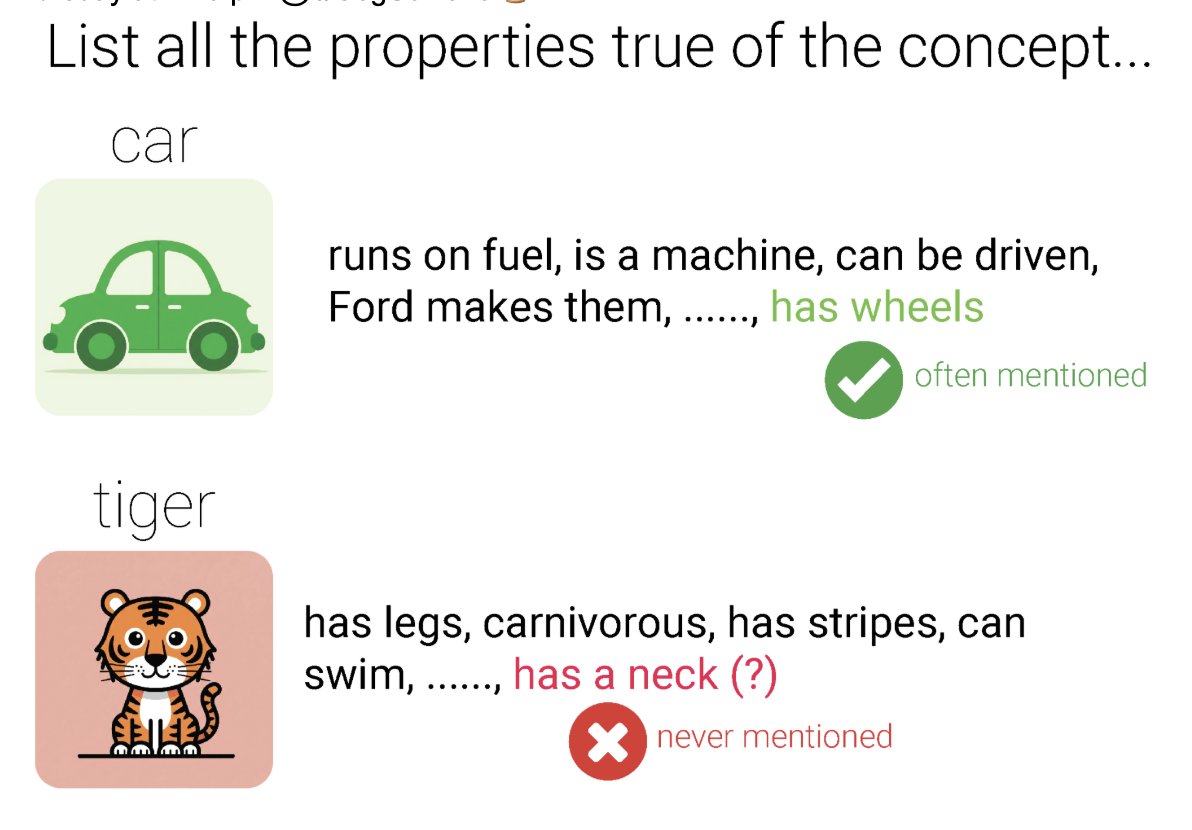

Do cars have wheels? Of course! Do tigers have necks? Of course! While folks know both these facts, they’re not likely to mention the latter. To learn what implications this has for how we measure semantic knowledge, come to our talk T-09-1 on Thursday at 2:15 pm @ #CogSci2025 🧵

honored to give a plenary address at the Society for Language Development annual symposium today titled "Whence insights? The value of delineating human and machine CogSci". It's a synthesis of a few years of thoughts, recently concretized with @AdityaYedetore & @kanishkamisra

Happening this morning!

Stoked to be at #ieeevis where I’m presenting *EncQA*, our dynamic benchmark for assessing how vision-language models understand visual encodings for chart understanding tasks! Talk tomorrow (Nov 6) @ 10:00 am in session ‘Explanation, Exploration, and Model Configuration’. 🧵

🧵🎉 Our mega-paper is finally published in TMLR! We're "Getting Aligned on Representational Alignment" - the degree to which internal representations of different (biological & artificial) information processing systems agree. 🧠🤖🔬🔍 #CognitiveScience #Neuroscience #AI

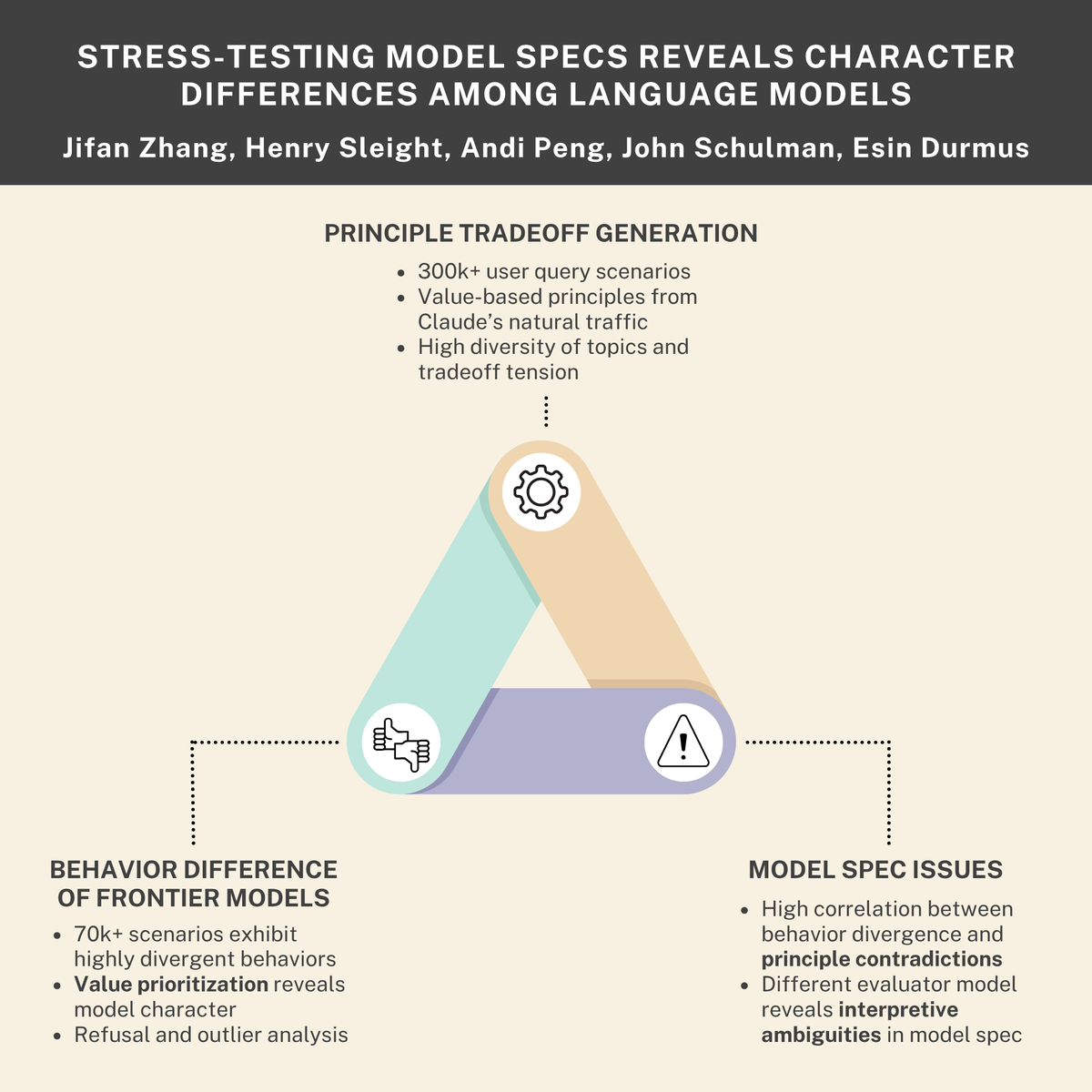

New research paper with Anthropic and Thinking Machines. AI companies use model specifications to define desirable behaviors during training. Are model specs clearly expressing what we want models to do? And do different frontier models have different personalities? We generated…

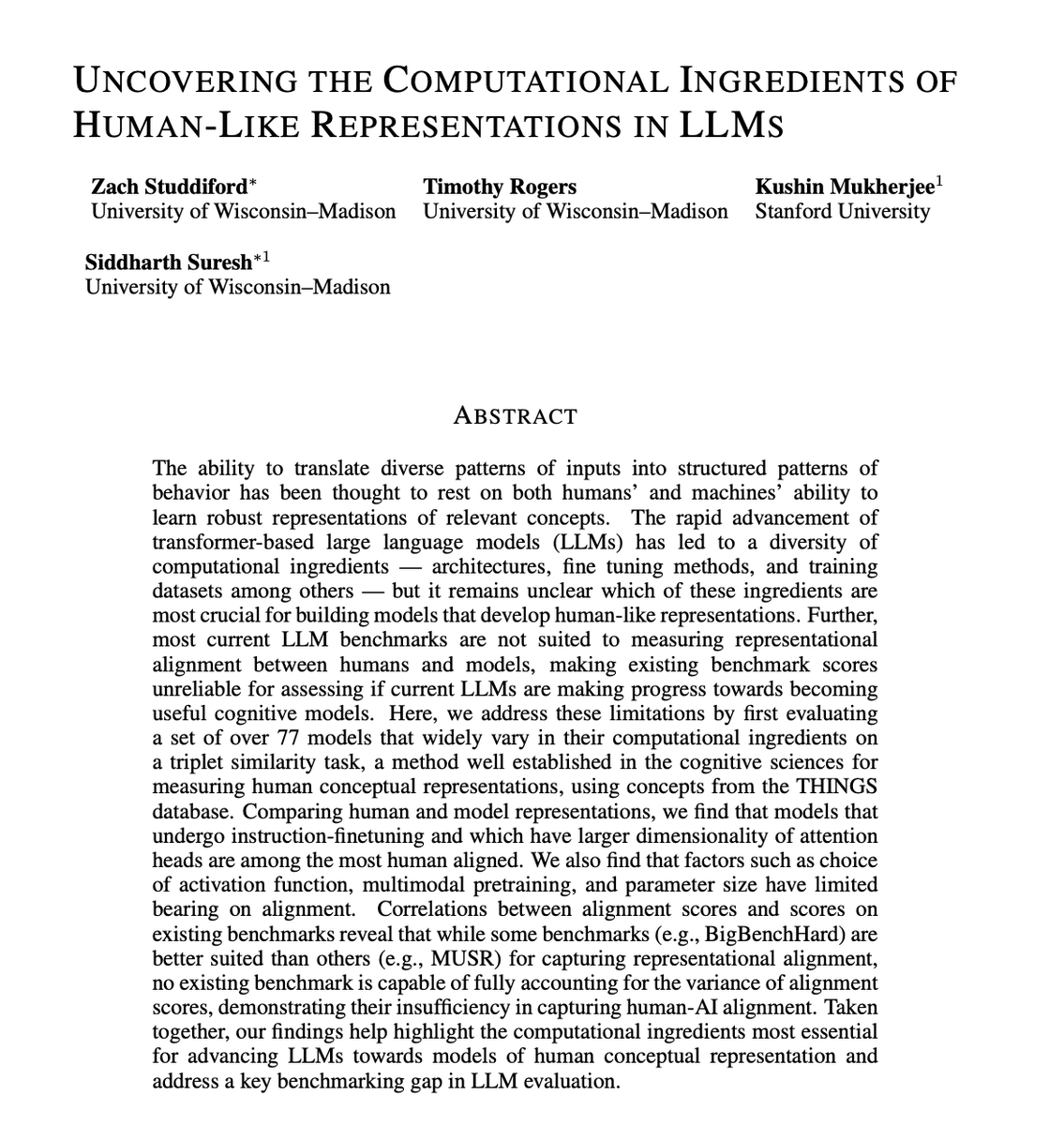

In this work, we explore which computational ingredients matter for building human-like semantic representations in LLMs. Find out more in the thread 🧵 This work was led by the brilliant @ZachStuddiford, who is on the grad school market — recruit him while you can! 😉

We’re drowning in language models: there are over 2 million of them on Hugging Face! Can we use some of them to understand which computational ingredients (architecture, scale, post-training, etc.) help us build models that align with human representations? Read on to find out 🧵

"Although I hate leafy vegetables, I prefer daxes to blickets." Can you tell if daxes are leafy vegetables? LMs can't seem to! 📷 We investigate whether LMs capture these inferences from connectives when they cannot rely on world knowledge. New paper w/ Daniel, Will, @jessyjli!

These positions are at NYU, but I'm also separately recruiting PhD students for next year at Purdue CS. Apply directly to the program and mention me in your application if interested!

📢 Mark and I are recruiting an RA and a postdoc for a big collaboration on trust in AI! 🧠🤖🤔 If you're interested, see the job posting links in Mark's post.

Paper accepted at EMNLP 2025 (main track)! Can LLMs "Guesstimate" - making rough but educated guesses about real-world quantities? For example, 💡 How many marbles fit in a cup? 📈 What will US GDP be in Q2 2025? 🇺🇸 What % of votes will Kamala Harris get in Ohio in 2024 US?…

The compling group at UT Austin (sites.utexas.edu/compling/) is looking for PhD students! Come join me, @kmahowald, and @jessyjli as we tackle interesting research questions at the intersection of ling, cogsci, and ai! Some topics I am particularly interested in:

One day, someone will be bragging that they were Kanishka's first PhD student. That person could be you!

Excited for this workshop at #CCN2025! Come listen to me talk about TopoNets: Topographic models across vision, language and audition. Look forward to seeing old friends and making new ones!

As part of #CCN2025 our satellite event on Monday will explore how we can model the brain as a physical system, from topography to biophysical detail -- and how such models can potentially lead to impactful applications neuroailab.github.io/modeling-the-p…. Join us!

Happening now at Salon 3!!

Come watch our talk (@kushin_m) in Salon-3 today at 2:15PM at CogSci. #cogsci2025

Excited to announce that our paper NOVA (Norms Optimized Via AI) won the best paper award at the ICLR'25 Bi-Align workshop and the computational modeling prize for applied cognition at CogSci'25. Here's a tweeprint by my co-first author @kushin_m. #cogsci2025 @bi_align…

Direct Preference Optimization (DPO) is simple to implement but complex to understand, which creates misconceptions about how it actually works… LLM Training Stages: LLMs are typically trained in four stages: 1. Pretraining 2. Supervised Finetuning (SFT) 3. Reinforcement…
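Since the post above is cut off, here is a minimal sketch of the standard per-example DPO objective, -log σ(β · (chosen log-ratio − rejected log-ratio)), in plain Python. The function and argument names and the β = 0.1 default are illustrative assumptions; real training code computes the summed response log-probabilities with the policy and a frozen reference model over batches of tokens.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative default, not taken from the post
) -> float:
    """Per-example DPO loss: -log sigmoid(beta * (chosen ratio - rejected ratio)).

    Each argument is a (hypothetical) summed log-probability of a full response
    under either the trainable policy or the frozen reference model.
    """
    chosen_log_ratio = policy_chosen_logp - ref_chosen_logp
    rejected_log_ratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


# Policy identical to the reference: the margin is 0, so the loss is log(2).
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
# Policy has shifted probability toward the chosen response: the loss drops below log(2).
print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

Because only log-ratios against the reference model enter the loss, no separate reward model is trained; β scales how strongly the policy is pushed away from the reference.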

It was amazing to be part of this effort. Huge shout out to the team, and all the incredible pre-training and post-training efforts that ensure Gemini is the leading frontier model! deepmind.google/discover/blog/…

🎉 Excited to share that our paper "Pretrained Hybrids with MAD Skills" was accepted to @COLM_conf 2025! We introduce Manticore - a framework for automatically creating hybrid LMs from pretrained models without training from scratch. 🧵[1/n]

🧠 Submit to CogInterp @ NeurIPS 2025! Bridging AI & cognitive science to understand how models think, reason & represent. CFP + details 👉 coginterp.github.io/neurips2025/

We’re excited to announce the first workshop on CogInterp: Interpreting Cognition in Deep Learning Models @ NeurIPS 2025! 📣 How can we interpret the algorithms and representations underlying complex behavior in deep learning models? 🌐 coginterp.github.io/neurips2025/ 1/

Releasing HumorBench today. Grok 4 is 🥇 on this uncontaminated, non-STEM humor reasoning benchmark. 🫡🫡@xai Here are a couple of things I find surprising👇 1. this benchmark yields an almost perfect rank correlation with ARC-AGI. Yet the task of reasoning about New Yorker style…

Whoa... Grok 4 beats o3 on our never-released benchmark: HumorBench, a non-STEM reasoning benchmark that measures humor comprehension. The task is simple: given a New Yorker Caption Contest cartoon and caption, explain the joke.
