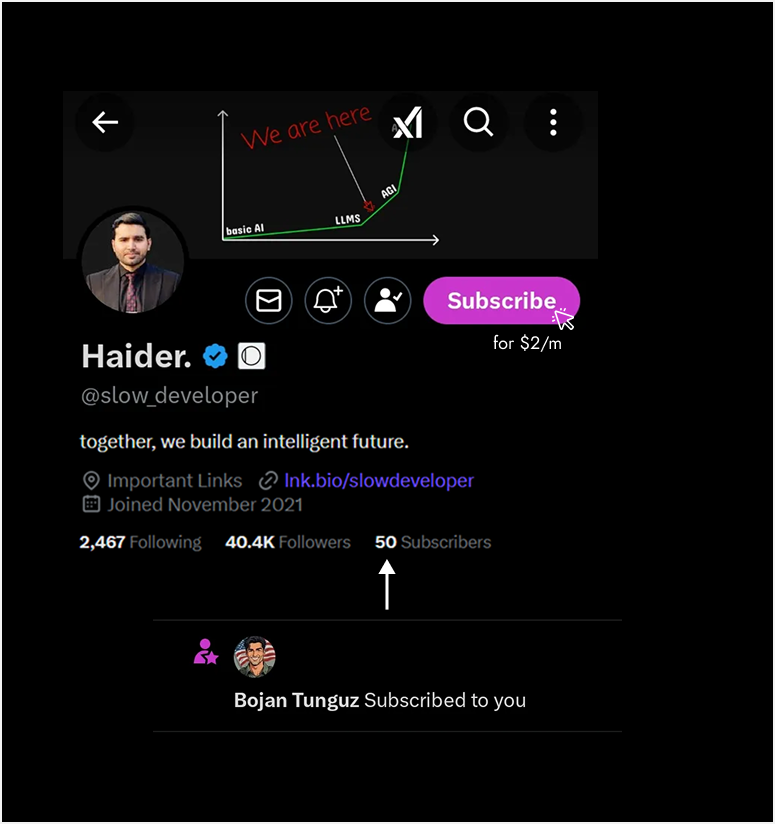

Haider.

@slow_developer

together, we build an intelligent future.

Content you might like

Wow, this is massive and unbelievable. 50 subscribers. i’m super thankful to all of you, seriously. it feels like i’m just getting started, and there’s more fun on the way. btw, those video clips you see on my feed every day? yes, they were edited by my junior (i paid him) so…

openAI and anthropic are more compute-constrained than ever, which has slowed a lot of products. sam has even tweeted about this. over the next 3-4 months, as new compute comes online, we'll probably see many of those already-trained models and products finally ship, so the…

surprisingly, METR still hasn't updated results to include gemini 3 and opus 4.5. the eval basically measures how well a model handles long, complex tasks. right now, gpt-5.1-codex-max is the top performer that can solve about half of the tasks that would take a skilled human…

gemini 3 deepthink looks strong at solving math and science problems. remember how, at the start of the year, models could barely do math? now it's beating humans. arc-agi-2 is already close to 50%, and if the trend keeps going, it'll probably be fully solved by next year.

i'm still figuring out claude vs. codex. codex is cheaper but slower. opus 4.5 has much lower limits now, while sonnet 4.5 feels almost unlimited, like codex pro used to be, so it's a strong replacement. codex still looks promising, and gpt-5.1-high is great for double-checking…

Jensen Huang says the U.S. is 6 months ahead on frontier models, but China is way ahead on open source. Without open source, startups can't build, researchers can't innovate, and entire economies can't advance. "without linux, where would we be?"

google search and its massive data are google's moat. they have been crawling the web for decades, and most sites still let it in because they need the traffic, even if they block AI bots. so google keeps getting fresh data from almost everywhere, plus a massive extra stream from…

2026 feels like the year real AI-driven research starts to take off, especially in theory. also, i'm excited for openAI's "AI research intern" targeted for sept 2026, and curious to see how google and anthropic respond. i guess that by 2027, AI could be behind roughly half of all…

Dario Amodei says scaling will get us to AGI, but without a single arrival moment. Every few months, models get better at coding, science, and math, now winning Olympiads and doing new mathematics. Progress has reached a point where Anthropic engineers no longer write code from…

ppl are looking at today's AI boom and comparing it to the dotcom bubble. the idea is: in 10 years, a lot of the big AI companies we see now might not be on top anymore. so if investors think AI is a guaranteed win and keep throwing money at it, they could lose a lot when the top…

pls stop saying openAI is already introducing ads. they launched app connectors that integrate directly into chatgpt (figma, canva, zillow, etc). when chatgpt thinks it's relevant, it suggests one of those apps, like zillow if you're asking about apartment rentals. still,…

gpt-5.1-codex-max works best when you know what you want and can give it tight, clear instructions. gpt-5 / 5.1 (non-codex) is better for planning, understanding, and guidance. so plan with non-codex, then execute with codex.

Geoffrey Hinton says if you want AI to truly understand the world, give it a robot arm and a camera. Let it pick things up, drop them, run experiments. That's how children learn. But what's amazing: LLMs already grasp spatial concepts from text alone, which puzzles philosophers.

from the start, gemini has focused heavily on multimodality. right now, they seem to have the best image generation and are probably ahead in video too, thanks to youtube data. openAI doesn't need to chase every category. a more focused strategy, like anthropic's, could be smarter.

deepseek releasing an IMO gold-winning model before google or openAI is huge. china is moving extremely fast in AI. imagine what they'd do with western-level compute. they're actually open-sourcing their tech, while the west keeps blocking them from high-end GPUs.

cool research from anthropic... they tested their own engineering team: claude now helps with most of the work, and everyone feels faster. but only a small part is "automated"; humans still guide, check, and fix the code. for me, the cool part of this research is that a lot of…

i never thought the dead internet theory would feel this real this fast. right now, 60-70% of replies are AI-generated. everything is getting more automated, so we'll actually need proof there's a real person on the other side. AI didn't kill the internet; it just made it cheaper…

