Sparsity in LLMs Workshop at ICLR 2025

@sparseLLMs

Workshop on Sparsity in LLMs: Deep Dive into Mixture of Experts, Quantization, Hardware, and Inference @iclr_conf 2025.

We are happy to announce that the Workshop on Sparsity in LLMs will take place @iclr_conf in Singapore! For details: sparsellm.org Organizers: @TianlongChen4, @utkuevci, @yanii, @BerivanISIK, @Shiwei_Liu66, @adnan_ahmad1306, @alexinowak

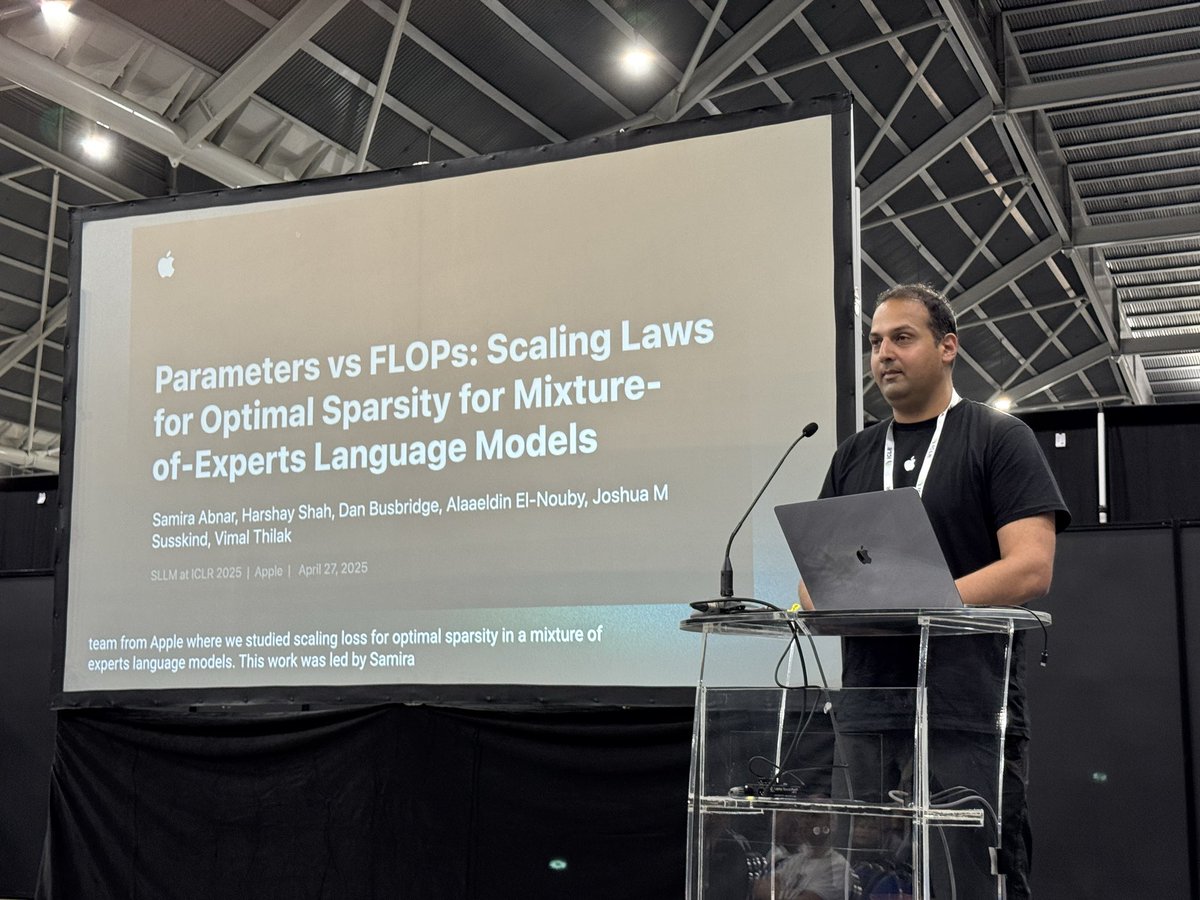

@AggieInCA giving the first Oral talk at #ICLR2025 @sparseLLMs workshop

Presenting shortly! 👉🏼 Mol-MoE: leveraging model merging and RLHF for test-time steering of molecular properties. 📆 today, 11:15am to 12:15pm 📍 Poster session #1, GEM Bio Workshop @gembioworkshop @sparseLLMs #ICLR #ICLR2025

In Mol-MoE, we propose a framework to train router networks to reuse property-specific molecule generators. This makes it possible to personalize drug generation at test time by following property preferences! We discuss some challenges. @proceduralia @pierrelux arxiv.org/abs/2502.05633

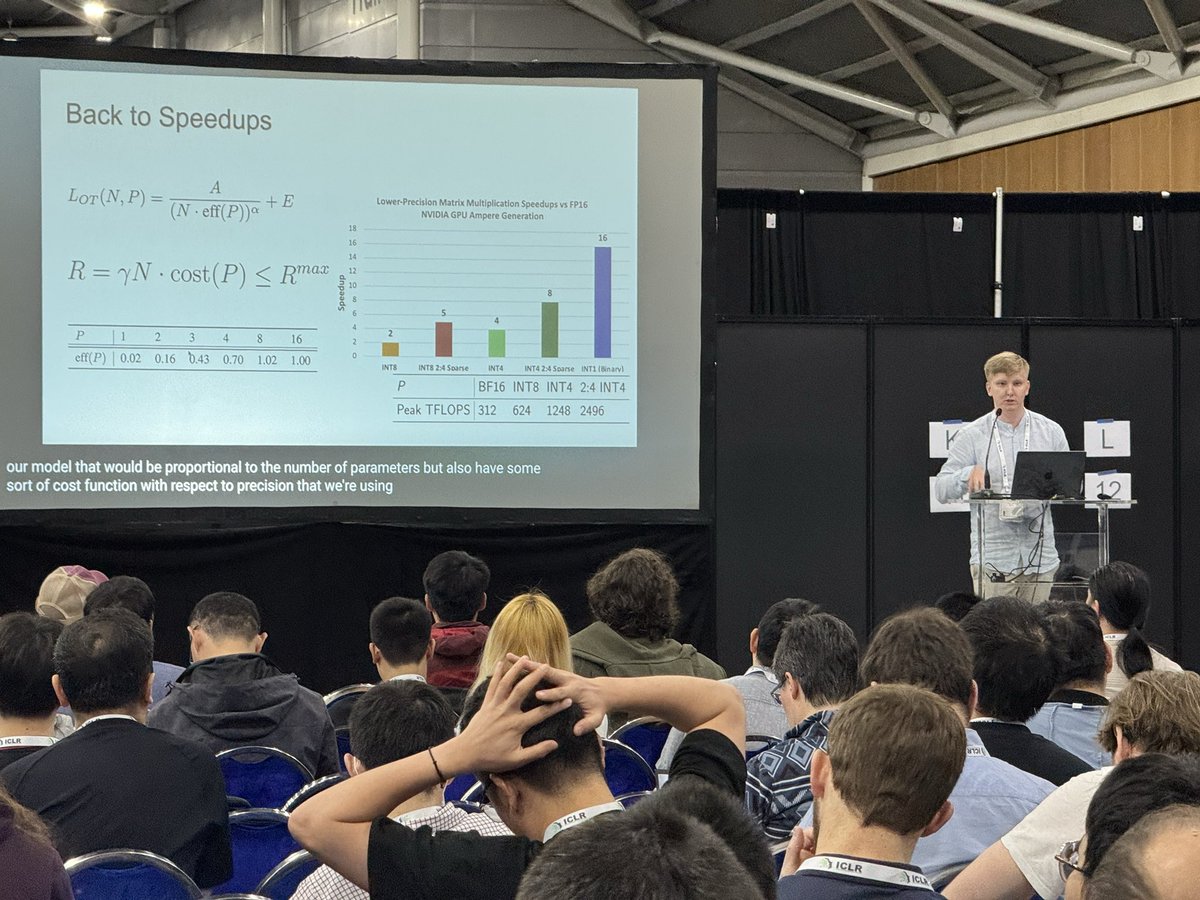

@black_samorez giving his oral talk at the #ICLR2025 @sparseLLMs workshop

We are presenting “Prefix and output length-aware scheduling for efficient online LLM inference” at the ICLR 2025 (@iclr_conf) Sparsity in LLMs workshop (@sparseLLMs). 🪫 Challenge: LLM inference in data centers benefits from data parallelism. How can we exploit patterns in…

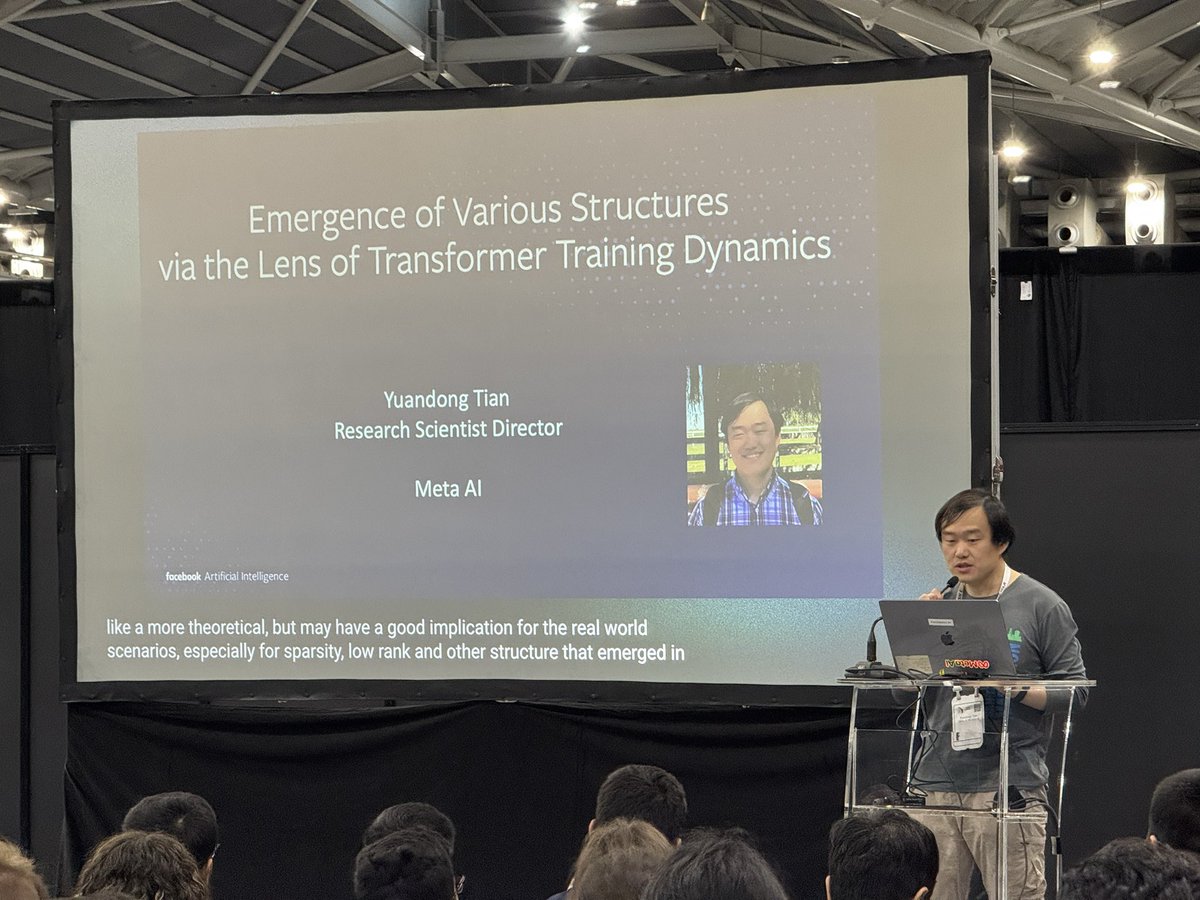

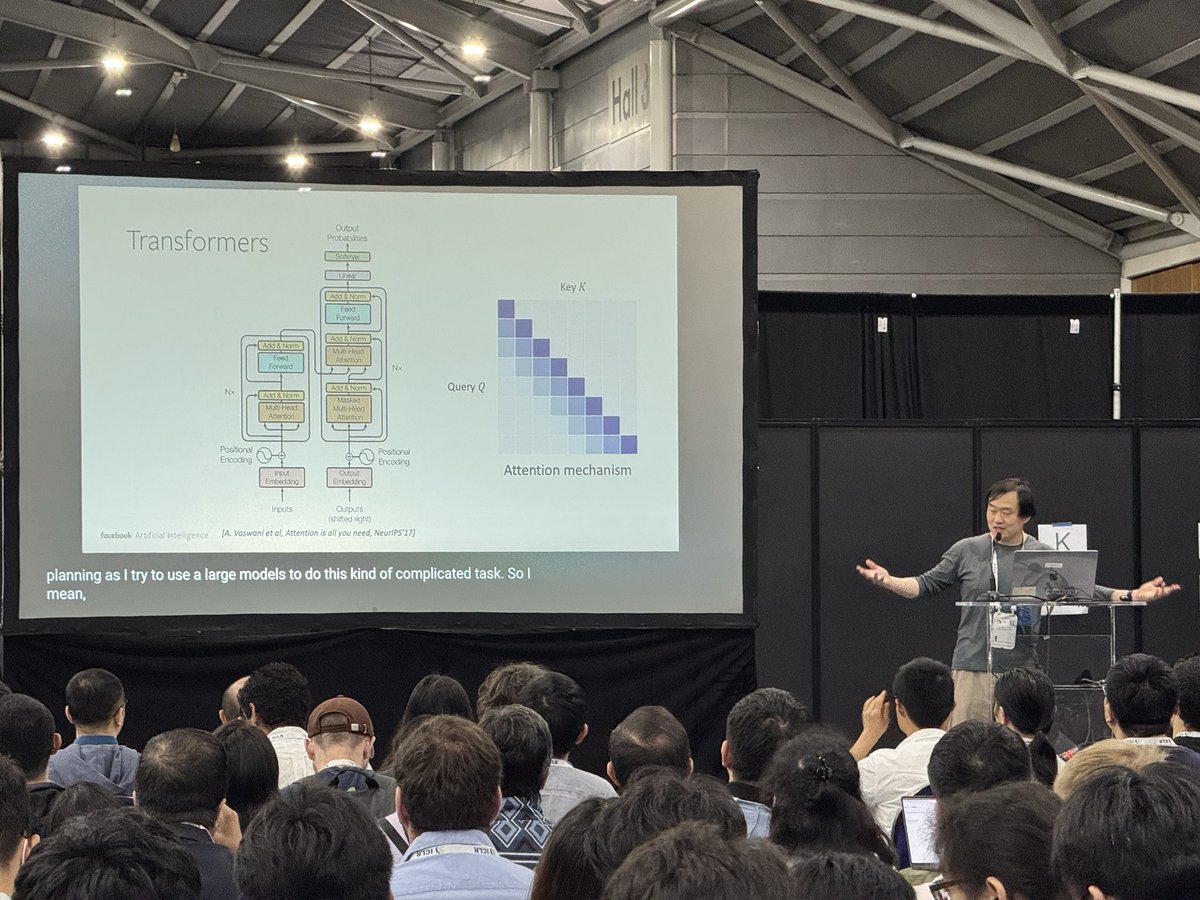

@tydsh giving his invited talk at the #ICLR2025 @sparseLLMs workshop!

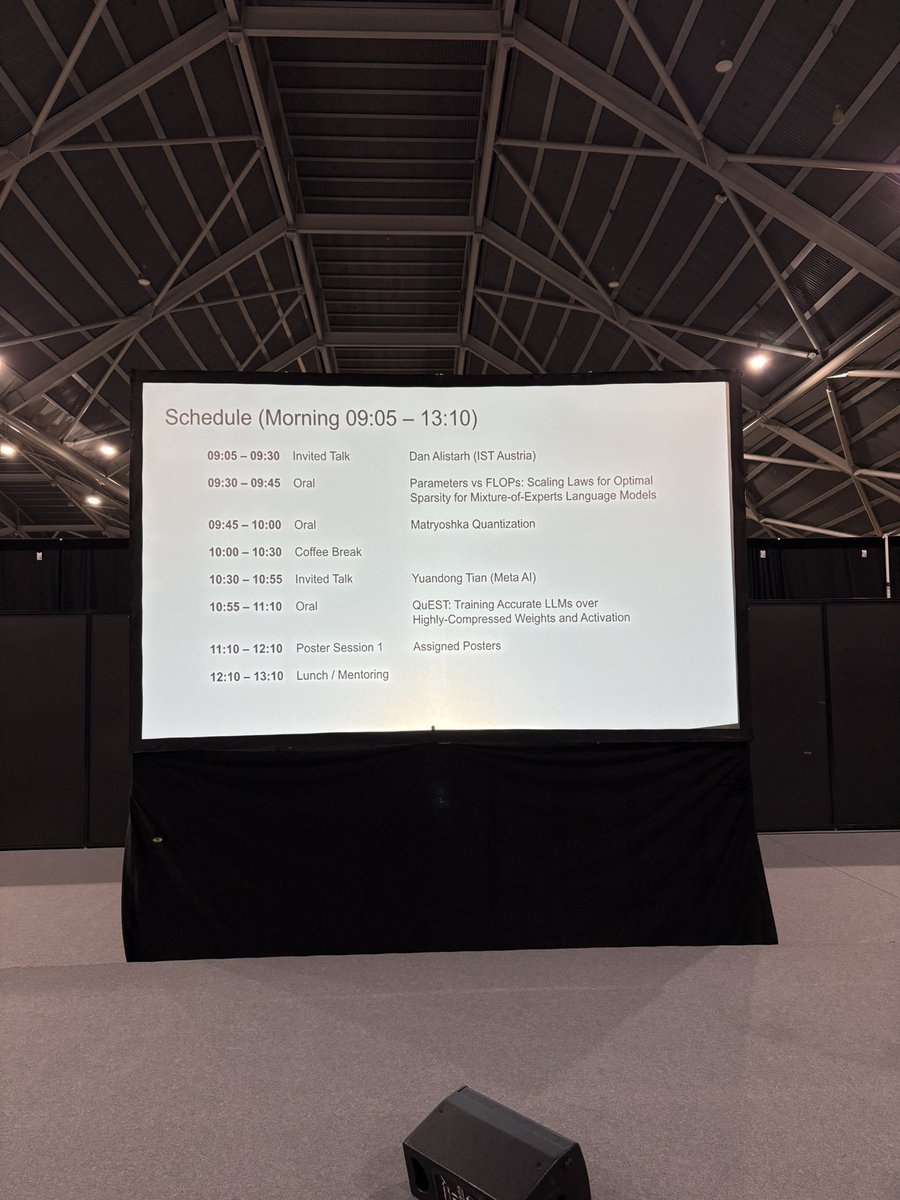

our workshop on sparsity in LLMs is starting soon in Hall 4.7! we’re starting strong with an invited talk from @DAlistarh and an exciting oral on scaling laws for MoEs!

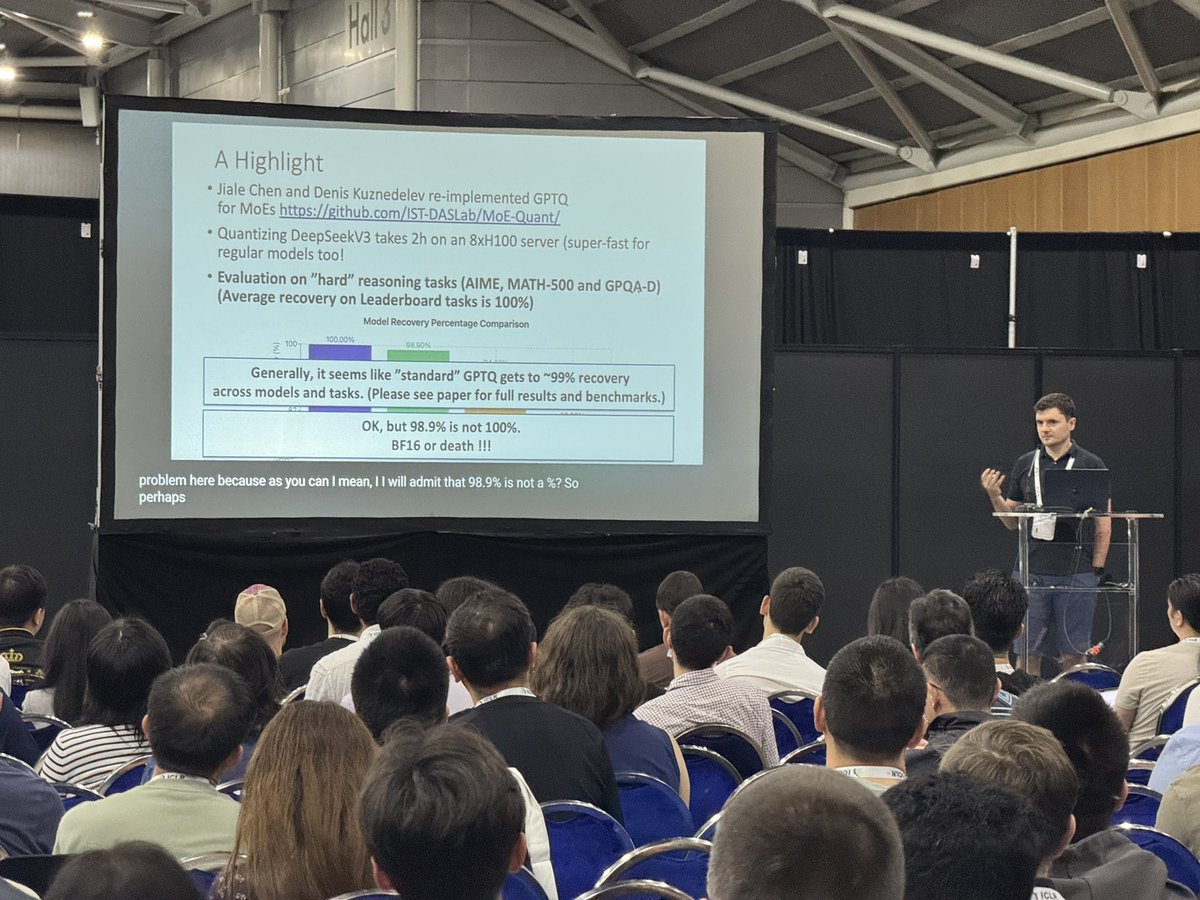

@DAlistarh giving his invited talk at the #ICLR2025 @sparseLLMs workshop

Our ICLR 2025 Workshop on Sparsity in LLMs (@sparseLLMs) kicks off with a talk by @DAlistarh on lossless (~1% perf drop) LLM compression using quantization across various benchmarks.

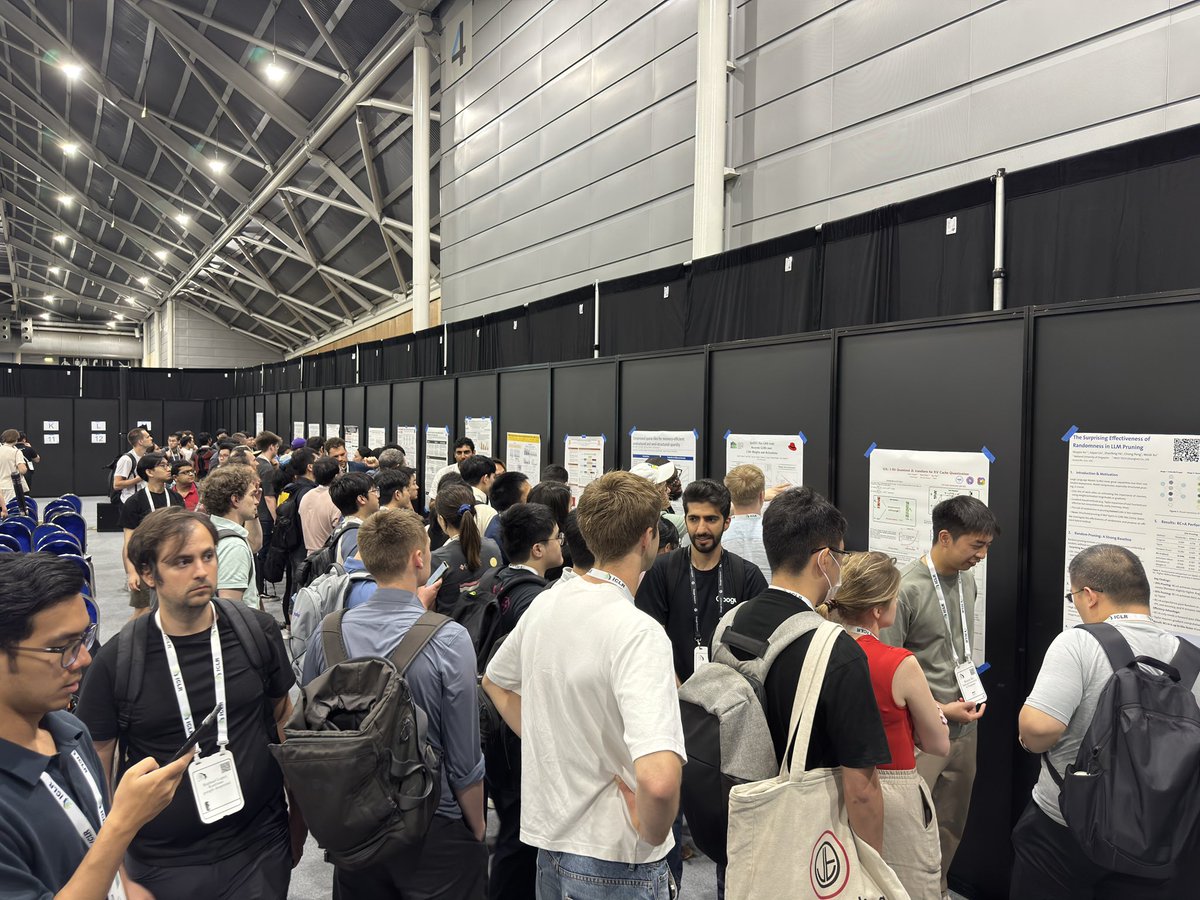

First poster session at the #ICLR2025 @sparseLLMs workshop

a PACKED hall for @tydsh's talk at our sparsity in LLMs workshop, not surprising! we have another oral right after this, and then we'll have the first of 2 poster sessions before lunch! @iclr_conf

@DAlistarh giving his invited talk at the #ICLR2025 @sparseLLMs workshop now!

#ICLR2025 @sparseLLMs Workshop Panel with @PavloMolchanov @ayazdanb @DAlistarh @realDanFu @YangYou1991 and Olivia Hsu, moderated by @PandaAshwinee

If you’re at #ICLR2025, go watch @AggieInCA give an oral presentation at the @SparseLLMs workshop on scaling laws for pretraining MoE LMs! Had a great time co-leading this project with @samira_abnar & @AggieInCA at Apple MLR last summer. When: Sun Apr 27, 9:30a Where: Hall 4-07

🚨 One question that has always intrigued me is the role of different ways to increase a model's capacity: parameters, parallelizable compute, or sequential compute? We explored this through the lens of MoEs:

Sparse LLM workshop will run on Sunday with two poster sessions, a mentoring session, 4 spotlight talks, 4 invited talks and a panel session. We'll host an amazing lineup of researchers: @DAlistarh @vithursant19 @tydsh @ayazdanb @gkdziugaite Olivia Hsu @PavloMolchanov Yang Yu

I will be travelling to Singapore 🇸🇬 this week for the ICLR 2025 Workshop on Sparsity in LLMs (SLLM) that I'm co-organizing! We have an exciting lineup of invited speakers and panelists including @DAlistarh, @gkdziugaite, @PavloMolchanov, @vithursant19, @tydsh and @ayazdanb.

Check out this post that has information about research from Apple that will be presented at ICLR 2025 in 🇸🇬 this week. I will be at ICLR and will be presenting some of our work (led by @samira_abnar) at SLLM @sparseLLMs workshop. Happy to chat about JEPAs as well!

New post: "Apple Machine Learning Research at @iclr_conf 2025" - highlighting a selection of the many Apple #ML research papers to be presented at the conference this week: machinelearning.apple.com/research/iclr-…

Our QuEST paper was selected for Oral Presentation at ICLR @sparseLLMs workshop! QuEST is the first algorithm with Pareto-optimal LLM training for 4bit weights/activations, and can even train accurate 1-bit LLMs. Paper: arxiv.org/abs/2502.05003 Code: github.com/IST-DASLab/QuE…
