Tony Chen

@tonychenxyz

CS PhD @princetonCS. Prev: @togethercompute, Undergrad @columbia.

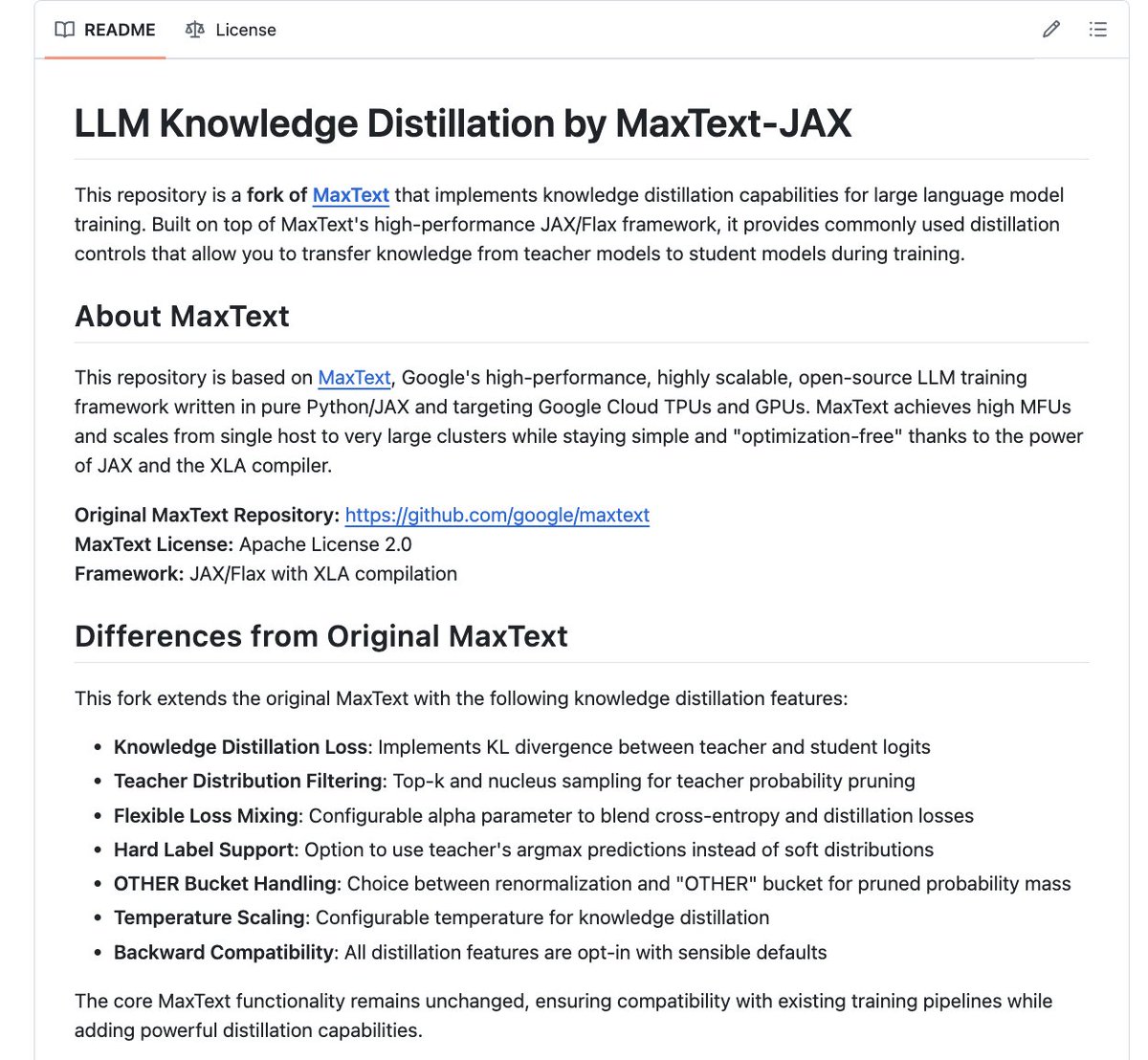

Excited to share our lab’s first open-source release: LLM-Distillation-JAX. It supports practical knowledge distillation configurations (distillation strength, temperature, top-k/top-p) and is built on MaxText, designed for reproducible JAX/Flax training on both TPUs and GPUs.

I always think about the story engine in Westworld S4, where Dolores speaks out her idea of a character and the story plays out in 3D in real time. We are getting close to that...

We raised a $28M seed round from Threshold Ventures, AIX Ventures, and NVentures (Nvidia's venture capital arm), alongside 10+ unicorn founders and top AI researchers, to build reasoning models that generate real-time simulations and games. Models are bottlenecked by practical…

This is amazing! Very excited to see how SelfIE would work with more modern models!

Thread 🧵 Just shipped something I'm excited about: a model-agnostic version of the "selfie" neural network interpretation library!

Announcing DeepSWE 🤖: our fully open-sourced, SOTA software engineering agent trained purely with RL on top of Qwen3-32B. DeepSWE achieves 59% on SWEBench-Verified with test-time scaling (and 42.2% Pass@1), topping the SWEBench leaderboard for open-weight models. Built in…

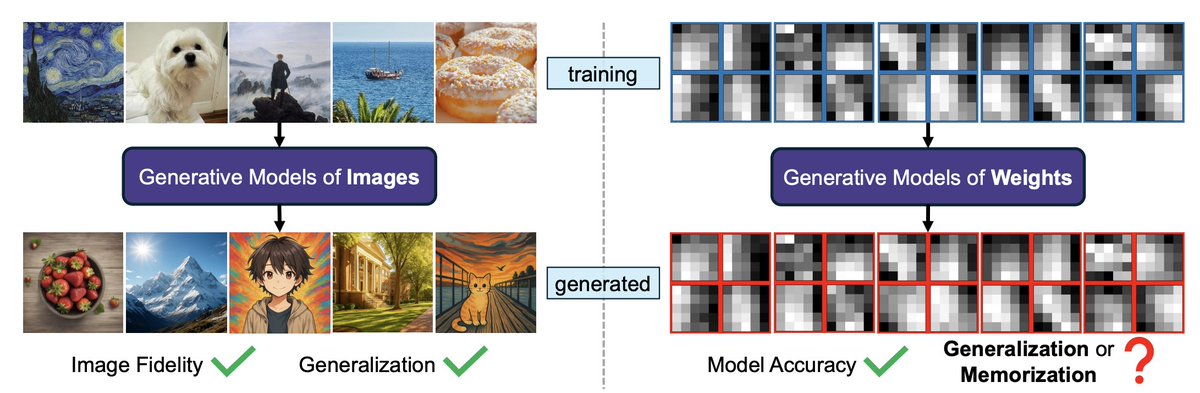

Check out our new paper “Generative Modeling of Weights: Generalization or Memorization?” — we find that current diffusion-based neural network weight generators often memorize training checkpoints rather than learning a truly generalizable weight distribution!

Can diffusion models appear to be learning, when they’re actually just memorizing the training data? We show and investigate this phenomenon in the context of neural network weight generation, in our recent paper “Generative Modeling of Weights: Generalization or Memorization?”

It's exciting to apply diffusion models to new domains! But it requires careful evaluation, esp. regarding memorization. Our paper highlights this need. Shout-out to @zeng_boya for leading this work. paper: arxiv.org/abs/2506.07998 project page: boyazeng.github.io/weight_memoriz… video:…

Can GPT, Claude, and Gemini play video games like Zelda, Civ, and Doom II? 𝗩𝗶𝗱𝗲𝗼𝗚𝗮𝗺𝗲𝗕𝗲𝗻𝗰𝗵 evaluates VLMs on Game Boy & MS-DOS games given only raw screen input, just like how a human would play. The best model (Gemini) completes just 0.48% of the benchmark! 🧵👇

Conference reviewing should take the form of annotations on the paper itself, like Google Docs comments. That way you know reviewers actually read the paper, and you get fewer over-general AI-generated comments. It’s also a smoother experience for writing reviews, with no jumping between the paper and the review.
