Ethan

@torchcompiled

trying to feel the magic. cofounder at @leonardoai_, research at @canva


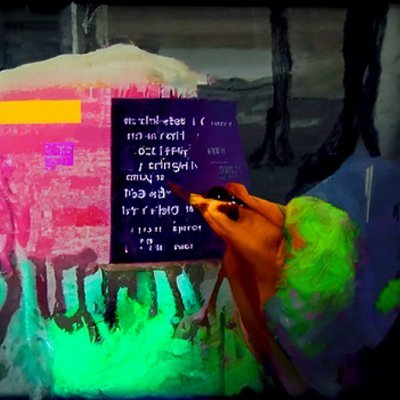

personally I feel like the inflection point was early 2022. It was the sweet spot where CLIP-guided diffusion was just taking off, forcing unconditional models to be conditional through a strange patchwork of CLIP evaluating slices of the canvas one at a time. It was like improv, always…

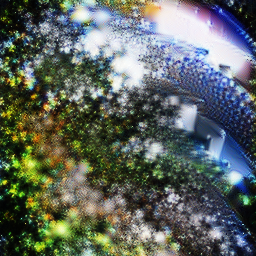

Image synthesis used to look so good. These are from 2021. I feel like this was an inflection point, and the space has metastasized into something abhorrent today (Grok, etc.). Even with no legible representational forms, there was so much possibility in these images.

Trying to get an LLM to solve a math problem, running through tons of matmuls and writing every token, feels like trying to bowl a strike all the way from the moon while drunk. But training kinda places the guardrails so that the randomly walking bowling ball stays on track.

I like JEPA, but this image is such a meme at this point

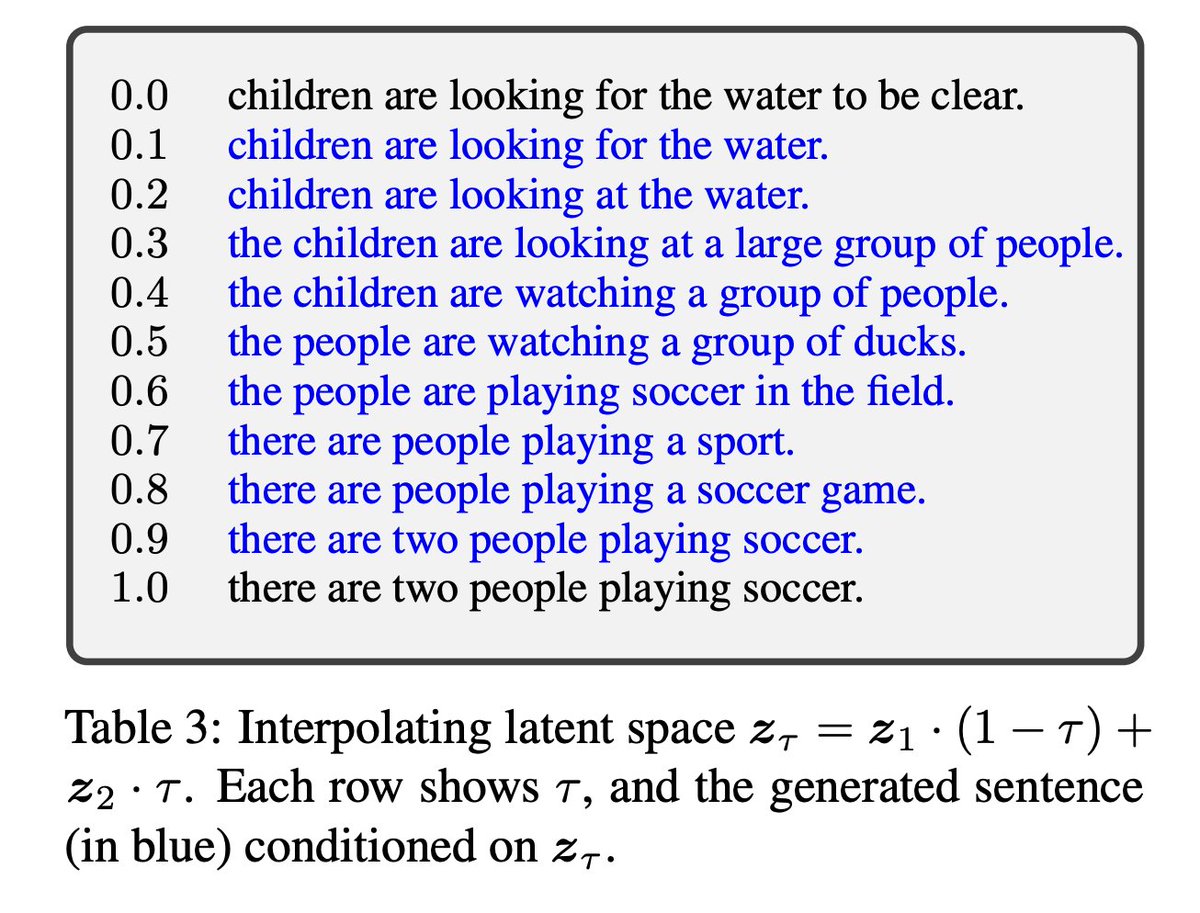

I want a good text VAE that works over long pieces of text. I would love to see a poem or something I wrote in an alternate portrayal. Apparently, this is quite difficult. Autoregressive decoding of latents quickly shifts from depending on the latent variable to depending on past…

claim: the natural state of neural networks is actually incredibly unbiased, following from loss functions that are unbiased estimators of distributions. they may cling to spurious correlations, but arguably this is a different problem of generalization.

one of my favorite outputs from the guided diffusion days: a conductor and his orchestra in the middle of a storm

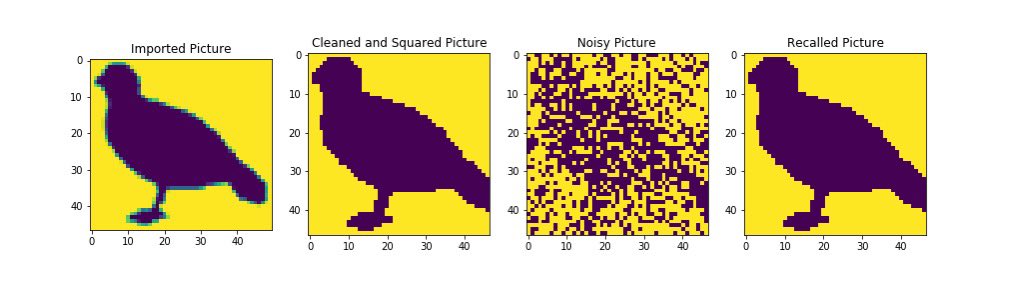

I have little stake here, since saying humans think in an autoregressive or diffusion manner is overly reductive. But a fun fact: human associative memory does share some similarities with Hopfield networks, whose retrieval somewhat resembles a denoising process.

> The way humans think look a lot more like diffusion than autoregressive. i will never, ever understand this claim or the intuitions behind it. ah yes. the human mind is... learning a scoring function to... reverse gaussian noise... (?) ... spatially (???)
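A toy illustration of the Hopfield point, as a minimal sketch: a classical binary Hopfield network with Hebbian weights stores two patterns. Asynchronous updates then pull a corrupted pattern back to the stored one, which is the loose sense in which retrieval resembles denoising. The function names (`train_hebbian`, `recall`), the 16-unit patterns, and the two-bit corruption are hypothetical choices for illustration. This is not a diffusion model and not a claim about how brains work.

```python
from typing import List

Pattern = List[int]  # entries are +1 or -1


def train_hebbian(patterns: List[Pattern]) -> List[List[float]]:
    """Build a Hopfield weight matrix with the Hebbian outer-product rule."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w: List[List[float]], state: Pattern, max_sweeps: int = 10) -> Pattern:
    """Asynchronous updates: set each unit to the sign of its local field,
    stopping once a full sweep changes nothing (a fixed point)."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


# Two orthogonal 16-unit memories (hypothetical toy data).
a = [1] * 8 + [-1] * 8
b = [1, -1] * 8
w = train_hebbian([a, b])

# "Noise" the first memory by flipping two units, then let the network settle.
noisy = list(a)
noisy[0], noisy[1] = -noisy[0], -noisy[1]
restored = recall(w, noisy)
print(restored == a)  # True
```

With only two orthogonal memories, a couple of flipped units are corrected in a single sweep. Storing many correlated patterns would degrade this quickly, which is one reason the analogy stays loose.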

> search megatron hoping to find the training library > get the character > "megatron transformers" > still getting the character > I give up

What happens if we make the tiny reasoning model something like 2B instead of 70M?

