warnikchow

@warnikchow

shallow and heuristic computational linguist

You might like

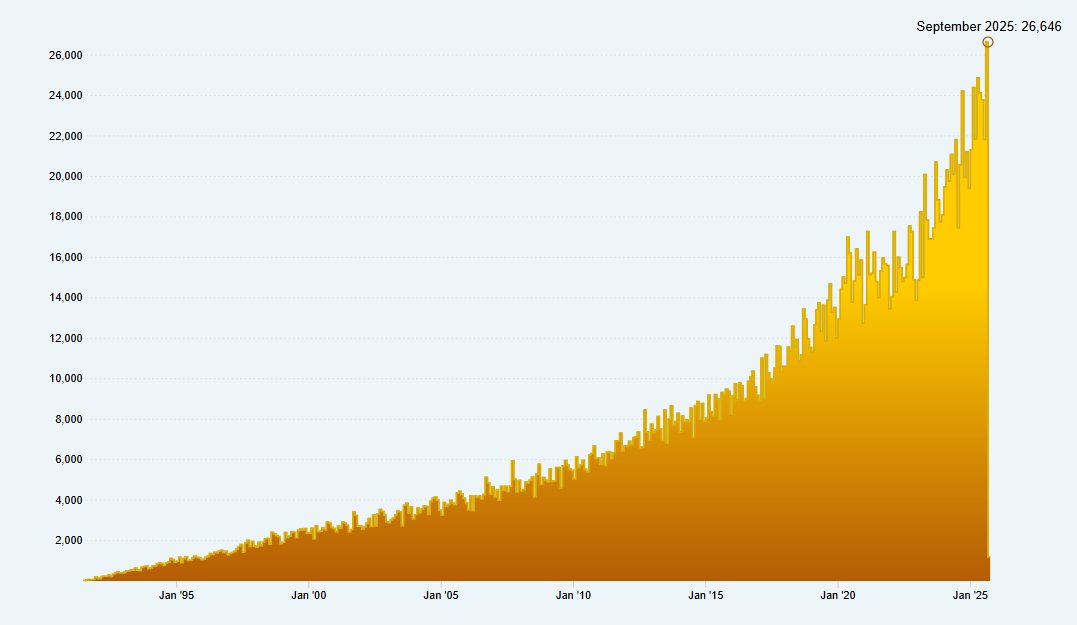

Days in a work week: 5. Days in a month: 30. Total new submissions to arXiv in September: 26,646. arXiv editorial and user support staff: 7. someone who is good at science please help me with this. our team isn't sleeping. #openaccess #preprints

we all quote Firth for "You shall know a word by the company it keeps!" perfectly fine, as he indeed said so in his book. but, how many of us knew which word he had in his mind when he wrote this sentence? 🤣

LLM-as-a-judge has become a norm, but how can we be sure that it will really agree with human annotators? 🤔In our new paper, we introduce a principled approach to provide LLM judges with provable guarantees of human agreement⚡ #LLM #LLM_as_a_judge #reliable_evaluation 🧵[1/n]

Thank you for the great opportunity; I enjoyed giving the talk :)

Thank you for an interesting talk and for sharing your expertise with SKKU students, @warnikchow "Lifeless comp linguists annotate furiously - small data annotation in my listless everyday life"

Attending #EMNLP2023 in person! I am presenting nothing and will be around the convention center the whole time, so contact me :)

Currently at PolyU HK for #PACLIC 2023 and will present a poster on Korean dataset studies in the afternoon :) It's meta-research -- research on research -- for practitioners, and you may find other interesting studies at the conference. Please drop by!

About a week ago -- attended #EAAMO2023 and presented our PaperCard paper with @ejcho95 and @kchonyc. Truly great people and community :) Thanks for all the constructive comments, discussions, and engaging interactions! arXiv version available at: arxiv.org/abs/2310.04824

📢IT'S OFFICIAL! 🇧🇷The ACM Conference on Fairness, Accountability, and Transparency #FAccT2024 will be held Monday, June 3rd through Thursday, June 6th, 2024 in Rio de Janeiro, Brazil! facctconference.org/2024/committees 📅Stay tuned for the CFP

I get asked a lot: why stay in academia, all the excitement in AI is happening in industry with massive compute. And I am seeing some profs leaving academia, but also seeing lots of researchers in industry looking to go back to academia, especially those who don’t work on LLMs.…

Today we're sharing details on AudioCraft, a new family of generative AI models built for generating high-quality, realistic audio & music from text. AudioCraft is a single code base that works for music, sound, compression & generation — all in the same place. More details ⬇️

I have almost 30k citations and millions in research funding. But almost 20 years ago, I just wrote my first research paper. I had no writing support during grad school & I want you to have it better. Here are secret actionable writing tips for 6 sections of your paper. ↓

We present a novel framework for aligning LLMs through 🌟Synthetic Feedback🌟 while NOT relying on any pre-aligned LLMs like InstructGPT or ChatGPT and intensive human demonstrations. Our 7B model outperforms Alpaca-13B, Dolly-12B, etc. 📑Preprint: arxiv.org/abs/2305.13735 [1/n]

⏲️Two international key players in the area of computational linguistics, the ELRA Language Resources Association (ELRA) and the International Committee on Computational Linguistics (ICCL), are joining forces to organize the LREC-COLING 2024🥳 lrec-coling-2024.lrec-conf.org

The meme "I asked ChatGPT and it says…" is interesting to me (as a meme; what follows never is). People want to take credit for their prompts, I think—which isn't wrong, bc the prompt determines the output. So it's weird that people post such outputs as if they were information.

Cool but should have been called ChattingFace

Some people said that closed APIs were winning... but we will never give up the fight for open source AI ⚔️⚔️ Today is a big day as we launch the first open source alternative to ChatGPT: HuggingChat 💬 Powered by Open Assistant's latest model – the best open source chat…

and it was still an invaluable chance to see how readers react to the confession that academic writing is machine-assisted :) and in that sense, the result was quite encouraging. (sincerely! thanks so much to the anonymous reviewers)

Cho, Cho & Cho (2023) w/ @ejcho95 & @kchonyc finally online!😆 A short disclaimer for our work-in-progress: it was submitted to HAL long before the recent decision on #FAccT2023 and was unexpectedly disclosed today after a long moderation process... Next ver. should be much improved :-)

it took us two months to have this preprint archived... can you guess why? a fun project led by Won Ik Cho and Eunjung Cho! [Cho, Cho & Cho, 2023] hal.science/hal-04019842

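I've started an open-source project called Friday-GPT that automates programming by dividing up tasks and assigning them to AI..! (It tracks the whole process in Korean and reports it by voice!) 😊 A working version that runs on Node.js is publicly available! Supports Windows, macOS, and Ubuntu! GitHub: github.com/hmmhmmhm/frida…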

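I tried building something that has GPT hold a meeting by talking with itself, and it's interesting...! To handle the request "Write code that fetches today's popular tweets from Twitter," I made it first think about how to write the code before writing any, and it produced an enormous amount of planning....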

Large language models (LLMs) are fun to use, but understanding the fundamentals of how they work is also incredibly important. One major idea and building block of LLMs is their underlying architecture: the decoder-only transformer model. 🧵[1/6]
