ACM has made an excellent video introduction to reinforcement learning!

2024 @TheOfficialACM A.M. Turing Award recipients @RichardSSutton and Andrew G. Barto discuss their #careers and their work on reinforcement learning in an #original #video at bit.ly/43fpe4q

Project that blew my mind a bit earlier and I still think about often: A Trustworthy, Free (Libre), Linux Capable, Self-Hosting 64bit RISC-V Computer contrib.andrew.cmu.edu/~somlo/BTCP/ This is an attempt to build a *completely* open source computer system, both software AND hardware.…

The ML ecosystem in France is on fire🔥 It has amazing talent and resources. Here are 10 facts you might not know: 1. There are great research labs - from @MistralAI and @kyutai_labs to large ones from @AIatMeta and @GoogleDeepMind. The Llama 2 and CodeLlama authors are based in…

The hottest new programming language is English

You don’t need anything or anyone to make you happy.

I feel like I have to once again pull out this figure. These 32x32 texture patches were state of the art image generation in 2017 (7 years ago). What will it take for Gen-3 and friends to look similarly silly 7 years from now?

Gen-3 Alpha Text to Video is now available to everyone. A new frontier for high-fidelity, fast and controllable video generation. Try it now at runwayml.com

The Transformer architecture has changed surprisingly little from the original paper in 2017 (over 7 years ago!). The diff: - The nonlinearity in the MLP has undergone some refinement. Almost every model uses some form of gated nonlinearity. A silu or gelu nonlinearity is…
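For readers who have not seen a gated nonlinearity, here is a minimal sketch of one common form (a SiLU-gated MLP, often called SwiGLU). It illustrates the general idea and does not reconstruct the truncated post above. The names `gated_mlp`, `W_gate`, `W_up`, and `W_down` are hypothetical, and plain Python lists stand in for tensors.

```python
import math

def silu(x):
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def gated_mlp(x, W_gate, W_up, W_down):
    """Gated MLP sketch: down( silu(gate(x)) * up(x) ).

    Assumption: no biases, and SiLU as the gate activation.
    Swapping silu for a GELU gives the GELU-gated variant.
    """
    gate = [silu(g) for g in matvec(W_gate, x)]   # gating branch
    up = matvec(W_up, x)                          # linear branch
    hidden = [g * u for g, u in zip(gate, up)]    # elementwise gate
    return matvec(W_down, hidden)                 # project back down
```

Compared with the original `down(act(up(x)))` MLP, the only structural change is the extra linear branch that is multiplied elementwise into the activated branch.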

The Sony Walkman came out 45 years ago today. It went on to sell 200m units and ushered in the age of personal consumer electronics (cellphone, iPod, iPhone). An interesting detail is that Akio Morita, Sony’s legendary boss at the time, thought it would be too “anti-social” and…

“More engineers” will usually *not* solve your problems. Because the real problem is often a strategy problem, culture problem, interpersonal problem, trust problem, creativity problem, or market problem. More engineers *will* solve your “I don’t have enough engineers” problem.

If you have an hour to learn this week, watch this talk. It’s the crispiest intro to how LLM fine-tuning works 🙏

.@johnowhitaker's talk, Napkin Math For Fine Tuning, was so popular that we ended up doing an encore! He answers q's like: - When should I use LoRA? Quantization? GC? - What’s the cheapest option? Most accurate? - What hardware? - What batch size / context length, etc.?

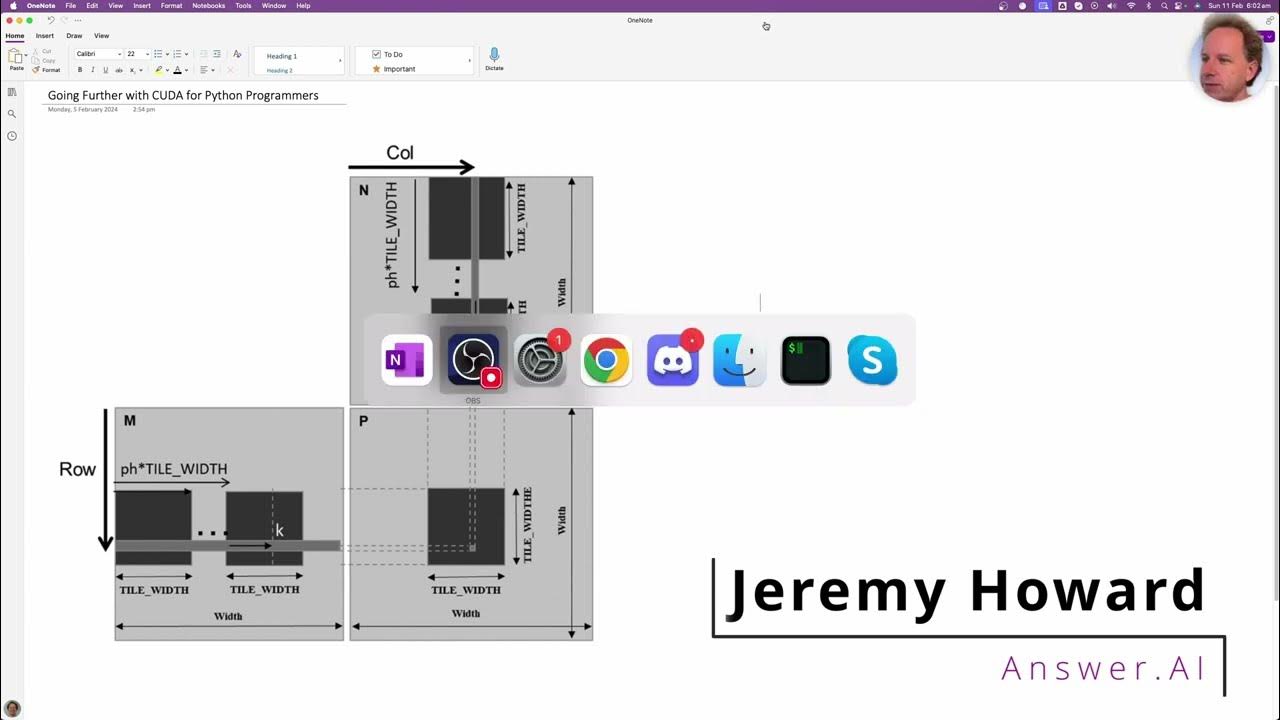

If you watched my "Getting Started with CUDA for Python Programmers", and are ready to go even faster, then this new video is for you! Fast CUDA isn't just about having all the threads go brrrr in parallel, but also about giving them fast memory to play with. youtu.be/eUuGdh3nBGo

Going Further with CUDA for Python Programmers

I used to find writing CUDA code rather terrifying. But then I discovered a couple of tricks that actually make it quite accessible. In this video I introduce CUDA in a way that will be accessible to Python folks, & I even show how to do it all in Colab! youtu.be/nOxKexn3iBo

Getting Started With CUDA for Python Programmers

Learning about education personalization with Duolingo's Birdbrain spectrum-ieee-org.cdn.ampproject.org/c/s/spectrum.i…
