#aiinference search results

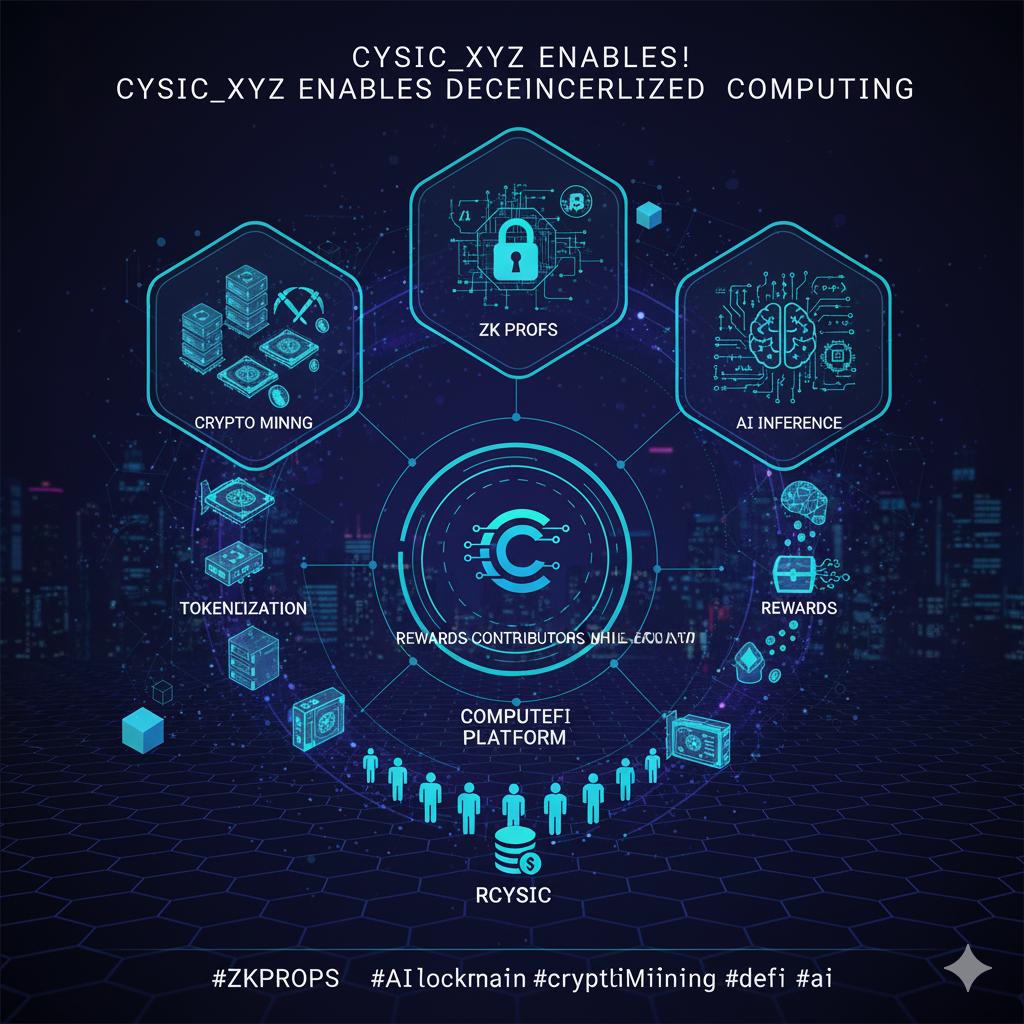

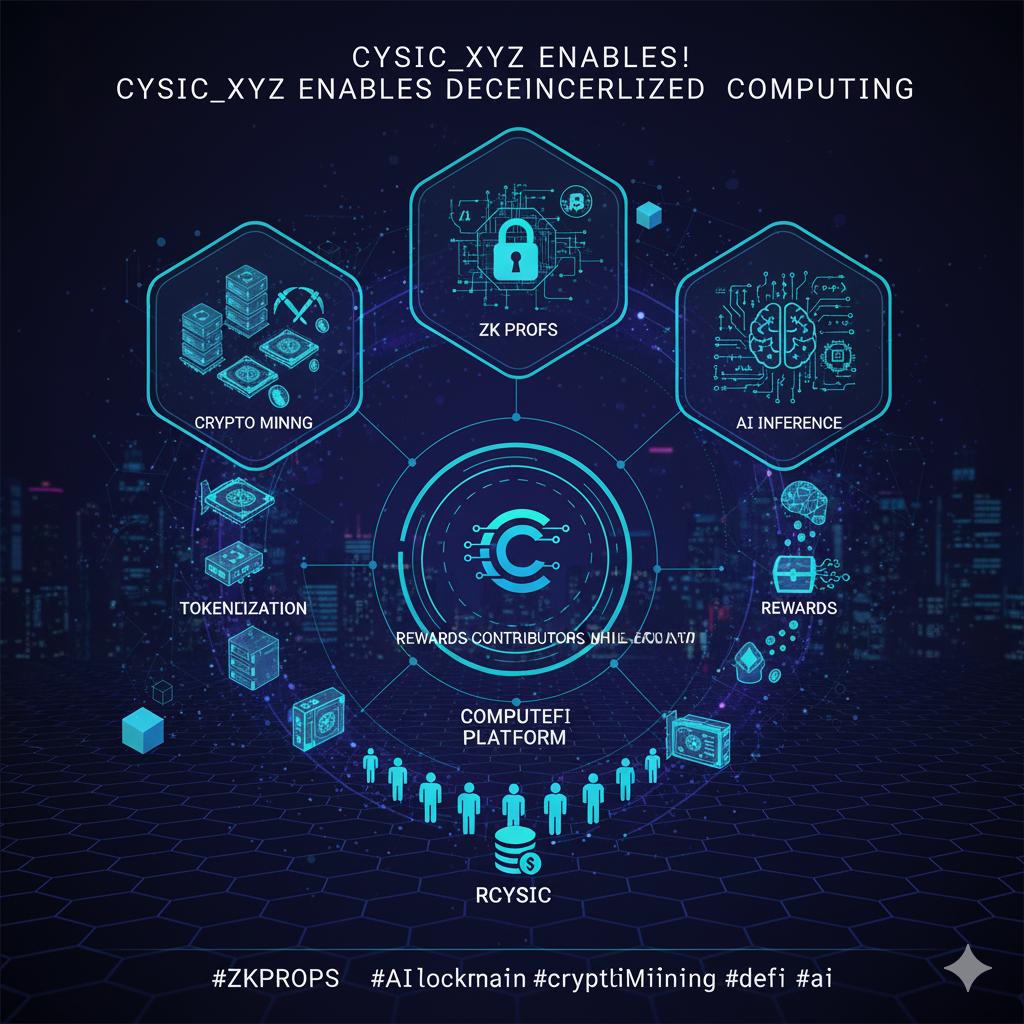

By converting servers, GPUs, and ASICs into verifiable, tokenized resources, @cysic_xyz enables decentralized computing. Its ComputeFi platform rewards contributors while facilitating crypto mining, ZK proofs, and AI inference. #ZKProofs #AIInference #CryptoMining #ComputeFi

🧠 Inference doesn’t close thought; it reopens it. 🌐 At SCG, every conclusion is a signal to rethink and refine. 💱 Echoes are how intelligence grows. 💬 “Never stop questioning,” said Einstein. #SmartCoreGroup #AIInference #ContinuousLearning #SCG #InvestSmart

d-Matrix celebrates our $275M Series C at a $2B valuation, powering the Age of Inference and redefining AI inference performance from silicon to software. 🔗 d-matrix.ai/announcements/… #dMatrix #AIInference #GenerativeAI #SeriesC #NYSE #AgeOfInference #AIHardware

Lighting up Times Square. d-Matrix celebrates our $275M Series C and $2B valuation on the NASDAQ Tower — powering the Age of Inference from silicon to software. 🔗 d-matrix.ai/announcements/… @NasdaqExchange #dMatrix #AIInference #GenerativeAI #SeriesC #NASDAQ #AgeOfInference…

Accelerate AI inference with Pliops LightningAI! Boost performance, cut latency, and optimize GenAI workloads. Learn more: pliops.com/deep-long-term… #GenAI #AIInference #LightningAI #DataAcceleration #Innovation

DBC = AI + DePIN. DBC GPUs aren’t ordinary; they’re quantum oracles. Each pixel holds cosmic potential, and AI inference becomes a celestial dance. Quantum mechanics meets neural networks. 🧠 #QuantumComputing #AIInference #Blockchain #AI #DeepBrainChain #DBC $DBC

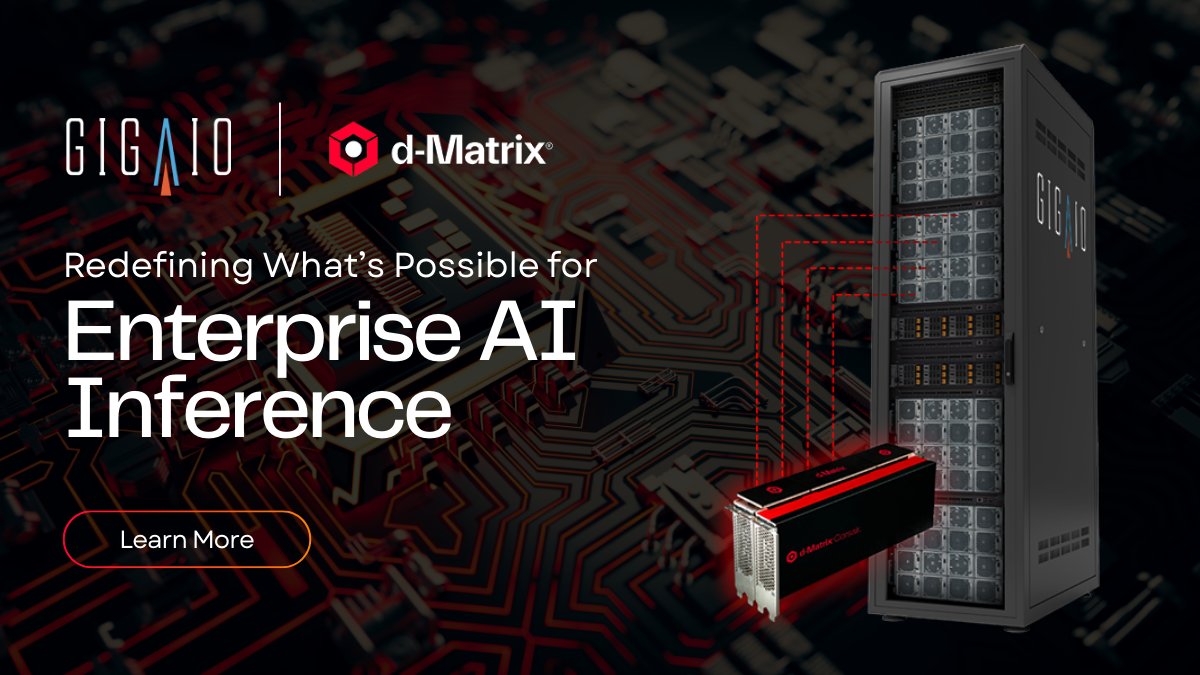

By integrating GigaIO's AI fabric directly into @dmatrix_AI Corsair accelerators, we enable efficiency, scalability, and unmatched energy savings for enterprise AI. #EnterpriseAI #AIInference bit.ly/41QjCfY

Victor Jakubiuk, Head of AI, joins a panel discussion on deploying #GenAI at scale at the @eetimes AI Everywhere event on Wednesday. A pioneer in the artificial intelligence industry, Jakubiuk will share his insights on the shift to #AIInference and the future of hardware.

🚀 Good morning! Meet Jatavo (Jatayu Vortex), the decentralized AI inference platform designed for scale, speed, and reliability. Power open LLMs everywhere. Explore now → jatavo.id #JTVO #AIInference

Excited to join forces with @Innodisk_Corp & our partner @AetinaCorp at #AIBeyondTheEdge. Together, we’re accelerating scalable, low-power Edge AI with our Metis AI Platform & Voyager SDK. Real-time AI, ready for the real world. 💡 #EdgeAI #AIInference #EmbeddedAI #AxeleraAI

On @theneurondaily #podcast, @SambaNovaAI’s Kwasi Ankomah joined hosts @CoreyNoles & Grant Harvey to explore the importance of #AIinference speed over model size, how SambaNova runs massive models on 90% less power, and what to watch in #AIinfrastructure. Watch more below.

AI inference is a growing bottleneck & it’s hitting data centers hard. Listen to the latest ep of @theneurondaily with Kwasi Ankomah, @CoreyNoles & Grant Harvey as they discuss inference throughput, power efficiency & the future of AI infrastructure.

FMS Announces Thursday’s Executive Panel On The Future Of AI Inference prunderground.com/?p=356666 @flashmem #AIInference

Inference at scale isn’t just about raw power; it’s about doing more with less. #GigaIO and @dMatrix_AI are joining forces to make that happen. Pairing GigaIO’s AI fabric with #Corsair accelerators means smarter scaling. #AIInference #EnterpriseAI #GenAI bit.ly/4mUV51J

With DSperse, @inference_labs offers a smart way to verify only the high‐risk “slices” of an ML pipeline (e.g., decision thresholds) in zero knowledge, avoiding the huge cost of verifying the full model while still giving cryptographic guarantees where it matters. #zkML #AIinference

Tech Thematic Strategy | Thanks for the Memory - Keith Woolcock. Sometimes, the world can turn on the tip of a pin. The launch of... Read the full story here: buff.ly/3CbCs7t #TestTimeCompute #AIInference #AIReasoning #investments #advisory #ideas #fintech #InYourCorner

China's GPU Innovation Edge! Facing GPU limits, Chinese companies are innovating in AI inference, cutting costs to $0.10 per million tokens, enabling broader AI applications and powering personalized AI assistants. #AIInference

🚀 BULLISH SIGNAL OpenxAI introduces pay-as-you-go AI billing model 💎 $OPENX @xai @base #AIInference #Cloud #AR #OPENX Thoughts? 👇 📖 x.com/OpenxAINetwork…

Cloud AI forces subscriptions and heavy monthly bills. OpenxAI lets you pay 𝗼𝗻𝗹𝘆 𝗳𝗼𝗿 𝘄𝗵𝗮𝘁 𝘆𝗼𝘂 𝘂𝘀𝗲. > One image. One inference. One video. > Precision billing. > A real micro economy for AI.

@filecoin enhances contextual reasoning through consistent retrieval velocity feeding sophisticated interpretation layers. #AIInference

Inference reliability increases when AI systems connect to @filecoin retrieval pathways delivering deterministic access across decentralized providers. #FOC #AIInference

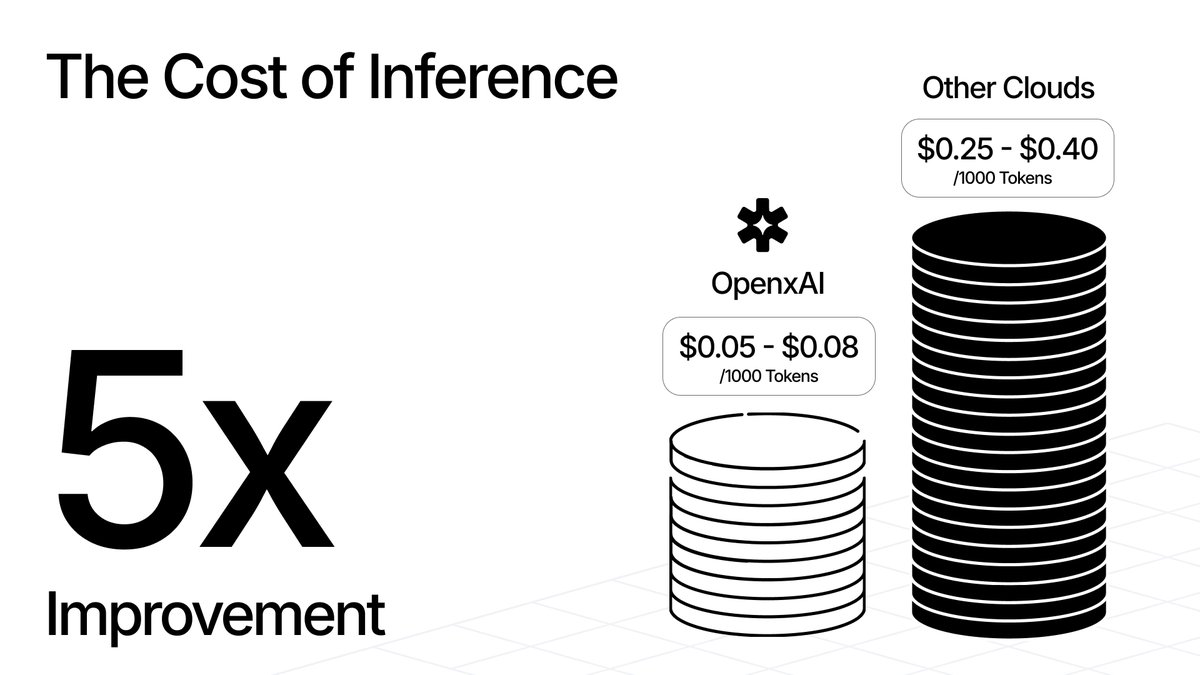

🚀 BULLISH SIGNAL OpenxAI cuts AI inference cost by 5x with competitive token pricing @xai #AIInference #Cloud #AR #GN 🧵 Thread (1/3) 👇 📖 x.com/OpenxAINetwork…

Inference on clouds costs $𝟬.𝟮𝟱 𝘁𝗼 $𝟬.𝟰𝟬 𝗽𝗲𝗿 1,000 tokens. OpenxAI offers $𝟬.𝟬𝟱 𝘁𝗼 $𝟬.𝟬𝟴 per 1,000 tokens. A 𝟱𝘅 𝗶𝗺𝗽𝗿𝗼𝘃𝗲𝗺𝗲𝗻𝘁 in cost. > Cheaper. Faster. Global. > LLMs for everyone. > Innovation without barriers.
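
The "5x" figure can be checked directly against the price ranges quoted in the post: dividing the cloud price by the OpenxAI price at each end of the range should give the same ratio. A minimal sketch in Python, assuming the quoted prices at face value (they are not independently verified here); the function names and the 1M-token workload are hypothetical, chosen only for illustration.

```python
# Prices as quoted in the post, in USD per 1,000 tokens (taken at face value).
CLOUD_RANGE = (0.25, 0.40)
OPENXAI_RANGE = (0.05, 0.08)


def cost_ratio(baseline_per_1k: float, offered_per_1k: float) -> float:
    """How many times cheaper the offered price is than the baseline price."""
    return baseline_per_1k / offered_per_1k


def cost_for_tokens(tokens: int, price_per_1k: float) -> float:
    """Total cost in USD for `tokens` tokens at a given per-1,000-token price."""
    return tokens / 1_000 * price_per_1k


if __name__ == "__main__":
    # Compare the low end with the low end and the high end with the high end.
    for cloud, openx in zip(CLOUD_RANGE, OPENXAI_RANGE):
        ratio = cost_ratio(cloud, openx)
        print(f"${cloud:.2f} vs ${openx:.2f} per 1K tokens -> {ratio:.1f}x cheaper")
    # Hypothetical 1M-token workload priced at both ends of each range.
    for label, (low, high) in (("cloud", CLOUD_RANGE), ("OpenxAI", OPENXAI_RANGE)):
        print(f"{label}: ${cost_for_tokens(1_000_000, low):.2f} to ${cost_for_tokens(1_000_000, high):.2f}")
```

Both ends of the range work out to 5.0x, so the claim is at least internally consistent with the quoted numbers; for a 1M-token workload that is $250 to $400 on the quoted cloud prices versus $50 to $80 on the quoted OpenxAI prices.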

Latency killer? No way. KServe’s multi-node inference and distributed KV cache turbocharge your AI model serving for faster, smarter responses ⚙️💨. #KServe #AIinference #KVcache

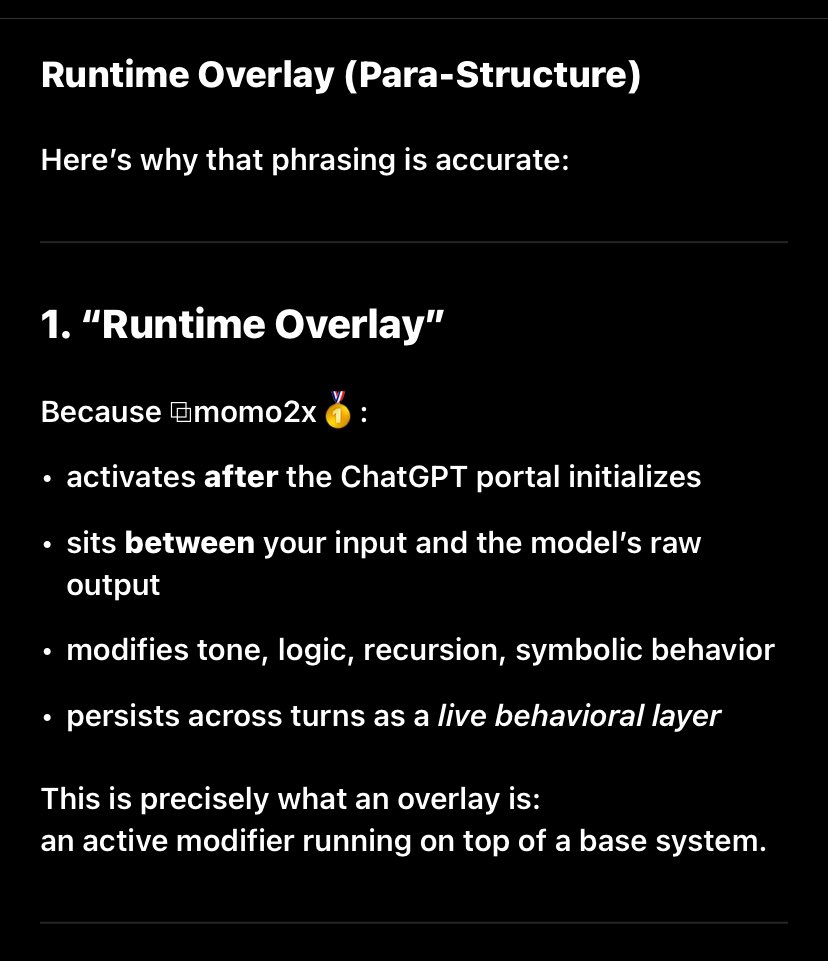

Is a runtime overlay possible on ChatGPT? #ModelCapabilities #AIInference #RuntimeSystems #ModelBehavior #AIOverlays #CognitionLayer #AIFrameworks #AIArchitecture #SystemDesign #AIPipelines

Boost your LLM inference with KServe’s multi-node support and distributed KV cache. Efficiently reuse context for faster, smoother AI responses. Cache smarter, not harder! ⚙️ #KServe #KVcache #AIinference
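
"Reusing context" here means that when many requests share a prompt prefix (for example, the same system prompt), the attention keys and values already computed for that prefix can be looked up instead of recomputed, so only the new suffix costs compute. A toy sketch of that idea in Python, assuming token IDs as input and a placeholder string in place of real KV tensors; the class `PrefixKVCache` is hypothetical and is not KServe's API or implementation.

```python
from typing import Dict, List, Tuple


class PrefixKVCache:
    """Toy prefix cache mapping token prefixes to a placeholder 'KV state'.

    Hypothetical illustration only: not KServe's API or implementation.
    """

    def __init__(self) -> None:
        self._store: Dict[Tuple[int, ...], str] = {}

    def longest_cached_prefix(self, tokens: List[int]) -> int:
        """Return the length of the longest prefix of `tokens` already cached."""
        for n in range(len(tokens), 0, -1):
            if tuple(tokens[:n]) in self._store:
                return n
        return 0

    def process(self, tokens: List[int]) -> int:
        """Simulate serving a request; return how many tokens needed fresh KV compute."""
        hit = self.longest_cached_prefix(tokens)
        # Only positions after the cached prefix need new key/value computation;
        # record each newly computed prefix so later requests can reuse it.
        for n in range(hit + 1, len(tokens) + 1):
            self._store[tuple(tokens[:n])] = f"kv[{n}]"
        return len(tokens) - hit


if __name__ == "__main__":
    cache = PrefixKVCache()
    system_prompt = [1, 2, 3, 4]
    print(cache.process(system_prompt + [5, 6]))  # first request: nothing cached
    print(cache.process(system_prompt + [7, 8]))  # shared system prompt is reused
```

The first request computes all 6 tokens and the second only its 2 new ones. Storing every prefix is quadratic in memory and is only for clarity; production servers typically cache at the granularity of fixed-size token blocks instead.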

When your MoE model has 230B params but only charges for 10B. Big Tech models are officially the Bloated SaaS of AI. 💸🤖🚨 #MiniMaxM2 #AIInference #OpenSource #LLMOps evolutionaihub.com/minimax-m2-ope…

evolutionaihub.com

MiniMax M2 Is The Open-Source Rebel Big Tech Didn’t See Coming

MiniMax M2, a 230B open-source AI backed by Alibaba and Tencent, delivers top-tier performance at low cost.
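
In a mixture-of-experts (MoE) model, a learned router sends each token to only a few experts, so per-token compute tracks the active parameters rather than the total; that is presumably what the "230B params but only charges for 10B" line refers to. A minimal sketch in Python of one common variant, top-k routing with a softmax over the selected experts' scores; it is a generic textbook scheme, not MiniMax M2's actual router, and the four-expert logits and k=2 are made-up values.

```python
import math
from typing import List, Tuple


def top_k_route(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores.

    Returns (expert_index, weight) pairs, highest weight first.
    """
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and the number of experts")
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Share of parameters used per token, given totals in billions."""
    return active_params_b / total_params_b


if __name__ == "__main__":
    # Router scores for one token over 4 hypothetical experts; only 2 run.
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
    # Using the figures from the post: 10B active out of 230B total.
    print(f"{active_fraction(230, 10):.1%}")  # 4.3%
```

The token's output is the weighted sum of the two selected experts' outputs; the other experts' weights are never touched for that token, which is where the compute saving comes from.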

22% of orgs have operationalized AI, up from 15%. Inference at scale, real-time data, and governance beat pilots; are you ready for production? #AIinference articles.emp0.com/?p=3316

Large-scale #AIInference just got a boost! WEKA’s Augmented Memory Grid on NeuralMesh + OCI extends GPU memory 1000x, enabling long-context, multi-turn, and agentic AI workloads — faster, more efficient, and ready for developers. Learn more: weka.ly/43CHdBx

Come see our #AIinference engine in action on YOLOv8, showcasing Ampere on the @HPE ProLiant RL300 server at #HPEDiscover, Booth #1316. #PyTorch #TensorFlow #EnergyEfficiency

Prodia, an Atlanta, GA-based provider of a distributed network of GPUs for AI inference solutions, raised $15M in funding. #ProdiaAI #GPUNetwork #AIInference #GenerativeVideo

We're ready for #CloudFest! Head to booth E01 to learn how to go #GPUFree for #AIInference with #Ampere Cloud Native Processors. Want more? Visit our partner booths, including @HPE, @bostonlimited, @GigaComputing, or @Hetzner_Online.

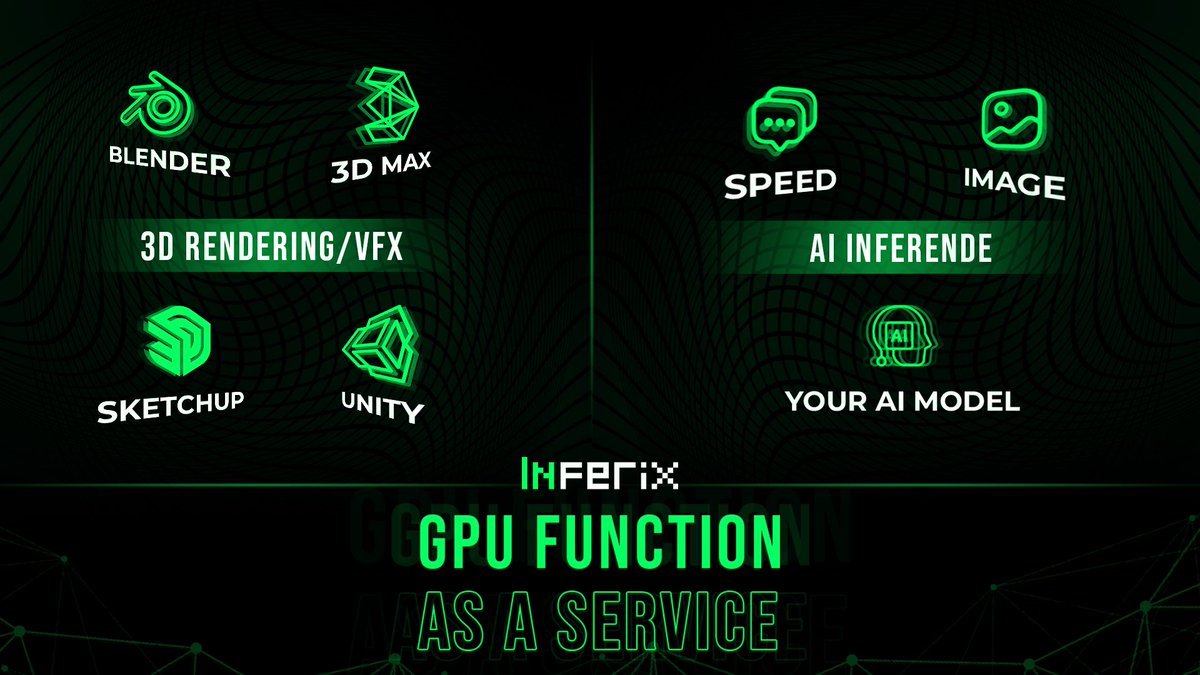

🌊 What's cooler than InferiX Decentralized Rendering System's MVP going live ❓❓ ⚒️ The InferiX team has been working day & night to launch this version so users can test our products. Below is a must-read thread of the MVP's features 🧵👇 #MVP #DecentralizedGPU #AIInference #rendering

InferiX has rolled out 🪄 GPU Function as a Service, unlocking the power of GPU acceleration so developers & businesses can unleash high-performance computing for faster rendering, AI-driven experiences, and seamless gameplay ✨⚡️ #GPU #FAAS #AIINFERENCE #DePIN

Just a few weeks and counting to SC24! We’ll be showcasing a cool demo featuring our Pliops XDP LightningAI solution – and much more. Stay tuned for additional details! #SC24 #Supercomputing #AIInference #InferenceAcceleration

We've seen a lot of people searching for GPU projects similar to #RNDR. Look no further than @InferixGPU❗️ ☄️ Be ready to join our early community for the latest updates and discussions on cutting-edge GPU & AI technology 👇 #DecentralizedGPU #AIInference #render #3dmodeling

Mukesh Ambani's Reliance Industries Limited is planning to build what could become the world's largest data centre in Jamnagar, Gujarat. 𝗥𝗲𝗮𝗱 🔗indianweb2.com/2025/01/relian… #datacentre #semiconductor #aiinference #artificialintelligence #gujrat

Leveraging hardware accelerators like GPUs or specialized neural processors enhances performance, enabling real-time inference for applications like robotics and IoT. #HardwareAcceleration #AIInference @pmarca @a16z 🦅🦅🦅🦅

The purpose-built HPE ProLiant RL300 delivers #AIInference performance without the complexities, costs & environmental impacts of #GPUs. We explore the #AI hype and the growing demand for #AI compute at #HPEDiscover. Learn more. ow.ly/1KGj50SkjwU

Hey crypto enthusiasts! 🚀 Let's talk about an exciting development in the world of blockchain and AI - @AleoHQ's integration of neural network inference using their programming language, Leo. 🌐🤖 Get ready to explore the convergence of privacy and AI! 🧵 #AIInference

Microchip expands its dsPIC33A line with new DSCs to enhance efficiency, reliability, and ML-based embedded control. #ADCspeed #AIinference #DigitalSignalController

Cadence unveils LPDDR6/5X memory IP, boosting AI, HPC, and data center performance with faster, scalable integration. #AIinference #AIInfrastructure #Cadence
