#activationfunctions search results

1/6: Quick tip: When implementing ReLU in PyTorch/TF, always initialize weights properly (e.g., He initialization) – it prevents too many neurons from dying early! #ActivationFunctions
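The tip above can be illustrated with a minimal, framework-free sketch of He (Kaiming) normal initialization, which draws weights from a zero-mean Gaussian with variance 2 / fan_in. The function name `he_normal` and the layer sizes are hypothetical choices for illustration; in PyTorch the equivalent built-in is `torch.nn.init.kaiming_normal_`.

```python
import math
import random

def he_normal(fan_in, fan_out, rng):
    """Return a fan_out x fan_in weight matrix sampled from N(0, 2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

# Hypothetical layer sizes, chosen only for the demonstration.
rng = random.Random(0)
weights = he_normal(fan_in=256, fan_out=128, rng=rng)

# Check that the empirical variance is close to the 2 / fan_in target.
flat = [w for row in weights for w in row]
mean = sum(flat) / len(flat)
variance = sum((w - mean) ** 2 for w in flat) / len(flat)
print(f"target variance: {2.0 / 256:.5f}, sample variance: {variance:.5f}")
```

Scaling the variance by 2 / fan_in is intended to keep the spread of pre-activations roughly constant from layer to layer when ReLU zeroes about half of them, which is why it is usually paired with ReLU.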

Neural Networks: Explained for Everyone. The building blocks of artificial intelligence, powering modern machine learning applications ... justoborn.com/neural-network… #activationfunctions #aiapplications #AIEthics

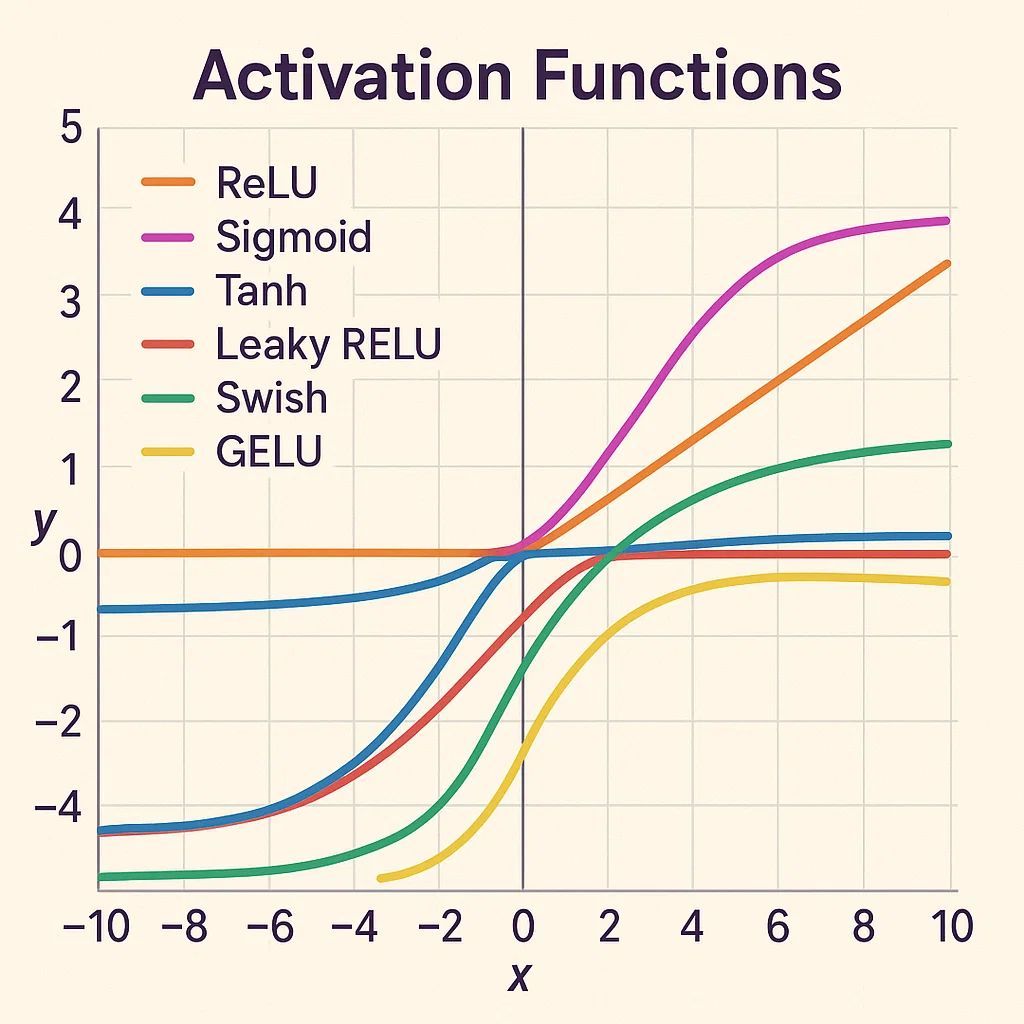

Activation functions in deep networks aren't just mathematical curiosities—they're the decision makers that determine how information flows. ReLU, sigmoid, and tanh each shape learning differently, influencing AI behavior and performance. #DeepNeuralNetworks #ActivationFunctions…

Neuron Aggregation & Activation Functions – In ANNs, aggregation combines weighted inputs, while activation functions introduce non-linearity, letting networks learn complex patterns instead of staying linear. #DeepLearning #MachineLearning #ActivationFunctions #AI #NeuralNetworks
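The two steps described above, aggregation followed by activation, can be sketched for a single neuron as follows. The helper name `neuron` and the example inputs, weights, and bias are made up for illustration.

```python
import math

def neuron(inputs, weights, bias, activation):
    """Aggregate weighted inputs plus a bias, then apply the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # aggregation
    return activation(z)                                    # non-linearity

# Illustrative values only; the aggregated pre-activation is about -0.2.
inputs = [1.0, -2.0, 0.5]
weights = [0.4, 0.3, -0.2]
bias = 0.1

relu_out = neuron(inputs, weights, bias, lambda z: max(0.0, z))
tanh_out = neuron(inputs, weights, bias, math.tanh)
print(relu_out, tanh_out)
```

The same aggregated value produces different outputs depending on the activation: ReLU clips the negative sum to zero, while tanh keeps a small negative signal.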

🧠 Let’s activate some neural magic! ReLU, Sigmoid, Tanh & Softmax each shape how your network learns & predicts. From binary to multi-class—choose wisely to supercharge your model! ⚡ 🔗buff.ly/Cx76v5Y & buff.ly/5PzZctS #AI365 #ActivationFunctions #MLBasics

Day 28 🧠 Activation Functions, from scratch → ReLU: max(0, x) — simple & fast → Sigmoid: squashes to (0,1), good for probs → Tanh: like sigmoid but centered at 0 #MLfromScratch #DeepLearning #ActivationFunctions #100DaysOfML
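A from-scratch version of the three functions listed above might look like the sketch below. It uses only the standard library; the overflow-safe branching in `sigmoid` and the definition of `tanh` in terms of `sigmoid` are choices made here, not something the post specifies.

```python
import math

def relu(x):
    """ReLU: max(0, x)."""
    return max(0.0, x)

def sigmoid(x):
    """Sigmoid: squashes any real x into (0, 1), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # x < 0, so exp(x) cannot overflow
    return e / (1.0 + e)

def tanh(x):
    """Tanh as a rescaled sigmoid: range (-1, 1), centered at 0."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.3f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}")
```

The identity tanh(x) = 2·sigmoid(2x) − 1 makes the "like sigmoid but centered at 0" remark concrete: tanh is the same S-shaped curve, stretched to (−1, 1) and shifted so that it passes through the origin.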

🚀 Explore the Activation Function Atlas — your visual & mathematical map through the nonlinear heart of deep learning. From ReLU to GELU, discover how activations shape AI intelligence. 🧠📈 🔗 programming-ocean.com/knowledge-hub/… #AI #DeepLearning #ActivationFunctions #MachineLearning

programming-ocean.com

Activation Function Atlas — From ReLU to GELU

A curated atlas of neural activation functions. Mathematical intuition meets visual clarity.

🚀 Activation Functions: The Secret Sauce of Neural Networks! They add non-linearity, helping models grasp complex patterns! 🧠 🔹 ReLU🔹Sigmoid🔹Tanh Power up your AI models with the right activation functions! Follow #AI365 👉 shorturl.at/1Ek3f #ActivationFunctions 💡🔥

Builder Perspective - #AttentionMechanisms: Multi-head attention patterns - Layer Configuration: Depth vs. width tradeoffs - Normalization Strategies: Pre-norm vs. post-norm - #ActivationFunctions: Selection and placement

Neural Network Architectures and Activation Functions: A Gaussian Process Approach - freecomputerbooks.com/Neural-Network… Look for "Read and Download Links" section to download. #NeuralNetworks #ActivationFunctions #GaussianProcess #DeepLearning #MachineLearning #GenAI #GenerativeAI

4️⃣ Sigmoid’s Secret 🤫 Why do we use sigmoid activation? It adds non-linearity, letting the network learn complex patterns! 📈 sigmoid = @(z) 1 ./ (1 + exp(-z)); Without it, the network is just fancy linear regression! 😱 #ActivationFunctions #DeepLearning
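The claim that a network without an activation reduces to a linear model can be checked with a small sketch: two stacked linear layers give the same output as a single layer whose weight matrix is their product, and inserting a sigmoid between them breaks that equivalence. The matrices `w1`, `w2` and the input `x` are arbitrary illustrative values, and biases are omitted for brevity.

```python
import math

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a by matrix b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Arbitrary example weights and input (no biases).
w1 = [[1.0, 2.0], [0.0, -1.0]]
w2 = [[3.0, 1.0]]
x = [2.0, 1.0]

two_layers = matvec(w2, matvec(w1, x))        # layer 2 applied to layer 1
one_layer = matvec(matmul(w2, w1), x)         # single merged linear layer
with_sigmoid = matvec(w2, [sigmoid(z) for z in matvec(w1, x)])

print(two_layers, one_layer, with_sigmoid)
```

Here the two purely linear versions agree exactly, while the version with a sigmoid in between gives a different value that no single weight matrix applied to `x` would reproduce for all inputs.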

I just published a blog about Asymmetric Tanh Pi 4 for Deep Neural Nets link.medium.com/rINE3VxixQb and corresponding github.com/Mastermindless… #DeepLearning #ArtificialIntelligence #ActivationFunctions #MachineLearning #NeuralNetworks #ResNet #CustomTanh #AIResearch #GradientFlow
