#deeplearningtheory search results

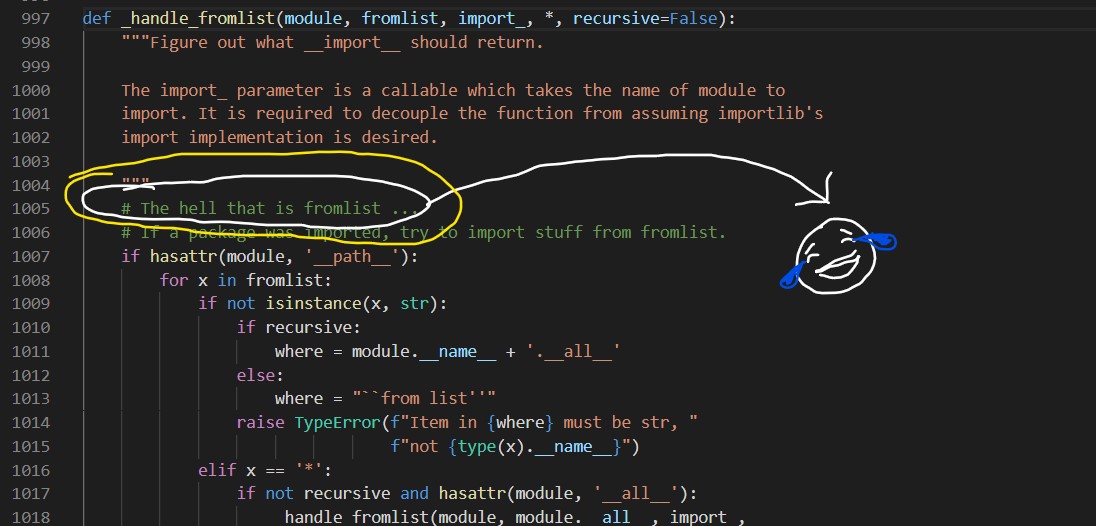

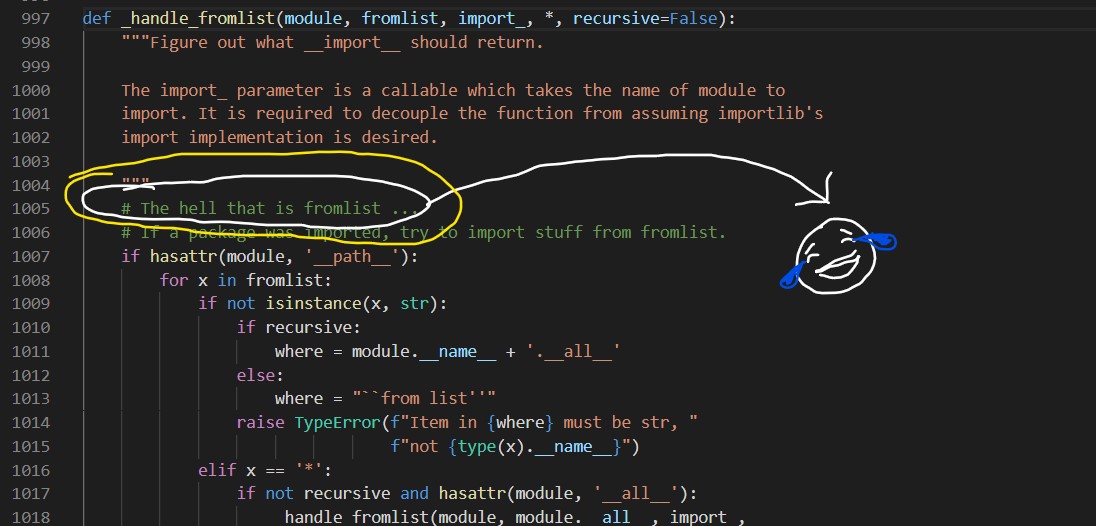

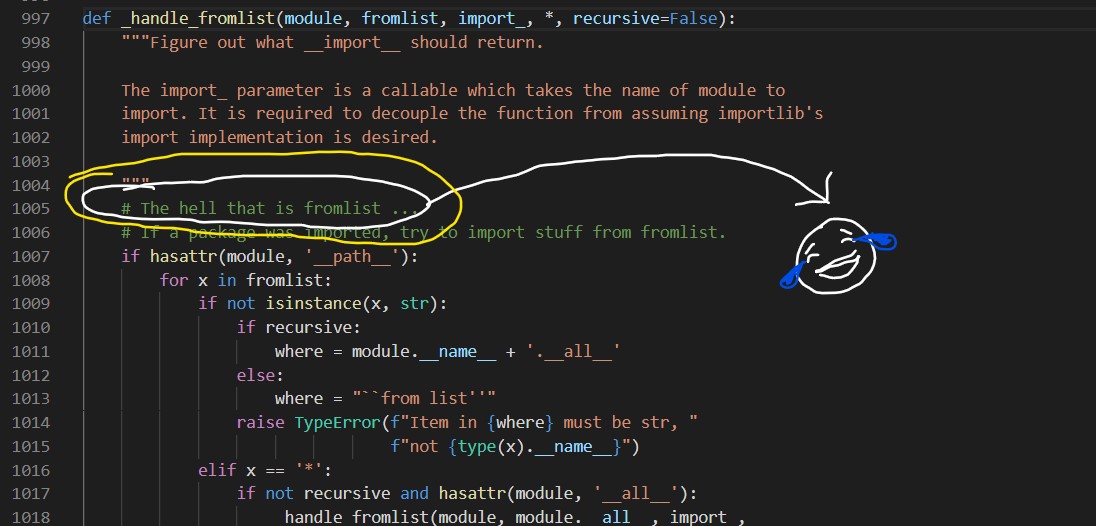

Ramblings of a python core developer :D #deeplearning #DeepLearningTheory #DataScience #python #Opensource #aiedx #mlone #Python3 #MachineLearning

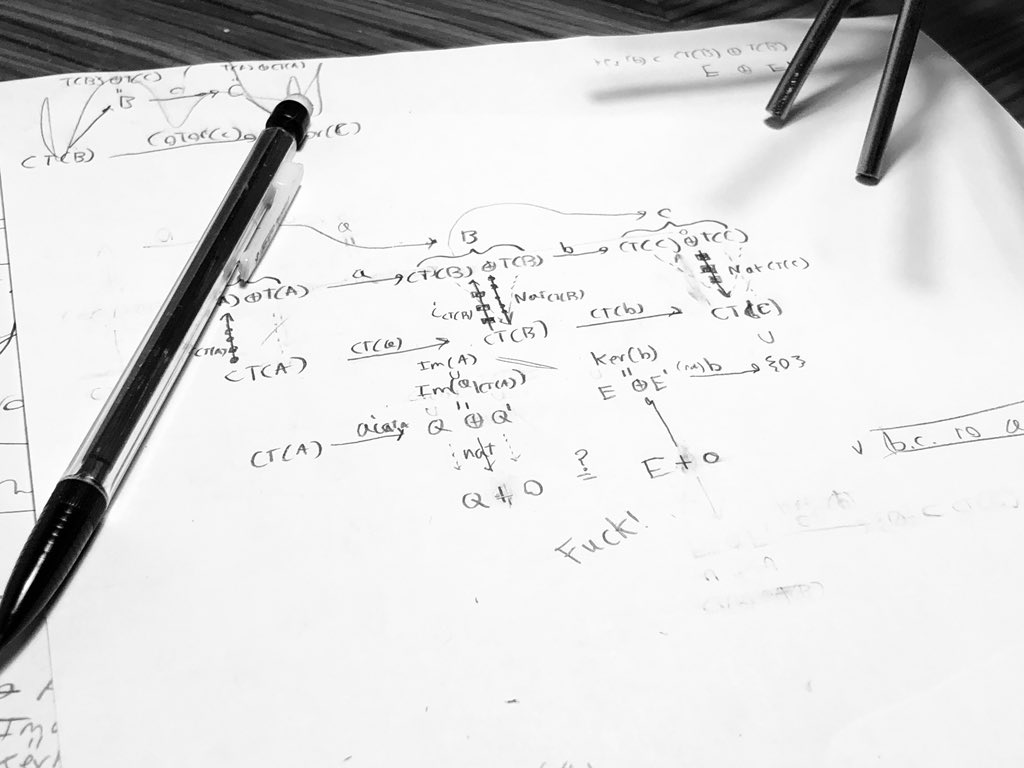

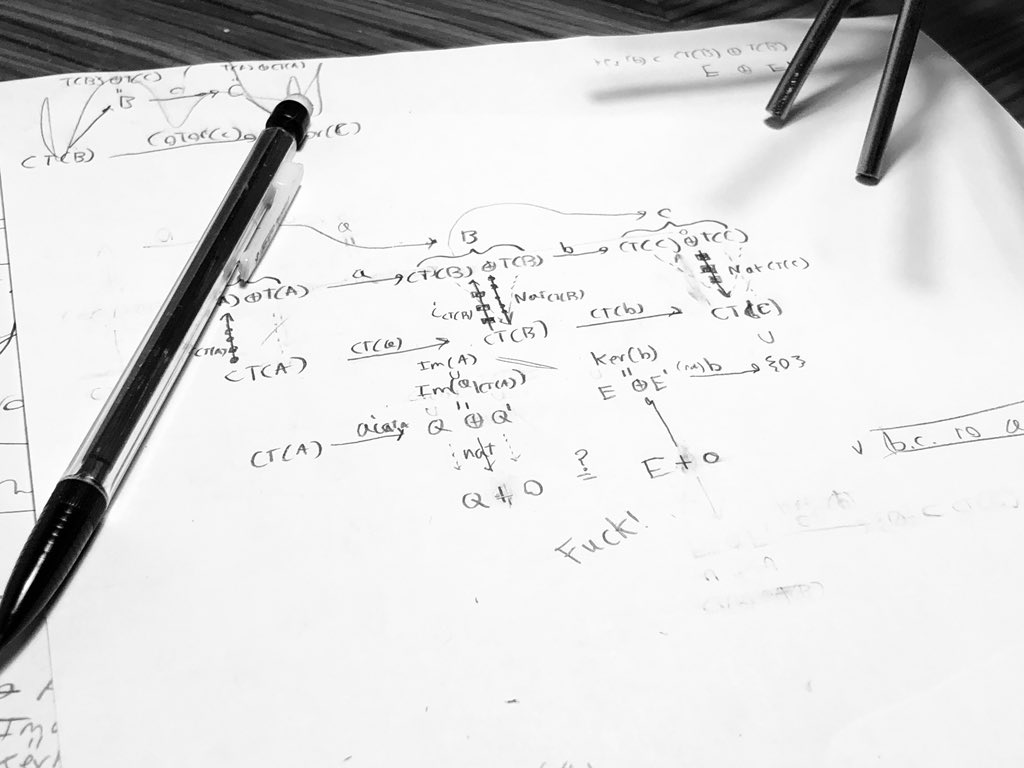

For some reason my most productive days end like this. 📝🗑 I wish adding more layers worked for #DeepLearningTheory too.

I just released my @MSFTResearch talk on "Neural Homology Theory," work with @rsalakhu! #DeepLearningTheory #AlgebraicTopology Full Talk: youtube.com/watch?v=QDQ9J5… Paper: wguss.ml/dev/nht/empiri…

We just released our paper "On Characterizing the Capacity of Neural Networks using Algebraic Topology" on #arxiv. Joint work with @rsalakhu! #DeepLearningTheory #AlgebraicTopology Website: wguss.ml/dev/nht/empiri… Paper: arxiv.org/abs/1802.04443

I just gave a talk at #Berkeley on some new joint work with @rsalakhu called “Neural Homology Theory.” Expect some exciting developments over the next couple of months! #DeepLearningTheory #algtop

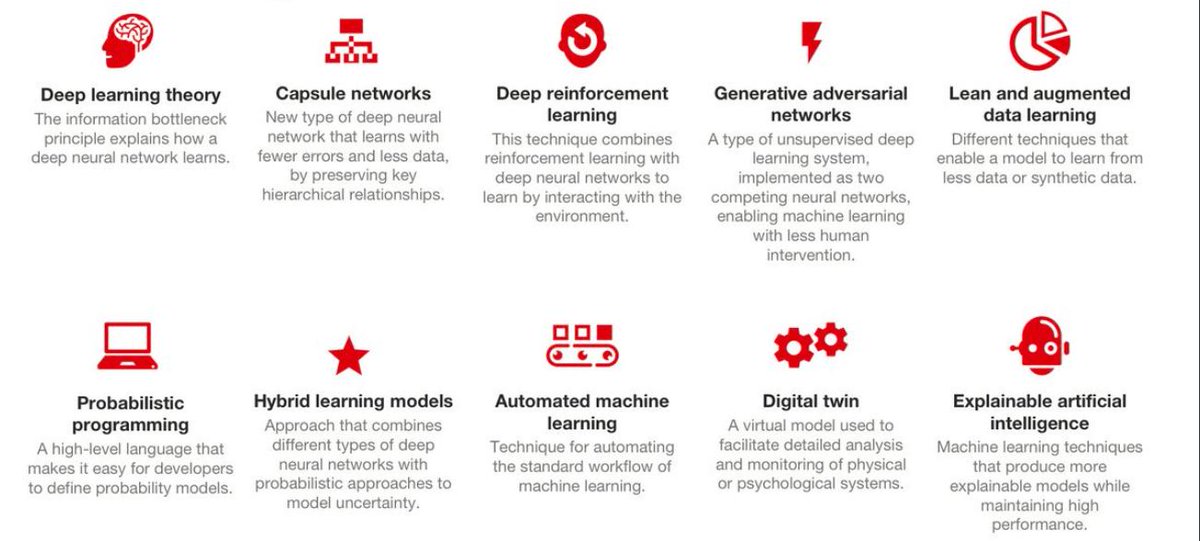

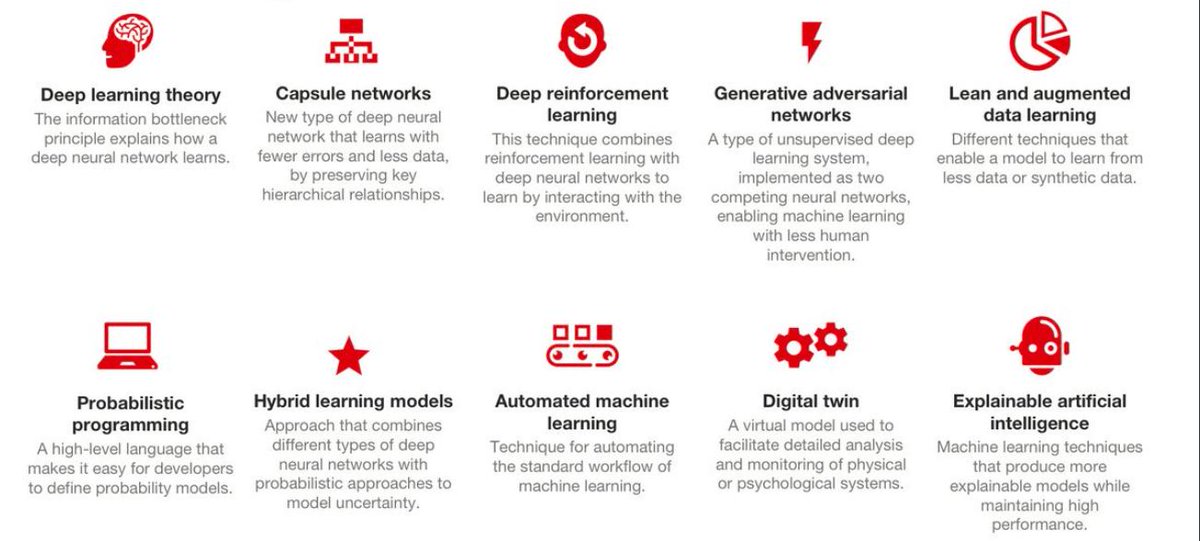

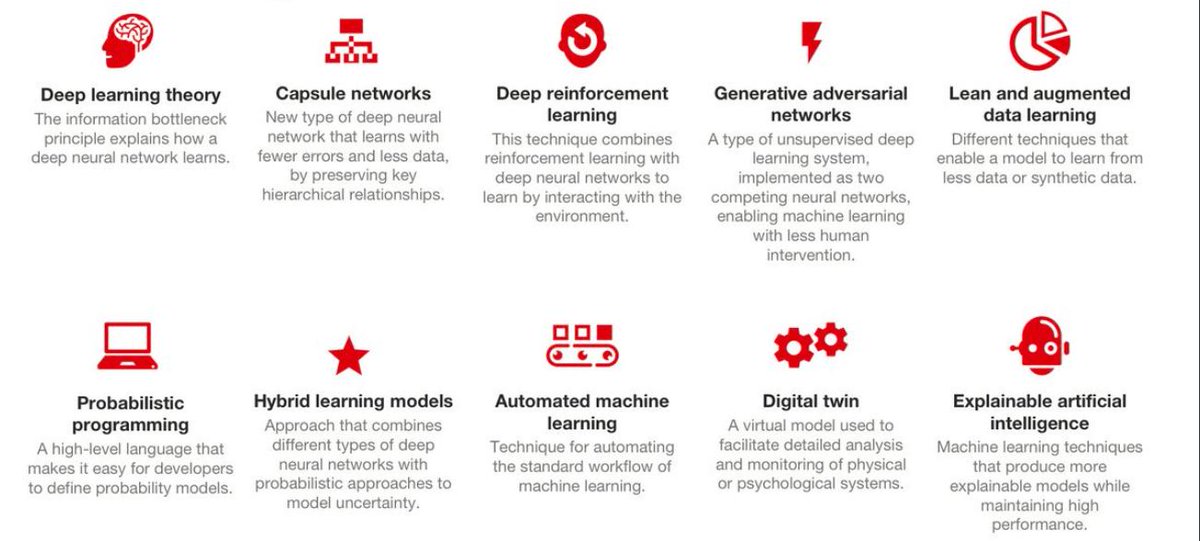

10 AI Technology trends #artificialintelligence #deeplearningtheory #capsulenetworks #deepreinforcementlearning #generativeadversarialnetworks #leanlearning #probabilistic #hybrid #automated #digitaltwin #explainableAI

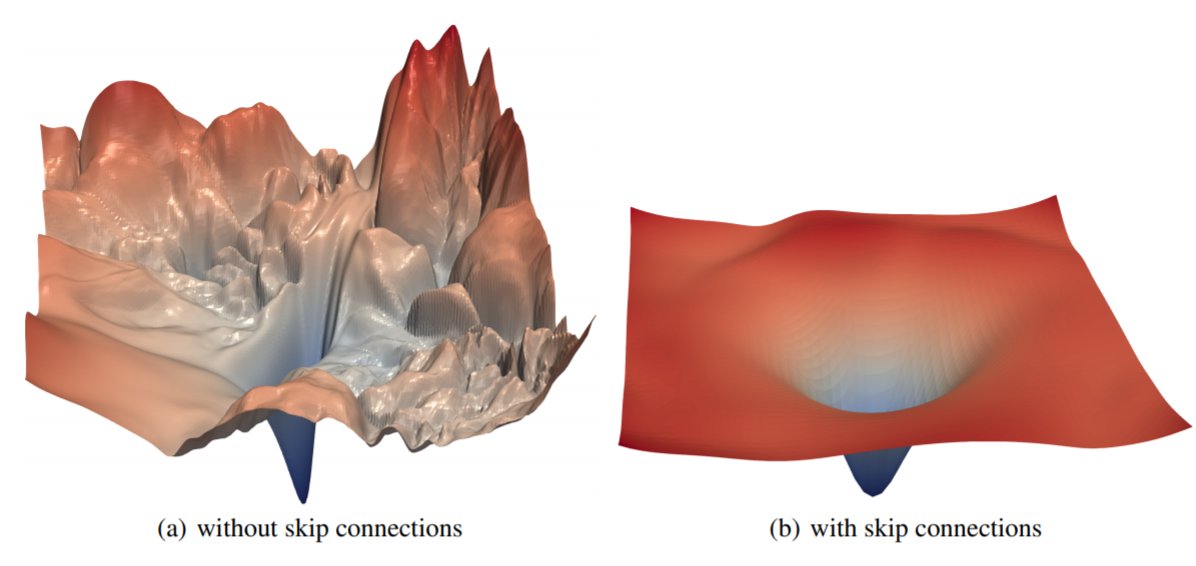

RT Deep Learning Optimization Theory — Introduction dlvr.it/SBhHbN #deeplearning #deeplearningtheory #optimization

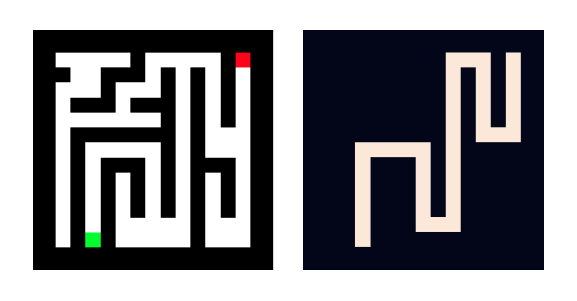

RT Making neural networks solve tougher problems by “thinking” for longer! dlvr.it/S5JnTQ #deeplearningtheory #machinelearning #recurrentneuralnetwork

Give it a try at github.com/msorbi/gnn-ma #GraphNNs #DeepLearningTheory

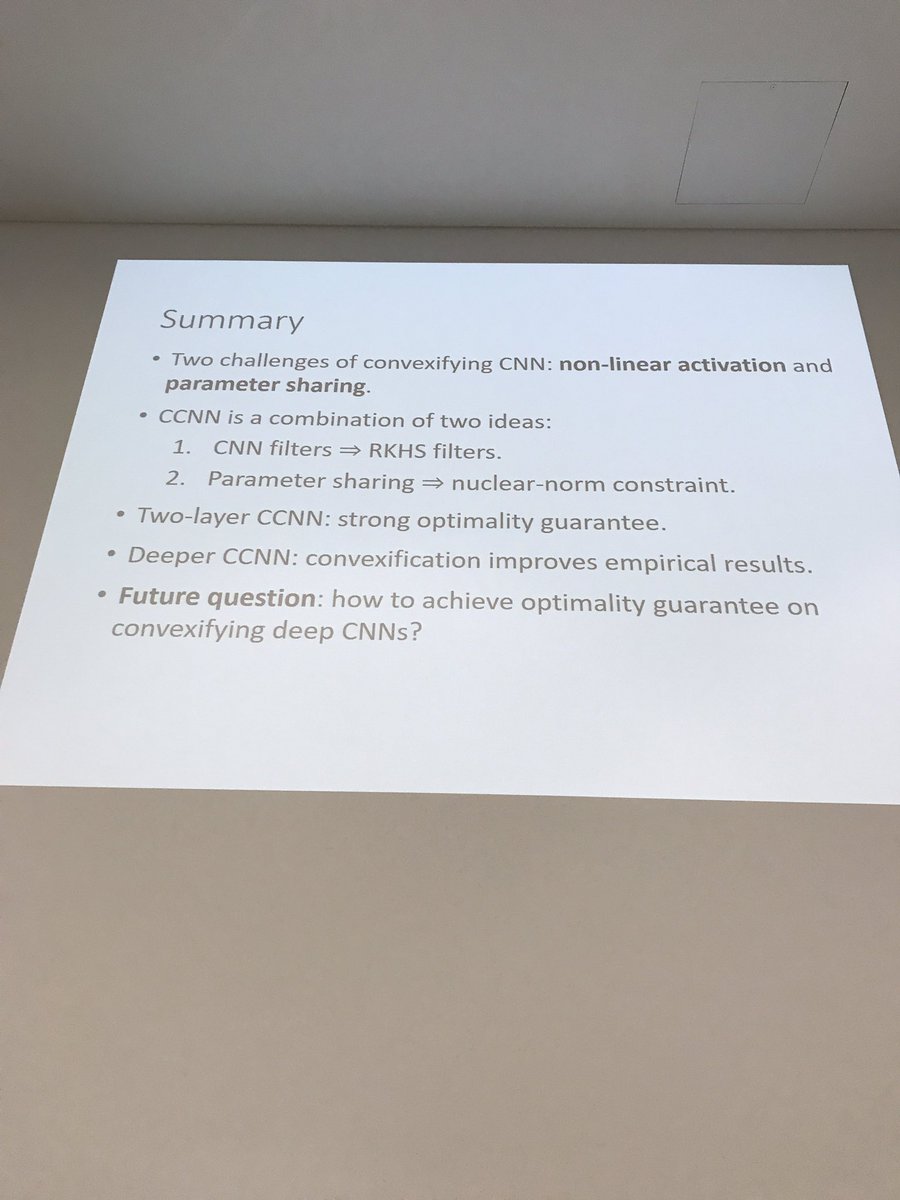

We thank all faculty members and students who participated in the 1st Deep Learning Theory Retreat of TAD Center! We had 2 great days of interesting talks and fruitful discussions. More details: bit.ly/3SIWSZb #deeplearningtheory

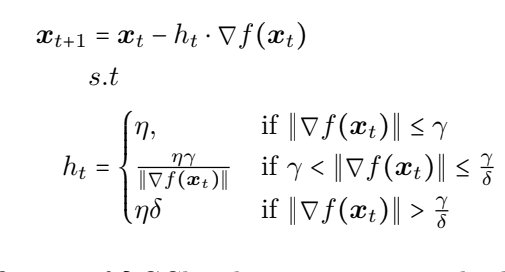

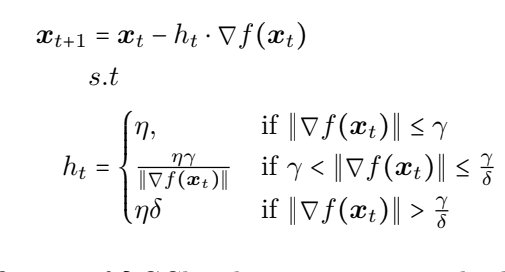

:) delta-GClip @ #TMLR #deeplearningtheory

Our insight is to introduce an intermediate form of gradient clipping that can leverage the PL* inequality of wide nets, something not known for standard clipping. Given that our algorithm works for transformers, maybe that points to some yet unknown algebraic property of them. #TMLR

Our "delta-GClip" is the *only* known adaptive gradient algorithm that provably trains deep nets AND is practically competitive. That's the message of our recently accepted #TMLR paper, my 4th in the TMLR journal 🙂 openreview.net/pdf?id=ABT1XQL… #optimization #deeplearningtheory

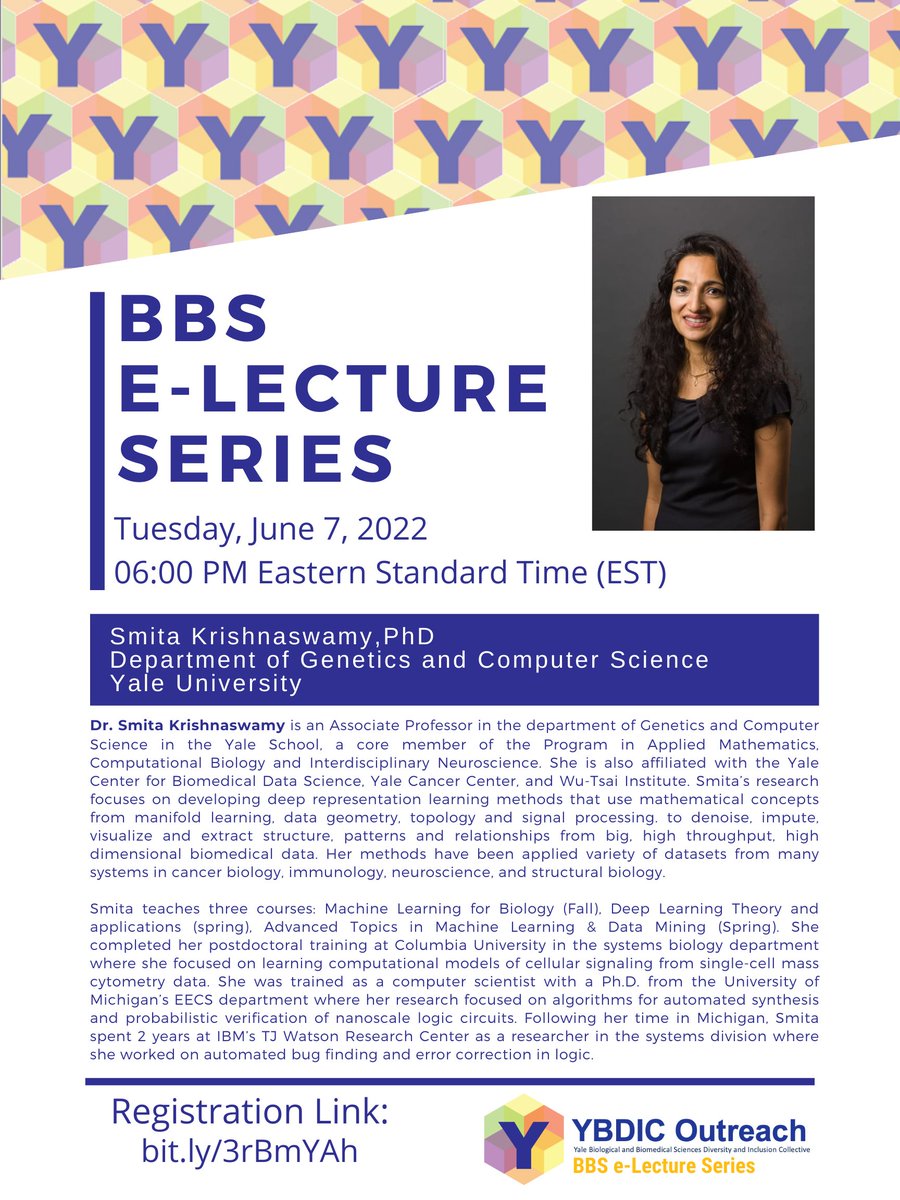

Interested in applying #MachineLearning, #DeepLearningTheory, and #DataMining to #biology and #neuroscience? Join us on Tuesday!

Join us for our last BBS e-Lecture Series talk on Tuesday, 06/07 at 6 PM EST featuring @KrishnaswamyLab! Learn about applications of #MachineLearning, #DeepLearningTheory, and #DataMining to biology! Register: bit.ly/3rBmYAh

Unexpected places where you can find research on #DeepLearningTheory: The very interesting paper below (by @maxhfarrell, @LiangTengyuan, and @sanjog_misra) was published in @ecmaEditors earlier this year. It probably went unnoticed by many of us in CS. onlinelibrary.wiley.com/doi/epdf/10.39…

You might take comfort in the fact that there’s a few papers out there working out PAC-Bayes generalisation bounds for some deep net architectures 😉 @roydanroy @bneyshabur @MLpager @felix_biggs @KDziugaite and others #DeepLearningTheory

Awesome new work from @HSompolinsky & friends on manifold de-tangling and deep neural network theory! We're psyched to have him as a speaker at the Deep Math conference this October! (deepmath-conference.com) #manifolds4life #deeplearningtheory #deepmath2019
